Tutorials

Red Hat OpenShift Service on AWS tutorials

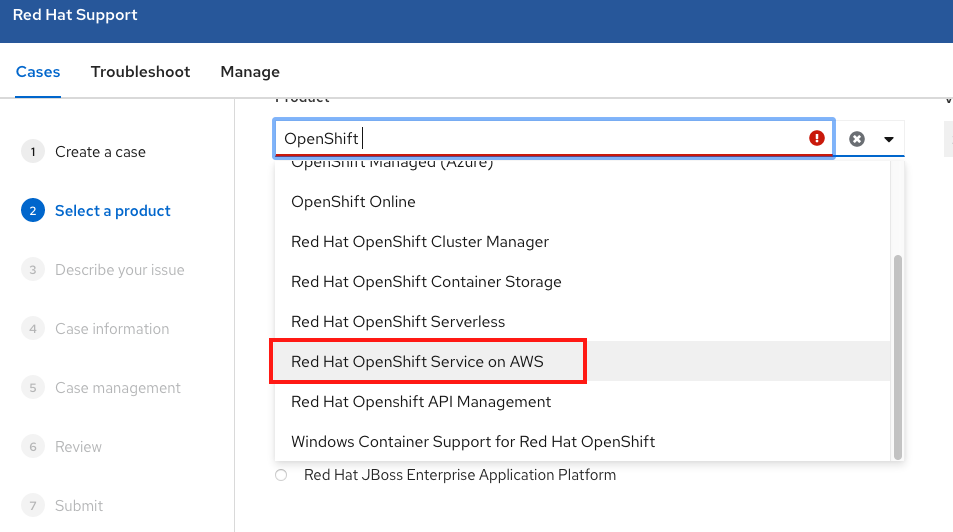


Chapter 1. Tutorials overview

Step-by-step tutorials from Red Hat experts to help you get the most out of your Managed OpenShift cluster.

In an effort to make this Cloud Expert tutorial content available quickly, it may not yet be tested on every supported configuration.

Chapter 2. Tutorial: ROSA with HCP activation and account linking

This tutorial describes the process for activating Red Hat OpenShift Service on AWS (ROSA) with hosted control planes (HCP) and linking to an AWS account, before deploying the first cluster.

If you have received a private offer for the product, follow the instructions provided with the private offer before following this tutorial. A private offer is designed either to replace an active subscription when the product is already activated, or for first-time activations.

2.1. Prerequisites

- Make sure to log in to the Red Hat account that you plan to associate with the AWS account where you have activated ROSA with HCP in previous steps.

- Only a single AWS account that will be used for service billing can be associated with a Red Hat account. Typically, the billed account is an organizational AWS account that has other AWS accounts, such as developer accounts, linked to it, rather than an individual AWS end user account.

- Red Hat accounts belonging to the same Red Hat organization will be linked with the same AWS account. Therefore, you can manage who has access to creating ROSA with HCP clusters on the Red Hat organization account level.

2.2. Subscription enablement and AWS account setup

Activate the ROSA with HCP product at the AWS console page by clicking the Get started button:

If you have activated ROSA before but did not complete the process, you can click the button and complete the account linking as described in the following steps.

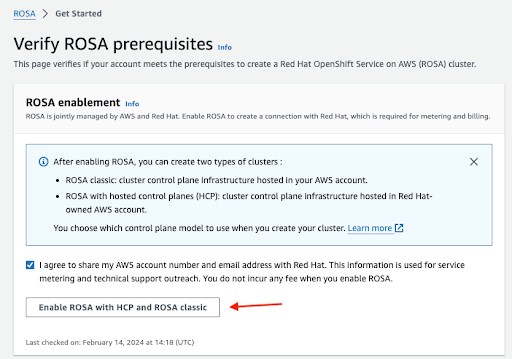

Confirm that you want your contact information to be shared with Red Hat and enable the service:

- You will not be charged for enabling the service in this step. The connection is made for billing and metering, which take place only after you deploy your first cluster. Enabling the service could take a few minutes.

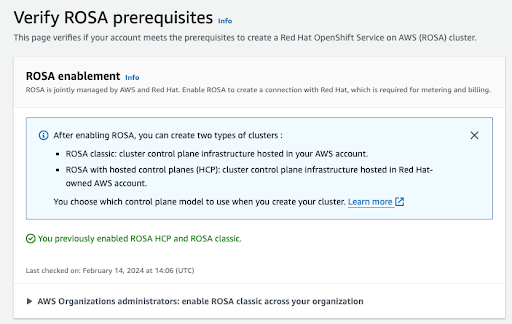

After the process is completed, you will see a confirmation:

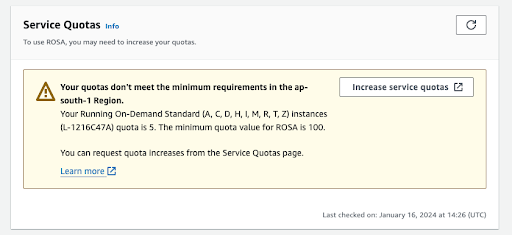

Other sections on this verification page show the status of additional prerequisites. In case any of these prerequisites are not met, a respective message is shown. Here is an example of insufficient quotas in the selected region:

- Click the Increase service quotas button or use the Learn more link to get more information about how to manage service quotas. Note that quotas are region-specific. You can use the region switcher in the upper right corner of the web console to re-run the quota check for any region you are interested in and then submit service quota increase requests as needed.

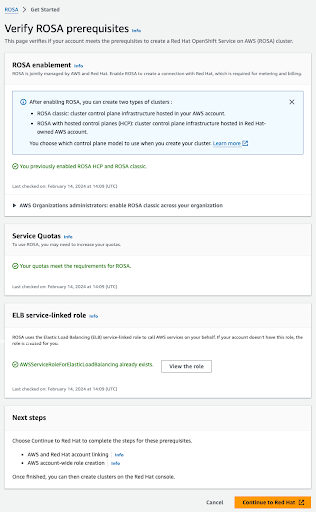

If all the prerequisites are met, the page will look like this:

The ELB service-linked role is created for you automatically. You can click any of the small Info blue links to get contextual help and resources.

2.3. AWS and Red Hat account and subscription linking

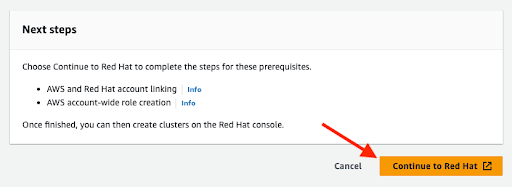

Click the orange Continue to Red Hat button to proceed with account linking:

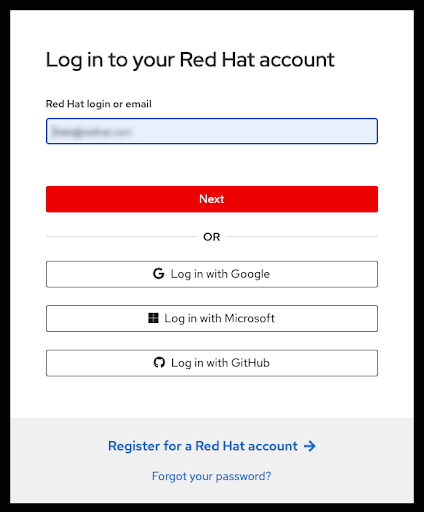

If you are not already logged in to your Red Hat account in your current browser’s session, you will be asked to log in to your account:

- You can also register for a new Red Hat account or reset your password on this page.

- Make sure to log in to the Red Hat account that you plan to associate with the AWS account where you have activated ROSA with HCP in previous steps.

- Only a single AWS account that will be used for service billing can be associated with a Red Hat account. Typically, the billed account is an organizational AWS account that has other AWS accounts, such as developer accounts, linked to it, rather than an individual AWS end user account.

- Red Hat accounts belonging to the same Red Hat organization will be linked with the same AWS account. Therefore, you can manage who has access to creating ROSA with HCP clusters on the Red Hat organization account level.

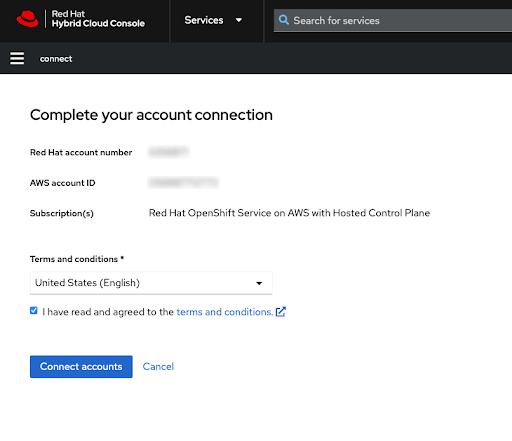

Complete the Red Hat account linking after reviewing the terms and conditions:

Note: This step is available only if the logged-in Red Hat account, or the Red Hat organization managing the Red Hat account, was not linked to an AWS account before.

Both the Red Hat and AWS account numbers are shown on this screen.

Click the Connect accounts button if you agree with the service terms.

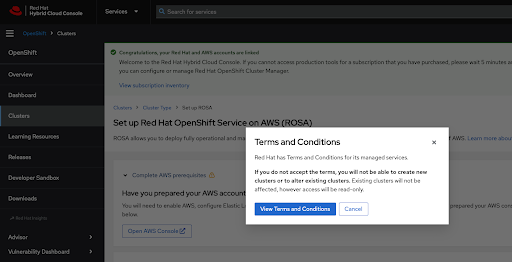

If this is the first time you are using the Red Hat Hybrid Cloud Console, you will be asked to agree with the general managed services terms and conditions before being able to create the first ROSA cluster:

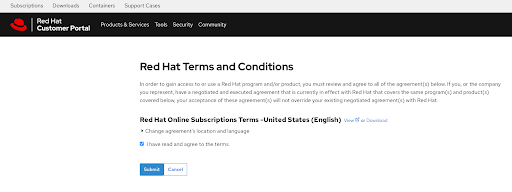

Additional terms that need to be reviewed and accepted will be shown after clicking the View Terms and Conditions button:

Submit your agreement once you have reviewed any additional terms when prompted at this time.

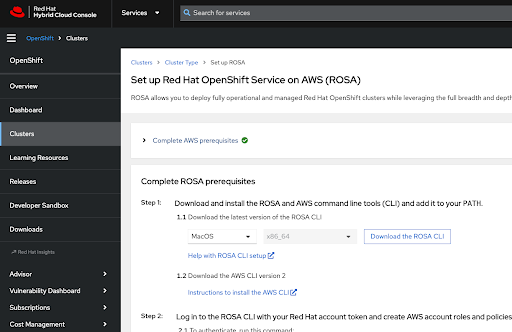

The Hybrid Cloud Console provides a confirmation that AWS prerequisites were completed and lists the first steps needed for cluster deployment:

The following steps pertain to technical deployment of the cluster:

- It is possible that these steps will be performed on a different machine than where the service enablement and account linking were completed.

As mentioned previously, any Red Hat account belonging to the Red Hat organization that was linked with the AWS account that activated the ROSA service will have access to creating a cluster and will be able to select the billing AWS account that was linked under this Red Hat organization previously.

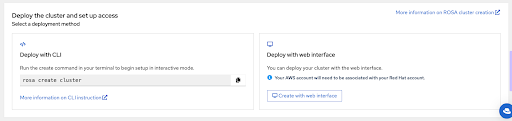

The last section of this page shows cluster deployment options, either using the rosa CLI or through the web console:

- The following steps describe cluster deployment using the rosa CLI.

- If you are interested in deployment using the web console only, you can skip to the ROSA with HCP cluster deployment using the web console section. However, note that the rosa CLI is required for certain tasks, such as creating the account roles. If you are deploying ROSA for the first time, follow the CLI steps until you run the rosa whoami command before skipping to the web console deployment steps.

2.4. ROSA with HCP cluster deployment using the CLI

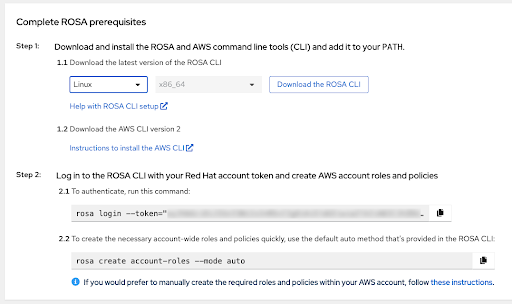

Click the Download the ROSA CLI button to download the ROSA command line interface (CLI) for your operating system and set it up as described in the Help with ROSA CLI setup.

Important: Make sure that you have the most recent AWS CLI installed. See Instructions to install the AWS CLI for more information.
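Before continuing, it can help to confirm that both CLIs are actually on your PATH. The following is a minimal sketch, not part of the official setup: the check_clis function name is hypothetical, and it relies only on the POSIX command -v builtin.

```shell
#!/bin/sh
# Report which of the given commands are missing from PATH.
# Returns 0 when every command is found, 1 otherwise.
# check_clis is a hypothetical helper name used for illustration only.
check_clis() {
  missing=0
  for cli in "$@"; do
    if command -v "$cli" >/dev/null 2>&1; then
      echo "found: $cli"
    else
      echo "missing: $cli" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Usage for this tutorial (both CLIs are required):
#   check_clis rosa aws
```

A non-zero return status means at least one CLI still needs to be installed before you continue with the login step.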


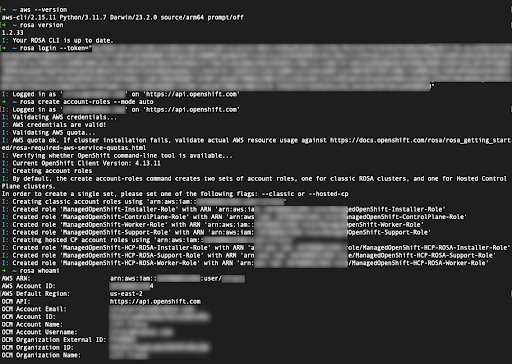

After the previous steps are completed, you can verify that both CLI are available by running the

rosa version. This command shows an update notification if you are using an older version andaws –versioncommands in your terminal. The prerequisite for creating a ROSA with HCP cluster is to log in using the

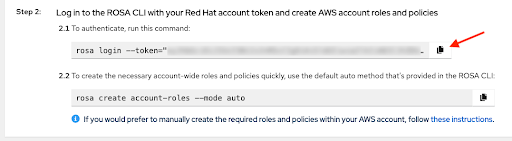

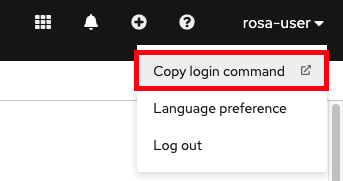

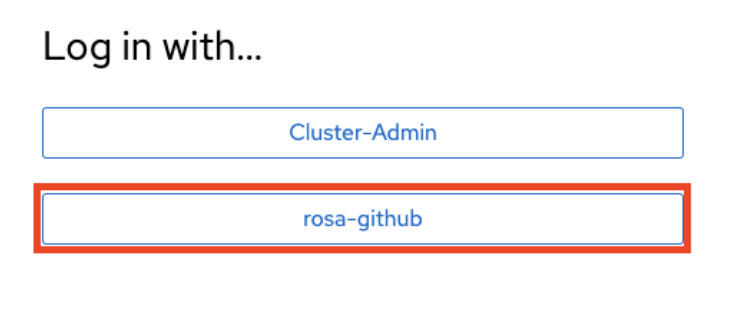

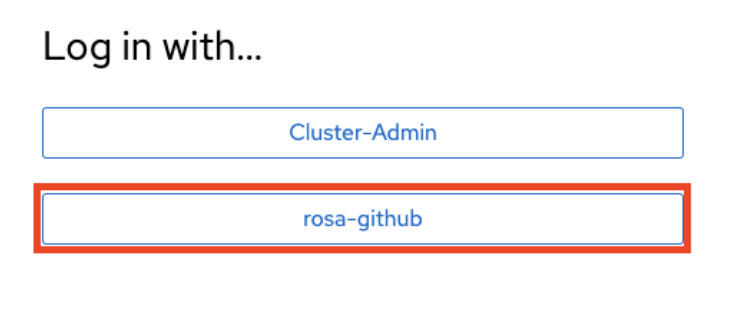

rosacli by the personalized command with your unique token shown under step 2.1. To authenticate, run this command on the web console. Use the copy button for easy copy and pasting of the command with full token into your terminal:

Do not share your unique token.

-

The final prerequisite before your first cluster deployment is making sure the necessary account-wide roles and policies are created. The

rosaCLI can help with that by using the command shown under step 2.2. To create the necessary account-wide roles and policies quickly… on the web console. The alternative to that is manual creation of these roles and policies. After logging in, creating the account roles, and verifying your identity using the

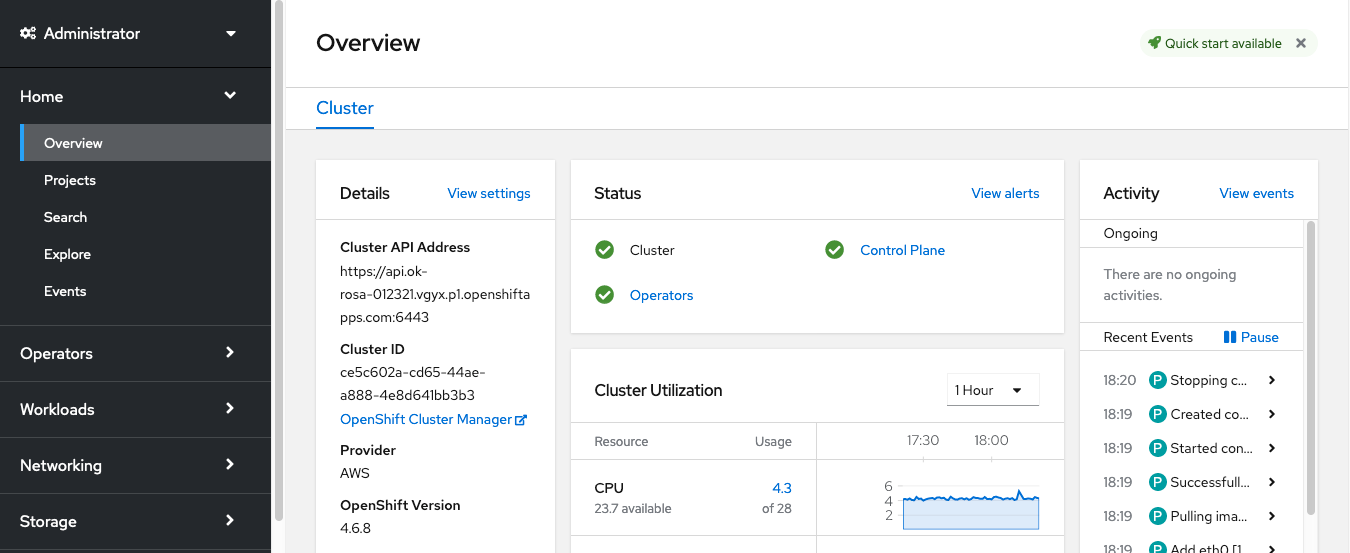

rosa whoamicommand, your terminal will look similar to this:

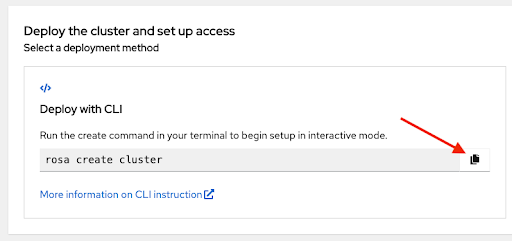

Initiate the cluster deployment using the presented command. You can click the copy button again and paste the command in your terminal:

-

To use a custom AWS profile, one of the non-default profiles specified in your

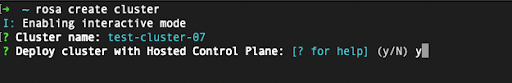

~/.aws/credentials, you can add the–profile <profile_name>selector to the rosa create cluster command so that the command looks like rosa create cluster–profile stage. If no AWS CLI profile is specified using this option, the default AWS CLI profile will determine the AWS infrastructure profile into which the cluster is deployed. The billing AWS profile is selected in one of the following steps. After entering a cluster name, you will be asked whether to use the hosted control plane. Select yes:

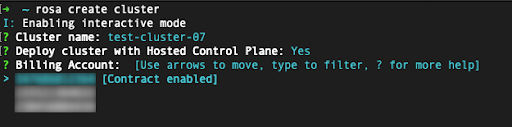

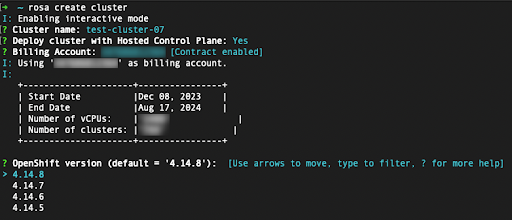

When deploying a ROSA with HCP cluster, the billing AWS account needs to be specified:

- Only AWS accounts that were linked to the Red Hat organization that is currently used will be shown.

- The specified AWS account will be charged for using the ROSA service, regardless of whether the infrastructure AWS account is linked to it in the same AWS organization.

- You can see an indicator of whether the ROSA contract is enabled for a given AWS billing account or not.

- To select an AWS account that does not have the contract enabled, refer to the first few steps in this tutorial to enable the contract and allow the service charging, which is required for a successful cluster deployment.

In the following steps, you will specify technical details of the cluster that is to be deployed:

- These steps are beyond the scope of this tutorial. See Creating ROSA with HCP clusters using the default options for more details about how to complete the ROSA with HCP cluster deployment using the CLI.

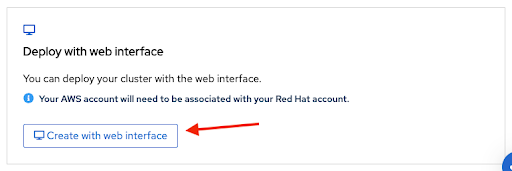

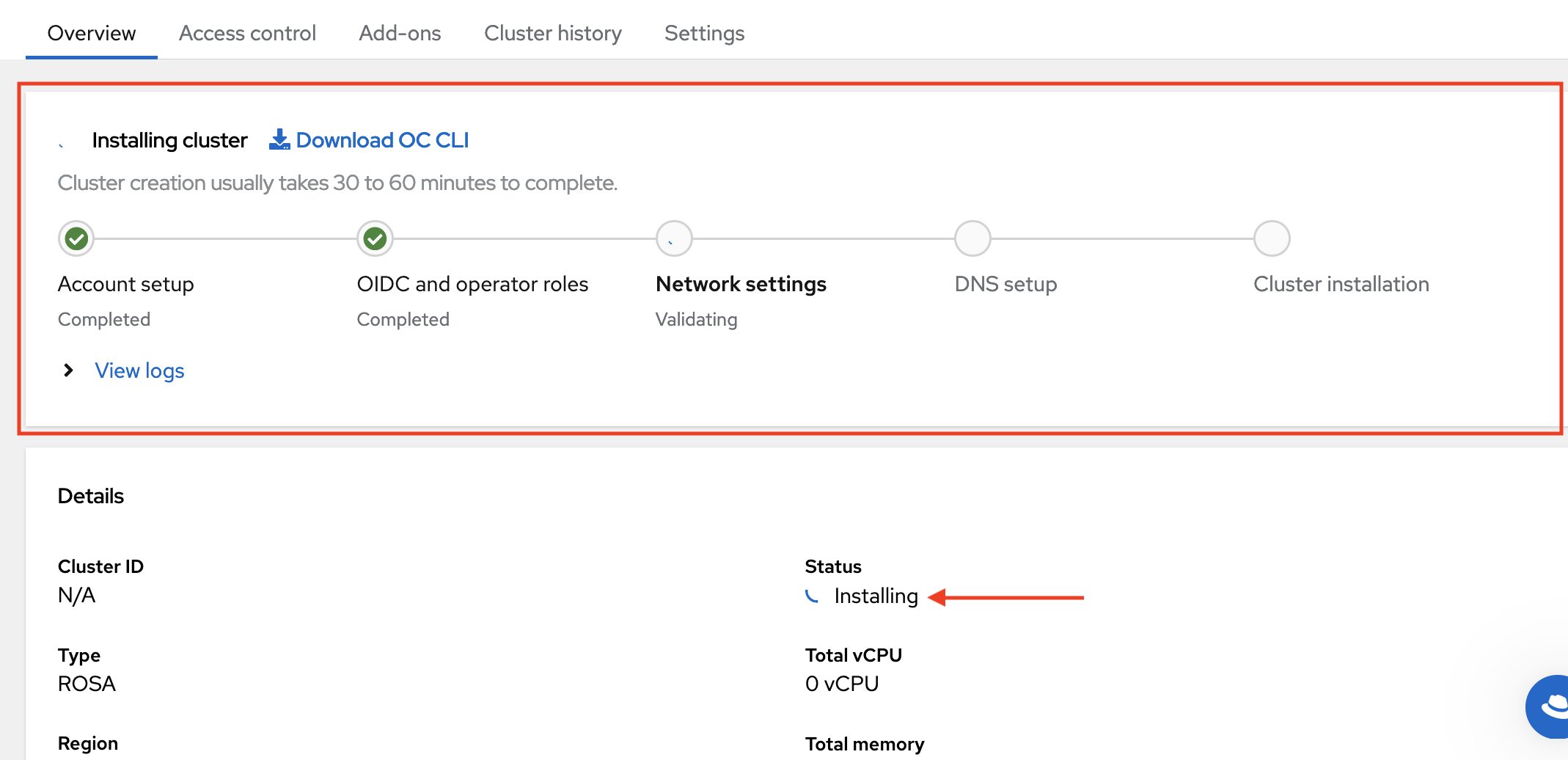

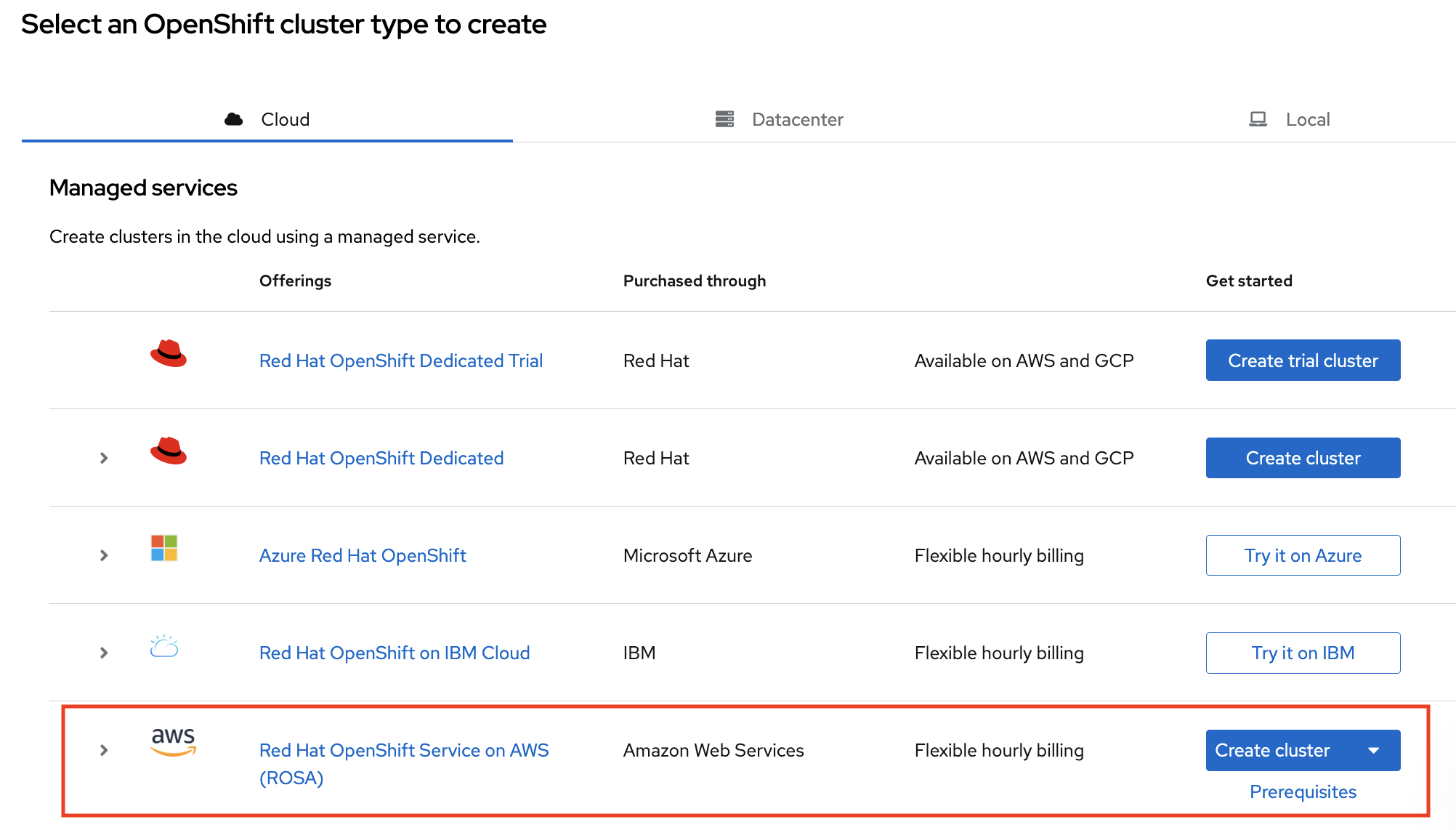

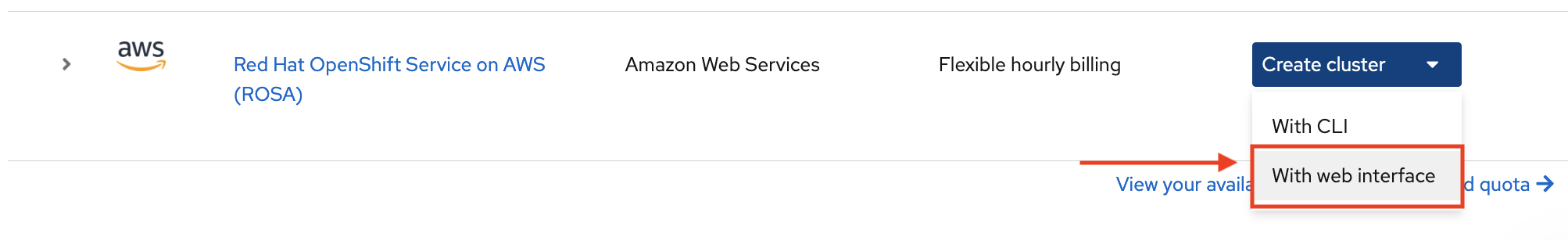

2.5. ROSA with HCP cluster deployment using the web console

A cluster can be created using the web console by selecting the second option in the bottom section of the introductory Set up ROSA page:

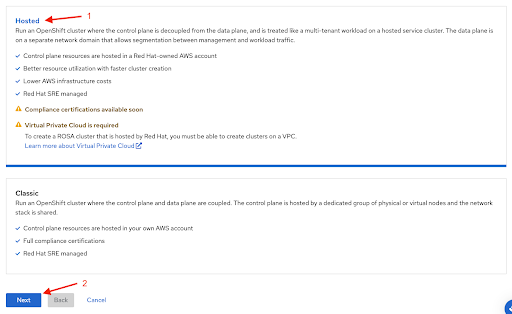

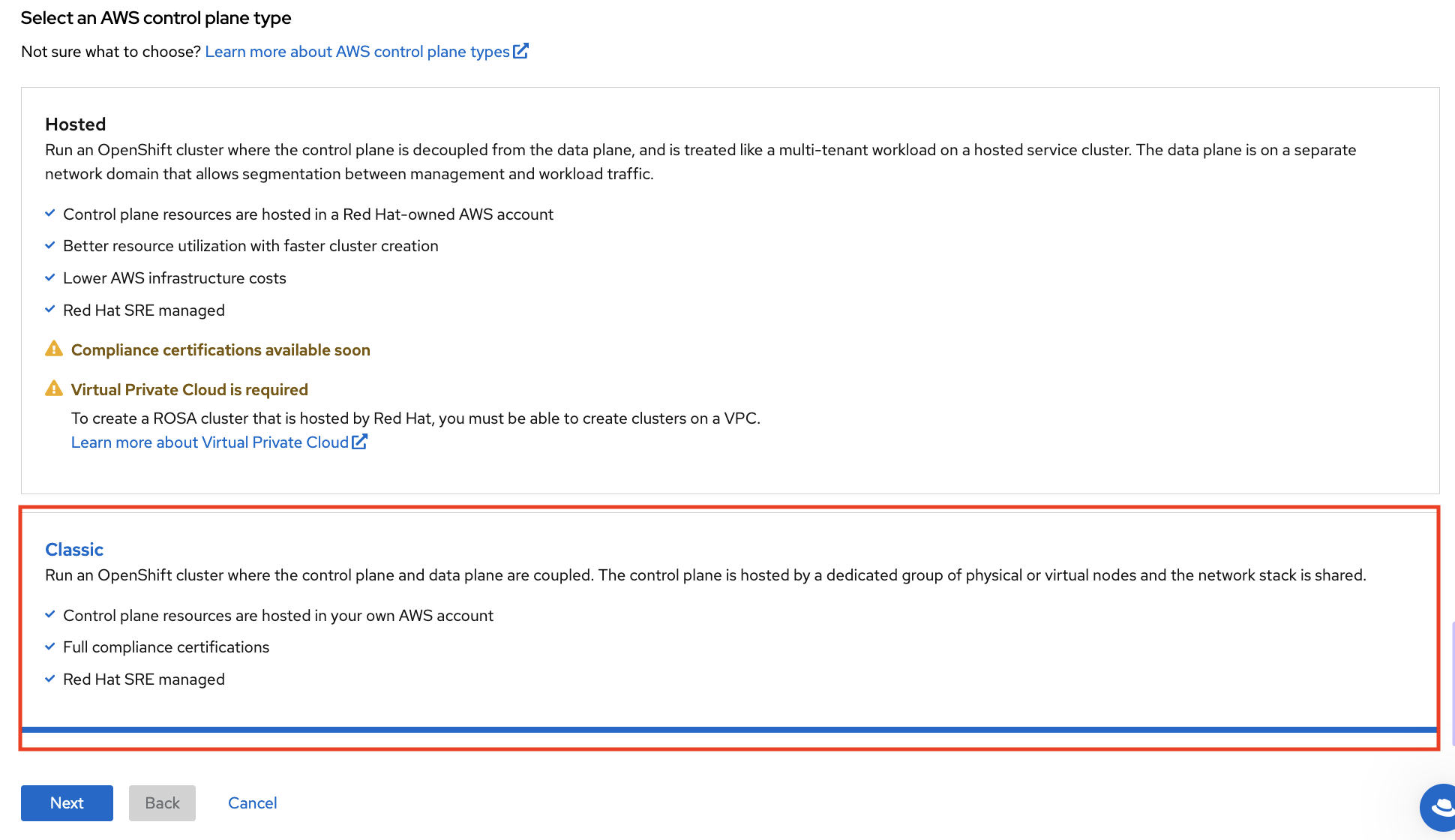

The first step when creating a ROSA cluster using the web console is the control plane selection. Make sure the Hosted option is selected before clicking the Next button:

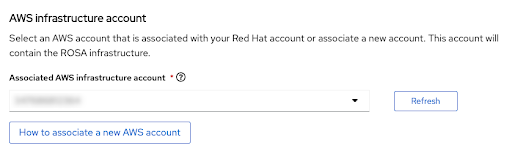

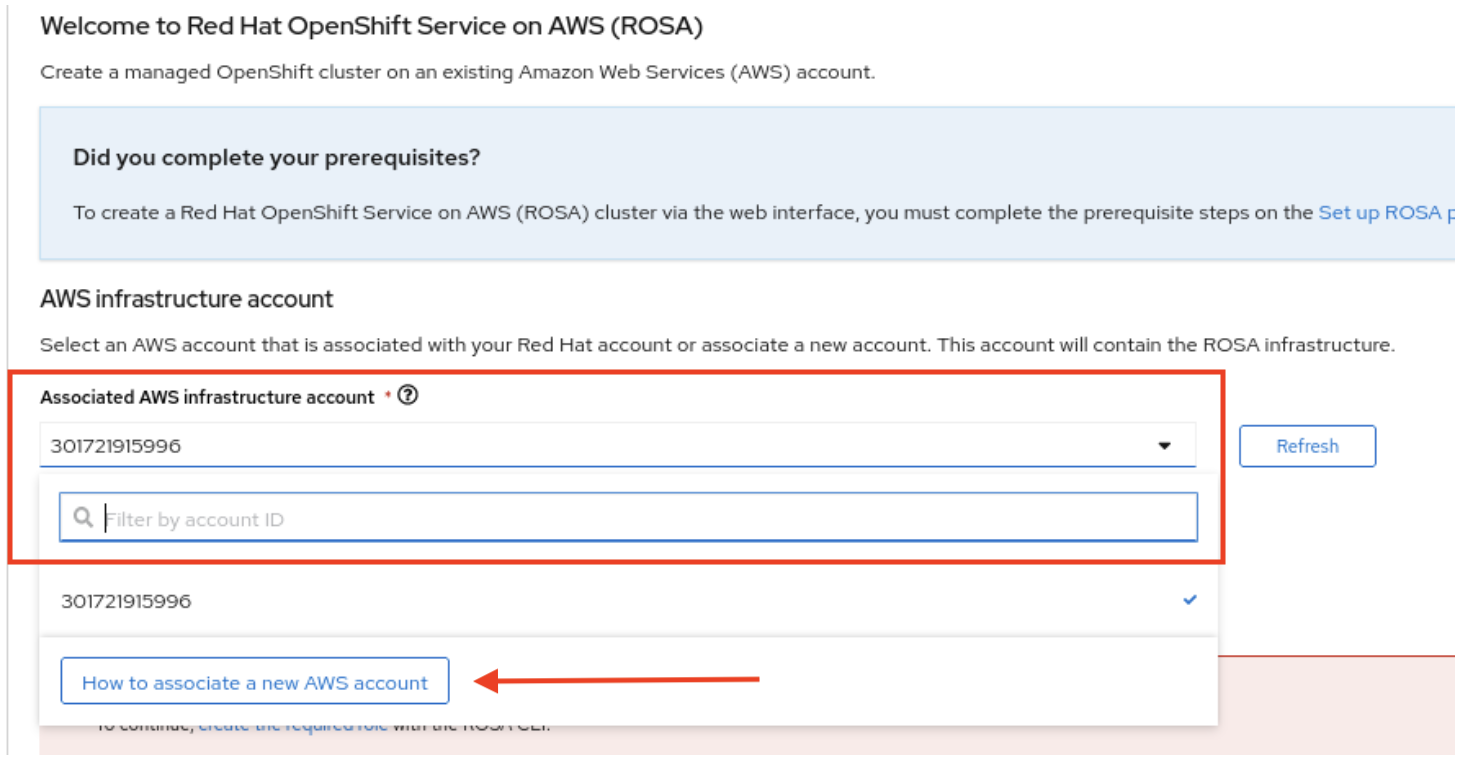

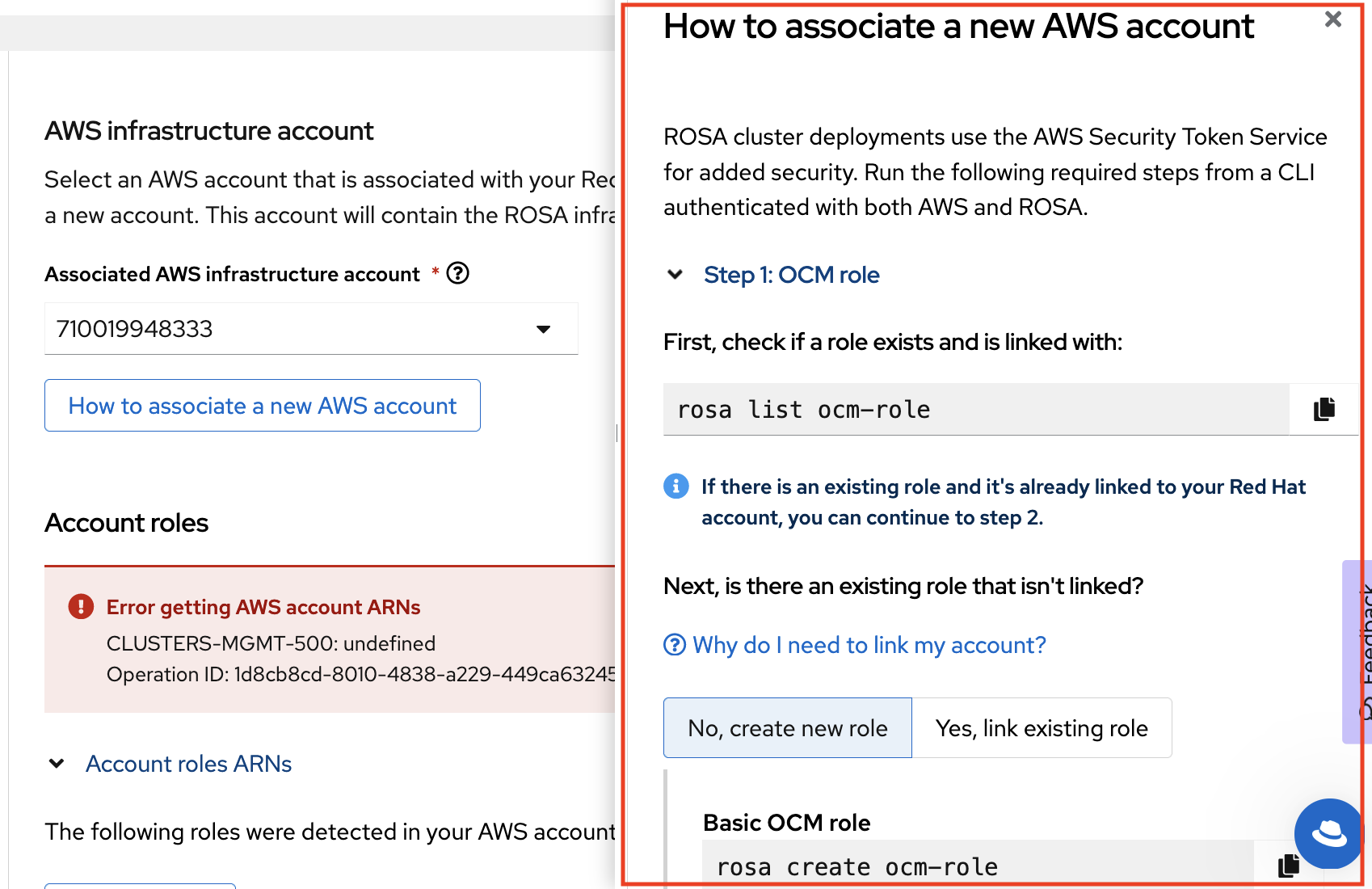

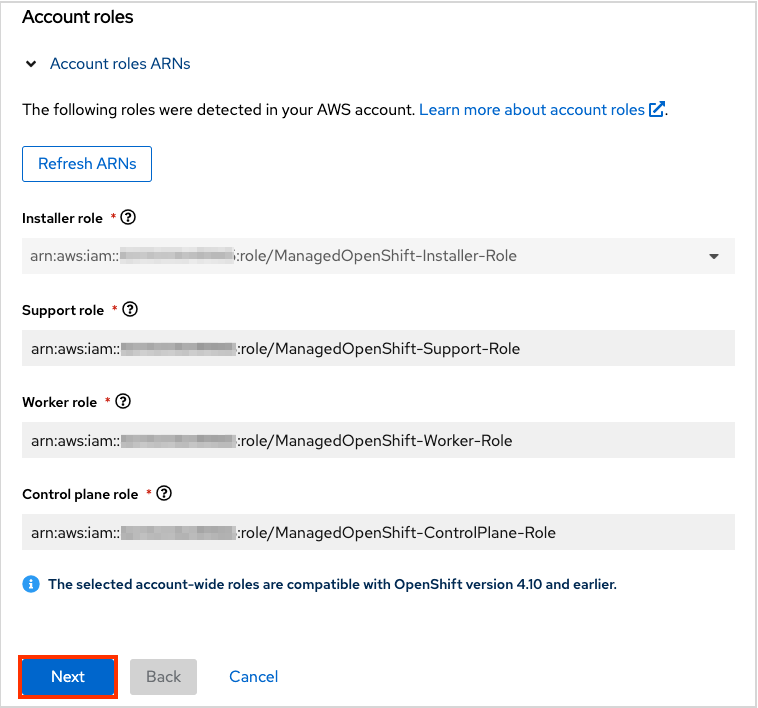

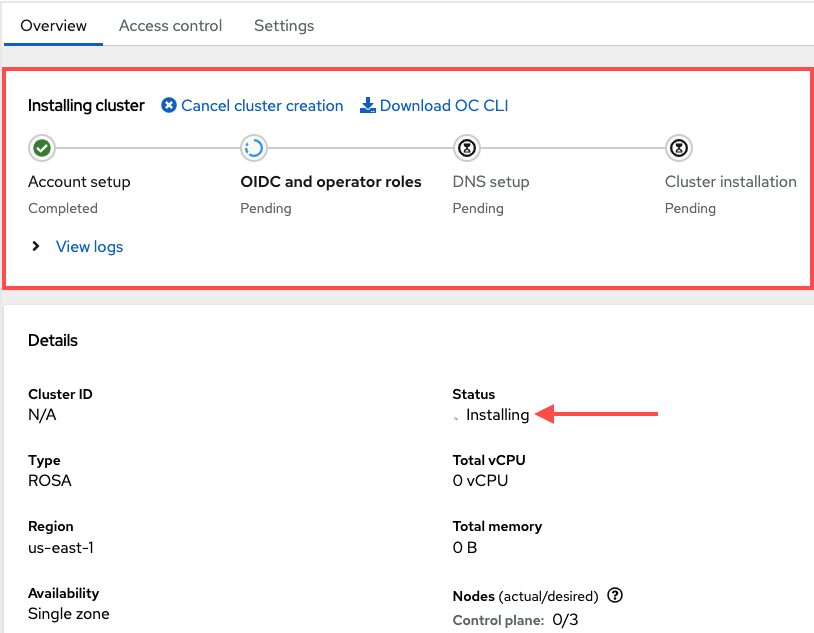

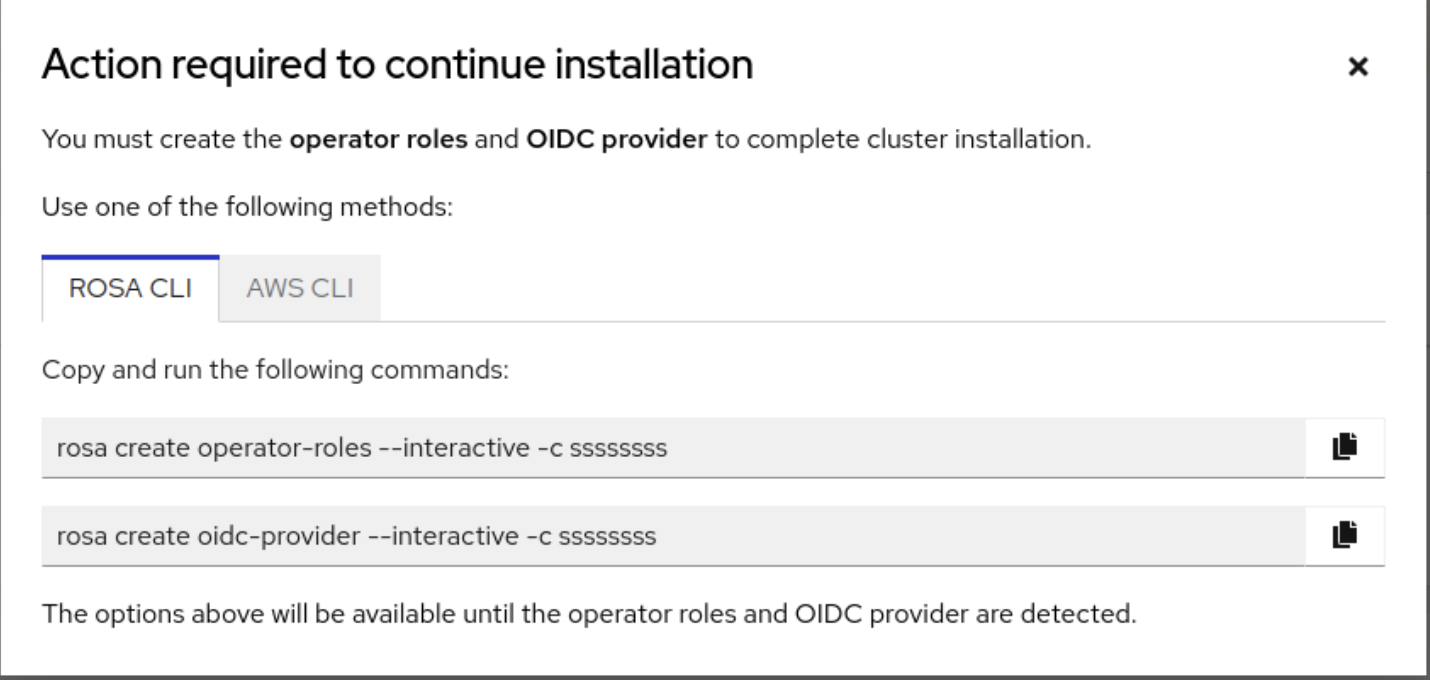

The next step, Accounts and roles, allows you to specify the infrastructure AWS account into which the ROSA cluster will be deployed and where the resources will be consumed and managed:

- If you do not see the account into which you want to deploy the ROSA cluster, click How to associate a new AWS account for detailed information on how to create or link account roles for this association.

- The rosa CLI is used for this.

- If you are using multiple AWS accounts and have their profiles configured for the AWS CLI, you can use the --profile selector to specify the AWS profile when working with the rosa CLI commands.

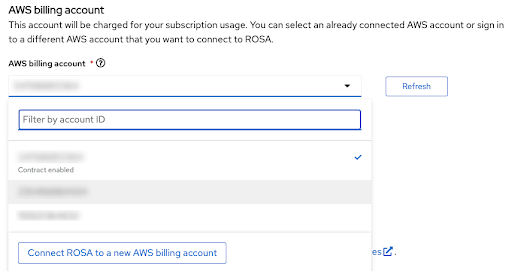

The billing AWS account is selected in the immediately following section:

- Only AWS accounts that were linked to the Red Hat organization that is currently used will be shown.

- The specified AWS account will be charged for using the ROSA service, regardless of whether the infrastructure AWS account is linked to it in the same AWS organization.

- You can see an indicator of whether the ROSA contract is enabled for a given AWS billing account or not.

- If you would like to use an AWS account that does not have a contract enabled yet, you can either use the Connect ROSA to a new AWS billing account option to reach the ROSA AWS console page, where you can activate it after logging in with the respective AWS account by following the steps described earlier in this tutorial, or ask the administrator of the AWS account to do that for you.

The following steps past the billing AWS account selection are beyond the scope of this tutorial.

Additional resources

- For information on using the CLI to create a cluster, see Creating a ROSA with HCP cluster using the CLI.

- See this learning path for more details on how to complete ROSA cluster deployment using the web console.

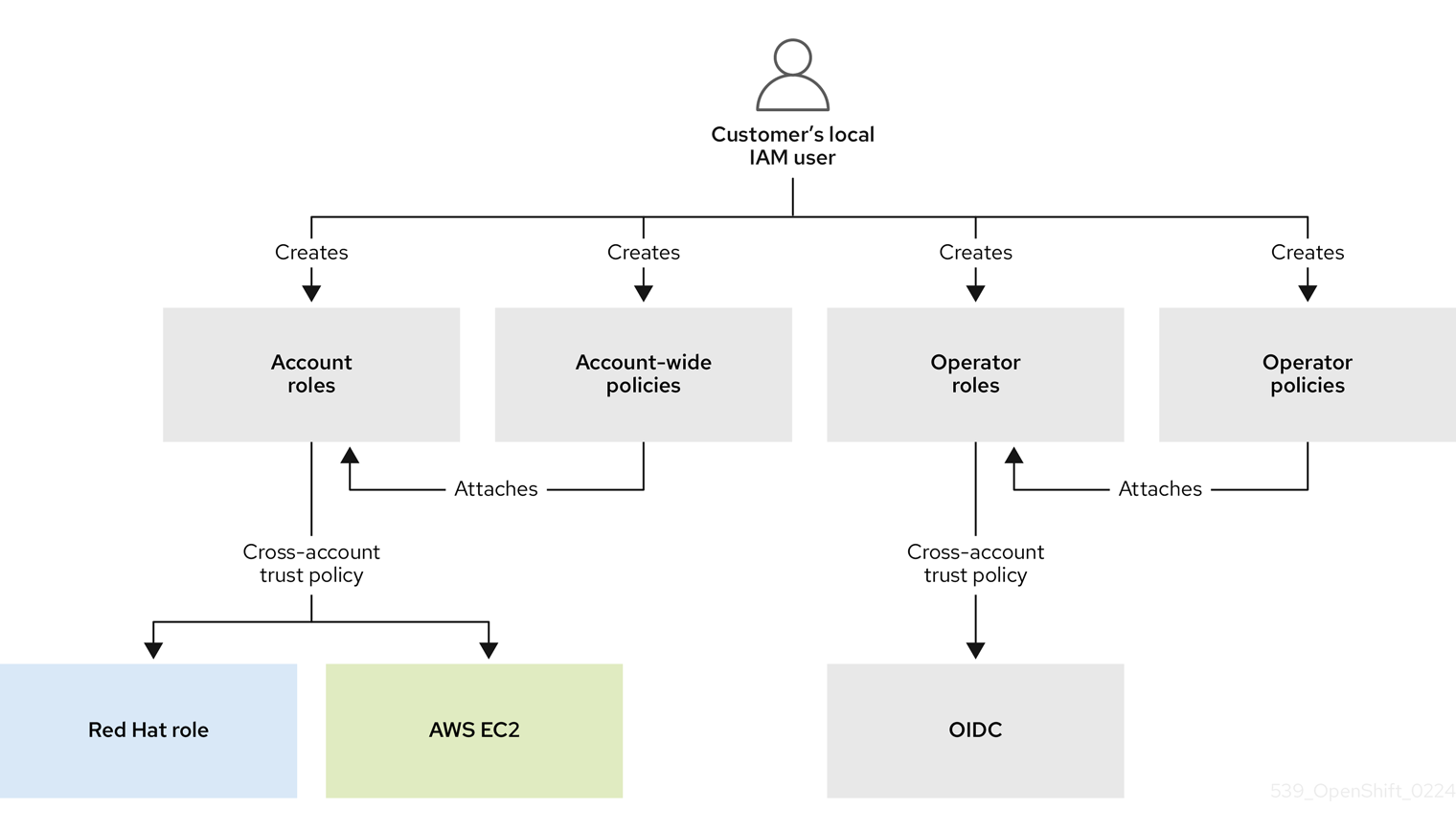

Chapter 3. Tutorial: Verifying Permissions for a ROSA STS Deployment

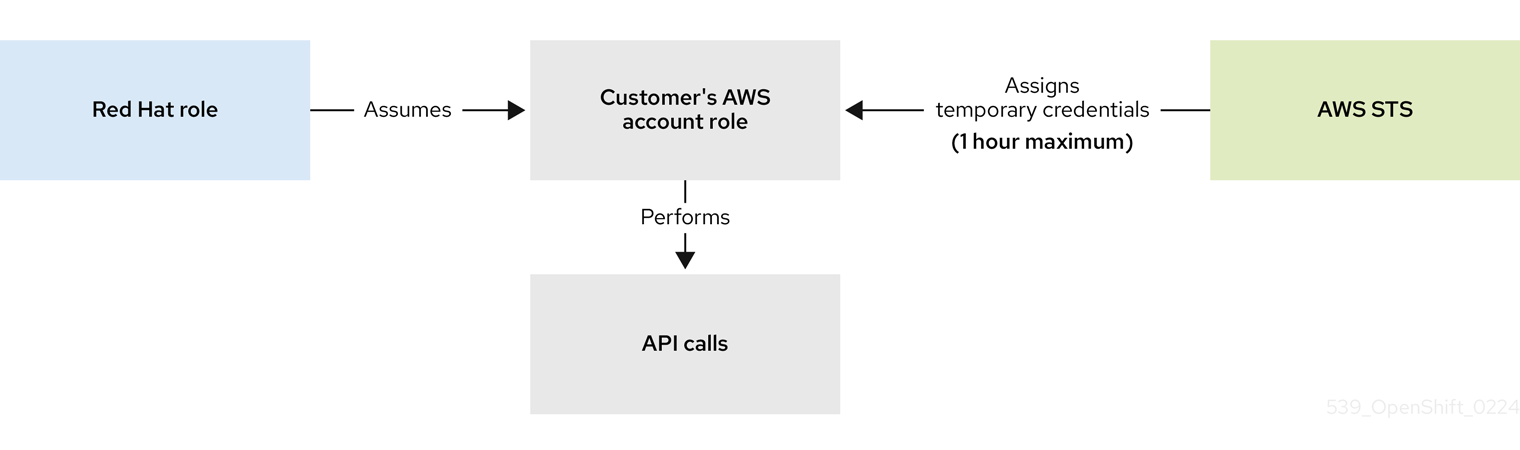

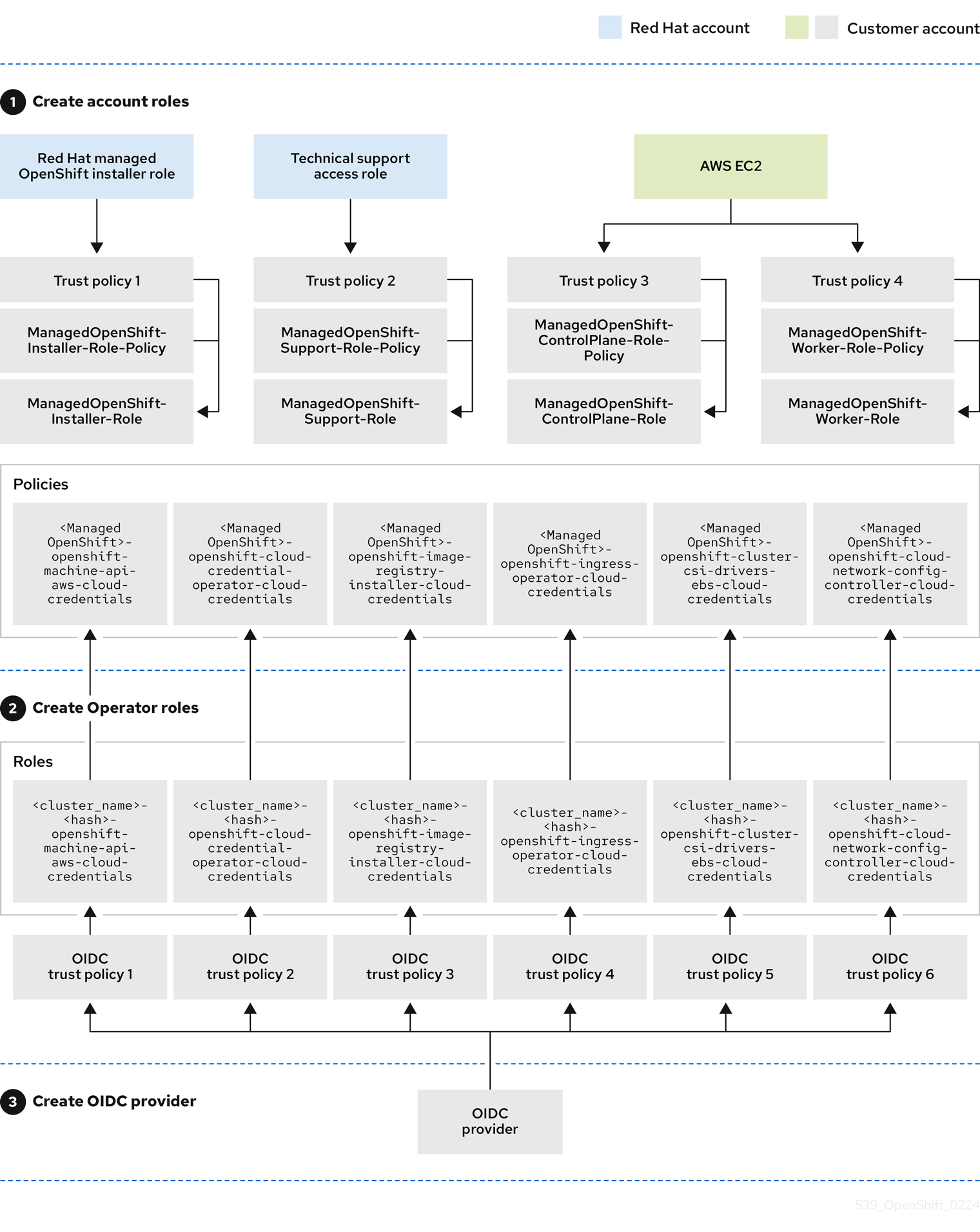

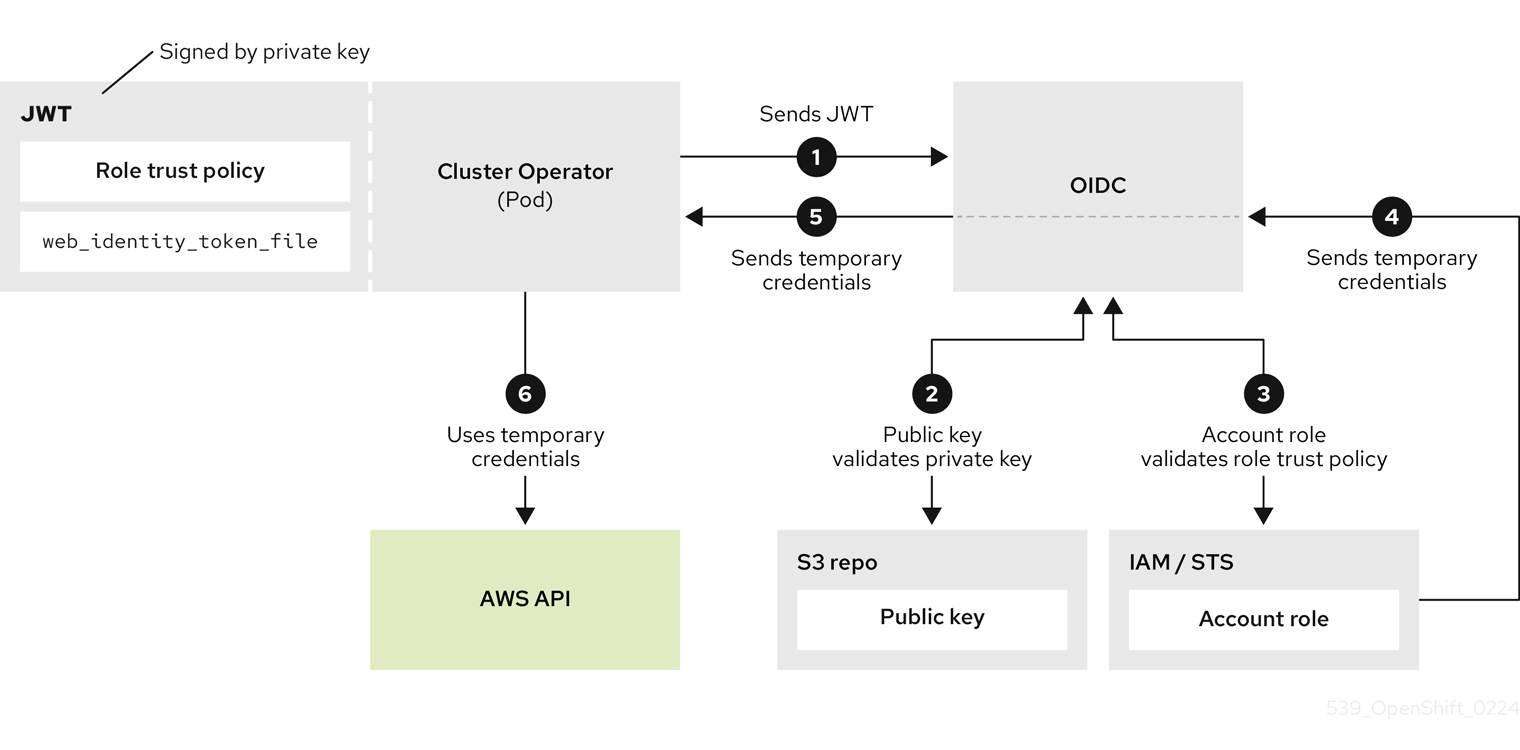

To proceed with the deployment of a ROSA cluster, an account must support the required roles and permissions. AWS Service Control Policies (SCPs) must not block the API calls made by the installer or operator roles.

Details about the IAM resources required for an STS-enabled installation of ROSA can be found here: About IAM resources for ROSA clusters that use STS

This guide is validated for ROSA v4.11.X.

3.1. Prerequisites

- The rosa, aws, and jq CLIs, which the verification script calls

3.2. Verifying ROSA permissions

To verify the permissions required for ROSA, we can run the script included in the following section without ever creating any AWS resources.

The script uses the rosa, aws, and jq CLI commands to create files in the working directory that will be used to verify permissions in the account connected to the current AWS configuration.

The AWS Policy Simulator is used to verify the permissions of each role policy against the API calls extracted by jq; results are then stored in a text file appended with .results.

This script is designed to verify the permissions for the current account and region.

3.3. Usage Instructions

To use the script, run the following commands in a bash terminal (the -p option defines a prefix for the roles):

$ mkdir scratch
$ cd scratch
$ cat << 'EOF' > verify-permissions.sh
#!/bin/bash
while getopts 'p:' OPTION; do
  case "$OPTION" in
    p)
      PREFIX="$OPTARG"
      ;;
    ?)
      echo "script usage: $(basename "$0") [-p PREFIX]" >&2
      exit 1
      ;;
  esac
done
shift "$((OPTIND - 1))"
rosa create account-roles --mode manual --prefix "$PREFIX"
INSTALLER_POLICY=$(jq . sts_installer_permission_policy.json)
CONTROL_PLANE_POLICY=$(jq . sts_instance_controlplane_permission_policy.json)
WORKER_POLICY=$(jq . sts_instance_worker_permission_policy.json)
SUPPORT_POLICY=$(jq . sts_support_permission_policy.json)
simulatePolicy () {
  outputFile="${2}.results"
  echo "$2"
  aws iam simulate-custom-policy --policy-input-list "$1" \
    --action-names $(jq -r '.Statement | map(select(.Effect == "Allow"))[].Action | if type == "string" then . else .[] end' "$2") \
    --output text > "$outputFile"
}
simulatePolicy "$INSTALLER_POLICY" "sts_installer_permission_policy.json"
simulatePolicy "$CONTROL_PLANE_POLICY" "sts_instance_controlplane_permission_policy.json"
simulatePolicy "$WORKER_POLICY" "sts_instance_worker_permission_policy.json"
simulatePolicy "$SUPPORT_POLICY" "sts_support_permission_policy.json"
EOF
$ chmod +x verify-permissions.sh
$ ./verify-permissions.sh -p SimPolTest

After the script completes, review each results file to ensure that none of the required API calls are blocked:

$ for file in *.results; do echo "$file"; cat "$file"; done

The output will look similar to the following:

sts_installer_permission_policy.json.results
EVALUATIONRESULTS autoscaling:DescribeAutoScalingGroups allowed *
MATCHEDSTATEMENTS PolicyInputList.1 IAM Policy
ENDPOSITION 6 195
STARTPOSITION 17 3
EVALUATIONRESULTS ec2:AllocateAddress allowed *
MATCHEDSTATEMENTS PolicyInputList.1 IAM Policy
ENDPOSITION 6 195
STARTPOSITION 17 3
EVALUATIONRESULTS ec2:AssociateAddress allowed *
MATCHEDSTATEMENTS PolicyInputList.1 IAM Policy
...

Note: If any actions are blocked, review the error provided by AWS and consult with your Administrator to determine if SCPs are blocking the required API calls.
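Reading every results file by eye is error-prone when there are hundreds of actions. The following is a minimal sketch of an automated check. It assumes that each evaluation line has the whitespace-separated layout shown in the sample output (EVALUATIONRESULTS, action, decision, resource); find_blocked_actions is a hypothetical helper name and is not part of the script above.

```shell
#!/bin/sh
# Print every simulated action whose decision is not "allowed".
# Assumed line layout (taken from the sample output):
#   EVALUATIONRESULTS <action> <decision> <resource>
# Returns 0 if nothing is blocked, 1 if at least one action is blocked.
# find_blocked_actions is a hypothetical helper name.
find_blocked_actions() {
  awk '
    $1 == "EVALUATIONRESULTS" && $3 != "allowed" {
      printf "%s: %s (%s)\n", FILENAME, $2, $3
      blocked = 1
    }
    END { exit (blocked ? 1 : 0) }
  ' "$@"
}

# Usage, from the scratch directory that holds the results files:
#   find_blocked_actions *.results
```

Any line that this prints names the results file, the action, and the decision reported by the simulator, which is the information an administrator needs to look for a matching SCP.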

Chapter 4. Configuring log forwarding for CloudWatch logs and STS

Use this tutorial to deploy the Red Hat OpenShift Logging Operator and configure it to use Security Token Services (STS) authentication to forward logs to CloudWatch.

Prerequisites

- A Red Hat OpenShift Service on AWS (ROSA) Classic cluster

- The jq command-line interface (CLI)

- The Amazon Web Services (AWS) CLI (aws)

4.1. Setting up your environment

Configure the following environment variables, changing the cluster name to suit your cluster:

Note: You must be logged in as an administrator.

$ export ROSA_CLUSTER_NAME=$(oc get infrastructure cluster -o=jsonpath="{.status.infrastructureName}" | sed 's/-[a-z0-9]\{5\}$//')
$ export REGION=$(rosa describe cluster -c ${ROSA_CLUSTER_NAME} --output json | jq -r .region.id)
$ export OIDC_ENDPOINT=$(oc get authentication.config.openshift.io cluster -o json | jq -r .spec.serviceAccountIssuer | sed 's|^https://||')
$ export AWS_ACCOUNT_ID=$(aws sts get-caller-identity --query Account --output text)
$ export AWS_PAGER=""
$ export SCRATCH="/tmp/${ROSA_CLUSTER_NAME}/clf-cloudwatch-sts"
$ mkdir -p ${SCRATCH}

Ensure all fields output correctly before moving to the next section:

$ echo "Cluster: ${ROSA_CLUSTER_NAME}, Region: ${REGION}, OIDC Endpoint: ${OIDC_ENDPOINT}, AWS Account ID: ${AWS_ACCOUNT_ID}"
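An empty variable is easy to miss in a long echo line, and it only fails later when an AWS command receives a malformed ARN. As a minimal sketch, the hypothetical require_vars helper below reports every named variable that is unset or empty. It is an illustration written for plain POSIX sh, not part of the official procedure.

```shell
#!/bin/sh
# Fail if any named variable is unset or empty.
# require_vars is a hypothetical helper name used for illustration.
require_vars() {
  status=0
  for name in "$@"; do
    # Indirect lookup that also works in plain POSIX sh.
    eval "value=\${$name:-}"
    if [ -z "$value" ]; then
      echo "error: $name is empty" >&2
      status=1
    fi
  done
  return "$status"
}

# Usage with the variables from this section:
#   require_vars ROSA_CLUSTER_NAME REGION OIDC_ENDPOINT AWS_ACCOUNT_ID SCRATCH
```

Only pass variable names that you control to this helper, because the lookup uses eval.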

4.2. Preparing your AWS account

Create an Identity Access Management (IAM) policy for logging:

$ POLICY_ARN=$(aws iam list-policies --query "Policies[?PolicyName=='RosaCloudWatch'].{ARN:Arn}" --output text)
$ if [[ -z "${POLICY_ARN}" ]]; then
cat << EOF > ${SCRATCH}/policy.json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": [
        "logs:CreateLogGroup",
        "logs:CreateLogStream",
        "logs:DescribeLogGroups",
        "logs:DescribeLogStreams",
        "logs:PutLogEvents",
        "logs:PutRetentionPolicy"
      ],
      "Resource": "arn:aws:logs:*:*:*"
    }
  ]
}
EOF
POLICY_ARN=$(aws iam create-policy --policy-name "RosaCloudWatch" \
  --policy-document file://${SCRATCH}/policy.json --query Policy.Arn --output text)
fi
$ echo ${POLICY_ARN}

Create an IAM role trust policy for the cluster:

$ cat <<EOF > ${SCRATCH}/trust-policy.json
{
  "Version": "2012-10-17",
  "Statement": [{
    "Effect": "Allow",
    "Principal": {
      "Federated": "arn:aws:iam::${AWS_ACCOUNT_ID}:oidc-provider/${OIDC_ENDPOINT}"
    },
    "Action": "sts:AssumeRoleWithWebIdentity",
    "Condition": {
      "StringEquals": {
        "${OIDC_ENDPOINT}:sub": "system:serviceaccount:openshift-logging:logcollector"
      }
    }
  }]
}
EOF
$ ROLE_ARN=$(aws iam create-role --role-name "${ROSA_CLUSTER_NAME}-RosaCloudWatch" \
  --assume-role-policy-document file://${SCRATCH}/trust-policy.json \
  --query Role.Arn --output text)
$ echo ${ROLE_ARN}

Attach the IAM policy to the IAM role:

$ aws iam attach-role-policy --role-name "${ROSA_CLUSTER_NAME}-RosaCloudWatch" \
  --policy-arn ${POLICY_ARN}
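The policy files in this section are written with an unquoted heredoc delimiter (EOF), so the shell substitutes values such as ${AWS_ACCOUNT_ID} and ${OIDC_ENDPOINT} before the file is written. The verification script in Chapter 3 uses a quoted delimiter ('EOF') for the opposite reason: its variables must stay literal until the script runs. The following sketch contrasts the two forms; the ISSUER value is a made-up placeholder.

```shell
#!/bin/sh
# Hypothetical issuer value, for illustration only.
ISSUER="oidc.example.com/abc123"

# Unquoted delimiter: the shell expands ${ISSUER} before writing.
expanded=$(cat <<EOF
"${ISSUER}:sub"
EOF
)

# Quoted delimiter: the text is kept literally, with no expansion.
literal=$(cat <<'EOF'
"${ISSUER}:sub"
EOF
)

echo "expanded: $expanded"
echo "literal:  $literal"
```

If a generated policy file contains a literal ${...} string, the delimiter was probably quoted. If it contains an empty account ID or endpoint, the variable was not set when the heredoc ran.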

4.3. Deploying Operators

Deploy the Red Hat OpenShift Logging Operator:

$ cat << EOF | oc apply -f -
apiVersion: operators.coreos.com/v1alpha1
kind: Subscription
metadata:
  labels:
    operators.coreos.com/cluster-logging.openshift-logging: ""
  name: cluster-logging
  namespace: openshift-logging
spec:
  channel: stable
  installPlanApproval: Automatic
  name: cluster-logging
  source: redhat-operators
  sourceNamespace: openshift-marketplace
EOF

Create a secret:

$ cat << EOF | oc apply -f -
apiVersion: v1
kind: Secret
metadata:
  name: cloudwatch-credentials
  namespace: openshift-logging
stringData:
  role_arn: $ROLE_ARN
EOF

4.4. Configuring cluster logging

Create a ClusterLogForwarder custom resource (CR):

$ cat << EOF | oc apply -f -
apiVersion: "logging.openshift.io/v1"
kind: ClusterLogForwarder
metadata:
  name: instance
  namespace: openshift-logging
spec:
  outputs:
    - name: cw
      type: cloudwatch
      cloudwatch:
        groupBy: namespaceName
        groupPrefix: rosa-${ROSA_CLUSTER_NAME}
        region: ${REGION}
      secret:
        name: cloudwatch-credentials
  pipelines:
    - name: to-cloudwatch
      inputRefs:
        - infrastructure
        - audit
        - application
      outputRefs:
        - cw
EOF

Create a ClusterLogging CR:

$ cat << EOF | oc apply -f -
apiVersion: logging.openshift.io/v1
kind: ClusterLogging
metadata:
  name: instance
  namespace: openshift-logging
spec:
  collection:
    logs:
      type: vector
  managementState: Managed
EOF

4.5. Checking CloudWatch for logs

Use either the AWS console or the AWS CLI to validate that there are log streams from the cluster.

To validate the logs in the AWS CLI, run the following command:

$ aws logs describe-log-groups --log-group-name-prefix rosa-${ROSA_CLUSTER_NAME}

Sample output

{
  "logGroups": [
    {
      "logGroupName": "rosa-xxxx.audit",
      "creationTime": 1661286368369,
      "metricFilterCount": 0,
      "arn": "arn:aws:logs:us-east-2:xxxx:log-group:rosa-xxxx.audit:*",
      "storedBytes": 0
    },
    {
      "logGroupName": "rosa-xxxx.infrastructure",
      "creationTime": 1661286369821,
      "metricFilterCount": 0,
      "arn": "arn:aws:logs:us-east-2:xxxx:log-group:rosa-xxxx.infrastructure:*",
      "storedBytes": 0
    }
  ]
}

Note: If this is a new cluster, you might not see a log group for application logs as applications are not yet running.

4.6. Cleaning up your resources

Delete the ClusterLogForwarder CR:

$ oc delete -n openshift-logging clusterlogforwarder instance

Delete the ClusterLogging CR:

$ oc delete -n openshift-logging clusterlogging instance

Detach the IAM policy from the IAM role:

$ aws iam detach-role-policy --role-name "${ROSA_CLUSTER_NAME}-RosaCloudWatch" \
  --policy-arn "${POLICY_ARN}"

Delete the IAM role:

$ aws iam delete-role --role-name "${ROSA_CLUSTER_NAME}-RosaCloudWatch"

Delete the IAM policy:

Important: Only delete the IAM policy if there are no other resources using the policy.

$ aws iam delete-policy --policy-arn "${POLICY_ARN}"

Delete the CloudWatch log groups:

$ aws logs delete-log-group --log-group-name "rosa-${ROSA_CLUSTER_NAME}.audit"
$ aws logs delete-log-group --log-group-name "rosa-${ROSA_CLUSTER_NAME}.infrastructure"

Chapter 5. Tutorial: Using AWS WAF and Amazon CloudFront to protect ROSA workloads

AWS WAF is a web application firewall that lets you monitor the HTTP and HTTPS requests that are forwarded to your protected web application resources.

You can use Amazon CloudFront to add a Web Application Firewall (WAF) to your Red Hat OpenShift Service on AWS (ROSA) workloads. Using an external solution protects ROSA resources from experiencing denial of service due to handling the WAF.

5.1. Prerequisites

- A ROSA Classic cluster.

- You have access to the OpenShift CLI (oc).

- You have access to the AWS CLI (aws).

5.1.1. Environment setup

Prepare the environment variables:

$ export AWS_PAGER=""
$ export CLUSTER_NAME=$(oc get infrastructure cluster -o=jsonpath="{.status.infrastructureName}" | sed 's/-[a-z0-9]\{5\}$//')
$ export REGION=$(oc get infrastructure cluster -o=jsonpath="{.status.platformStatus.aws.region}")
$ export AWS_ACCOUNT_ID=$(aws sts get-caller-identity --query Account --output text)
$ export SCRATCH="/tmp/${CLUSTER_NAME}/cloudfront-waf"
$ mkdir -p ${SCRATCH}
$ echo "Cluster: ${CLUSTER_NAME}, Region: ${REGION}, AWS Account ID: ${AWS_ACCOUNT_ID}"
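The sed expression in the CLUSTER_NAME assignment removes a trailing hyphen followed by five lowercase letters or digits. This sketch assumes that the infrastructure name is the cluster name plus such a generated suffix; the sample names and the strip_infra_suffix helper are hypothetical.

```shell
#!/bin/sh
# Remove a trailing "-" plus five lowercase letters or digits.
# strip_infra_suffix is a hypothetical helper name; the sed expression
# is the one used in the environment setup.
strip_infra_suffix() {
  printf '%s\n' "$1" | sed 's/-[a-z0-9]\{5\}$//'
}

strip_infra_suffix "my-cluster-x7k2p"   # prints my-cluster
strip_infra_suffix "prod-west"          # prints prod-west (no 5-character suffix)
```

Names that do not end in a five-character suffix pass through unchanged, so the expression is safe to apply even if the value is already a plain cluster name.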

5.2. Custom domain setup

It is necessary to configure a secondary ingress controller to segment your external WAF-protected traffic from your standard (and default) cluster ingress controller. In ROSA, we do this using the Custom Domain Operator.

Prerequisites

- A unique domain, such as *.apps.<company_name>.io

- A custom SAN or wildcard certificate, such as CN=*.apps.<company_name>.io

Procedure

Create a new project:

$ oc new-project waf-demo

Create a new TLS secret from a private key and a public certificate, where fullchain.pem is your full wildcard certificate chain (including any intermediaries) and privkey.pem is your wildcard certificate’s private key.

Example

$ oc -n waf-demo create secret tls waf-tls --cert=fullchain.pem --key=privkey.pem

Create a new CustomDomain custom resource (CR):

Example waf-custom-domain.yaml

apiVersion: managed.openshift.io/v1alpha1
kind: CustomDomain
metadata:
  name: cloudfront-waf
spec:
  domain: apps.<company_name>.io
  scope: External
  loadBalancerType: NLB
  certificate:
    name: waf-tls
    namespace: waf-demo
  routeSelector:
    matchLabels:
      route: waf

Apply the CR:

Example

$ oc apply -f waf-custom-domain.yaml

Verify that your custom domain ingress controller has been deployed and is Ready:

$ oc get customdomains

Example output

NAME             ENDPOINT                                                      DOMAIN                     STATUS
cloudfront-waf   xxrywp.<company_name>.cluster-01.opln.s1.openshiftapps.com   *.apps.<company_name>.io   Ready

5.2.1. Configure the AWS WAF

The AWS WAF service is a web application firewall that lets you monitor, protect, and control the HTTP and HTTPS requests that are forwarded to your protected web application resources, like ROSA.

Create an AWS WAF rules file to apply to our web ACL:

$ cat << EOF > ${SCRATCH}/waf-rules.json
[
  {
    "Name": "AWS-AWSManagedRulesCommonRuleSet",
    "Priority": 0,
    "Statement": {
      "ManagedRuleGroupStatement": {
        "VendorName": "AWS",
        "Name": "AWSManagedRulesCommonRuleSet"
      }
    },
    "OverrideAction": {
      "None": {}
    },
    "VisibilityConfig": {
      "SampledRequestsEnabled": true,
      "CloudWatchMetricsEnabled": true,
      "MetricName": "AWS-AWSManagedRulesCommonRuleSet"
    }
  },
  {
    "Name": "AWS-AWSManagedRulesSQLiRuleSet",
    "Priority": 1,
    "Statement": {
      "ManagedRuleGroupStatement": {
        "VendorName": "AWS",
        "Name": "AWSManagedRulesSQLiRuleSet"
      }
    },
    "OverrideAction": {
      "None": {}
    },
    "VisibilityConfig": {
      "SampledRequestsEnabled": true,
      "CloudWatchMetricsEnabled": true,
      "MetricName": "AWS-AWSManagedRulesSQLiRuleSet"
    }
  }
]
EOF

This will enable the Core (Common) and SQL AWS Managed Rule Sets.

Create an AWS WAF Web ACL using the rules we specified above. Web ACLs with the CLOUDFRONT scope must be created in the us-east-1 region, regardless of the region in which your cluster is deployed:

$ WAF_WACL=$(aws wafv2 create-web-acl \
  --name cloudfront-waf \
  --region us-east-1 \
  --default-action Allow={} \
  --scope CLOUDFRONT \
  --visibility-config SampledRequestsEnabled=true,CloudWatchMetricsEnabled=true,MetricName=${CLUSTER_NAME}-waf-metrics \
  --rules file://${SCRATCH}/waf-rules.json \
  --query 'Summary.Name' \
  --output text)

5.3. Configure Amazon CloudFront

Retrieve the newly created custom domain ingress controller’s NLB hostname:

$ NLB=$(oc -n openshift-ingress get service router-cloudfront-waf \
  -o jsonpath='{.status.loadBalancer.ingress[0].hostname}')
$ echo "Origin domain: ${NLB}"

Import your certificate into AWS Certificate Manager (ACM), where cert.pem is your wildcard certificate, fullchain.pem is your wildcard certificate’s chain, and privkey.pem is your wildcard certificate’s private key.

Note: Regardless of the region in which your cluster is deployed, you must import this certificate to us-east-1 because Amazon CloudFront is a global AWS service.

Example

$ aws acm import-certificate --certificate file://cert.pem \
  --certificate-chain file://fullchain.pem \
  --private-key file://privkey.pem \
  --region us-east-1

Log in to the AWS console to create a CloudFront distribution.

Configure the CloudFront distribution by using the following information:

Note: If an option is not specified in the table below, leave it at the default value (which may be blank).

| Option | Value |
| --- | --- |
| Origin domain | Output from the command above [1] |
| Name | rosa-waf-ingress [2] |
| Viewer protocol policy | Redirect HTTP to HTTPS |
| Allowed HTTP methods | GET, HEAD, OPTIONS, PUT, POST, PATCH, DELETE |
| Cache policy | CachingDisabled |
| Origin request policy | AllViewer |
| Web Application Firewall (WAF) | Enable security protections |
| Use existing WAF configuration | true |
| Choose a web ACL | cloudfront-waf |
| Alternate domain name (CNAME) | *.apps.<company_name>.io [3] |
| Custom SSL certificate | Select the certificate you imported from the step above [4] |

[1] Run echo ${NLB} to get the origin domain.

[2] If you have multiple clusters, ensure the origin name is unique.

[3] This should match the wildcard domain you used to create the custom domain ingress controller.

[4] This should match the alternate domain name entered above.

Retrieve the Amazon CloudFront Distribution endpoint:

$ aws cloudfront list-distributions --query "DistributionList.Items[?Origins.Items[?DomainName=='${NLB}']].DomainName" --output text

Update the DNS of your custom wildcard domain with a CNAME to the Amazon CloudFront Distribution endpoint from the step above.

Example

*.apps.<company_name>.io CNAME d1b2c3d4e5f6g7.cloudfront.net

5.4. Deploy a sample application

Deploy a hello world application:

$ oc -n waf-demo new-app --image=docker.io/openshift/hello-openshift

Create a route for the application specifying your custom domain name:

Example

$ oc -n waf-demo create route edge --service=hello-openshift hello-openshift-tls \
  --hostname hello-openshift.apps.<company_name>.io

Label the route to admit it to your custom domain ingress controller:

$ oc -n waf-demo label route.route.openshift.io/hello-openshift-tls route=waf

5.5. Test the WAF

Test that the app is accessible behind Amazon CloudFront:

Example

$ curl "https://hello-openshift.apps.<company_name>.io"

Example output

Hello OpenShift!

Test that the WAF denies a bad request:

Example

$ curl -X POST "https://hello-openshift.apps.<company_name>.io" \
  -F "user='<script><alert>Hello></alert></script>'"

Example output

<html>
<head><title>403 Forbidden</title></head>
<body>
<center><h1>403 Forbidden</h1></center>
</body>
</html>

The expected result is a 403 Forbidden error, which means the AWS WAF is protecting your application.

5.6. Additional resources

- Custom domains for applications in the Red Hat documentation

- Adding Extra Security with AWS WAF, CloudFront and ROSA | Amazon Web Services on YouTube

Chapter 6. Tutorial: Using AWS WAF and AWS ALBs to protect ROSA workloads

AWS WAF is a web application firewall that lets you monitor the HTTP and HTTPS requests that are forwarded to your protected web application resources.

You can use an AWS Application Load Balancer (ALB) to add a Web Application Firewall (WAF) to your Red Hat OpenShift Service on AWS (ROSA) workloads. Using an external solution protects ROSA resources from experiencing denial of service due to handling the WAF.

It is recommended that you use the CloudFront method unless you absolutely must use an ALB based solution.

6.1. Prerequisites

AWS ALBs require a multi-AZ cluster, as well as three public subnets split across three AZs in the same VPC as the cluster.

- A multi-AZ ROSA Classic cluster.

- You have access to the OpenShift CLI (oc).

- You have access to the AWS CLI (aws).

6.1.1. Environment setup

Prepare the environment variables:

$ export AWS_PAGER=""
$ export CLUSTER_NAME=$(oc get infrastructure cluster -o=jsonpath="{.status.infrastructureName}" | sed 's/-[a-z0-9]\{5\}$//')
$ export REGION=$(oc get infrastructure cluster -o=jsonpath="{.status.platformStatus.aws.region}")
$ export OIDC_ENDPOINT=$(oc get authentication.config.openshift.io cluster -o jsonpath='{.spec.serviceAccountIssuer}' | sed 's|^https://||')
$ export AWS_ACCOUNT_ID=$(aws sts get-caller-identity --query Account --output text)
$ export SCRATCH="/tmp/${CLUSTER_NAME}/alb-waf"
$ mkdir -p ${SCRATCH}
$ echo "Cluster: ${CLUSTER_NAME}, Region: ${REGION}, OIDC Endpoint: ${OIDC_ENDPOINT}, AWS Account ID: ${AWS_ACCOUNT_ID}"

6.1.2. AWS VPC and subnets

This section only applies to clusters that were deployed into existing VPCs. If you did not deploy your cluster into an existing VPC, skip this section and proceed to the installation section below.

Set the below variables to the proper values for your ROSA deployment:

$ export VPC_ID=<vpc-id>
$ export PUBLIC_SUBNET_IDS=<public-subnets>
$ export PRIVATE_SUBNET_IDS=<private-subnets>

Add a tag to your cluster’s VPC with the cluster name:

$ aws ec2 create-tags --resources ${VPC_ID} --tags Key=kubernetes.io/cluster/${CLUSTER_NAME},Value=owned --region ${REGION}

Add a tag to your public subnets:

$ aws ec2 create-tags \
  --resources ${PUBLIC_SUBNET_IDS} \
  --tags Key=kubernetes.io/role/elb,Value='' \
  --region ${REGION}

Add a tag to your private subnets:

$ aws ec2 create-tags \
  --resources "${PRIVATE_SUBNET_IDS}" \
  --tags Key=kubernetes.io/role/internal-elb,Value='' \
  --region ${REGION}

6.2. Deploy the AWS Load Balancer Operator

The AWS Load Balancer Operator is used to install, manage, and configure an instance of aws-load-balancer-controller in a ROSA cluster. To deploy ALBs in ROSA, you must first deploy the AWS Load Balancer Operator.

Create an AWS IAM policy for the AWS Load Balancer Controller:

Note: The policy is sourced from the upstream AWS Load Balancer Controller policy, plus permission to create tags on subnets, which the Operator requires to function.

$ oc new-project aws-load-balancer-operator
$ POLICY_ARN=$(aws iam list-policies --query \
    "Policies[?PolicyName=='aws-load-balancer-operator-policy'].{ARN:Arn}" \
    --output text)
$ if [[ -z "${POLICY_ARN}" ]]; then
    wget -O "${SCRATCH}/load-balancer-operator-policy.json" \
      https://raw.githubusercontent.com/rh-mobb/documentation/main/content/docs/rosa/aws-load-balancer-operator/load-balancer-operator-policy.json
    POLICY_ARN=$(aws --region "$REGION" --query Policy.Arn \
      --output text iam create-policy \
      --policy-name aws-load-balancer-operator-policy \
      --policy-document "file://${SCRATCH}/load-balancer-operator-policy.json")
  fi
$ echo $POLICY_ARN

Create an AWS IAM trust policy for AWS Load Balancer Operator:

$ cat <<EOF > "${SCRATCH}/trust-policy.json"
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Condition": {
        "StringEquals" : {
          "${OIDC_ENDPOINT}:sub": ["system:serviceaccount:aws-load-balancer-operator:aws-load-balancer-operator-controller-manager", "system:serviceaccount:aws-load-balancer-operator:aws-load-balancer-controller-cluster"]
        }
      },
      "Principal": {
        "Federated": "arn:aws:iam::$AWS_ACCOUNT_ID:oidc-provider/${OIDC_ENDPOINT}"
      },
      "Action": "sts:AssumeRoleWithWebIdentity"
    }
  ]
}
EOF

Create an AWS IAM role for the AWS Load Balancer Operator:

$ ROLE_ARN=$(aws iam create-role --role-name "${CLUSTER_NAME}-alb-operator" \
    --assume-role-policy-document "file://${SCRATCH}/trust-policy.json" \
    --query Role.Arn --output text)
$ echo $ROLE_ARN
$ aws iam attach-role-policy --role-name "${CLUSTER_NAME}-alb-operator" \
    --policy-arn $POLICY_ARN

Create a secret for the AWS Load Balancer Operator to assume the newly created AWS IAM role:

$ cat << EOF | oc apply -f -
apiVersion: v1
kind: Secret
metadata:
  name: aws-load-balancer-operator
  namespace: aws-load-balancer-operator
stringData:
  credentials: |
    [default]
    role_arn = $ROLE_ARN
    web_identity_token_file = /var/run/secrets/openshift/serviceaccount/token
EOF

Install the Red Hat AWS Load Balancer Operator:

$ cat << EOF | oc apply -f -
apiVersion: operators.coreos.com/v1
kind: OperatorGroup
metadata:
  name: aws-load-balancer-operator
  namespace: aws-load-balancer-operator
spec:
  upgradeStrategy: Default
---
apiVersion: operators.coreos.com/v1alpha1
kind: Subscription
metadata:
  name: aws-load-balancer-operator
  namespace: aws-load-balancer-operator
spec:
  channel: stable-v1.0
  installPlanApproval: Automatic
  name: aws-load-balancer-operator
  source: redhat-operators
  sourceNamespace: openshift-marketplace
  startingCSV: aws-load-balancer-operator.v1.0.0
EOF

Deploy an instance of the AWS Load Balancer Controller using the operator:

Note: If you get an error here, wait a minute and try again. The error means that the Operator has not finished installing yet.

$ cat << EOF | oc apply -f -
apiVersion: networking.olm.openshift.io/v1
kind: AWSLoadBalancerController
metadata:
  name: cluster
spec:
  credentials:
    name: aws-load-balancer-operator
  enabledAddons:
    - AWSWAFv2
EOF

Check that the Operator and controller pods are both running:

$ oc -n aws-load-balancer-operator get pods

You should see output similar to the following. If not, wait a moment and retry:

NAME                                                             READY   STATUS    RESTARTS   AGE
aws-load-balancer-controller-cluster-6ddf658785-pdp5d            1/1     Running   0          99s
aws-load-balancer-operator-controller-manager-577d9ffcb9-w6zqn   2/2     Running   0          2m4s
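The "wait a minute and try again" advice for creating the AWSLoadBalancerController can be scripted instead of repeated by hand. The following is a minimal sketch, assuming a POSIX shell; retry is a hypothetical helper, and the file name controller.yaml in the usage comment is an assumption for illustration.

```shell
# Hypothetical helper: run a command until it succeeds or attempts run out.
# Usage: retry <max_attempts> <delay_seconds> <command> [args...]
retry() {
  max_attempts=$1
  delay=$2
  shift 2
  attempt=1
  while ! "$@"; do
    if [ "$attempt" -ge "$max_attempts" ]; then
      echo "giving up after ${attempt} attempts: $*" >&2
      return 1
    fi
    attempt=$((attempt + 1))
    sleep "$delay"
  done
}

# Example (assumes the controller manifest was saved as controller.yaml):
# retry 5 60 oc apply -f controller.yaml
```

A bounded number of attempts keeps a genuinely broken installation from looping forever.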

6.3. Deploy a sample application

Create a new project for our sample application:

$ oc new-project hello-world

Deploy a hello world application:

$ oc new-app -n hello-world --image=docker.io/openshift/hello-openshift

Convert the pre-created service resource to a NodePort service type:

$ oc -n hello-world patch service hello-openshift -p '{"spec":{"type":"NodePort"}}'

Deploy an AWS ALB using the AWS Load Balancer Operator:

$ cat << EOF | oc apply -f -
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: hello-openshift-alb
  namespace: hello-world
  annotations:
    alb.ingress.kubernetes.io/scheme: internet-facing
spec:
  ingressClassName: alb
  rules:
    - http:
        paths:
          - path: /
            pathType: Exact
            backend:
              service:
                name: hello-openshift
                port:
                  number: 8080
EOF

Curl the AWS ALB Ingress endpoint to verify that the hello world application is accessible:

Note: AWS ALB provisioning takes a few minutes. If you receive an error that says curl: (6) Could not resolve host, wait and try again.

$ INGRESS=$(oc -n hello-world get ingress hello-openshift-alb -o jsonpath='{.status.loadBalancer.ingress[0].hostname}')
$ curl "http://${INGRESS}"

Example output

Hello OpenShift!

6.3.1. Configure the AWS WAF

The AWS WAF service is a web application firewall that lets you monitor, protect, and control the HTTP and HTTPS requests that are forwarded to your protected web application resources, like ROSA.

Create an AWS WAF rules file to apply to the web ACL:

$ cat << EOF > ${SCRATCH}/waf-rules.json
[
  {
    "Name": "AWS-AWSManagedRulesCommonRuleSet",
    "Priority": 0,
    "Statement": {
      "ManagedRuleGroupStatement": {
        "VendorName": "AWS",
        "Name": "AWSManagedRulesCommonRuleSet"
      }
    },
    "OverrideAction": {
      "None": {}
    },
    "VisibilityConfig": {
      "SampledRequestsEnabled": true,
      "CloudWatchMetricsEnabled": true,
      "MetricName": "AWS-AWSManagedRulesCommonRuleSet"
    }
  },
  {
    "Name": "AWS-AWSManagedRulesSQLiRuleSet",
    "Priority": 1,
    "Statement": {
      "ManagedRuleGroupStatement": {
        "VendorName": "AWS",
        "Name": "AWSManagedRulesSQLiRuleSet"
      }
    },
    "OverrideAction": {
      "None": {}
    },
    "VisibilityConfig": {
      "SampledRequestsEnabled": true,
      "CloudWatchMetricsEnabled": true,
      "MetricName": "AWS-AWSManagedRulesSQLiRuleSet"
    }
  }
]
EOF

This enables the Core (Common) and SQL AWS Managed Rule Sets.
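The two entries in the rules file differ only in their priority and rule group name. As an illustration of that structure, the following sketch generates the same entries from a small function; managed_rule is a hypothetical helper, and the layout simply mirrors the file shown in this step rather than any official template.

```shell
# Hypothetical helper: print one managed-rule-group entry for a WAFv2 rules file.
# Usage: managed_rule <priority> <rule_group_name>
managed_rule() {
  cat <<EOF
  {
    "Name": "AWS-$2",
    "Priority": $1,
    "Statement": {
      "ManagedRuleGroupStatement": { "VendorName": "AWS", "Name": "$2" }
    },
    "OverrideAction": { "None": {} },
    "VisibilityConfig": {
      "SampledRequestsEnabled": true,
      "CloudWatchMetricsEnabled": true,
      "MetricName": "AWS-$2"
    }
  }
EOF
}

# Assemble the same two rule groups used in this tutorial.
rules_json=$(
  echo "["
  managed_rule 0 AWSManagedRulesCommonRuleSet
  echo ","
  managed_rule 1 AWSManagedRulesSQLiRuleSet
  echo "]"
)
printf '%s\n' "$rules_json"
```

Each rule in a web ACL needs its own priority value, which is why the priority is passed as an argument rather than hard-coded.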

Create an AWS WAF Web ACL using the rules we specified above:

$ WAF_ARN=$(aws wafv2 create-web-acl \
    --name ${CLUSTER_NAME}-waf \
    --region ${REGION} \
    --default-action Allow={} \
    --scope REGIONAL \
    --visibility-config SampledRequestsEnabled=true,CloudWatchMetricsEnabled=true,MetricName=${CLUSTER_NAME}-waf-metrics \
    --rules file://${SCRATCH}/waf-rules.json \
    --query 'Summary.ARN' \
    --output text)

Annotate the Ingress resource with the AWS WAF Web ACL ARN:

$ oc annotate -n hello-world ingress.networking.k8s.io/hello-openshift-alb \
    alb.ingress.kubernetes.io/wafv2-acl-arn=${WAF_ARN}

Wait 10 seconds for the rules to propagate, then test that the application still works:

$ curl "http://${INGRESS}"

Example output

Hello OpenShift!

Test that the WAF denies a bad request:

$ curl -X POST "http://${INGRESS}" \
    -F "user='<script><alert>Hello></alert></script>'"

Example output

<html>
<head><title>403 Forbidden</title></head>
<body>
<center><h1>403 Forbidden</h1></center>
</body>
</html>

The expected result is a 403 Forbidden error, which means the AWS WAF is protecting your application.
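Reading the HTML body works for a manual check, but a script can compare the HTTP status code instead. The following is a minimal sketch; waf_verdict is a hypothetical helper, and the mapping of status codes to verdicts is an assumption chosen for this tutorial's test request, not a general rule.

```shell
# Hypothetical helper: interpret the HTTP status returned for the test request.
waf_verdict() {
  case "$1" in
    403) echo "blocked" ;;       # the WAF rejected the request
    2??) echo "allowed" ;;       # the request reached the application
    *)   echo "inconclusive" ;;  # anything else needs a manual look
  esac
}

# Usage against the ALB from this tutorial (requires network access):
# status=$(curl -s -o /dev/null -w '%{http_code}' -X POST "http://${INGRESS}" \
#   -F "user='<script><alert>Hello></alert></script>'")
# waf_verdict "$status"
```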

6.4. Additional resources

- Custom domains for applications in the Red Hat documentation

- Adding Extra Security with AWS WAF, CloudFront and ROSA | Amazon Web Services on YouTube

Chapter 7. Tutorial: Deploying OpenShift API for Data Protection on a ROSA cluster

This content is authored by Red Hat experts, but has not yet been tested on every supported configuration.

Prerequisites

Environment

Prepare the environment variables:

Note: Change the cluster name to match your ROSA cluster and ensure that you are logged in to the cluster as an administrator. Verify that all fields are output correctly before moving on.

$ export CLUSTER_NAME=$(oc get infrastructure cluster -o=jsonpath="{.status.infrastructureName}" | sed 's/-[a-z0-9]\{5\}$//')
$ export ROSA_CLUSTER_ID=$(rosa describe cluster -c ${CLUSTER_NAME} --output json | jq -r .id)
$ export REGION=$(rosa describe cluster -c ${CLUSTER_NAME} --output json | jq -r .region.id)
$ export OIDC_ENDPOINT=$(oc get authentication.config.openshift.io cluster -o jsonpath='{.spec.serviceAccountIssuer}' | sed 's|^https://||')
$ export AWS_ACCOUNT_ID=`aws sts get-caller-identity --query Account --output text`
$ export CLUSTER_VERSION=`rosa describe cluster -c ${CLUSTER_NAME} -o json | jq -r .version.raw_id | cut -f -2 -d '.'`
$ export ROLE_NAME="${CLUSTER_NAME}-openshift-oadp-aws-cloud-credentials"
$ export AWS_PAGER=""
$ export SCRATCH="/tmp/${CLUSTER_NAME}/oadp"
$ mkdir -p ${SCRATCH}
$ echo "Cluster ID: ${ROSA_CLUSTER_ID}, Region: ${REGION}, OIDC Endpoint: ${OIDC_ENDPOINT}, AWS Account ID: ${AWS_ACCOUNT_ID}"
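Two of the pipelines above transform their input in ways that are easy to misread: the sed expression removes the five-character infrastructure suffix, and the cut command keeps only the major and minor version. The following sketch runs both on made-up sample values (mycluster-x7k2p and 4.14.9 are assumptions for illustration; real values come from oc and rosa).

```shell
# Sample values are made up for illustration.
infra_name="mycluster-x7k2p"
raw_version="4.14.9"

# Strip the trailing "-" plus five-character infrastructure suffix.
cluster_name=$(printf '%s\n' "$infra_name" | sed 's/-[a-z0-9]\{5\}$//')

# Keep only the first two dot-separated fields (major.minor).
cluster_version=$(printf '%s\n' "$raw_version" | cut -f -2 -d '.')

echo "name=${cluster_name} version=${cluster_version}"
# prints: name=mycluster version=4.14
```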

7.1. Prepare AWS Account

Create an IAM Policy to allow for S3 Access:

$ POLICY_ARN=$(aws iam list-policies --query "Policies[?PolicyName=='RosaOadpVer1'].{ARN:Arn}" --output text)
$ if [[ -z "${POLICY_ARN}" ]]; then
  cat << EOF > ${SCRATCH}/policy.json
  {
    "Version": "2012-10-17",
    "Statement": [
      {
        "Effect": "Allow",
        "Action": [
          "s3:CreateBucket",
          "s3:DeleteBucket",
          "s3:PutBucketTagging",
          "s3:GetBucketTagging",
          "s3:PutEncryptionConfiguration",
          "s3:GetEncryptionConfiguration",
          "s3:PutLifecycleConfiguration",
          "s3:GetLifecycleConfiguration",
          "s3:GetBucketLocation",
          "s3:ListBucket",
          "s3:GetObject",
          "s3:PutObject",
          "s3:DeleteObject",
          "s3:ListBucketMultipartUploads",
          "s3:AbortMultipartUpload",
          "s3:ListMultipartUploadParts",
          "ec2:DescribeSnapshots",
          "ec2:DescribeVolumes",
          "ec2:DescribeVolumeAttribute",
          "ec2:DescribeVolumesModifications",
          "ec2:DescribeVolumeStatus",
          "ec2:CreateTags",
          "ec2:CreateVolume",
          "ec2:CreateSnapshot",
          "ec2:DeleteSnapshot"
        ],
        "Resource": "*"
      }
    ]
  }
EOF
  POLICY_ARN=$(aws iam create-policy --policy-name "RosaOadpVer1" \
    --policy-document file://${SCRATCH}/policy.json --query Policy.Arn \
    --tags Key=rosa_openshift_version,Value=${CLUSTER_VERSION} Key=rosa_role_prefix,Value=ManagedOpenShift Key=operator_namespace,Value=openshift-adp Key=operator_name,Value=openshift-oadp \
    --output text)
  fi
$ echo ${POLICY_ARN}

Create an IAM Role trust policy for the cluster:

$ cat <<EOF > ${SCRATCH}/trust-policy.json
{
  "Version": "2012-10-17",
  "Statement": [{
    "Effect": "Allow",
    "Principal": {
      "Federated": "arn:aws:iam::${AWS_ACCOUNT_ID}:oidc-provider/${OIDC_ENDPOINT}"
    },
    "Action": "sts:AssumeRoleWithWebIdentity",
    "Condition": {
      "StringEquals": {
        "${OIDC_ENDPOINT}:sub": [
          "system:serviceaccount:openshift-adp:openshift-adp-controller-manager",
          "system:serviceaccount:openshift-adp:velero"]
      }
    }
  }]
}
EOF
$ ROLE_ARN=$(aws iam create-role --role-name \
    "${ROLE_NAME}" \
    --assume-role-policy-document file://${SCRATCH}/trust-policy.json \
    --tags Key=rosa_cluster_id,Value=${ROSA_CLUSTER_ID} Key=rosa_openshift_version,Value=${CLUSTER_VERSION} Key=rosa_role_prefix,Value=ManagedOpenShift Key=operator_namespace,Value=openshift-adp Key=operator_name,Value=openshift-oadp \
    --query Role.Arn --output text)
$ echo ${ROLE_ARN}

Attach the IAM Policy to the IAM Role:

$ aws iam attach-role-policy --role-name "${ROLE_NAME}" \
    --policy-arn ${POLICY_ARN}
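Because the trust policy is rendered through an unquoted heredoc, an empty AWS_ACCOUNT_ID or OIDC_ENDPOINT produces a syntactically valid file that names the wrong principal. The following is a minimal sketch of a check for that case; check_trust_policy is a hypothetical helper, and the patterns it looks for are derived only from the template shown in this section.

```shell
# Hypothetical check: detect a trust policy rendered with empty variables.
# Usage: check_trust_policy <path-to-trust-policy.json>
check_trust_policy() {
  # An empty AWS_ACCOUNT_ID leaves "arn:aws:iam:::" in the ARN.
  # An empty OIDC_ENDPOINT leaves 'oidc-provider/"' and a bare '":sub"' key.
  if grep -q -e 'arn:aws:iam:::' -e 'oidc-provider/"' -e '":sub"' "$1"; then
    echo "trust policy has empty fields: $1" >&2
    return 1
  fi
}

# Usage with the file created in this section:
# check_trust_policy "${SCRATCH}/trust-policy.json" || exit 1
```

The check is purely textual and does not confirm that the OIDC provider or account exists in AWS.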

7.2. Deploy OADP on the cluster

Create a namespace for OADP:

$ oc create namespace openshift-adp

Create a credentials secret:

$ cat <<EOF > ${SCRATCH}/credentials
[default]
role_arn = ${ROLE_ARN}
web_identity_token_file = /var/run/secrets/openshift/serviceaccount/token
EOF
$ oc -n openshift-adp create secret generic cloud-credentials \
    --from-file=${SCRATCH}/credentials

Deploy the OADP Operator:

Note: Version 1.1 of the Operator currently has an issue in which backups can report a PartiallyFailed status. This does not seem to affect the backup and restore process, but be aware of it.

$ cat << EOF | oc create -f -
apiVersion: operators.coreos.com/v1
kind: OperatorGroup
metadata:
  generateName: openshift-adp-
  namespace: openshift-adp
  name: oadp
spec:
  targetNamespaces:
  - openshift-adp
---
apiVersion: operators.coreos.com/v1alpha1
kind: Subscription
metadata:
  name: redhat-oadp-operator
  namespace: openshift-adp
spec:
  channel: stable-1.2
  installPlanApproval: Automatic
  name: redhat-oadp-operator
  source: redhat-operators
  sourceNamespace: openshift-marketplace
EOF

Wait for the Operator to be ready:

$ watch oc -n openshift-adp get pods

Example output

NAME                                                READY   STATUS    RESTARTS   AGE
openshift-adp-controller-manager-546684844f-qqjhn   1/1     Running   0          22s

Create Cloud Storage:

$ cat << EOF | oc create -f -
apiVersion: oadp.openshift.io/v1alpha1
kind: CloudStorage
metadata:
  name: ${CLUSTER_NAME}-oadp
  namespace: openshift-adp
spec:
  creationSecret:
    key: credentials
    name: cloud-credentials
  enableSharedConfig: true
  name: ${CLUSTER_NAME}-oadp
  provider: aws
  region: $REGION
EOF

Check your application’s default storage class:

$ oc get pvc -n <namespace>

Replace <namespace> with your application’s namespace.

Example output

NAME     STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS   AGE
applog   Bound    pvc-351791ae-b6ab-4e8b-88a4-30f73caf5ef8   1Gi        RWO            gp3-csi        4d19h
mysql    Bound    pvc-16b8e009-a20a-4379-accc-bc81fedd0621   1Gi        RWO            gp3-csi        4d19h

$ oc get storageclass

Example output

NAME                PROVISIONER             RECLAIMPOLICY   VOLUMEBINDINGMODE      ALLOWVOLUMEEXPANSION   AGE
gp2                 kubernetes.io/aws-ebs   Delete          WaitForFirstConsumer   true                   4d21h
gp2-csi             ebs.csi.aws.com         Delete          WaitForFirstConsumer   true                   4d21h
gp3                 ebs.csi.aws.com         Delete          WaitForFirstConsumer   true                   4d21h
gp3-csi (default)   ebs.csi.aws.com         Delete          WaitForFirstConsumer   true                   4d21h

Any of the gp3-csi, gp2-csi, gp3, or gp2 storage classes will work. If all of the applications that are being backed up use PVs with CSI, include the CSI plugin in the OADP DPA configuration.
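The choice between the two DPA variants that follow depends on the provisioners behind the application's volumes. The following sketch encodes that decision, assuming that a provisioner name containing ".csi." (such as ebs.csi.aws.com in the output above) indicates a CSI driver; dpa_variant is a hypothetical helper, and the name-matching rule is an assumption for illustration.

```shell
# Hypothetical helper: read one provisioner name per line on stdin and report
# which DPA variant from this section applies.
dpa_variant() {
  variant="csi"
  seen=0
  while IFS= read -r provisioner; do
    [ -z "$provisioner" ] && continue
    seen=1
    case "$provisioner" in
      *.csi.*) ;;              # CSI driver, such as ebs.csi.aws.com
      *) variant="non-csi" ;;  # in-tree provisioner, such as kubernetes.io/aws-ebs
    esac
  done
  # With no volumes at all, either configuration works.
  [ "$seen" -eq 1 ] || variant="either"
  echo "$variant"
}

printf 'ebs.csi.aws.com\nebs.csi.aws.com\n' | dpa_variant        # prints "csi"
printf 'ebs.csi.aws.com\nkubernetes.io/aws-ebs\n' | dpa_variant  # prints "non-csi"
```

A single non-CSI volume is enough to select the non-CSI variant, which matches the "all using PVs with CSI" condition stated above.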

CSI only: Deploy a Data Protection Application:

$ cat << EOF | oc create -f -
apiVersion: oadp.openshift.io/v1alpha1
kind: DataProtectionApplication
metadata:
  name: ${CLUSTER_NAME}-dpa
  namespace: openshift-adp
spec:
  backupImages: true
  features:
    dataMover:
      enable: false
  backupLocations:
  - bucket:
      cloudStorageRef:
        name: ${CLUSTER_NAME}-oadp
      credential:
        key: credentials
        name: cloud-credentials
      prefix: velero
      default: true
      config:
        region: ${REGION}
  configuration:
    velero:
      defaultPlugins:
      - openshift
      - aws
      - csi
    restic:
      enable: false
EOF

Note: If you run this command for CSI volumes, you can skip the next step.

Non-CSI volumes: Deploy a Data Protection Application:

$ cat << EOF | oc create -f -
apiVersion: oadp.openshift.io/v1alpha1
kind: DataProtectionApplication
metadata:
  name: ${CLUSTER_NAME}-dpa
  namespace: openshift-adp
spec:
  backupImages: true
  features:
    dataMover:
      enable: false
  backupLocations:
  - bucket:
      cloudStorageRef:
        name: ${CLUSTER_NAME}-oadp
      credential:
        key: credentials
        name: cloud-credentials
      prefix: velero
      default: true
      config:
        region: ${REGION}
  configuration:
    velero:
      defaultPlugins:
      - openshift
      - aws
    restic:
      enable: false
  snapshotLocations:
    - velero:
        config:
          credentialsFile: /tmp/credentials/openshift-adp/cloud-credentials-credentials
          enableSharedConfig: 'true'
          profile: default
          region: ${REGION}
        provider: aws
EOF

- In OADP 1.1.x ROSA STS environments, the container image backup and restore (spec.backupImages) value must be set to false as it is not supported.

- The Restic feature (restic.enable=false) is disabled and not supported in ROSA STS environments.

- The DataMover feature (dataMover.enable=false) is disabled and not supported in ROSA STS environments.

7.3. Perform a backup

The following sample hello-world application has no attached persistent volumes. Either DPA configuration will work.

Create a workload to back up:

$ oc create namespace hello-world
$ oc new-app -n hello-world --image=docker.io/openshift/hello-openshift

Expose the route:

$ oc expose service/hello-openshift -n hello-world

Check that the application is working:

$ curl `oc get route/hello-openshift -n hello-world -o jsonpath='{.spec.host}'`

Example output

Hello OpenShift!

Back up the workload:

$ cat << EOF | oc create -f -
apiVersion: velero.io/v1
kind: Backup
metadata:
  name: hello-world
  namespace: openshift-adp
spec:
  includedNamespaces:
  - hello-world
  storageLocation: ${CLUSTER_NAME}-dpa-1
  ttl: 720h0m0s
EOF

Wait until the backup is done:

$ watch "oc -n openshift-adp get backup hello-world -o json | jq .status"

Example output

{
  "completionTimestamp": "2022-09-07T22:20:44Z",
  "expiration": "2022-10-07T22:20:22Z",
  "formatVersion": "1.1.0",
  "phase": "Completed",
  "progress": {
    "itemsBackedUp": 58,
    "totalItems": 58
  },
  "startTimestamp": "2022-09-07T22:20:22Z",
  "version": 1
}

Delete the demo workload:

$ oc delete ns hello-world

Restore from the backup:

$ cat << EOF | oc create -f -
apiVersion: velero.io/v1
kind: Restore
metadata:
  name: hello-world
  namespace: openshift-adp
spec:
  backupName: hello-world
EOF

Wait for the Restore to finish:

$ watch "oc -n openshift-adp get restore hello-world -o json | jq .status"

Example output

{
  "completionTimestamp": "2022-09-07T22:25:47Z",
  "phase": "Completed",
  "progress": {
    "itemsRestored": 38,
    "totalItems": 38
  },
  "startTimestamp": "2022-09-07T22:25:28Z",
  "warnings": 9
}

Check that the workload is restored:

$ oc -n hello-world get pods

Example output

NAME                              READY   STATUS    RESTARTS   AGE
hello-openshift-9f885f7c6-kdjpj   1/1     Running   0          90s

$ curl `oc get route/hello-openshift -n hello-world -o jsonpath='{.spec.host}'`

Example output

Hello OpenShift!
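The watch commands in this section rely on a person noticing when the phase changes. For scripted runs, the following sketch polls until the backup or restore reaches a terminal phase. wait_for_phase and get_phase are hypothetical helper names, and the list of terminal phases is an assumption based on the phase values Velero reports.

```shell
# Hypothetical helper: poll a command that prints a Velero phase until the
# phase is terminal. Usage: wait_for_phase <interval_seconds> <command> [args...]
wait_for_phase() {
  interval=$1
  shift
  while :; do
    phase=$("$@")
    case "$phase" in
      Completed) echo "$phase"; return 0 ;;
      Failed|PartiallyFailed|FailedValidation) echo "$phase"; return 1 ;;
    esac
    sleep "$interval"
  done
}

# Example for the backup in this section:
# get_phase() { oc -n openshift-adp get backup hello-world -o jsonpath='{.status.phase}'; }
# wait_for_phase 10 get_phase
```

The loop has no overall timeout, so wrap it in your own time limit if the job must not wait indefinitely.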

- For troubleshooting tips please refer to the OADP team’s troubleshooting documentation

- Additional sample applications can be found in the OADP team’s sample applications directory

7.4. Cleanup

Delete the workload:

$ oc delete ns hello-world

Remove the backup and restore resources from the cluster if they are no longer required:

$ oc -n openshift-adp delete backup hello-world
$ oc -n openshift-adp delete restore hello-world

To delete the backup and restore resources together with the remote objects in S3:

$ velero backup delete hello-world
$ velero restore delete hello-world

Delete the Data Protection Application:

$ oc -n openshift-adp delete dpa ${CLUSTER_NAME}-dpa

Delete the Cloud Storage:

$ oc -n openshift-adp delete cloudstorage ${CLUSTER_NAME}-oadp

Warning: If this command hangs, you might need to delete the finalizer:

$ oc -n openshift-adp patch cloudstorage ${CLUSTER_NAME}-oadp -p '{"metadata":{"finalizers":null}}' --type=merge

Remove the Operator if it is no longer required:

$ oc -n openshift-adp delete subscription redhat-oadp-operator

Remove the namespace for the Operator:

$ oc delete ns openshift-adp

Remove the Custom Resource Definitions from the cluster if you no longer wish to have them:

$ for CRD in `oc get crds | grep velero | awk '{print $1}'`; do oc delete crd $CRD; done
$ for CRD in `oc get crds | grep -i oadp | awk '{print $1}'`; do oc delete crd $CRD; done

Delete the AWS S3 Bucket:

$ aws s3 rm s3://${CLUSTER_NAME}-oadp --recursive
$ aws s3api delete-bucket --bucket ${CLUSTER_NAME}-oadp

Detach the Policy from the role:

$ aws iam detach-role-policy --role-name "${ROLE_NAME}" \
    --policy-arn "${POLICY_ARN}"

Delete the role:

$ aws iam delete-role --role-name "${ROLE_NAME}"

Chapter 8. Tutorial: AWS Load Balancer Operator on ROSA

This content is authored by Red Hat experts, but has not yet been tested on every supported configuration.

Load Balancers created by the AWS Load Balancer Operator cannot be used for OpenShift Routes, and should only be used for individual services or ingress resources that do not need the full layer 7 capabilities of an OpenShift Route.

The AWS Load Balancer Controller manages AWS Elastic Load Balancers for a Red Hat OpenShift Service on AWS (ROSA) cluster. The controller provisions AWS Application Load Balancers (ALB) when you create Kubernetes Ingress resources and AWS Network Load Balancers (NLB) when implementing Kubernetes Service resources with a type of LoadBalancer.

Compared with the default AWS in-tree load balancer provider, this controller supports advanced annotations for both ALBs and NLBs. Some advanced use cases are:

- Using native Kubernetes Ingress objects with ALBs

- Integrating ALBs with the AWS Web Application Firewall (WAF) service

- Specifying custom NLB source IP ranges

- Specifying custom NLB internal IP addresses

The AWS Load Balancer Operator is used to install, manage, and configure an instance of aws-load-balancer-controller in a ROSA cluster.

8.1. Prerequisites

AWS ALBs require a multi-AZ cluster, as well as three public subnets split across three AZs in the same VPC as the cluster. This makes ALBs unsuitable for many PrivateLink clusters. AWS NLBs do not have this restriction.

- A multi-AZ ROSA classic cluster

- BYO VPC cluster

- AWS CLI

- OC CLI

8.1.1. Environment

Prepare the environment variables:

$ export AWS_PAGER=""
$ export ROSA_CLUSTER_NAME=$(oc get infrastructure cluster -o=jsonpath="{.status.infrastructureName}" | sed 's/-[a-z0-9]\{5\}$//')
$ export REGION=$(oc get infrastructure cluster -o=jsonpath="{.status.platformStatus.aws.region}")
$ export OIDC_ENDPOINT=$(oc get authentication.config.openshift.io cluster -o jsonpath='{.spec.serviceAccountIssuer}' | sed 's|^https://||')
$ export AWS_ACCOUNT_ID=$(aws sts get-caller-identity --query Account --output text)
$ export SCRATCH="/tmp/${ROSA_CLUSTER_NAME}/alb-operator"
$ mkdir -p ${SCRATCH}
$ echo "Cluster: ${ROSA_CLUSTER_NAME}, Region: ${REGION}, OIDC Endpoint: ${OIDC_ENDPOINT}, AWS Account ID: ${AWS_ACCOUNT_ID}"

8.1.2. AWS VPC and subnets

This section only applies to clusters that were deployed into existing VPCs. If you did not deploy your cluster into an existing VPC, skip this section and proceed to the installation section below.

Set the below variables to the proper values for your ROSA deployment:

$ export VPC_ID=<vpc-id>
$ export PUBLIC_SUBNET_IDS=<public-subnets>
$ export PRIVATE_SUBNET_IDS=<private-subnets>
$ export CLUSTER_NAME=$(oc get infrastructure cluster -o=jsonpath="{.status.infrastructureName}")

Add a tag to your cluster’s VPC with the cluster name:

$ aws ec2 create-tags --resources ${VPC_ID} --tags Key=kubernetes.io/cluster/${CLUSTER_NAME},Value=owned --region ${REGION}

Add a tag to your public subnets:

$ aws ec2 create-tags \
    --resources ${PUBLIC_SUBNET_IDS} \
    --tags Key=kubernetes.io/role/elb,Value='' \
    --region ${REGION}

Add a tag to your private subnets:

$ aws ec2 create-tags \
    --resources "${PRIVATE_SUBNET_IDS}" \
    --tags Key=kubernetes.io/role/internal-elb,Value='' \
    --region ${REGION}
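The prerequisites call for three public subnets, so it can help to verify the subnet list before tagging. The following is a minimal sketch, assuming that PUBLIC_SUBNET_IDS holds a space-separated list of IDs (the tutorial does not state the format); check_public_subnets is a hypothetical helper.

```shell
# Hypothetical pre-flight check: verify that a space-separated list contains
# at least three values that look like subnet IDs.
check_public_subnets() {
  # Intentionally unquoted so that each whitespace-separated ID is counted.
  set -- $1
  if [ "$#" -lt 3 ]; then
    echo "expected at least 3 public subnets, got $#" >&2
    return 1
  fi
  for id in "$@"; do
    case "$id" in
      subnet-*) ;;
      *) echo "not a subnet ID: $id" >&2; return 1 ;;
    esac
  done
}

# Usage with the variable set in this section:
# check_public_subnets "${PUBLIC_SUBNET_IDS}" || exit 1
```

The check looks only at the form of the IDs; it does not confirm that the subnets are public or that they are in three different availability zones.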

8.2. Installation

Create an AWS IAM policy for the AWS Load Balancer Controller:

Note: The policy is sourced from the upstream AWS Load Balancer Controller policy, plus permission to create tags on subnets, which the Operator requires to function.

$ oc new-project aws-load-balancer-operator
$ POLICY_ARN=$(aws iam list-policies --query \
    "Policies[?PolicyName=='aws-load-balancer-operator-policy'].{ARN:Arn}" \
    --output text)
$ if [[ -z "${POLICY_ARN}" ]]; then
    wget -O "${SCRATCH}/load-balancer-operator-policy.json" \
      https://raw.githubusercontent.com/rh-mobb/documentation/main/content/docs/rosa/aws-load-balancer-operator/load-balancer-operator-policy.json
    POLICY_ARN=$(aws --region "$REGION" --query Policy.Arn \
      --output text iam create-policy \
      --policy-name aws-load-balancer-operator-policy \
      --policy-document "file://${SCRATCH}/load-balancer-operator-policy.json")
  fi
$ echo $POLICY_ARN

Create an AWS IAM trust policy for AWS Load Balancer Operator:

$ cat <<EOF > "${SCRATCH}/trust-policy.json"
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Condition": {
        "StringEquals" : {
          "${OIDC_ENDPOINT}:sub": ["system:serviceaccount:aws-load-balancer-operator:aws-load-balancer-operator-controller-manager", "system:serviceaccount:aws-load-balancer-operator:aws-load-balancer-controller-cluster"]
        }
      },
      "Principal": {
        "Federated": "arn:aws:iam::$AWS_ACCOUNT_ID:oidc-provider/${OIDC_ENDPOINT}"
      },
      "Action": "sts:AssumeRoleWithWebIdentity"
    }
  ]
}
EOF

Create an AWS IAM role for the AWS Load Balancer Operator:

$ ROLE_ARN=$(aws iam create-role --role-name "${ROSA_CLUSTER_NAME}-alb-operator" \
    --assume-role-policy-document "file://${SCRATCH}/trust-policy.json" \
    --query Role.Arn --output text)
$ echo $ROLE_ARN
$ aws iam attach-role-policy --role-name "${ROSA_CLUSTER_NAME}-alb-operator" \
    --policy-arn $POLICY_ARN

Create a secret for the AWS Load Balancer Operator to assume the newly created AWS IAM role:

$ cat << EOF | oc apply -f -
apiVersion: v1
kind: Secret
metadata:
  name: aws-load-balancer-operator
  namespace: aws-load-balancer-operator
stringData:
  credentials: |
    [default]
    role_arn = $ROLE_ARN
    web_identity_token_file = /var/run/secrets/openshift/serviceaccount/token
EOF

Install the Red Hat AWS Load Balancer Operator:

$ cat << EOF | oc apply -f -
apiVersion: operators.coreos.com/v1
kind: OperatorGroup
metadata:
  name: aws-load-balancer-operator
  namespace: aws-load-balancer-operator
spec:
  upgradeStrategy: Default
---
apiVersion: operators.coreos.com/v1alpha1
kind: Subscription
metadata:
  name: aws-load-balancer-operator
  namespace: aws-load-balancer-operator
spec:
  channel: stable-v1.0
  installPlanApproval: Automatic
  name: aws-load-balancer-operator
  source: redhat-operators
  sourceNamespace: openshift-marketplace
  startingCSV: aws-load-balancer-operator.v1.0.0
EOF

Deploy an instance of the AWS Load Balancer Controller using the operator:

Note: If you get an error here, wait a minute and try again. The error means that the Operator has not finished installing yet.

$ cat << EOF | oc apply -f -
apiVersion: networking.olm.openshift.io/v1
kind: AWSLoadBalancerController
metadata:
  name: cluster
spec:
  credentials:
    name: aws-load-balancer-operator
EOF

Check that the Operator and controller pods are both running:

$ oc -n aws-load-balancer-operator get pods

You should see output similar to the following. If not, wait a moment and retry:

NAME                                                             READY   STATUS    RESTARTS   AGE
aws-load-balancer-controller-cluster-6ddf658785-pdp5d            1/1     Running   0          99s
aws-load-balancer-operator-controller-manager-577d9ffcb9-w6zqn   2/2     Running   0          2m4s

8.3. Validating the deployment

Create a new project:

$ oc new-project hello-world

Deploy a hello world application:

$ oc new-app -n hello-world --image=docker.io/openshift/hello-openshift

Configure a NodePort service for the AWS ALB to connect to:

$ cat << EOF | oc apply -f -
apiVersion: v1
kind: Service
metadata:
  name: hello-openshift-nodeport
  namespace: hello-world
spec:
  ports:
    - port: 80
      targetPort: 8080
      protocol: TCP
  type: NodePort
  selector:
    deployment: hello-openshift
EOF

Deploy an AWS ALB using the AWS Load Balancer Operator:

$ cat << EOF | oc apply -f -
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: hello-openshift-alb
  namespace: hello-world
  annotations:
    alb.ingress.kubernetes.io/scheme: internet-facing
spec:
  ingressClassName: alb
  rules:
    - http:
        paths:
          - path: /
            pathType: Exact
            backend:
              service:
                name: hello-openshift-nodeport
                port:
                  number: 80
EOF

Curl the AWS ALB Ingress endpoint to verify that the hello world application is accessible:

Note: AWS ALB provisioning takes a few minutes. If you receive an error that says curl: (6) Could not resolve host, wait and try again.

$ INGRESS=$(oc -n hello-world get ingress hello-openshift-alb \
    -o jsonpath='{.status.loadBalancer.ingress[0].hostname}')
$ curl "http://${INGRESS}"

Example output

Hello OpenShift!

Deploy an AWS NLB for your hello world application:

$ cat << EOF | oc apply -f -
apiVersion: v1
kind: Service
metadata:
  name: hello-openshift-nlb
  namespace: hello-world
  annotations:
    service.beta.kubernetes.io/aws-load-balancer-type: external
    service.beta.kubernetes.io/aws-load-balancer-nlb-target-type: instance
    service.beta.kubernetes.io/aws-load-balancer-scheme: internet-facing
spec:
  ports:
    - port: 80
      targetPort: 8080
      protocol: TCP
  type: LoadBalancer
  selector:
    deployment: hello-openshift
EOF

Test the AWS NLB endpoint:

Note: NLB provisioning takes a few minutes. If you receive an error that says curl: (6) Could not resolve host, wait and try again.

$ NLB=$(oc -n hello-world get service hello-openshift-nlb \
    -o jsonpath='{.status.loadBalancer.ingress[0].hostname}')
$ curl "http://${NLB}"

Example output

Hello OpenShift!

8.4. Cleaning up

Delete the hello world application namespace (and all the resources in the namespace):

$ oc delete project hello-world

Delete the AWS Load Balancer Operator and the AWS IAM roles:

$ oc delete subscription aws-load-balancer-operator -n aws-load-balancer-operator
$ aws iam detach-role-policy \
    --role-name "${ROSA_CLUSTER_NAME}-alb-operator" \
    --policy-arn $POLICY_ARN
$ aws iam delete-role \
    --role-name "${ROSA_CLUSTER_NAME}-alb-operator"

Delete the AWS IAM policy:

$ aws iam delete-policy --policy-arn $POLICY_ARN

Chapter 9. Tutorial: Configuring ROSA/OSD to use custom TLS ciphers on the Ingress Controller

This content is authored by Red Hat experts, but has not yet been tested on every supported configuration.

This guide demonstrates how to properly patch the cluster Ingress Controllers, as well as Ingress Controllers created by the Custom Domain Operator. This functionality allows customers to modify the tlsSecurityProfile value on cluster Ingress Controllers. The guide shows how to apply a custom tlsSecurityProfile, create a scoped service account with the associated role and role binding, and create a CronJob that ensures the cipher changes are reapplied within 60 minutes if an Ingress Controller is recreated or modified.

Prerequisites

- Review the OpenShift Documentation that explains the options for the tlsSecurityProfile. By default, Ingress Controllers are configured to use the Intermediate profile, which corresponds to the Intermediate Mozilla profile.

Procedure

Create a service account for the CronJob to use.

A service account allows our CronJob to directly access the cluster API, without using a regular user’s credentials. To create a service account, run the following command:

$ oc create sa cron-ingress-patch-sa -n openshift-ingress-operator

Create a role and role binding that allows limited access to patch the Ingress Controllers.

Role-based access control (RBAC) is critical to ensuring security inside your cluster. Creating a role allows us to provide scoped access to only the API resources needed within the cluster. To create the role, run the following command:

$ oc create role cron-ingress-patch-role --verb=get,patch,update --resource=ingresscontroller.operator.openshift.io -n openshift-ingress-operator

Once the role has been created, you must bind the role to the service account using a role binding. To create the role binding, run the following command:

$ oc create rolebinding cron-ingress-patch-rolebinding --role=cron-ingress-patch-role --serviceaccount=openshift-ingress-operator:cron-ingress-patch-sa -n openshift-ingress-operator

Patch the Ingress Controllers.

Important: The examples provided below add an additional cipher to the Ingress Controller’s tlsSecurityProfile to allow IE 11 access from Windows Server 2008 R2. Modify this command to meet your specific business requirements.

Before creating the CronJob, apply the tlsSecurityProfile configuration to validate changes. This process depends on whether you are using the Custom Domain Operator.

Clusters not using the Custom Domain Operator:

If you are only using the default Ingress Controller, and not using the Custom Domain Operator, run the following command to patch the Ingress Controller:

$ oc patch ingresscontroller/default -n openshift-ingress-operator --type=merge -p '{"spec":{"tlsSecurityProfile":{"type":"Custom","custom":{"ciphers":["TLS_AES_128_GCM_SHA256","TLS_AES_256_GCM_SHA384","ECDHE-ECDSA-AES128-GCM-SHA256","ECDHE-RSA-AES128-GCM-SHA256","ECDHE-ECDSA-AES256-GCM-SHA384","ECDHE-RSA-AES256-GCM-SHA384","ECDHE-ECDSA-CHACHA20-POLY1305","ECDHE-RSA-CHACHA20-POLY1305","DHE-RSA-AES128-GCM-SHA256","DHE-RSA-AES256-GCM-SHA384","TLS_CHACHA20_POLY1305_SHA256","TLS_ECDHE_RSA_WITH_AES_128_CBC_SHA"],"minTLSVersion":"VersionTLS12"}}}}'

This patch adds the TLS_ECDHE_RSA_WITH_AES_128_CBC_SHA cipher, which allows access from IE 11 on Windows Server 2008 R2 when using RSA certificates.

Once you run the command, you will receive a response that looks like this:

Example output

ingresscontroller.operator.openshift.io/default patched

Clusters using the Custom Domain Operator:

Customers who are using the Custom Domain Operator need to loop through each of their Ingress Controllers to patch each one. To patch all of your cluster’s Ingress Controllers, run the following command:

$ for ic in $(oc get ingresscontroller -o name -n openshift-ingress-operator); do oc patch ${ic} -n openshift-ingress-operator --type=merge -p '{"spec":{"tlsSecurityProfile":{"type":"Custom","custom":{"ciphers":["TLS_AES_128_GCM_SHA256","TLS_AES_256_GCM_SHA384","ECDHE-ECDSA-AES128-GCM-SHA256","ECDHE-RSA-AES128-GCM-SHA256","ECDHE-ECDSA-AES256-GCM-SHA384","ECDHE-RSA-AES256-GCM-SHA384","ECDHE-ECDSA-CHACHA20-POLY1305","ECDHE-RSA-CHACHA20-POLY1305","DHE-RSA-AES128-GCM-SHA256","DHE-RSA-AES256-GCM-SHA384","TLS_CHACHA20_POLY1305_SHA256","TLS_ECDHE_RSA_WITH_AES_128_CBC_SHA"],"minTLSVersion":"VersionTLS12"}}}}'; done

Once you run the command, you will receive a response that looks like this:

Example output

ingresscontroller.operator.openshift.io/default patched
ingresscontroller.operator.openshift.io/custom1 patched
ingresscontroller.operator.openshift.io/custom2 patched
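Because the patch is a long single-line JSON string, it can be easier to review and modify when it is generated from a plain list of cipher names. The following is a minimal sketch under that assumption. The helper name `build_tls_patch` is hypothetical, and the cipher list is copied from the commands in this step.

```shell
#!/bin/sh
# Hypothetical helper: build the merge patch from a list of cipher names.
build_tls_patch() {
  list=""
  for cipher in "$@"; do
    if [ -n "$list" ]; then
      list="$list,"
    fi
    list="$list\"$cipher\""
  done
  printf '{"spec":{"tlsSecurityProfile":{"type":"Custom","custom":{"ciphers":[%s],"minTLSVersion":"VersionTLS12"}}}}\n' "$list"
}

# Same cipher list as in this step, one name per line for readability.
patch=$(build_tls_patch \
  TLS_AES_128_GCM_SHA256 \
  TLS_AES_256_GCM_SHA384 \
  ECDHE-ECDSA-AES128-GCM-SHA256 \
  ECDHE-RSA-AES128-GCM-SHA256 \
  ECDHE-ECDSA-AES256-GCM-SHA384 \
  ECDHE-RSA-AES256-GCM-SHA384 \
  ECDHE-ECDSA-CHACHA20-POLY1305 \
  ECDHE-RSA-CHACHA20-POLY1305 \
  DHE-RSA-AES128-GCM-SHA256 \
  DHE-RSA-AES256-GCM-SHA384 \
  TLS_CHACHA20_POLY1305_SHA256 \
  TLS_ECDHE_RSA_WITH_AES_128_CBC_SHA)

# Print the generated patch so it can be reviewed before it is applied.
printf '%s\n' "$patch"

# Apply it to a live cluster (left commented out):
#   oc patch ingresscontroller/default -n openshift-ingress-operator --type=merge -p "$patch"
```

Adding or removing a cipher then becomes a one-line change in the list rather than an edit inside the quoted JSON.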

Create the CronJob to ensure that the TLS configuration is not overwritten.

Occasionally, the cluster’s Ingress Controllers can get recreated. In these cases, the Ingress Controller will likely not retain the tlsSecurityProfile changes that were applied. To ensure this does not happen, create a CronJob that goes through and updates the cluster’s Ingress Controllers. This process depends on whether you are using the Custom Domain Operator.

Clusters not using the Custom Domain Operator:

If you are not using the Custom Domain Operator, create the CronJob by running the following command:

$ cat << EOF | oc apply -f -
apiVersion: batch/v1
kind: CronJob
metadata:
  name: tls-patch
  namespace: openshift-ingress-operator
spec:
  schedule: '@hourly'
  jobTemplate:
    spec:
      template:
        spec:
          containers:
            - name: tls-patch
              image: registry.redhat.io/openshift4/ose-tools-rhel8:latest
              args:
                - /bin/sh
                - '-c'
                - oc patch ingresscontroller/default -n openshift-ingress-operator --type=merge -p '{"spec":{"tlsSecurityProfile":{"type":"Custom","custom":{"ciphers":["TLS_AES_128_GCM_SHA256","TLS_AES_256_GCM_SHA384","ECDHE-ECDSA-AES128-GCM-SHA256","ECDHE-RSA-AES128-GCM-SHA256","ECDHE-ECDSA-AES256-GCM-SHA384","ECDHE-RSA-AES256-GCM-SHA384","ECDHE-ECDSA-CHACHA20-POLY1305","ECDHE-RSA-CHACHA20-POLY1305","DHE-RSA-AES128-GCM-SHA256","DHE-RSA-AES256-GCM-SHA384","TLS_CHACHA20_POLY1305_SHA256","TLS_ECDHE_RSA_WITH_AES_128_CBC_SHA"],"minTLSVersion":"VersionTLS12"}}}}'
          restartPolicy: Never
          serviceAccountName: cron-ingress-patch-sa
EOF

Note: This CronJob runs every hour and patches the Ingress Controllers, if necessary. It is important that this CronJob does not run constantly, as it can trigger reconciles that could overload the OpenShift Ingress Operator. Most of the time, the logs of the CronJob pod look like the following example, as it will not be changing anything:

Example output

ingresscontroller.operator.openshift.io/default patched (no change)

Clusters using the Custom Domain Operator:

If you are using the Custom Domain Operator, the CronJob needs to loop through and patch each Ingress Controller. To create this CronJob, run the following command:

$ cat << 'EOF' | oc apply -f -
apiVersion: batch/v1
kind: CronJob
metadata:
  name: tls-patch
  namespace: openshift-ingress-operator
spec:
  schedule: '@hourly'
  jobTemplate:
    spec:
      template:
        spec:
          containers:
            - name: tls-patch
              image: registry.redhat.io/openshift4/ose-tools-rhel8:latest
              args:
                - /bin/sh
                - '-c'
                - for ic in $(oc get ingresscontroller -o name -n openshift-ingress-operator); do oc patch ${ic} -n openshift-ingress-operator --type=merge -p '{"spec":{"tlsSecurityProfile":{"type":"Custom","custom":{"ciphers":["TLS_AES_128_GCM_SHA256","TLS_AES_256_GCM_SHA384","ECDHE-ECDSA-AES128-GCM-SHA256","ECDHE-RSA-AES128-GCM-SHA256","ECDHE-ECDSA-AES256-GCM-SHA384","ECDHE-RSA-AES256-GCM-SHA384","ECDHE-ECDSA-CHACHA20-POLY1305","ECDHE-RSA-CHACHA20-POLY1305","DHE-RSA-AES128-GCM-SHA256","DHE-RSA-AES256-GCM-SHA384","TLS_CHACHA20_POLY1305_SHA256","TLS_ECDHE_RSA_WITH_AES_128_CBC_SHA"],"minTLSVersion":"VersionTLS12"}}}}'; done
          restartPolicy: Never
          serviceAccountName: cron-ingress-patch-sa
EOF

The heredoc delimiter is quoted ('EOF') so that your local shell does not expand $(oc get ...) and ${ic} before the manifest reaches the cluster; the loop must be evaluated inside the CronJob pod.

Note: This CronJob runs every hour and patches the Ingress Controllers, if necessary. It is important that this CronJob does not run constantly, as it can trigger reconciles that could overload the OpenShift Ingress Operator. Most of the time, the logs of the CronJob pod look like the following example, as it will not be changing anything:

Example output

ingresscontroller.operator.openshift.io/default patched (no change)
ingresscontroller.operator.openshift.io/custom1 patched (no change)
ingresscontroller.operator.openshift.io/custom2 patched (no change)
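When reviewing the CronJob logs, it can help to count how many Ingress Controllers were actually changed, because a nonzero count suggests that a controller was recreated or modified since the last run. The following is a minimal sketch. The helper name `count_changed` is hypothetical, and the sample log text is modelled on the example output with one line altered to simulate a real change.

```shell
#!/bin/sh
# Hypothetical helper: count log lines that report a real change.
# "patched (no change)" lines do not end in "patched", so they are skipped.
count_changed() {
  grep -c ' patched$' || true
}

# Sample log text with one real change; prints 1.
printf '%s\n' \
  'ingresscontroller.operator.openshift.io/default patched (no change)' \
  'ingresscontroller.operator.openshift.io/custom1 patched' \
  'ingresscontroller.operator.openshift.io/custom2 patched (no change)' |
  count_changed

# Example usage against a live cluster (left commented out; <job_name> is a placeholder):
#   oc logs job/<job_name> -n openshift-ingress-operator | count_changed
```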

Chapter 10. Tutorial: Configuring Microsoft Entra ID (formerly Azure Active Directory) as an identity provider

You can configure Microsoft Entra ID (formerly Azure Active Directory) as the cluster identity provider in Red Hat OpenShift Service on AWS (ROSA).

This tutorial guides you to complete the following tasks:

- Register a new application in Entra ID for authentication.

- Configure the application registration in Entra ID to include optional and group claims in tokens.

- Configure the Red Hat OpenShift Service on AWS cluster to use Entra ID as the identity provider.

- Grant additional permissions to individual groups.

10.1. Prerequisites

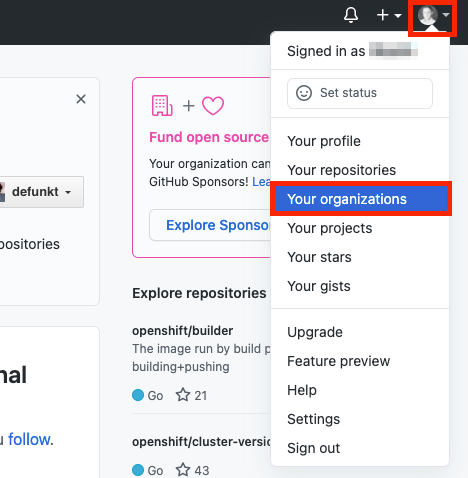

- You created a set of security groups and assigned users by following the Microsoft documentation.

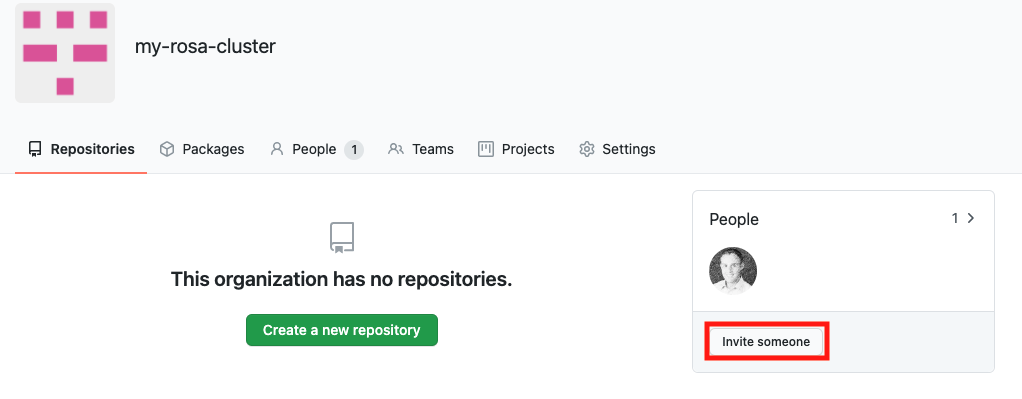

10.2. Registering a new application in Entra ID for authentication

To register your application in Entra ID, first create the OAuth callback URL, then register your application.

Procedure

Create the cluster’s OAuth callback URL by changing the specified variables and running the following command:

Note: Remember to save this callback URL; it will be required later in the process.

$ domain=$(rosa describe cluster -c <cluster_name> | grep "DNS" | grep -oE '\S+.openshiftapps.com')
$ echo "OAuth callback URL: https://oauth-openshift.apps.$domain/oauth2callback/AAD"

The "AAD" directory at the end of the OAuth callback URL must match the OAuth identity provider name that you will set up later in this process.
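To make the dependency between the callback URL and the identity provider name explicit, the URL can be composed from both values. The following is a minimal sketch. The helper name `callback_url` and the sample domain are hypothetical; use the domain returned by `rosa describe cluster` for your own cluster.

```shell
#!/bin/sh
# Hypothetical helper: compose the OAuth callback URL from the cluster's
# base domain ($1) and the identity provider name ($2).
callback_url() {
  printf 'https://oauth-openshift.apps.%s/oauth2callback/%s\n' "$1" "$2"
}

# The identity provider name chosen here must be reused when creating the IdP.
IDP_NAME=AAD

# Placeholder domain for illustration only.
domain=example.abcd.p1.openshiftapps.com

callback_url "$domain" "$IDP_NAME"
```

If you choose a different identity provider name, the final path segment of the redirect URI registered in Entra ID changes with it.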

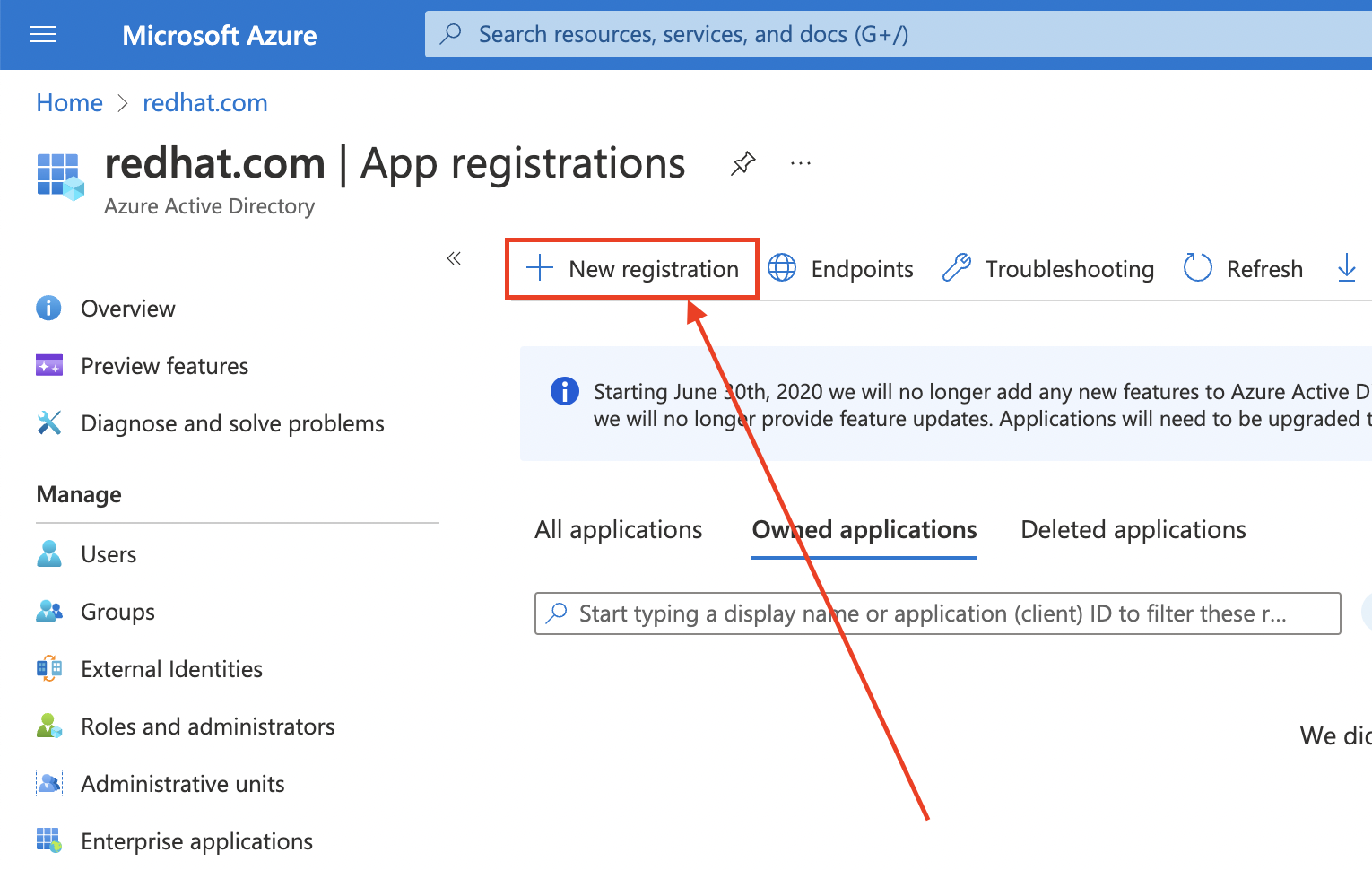

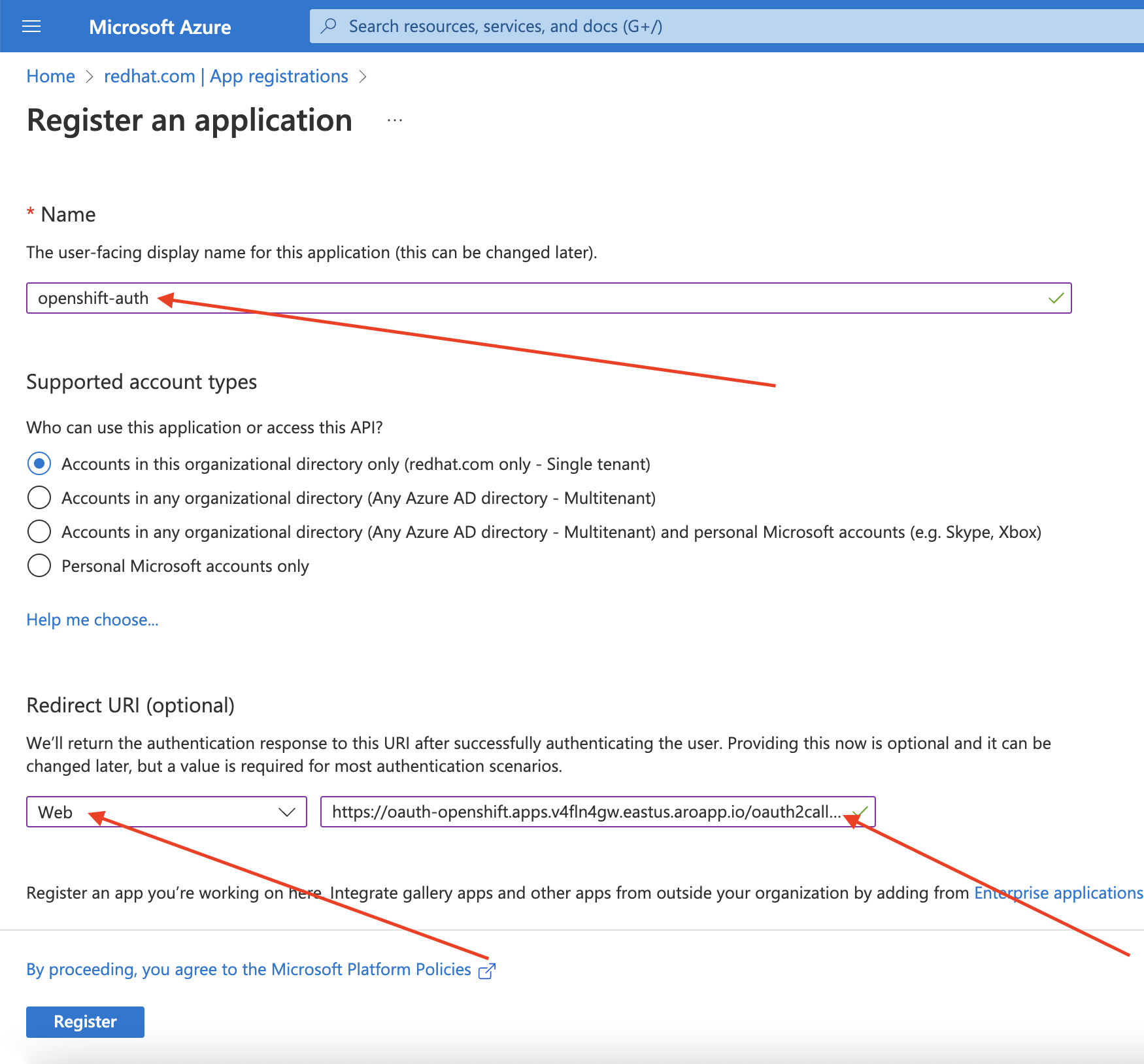

Create the Entra ID application by logging in to the Azure portal and selecting the App registrations blade. Then, select New registration to create a new application.

- Name the application, for example openshift-auth.

- Select Web from the Redirect URI dropdown and enter the value of the OAuth callback URL you retrieved in the previous step.

After providing the required information, click Register to create the application.

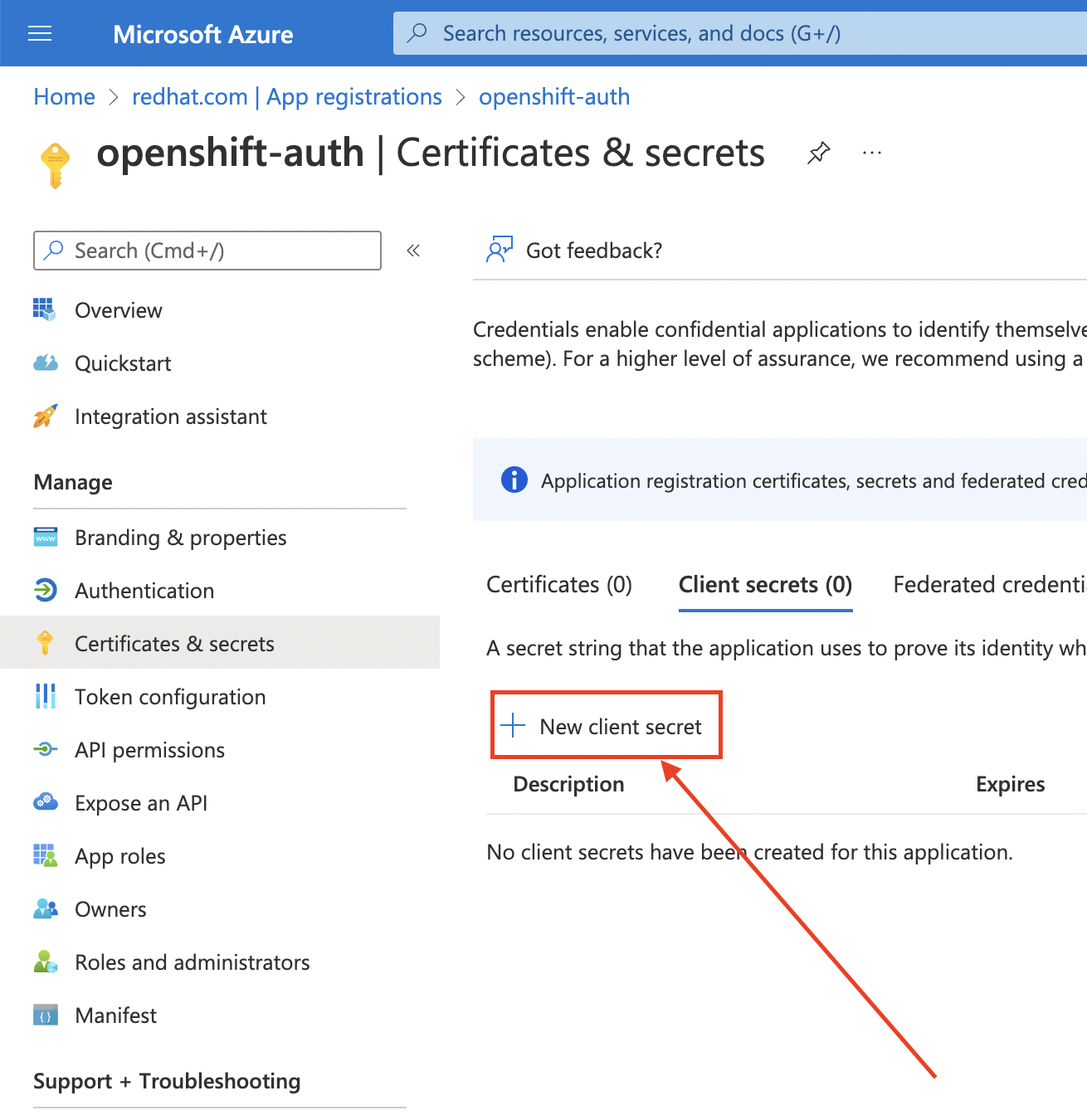

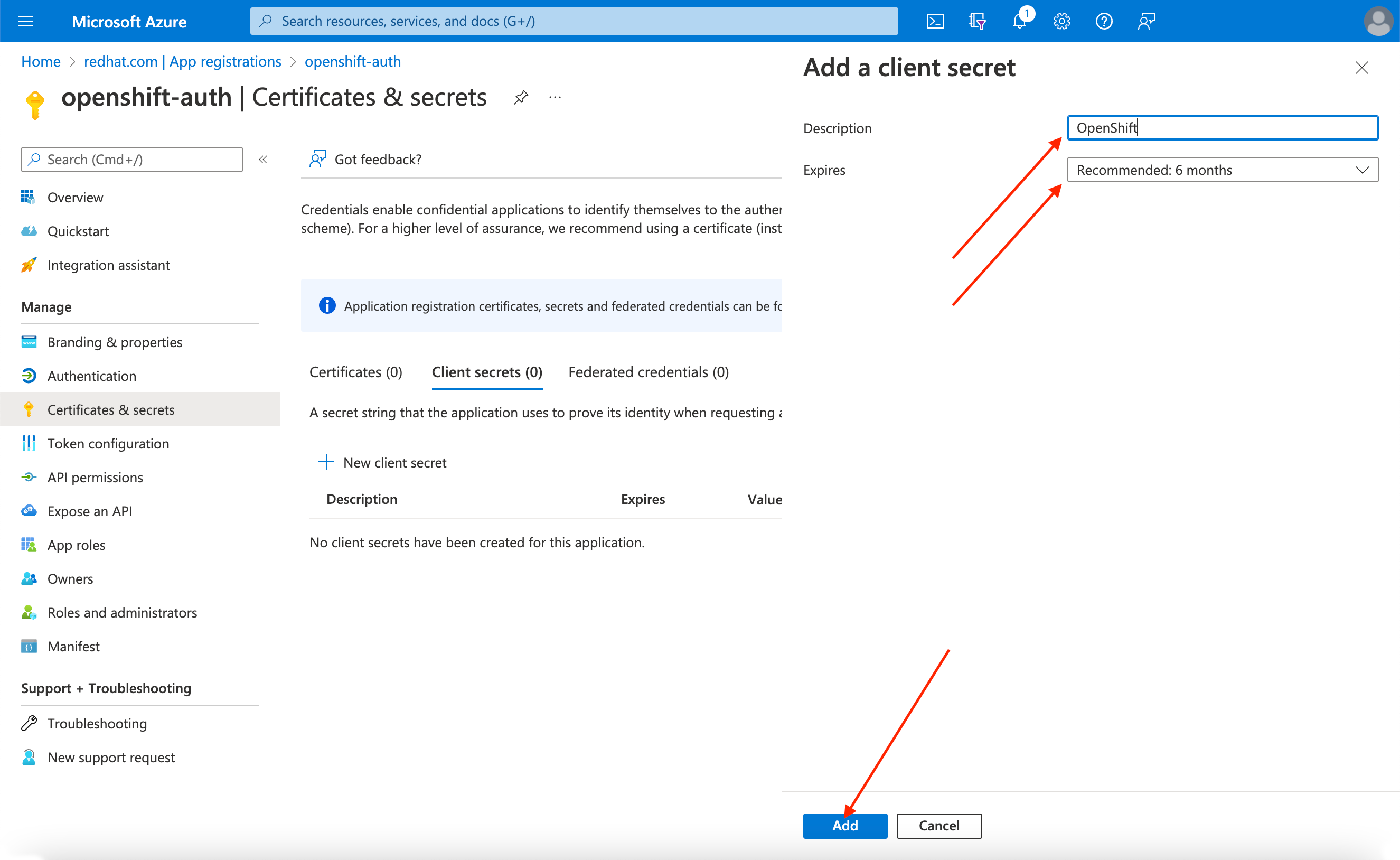

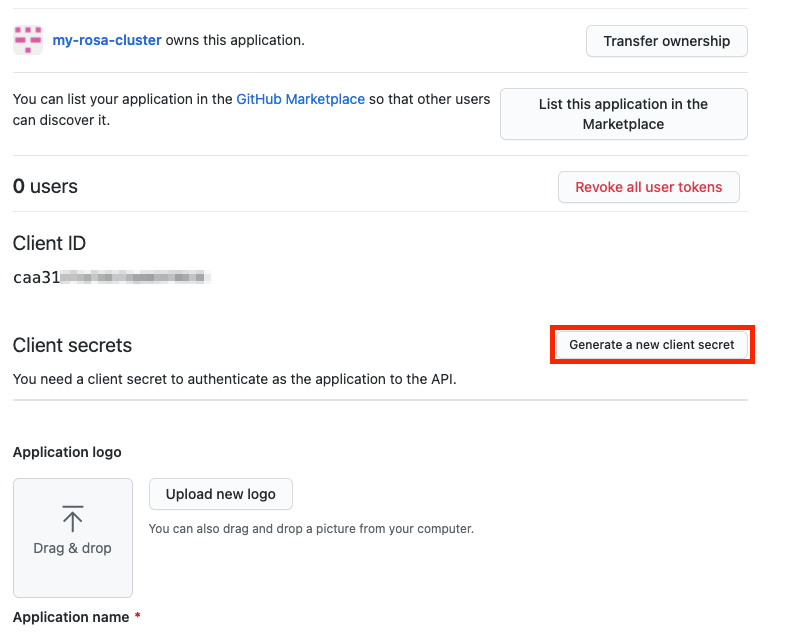

Select the Certificates & secrets sub-blade and select New client secret.

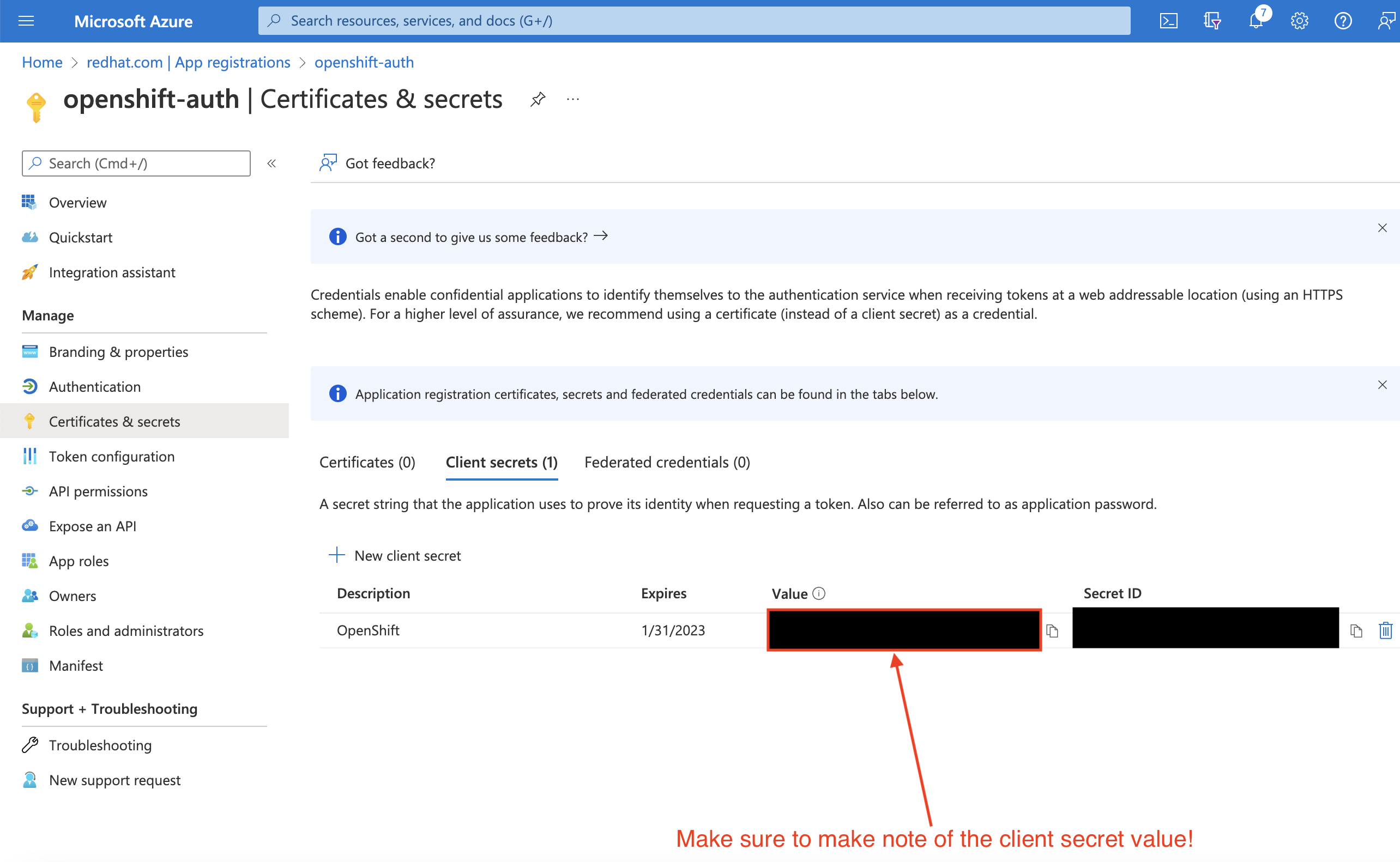

Complete the requested details and store the generated client secret value. This secret is required later in this process.

Important: After initial setup, you cannot see the client secret. If you did not record the client secret, you must generate a new one.

Select the Overview sub-blade and note the Application (client) ID and Directory (tenant) ID. You will need these values in a future step.

10.3. Configuring the application registration in Entra ID to include optional and group claims

So that Red Hat OpenShift Service on AWS has enough information to create the user’s account, you must configure Entra ID to give two optional claims: email and preferred_username. For more information about optional claims in Entra ID, see the Microsoft documentation.

In addition to individual user authentication, Red Hat OpenShift Service on AWS provides group claim functionality. This functionality allows an OpenID Connect (OIDC) identity provider, such as Entra ID, to offer a user’s group membership for use within Red Hat OpenShift Service on AWS.

Configuring optional claims

You can configure the optional claims in Entra ID.

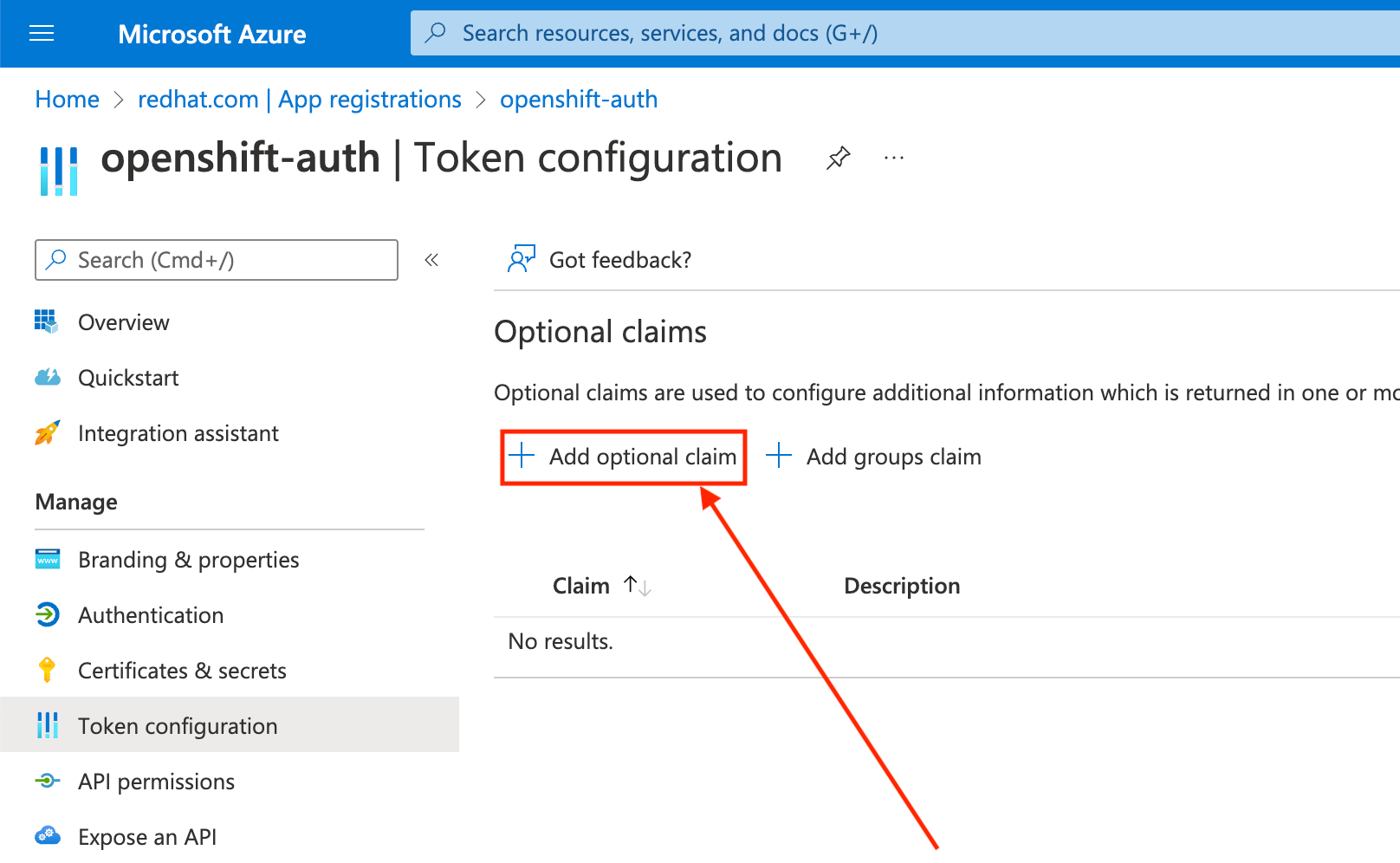

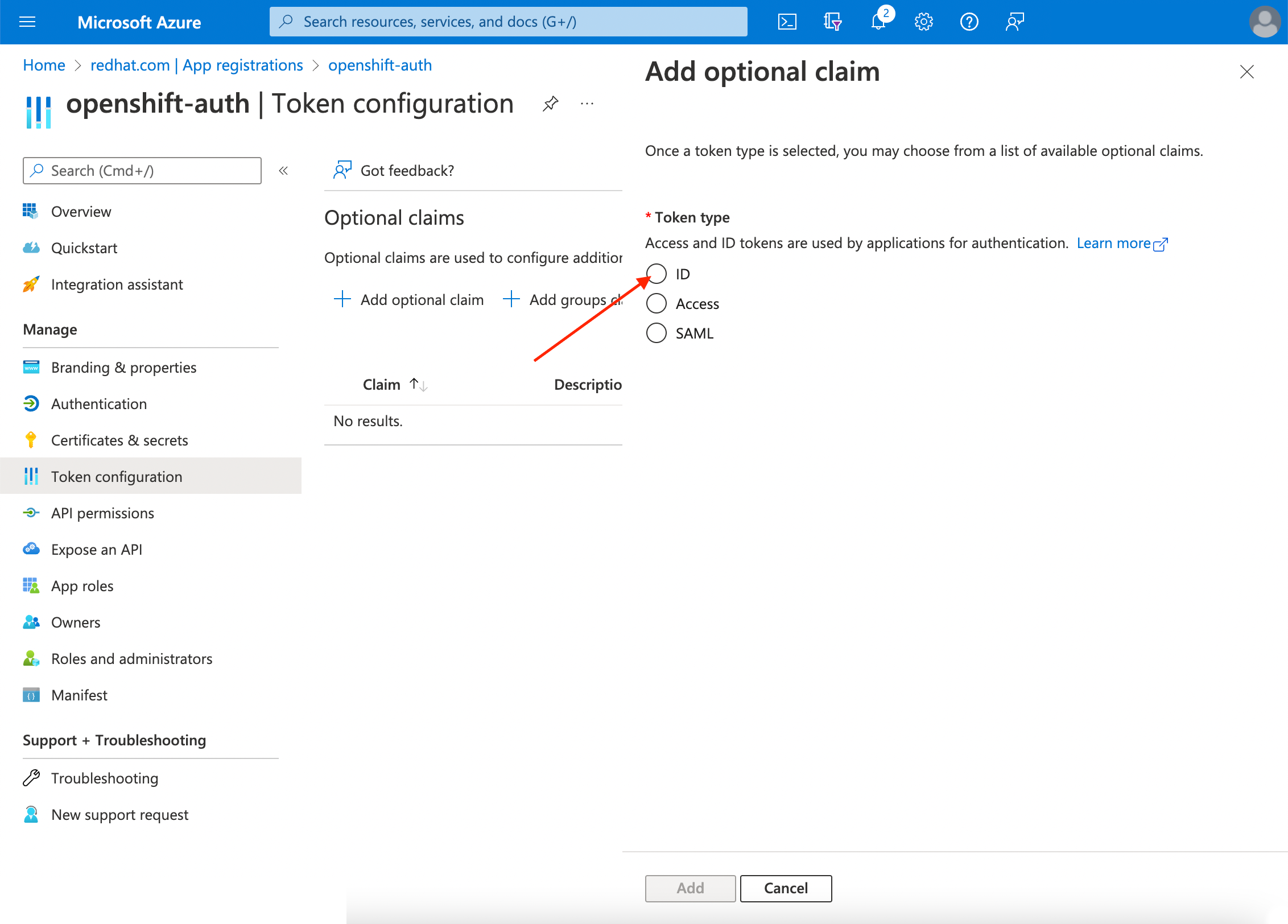

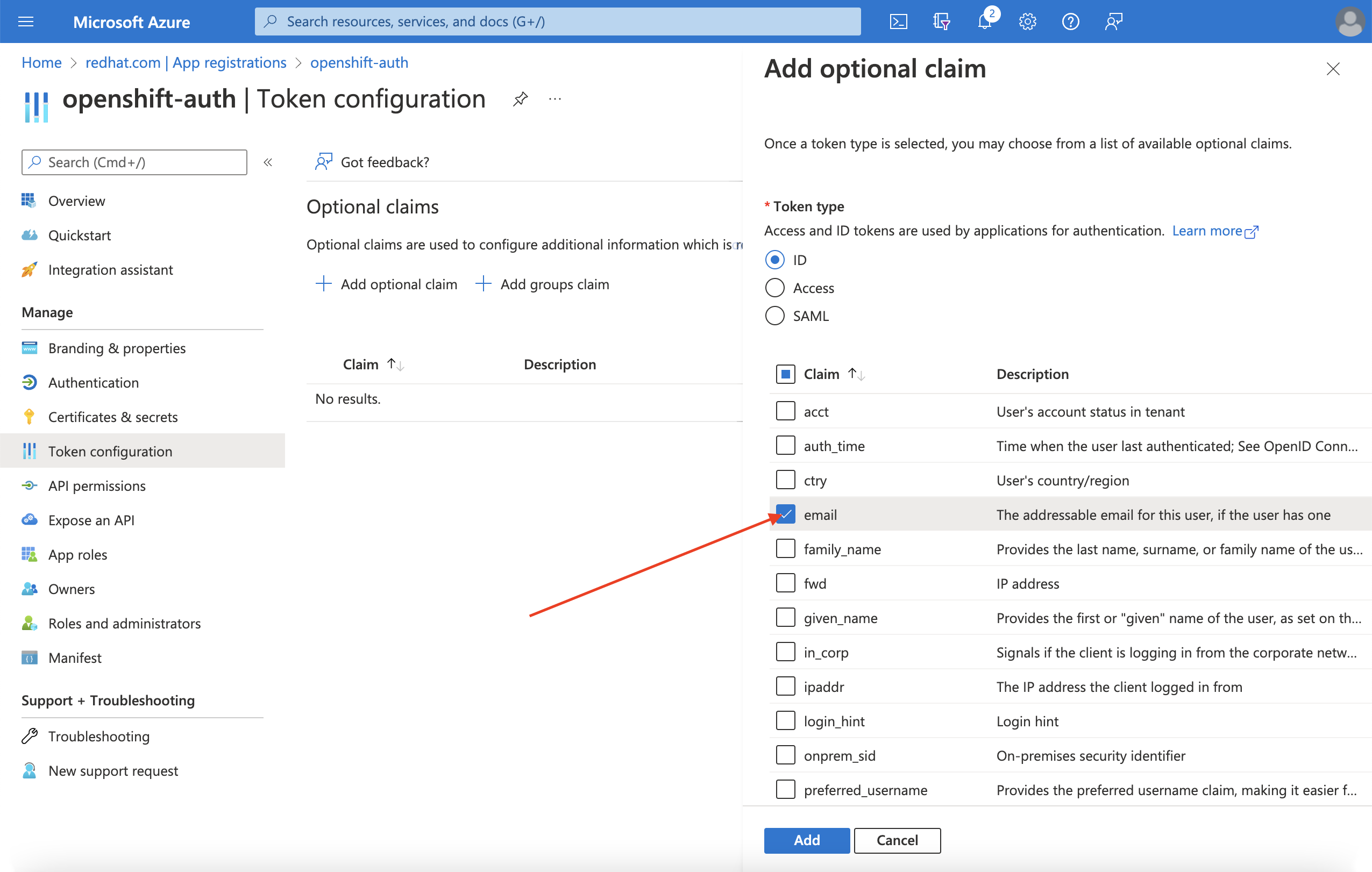

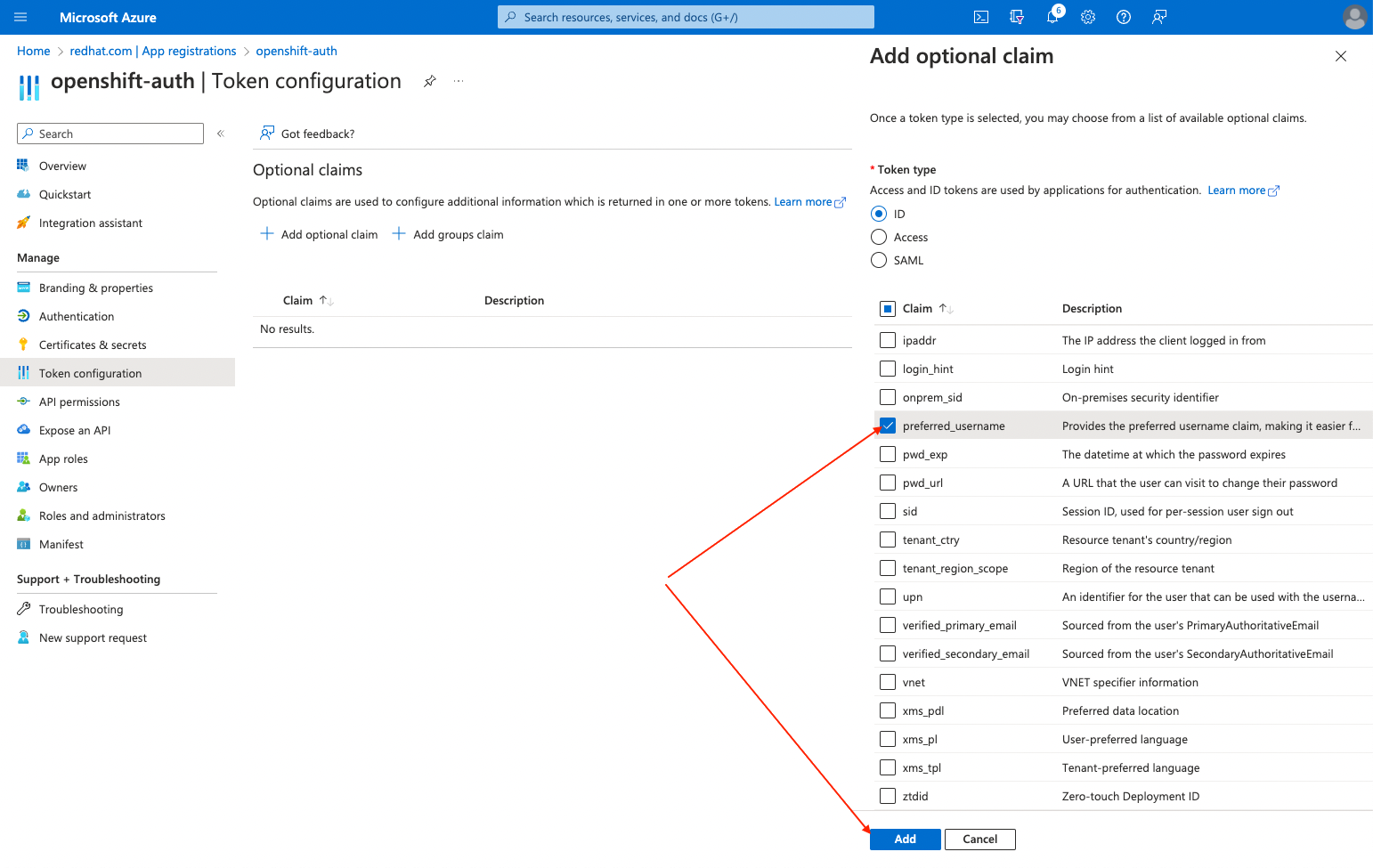

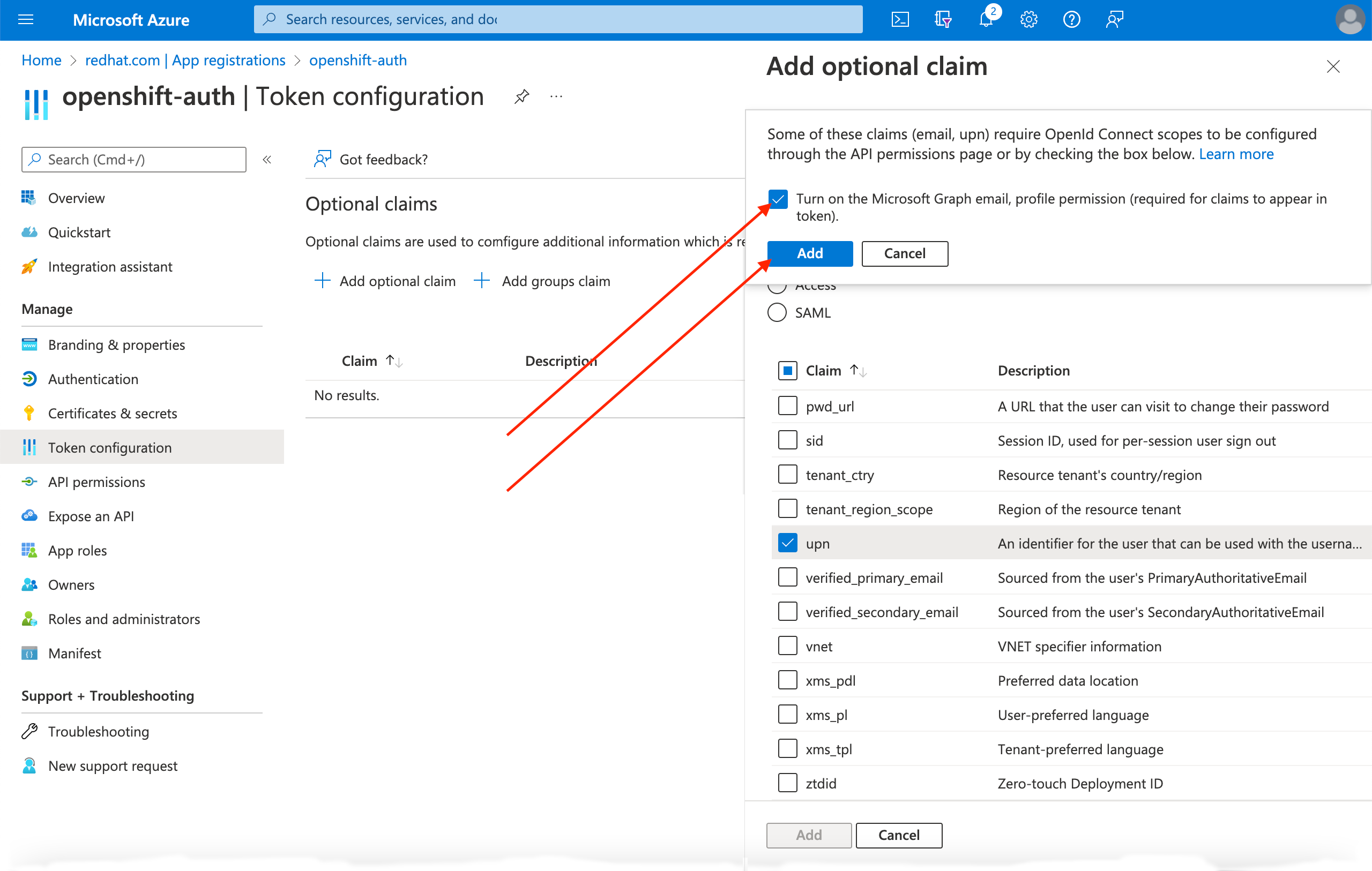

Click the Token configuration sub-blade and select the Add optional claim button.

Select the ID radio button.

Select the email claim checkbox.

Select the preferred_username claim checkbox. Then, click Add to configure the email and preferred_username claims for your Entra ID application.

A dialog box appears at the top of the page. Follow the prompt to enable the necessary Microsoft Graph permissions.

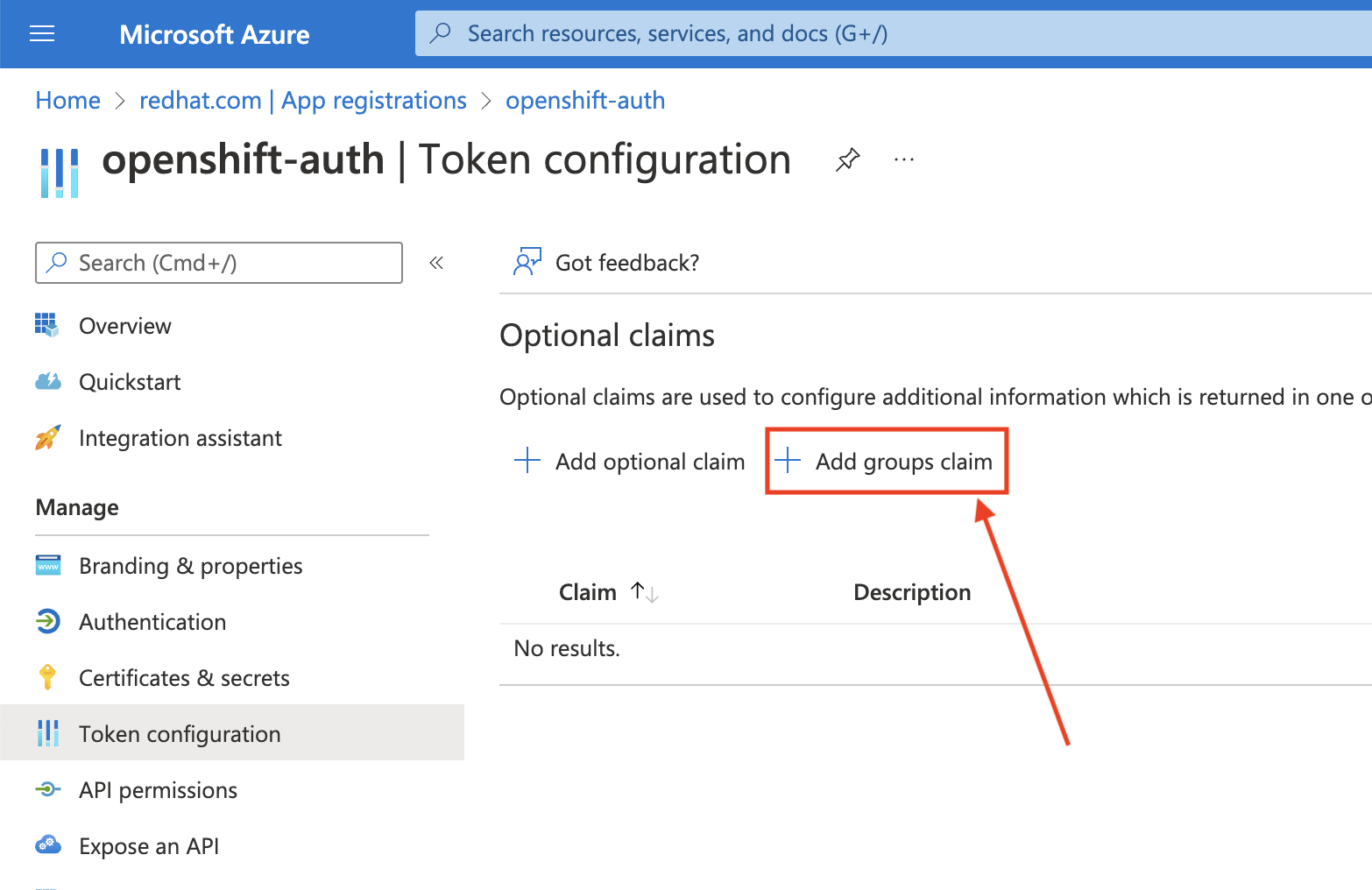

Configuring group claims (optional)

Configure Entra ID to offer a groups claim.

Procedure

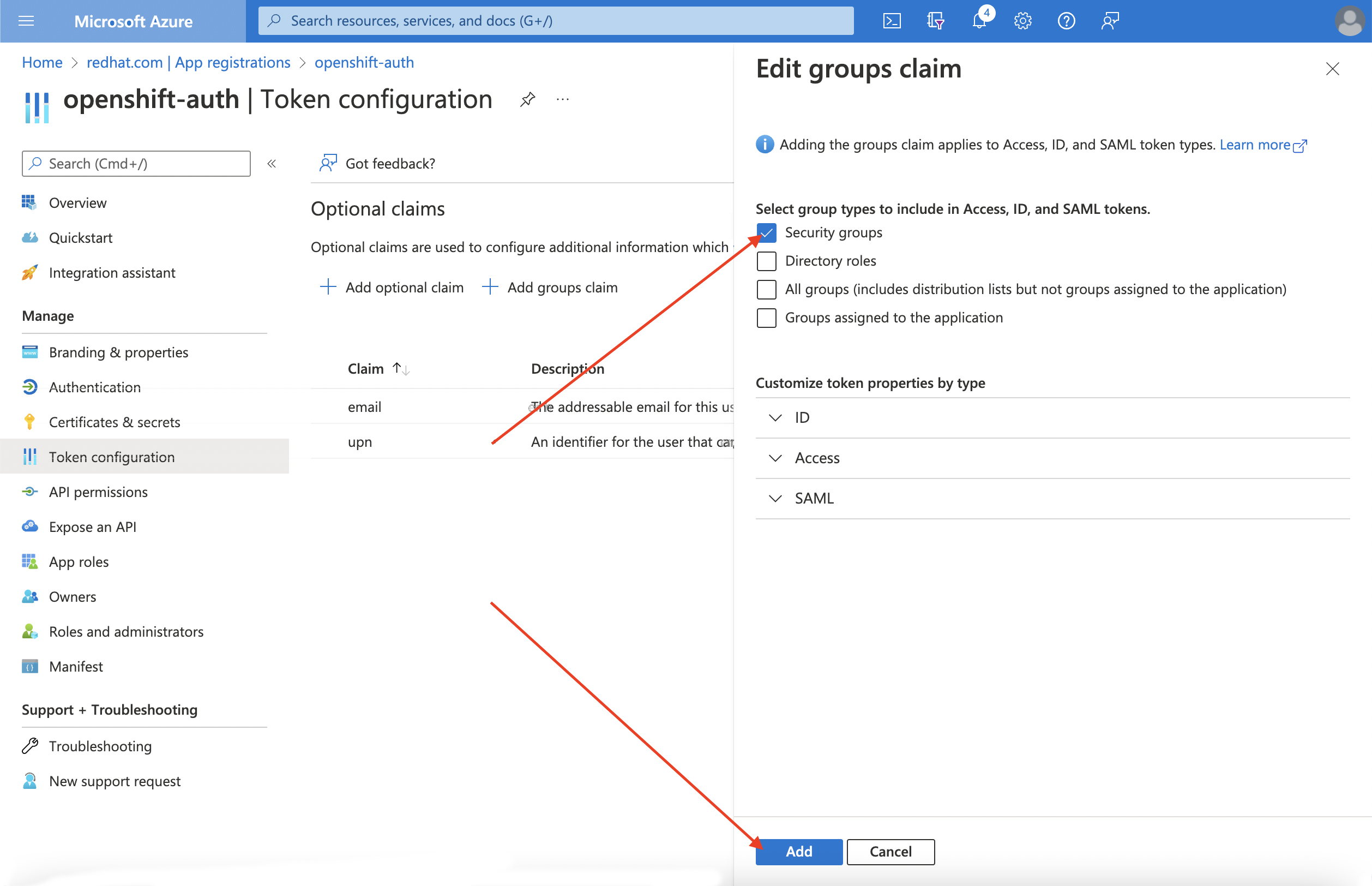

From the Token configuration sub-blade, click Add groups claim.

To configure group claims for your Entra ID application, select Security groups and then click Add.

Note: In this example, the group claim includes all of the security groups that a user is a member of. In a real production environment, ensure that the group claim only includes groups that apply to Red Hat OpenShift Service on AWS.

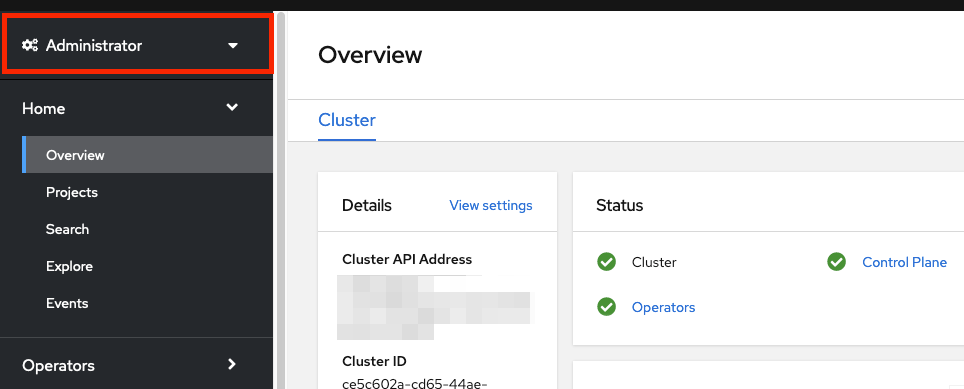

10.4. Configuring the Red Hat OpenShift Service on AWS cluster to use Entra ID as the identity provider

You must configure Red Hat OpenShift Service on AWS to use Entra ID as its identity provider.

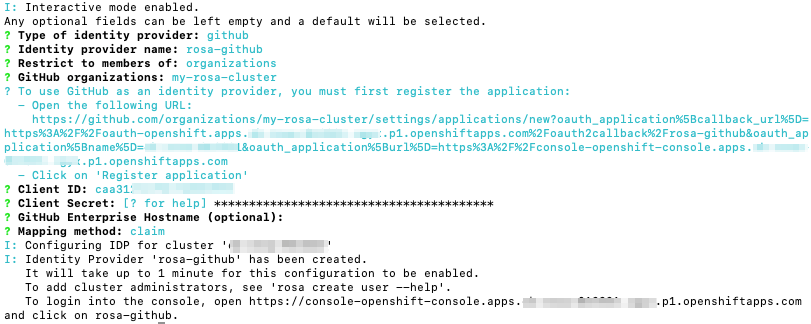

Although ROSA offers the ability to configure identity providers by using OpenShift Cluster Manager, use the ROSA CLI to configure the cluster’s OAuth provider to use Entra ID as its identity provider. Before configuring the identity provider, set the necessary variables for the identity provider configuration.

Procedure

Create the variables by running the following command:

$ CLUSTER_NAME=example-cluster
$ IDP_NAME=AAD
$ APP_ID=yyyyyyyy-yyyy-yyyy-yyyy-yyyyyyyyyyyy
$ CLIENT_SECRET=xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx
$ TENANT_ID=zzzzzzzz-zzzz-zzzz-zzzz-zzzzzzzzzzzz
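Because the identity provider command depends on all five variables, a quick pre-flight check can catch one that was left unset or empty in the current shell. The following is a minimal sketch. The helper name `missing_vars` is hypothetical and not part of the ROSA tooling.

```shell
#!/bin/sh
# Hypothetical pre-flight check: report any named variables that are unset or empty.
missing_vars() {
  missing=""
  for name in "$@"; do
    # Look up the variable whose name is stored in $name.
    eval "value=\${$name:-}"
    if [ -z "$value" ]; then
      missing="$missing $name"
    fi
  done
  # Trim the leading space and print the result (empty when nothing is missing).
  printf '%s\n' "${missing# }"
}

result=$(missing_vars CLUSTER_NAME IDP_NAME APP_ID CLIENT_SECRET TENANT_ID)
if [ -n "$result" ]; then
  echo "Set these variables before continuing: $result"
else
  echo "All identity provider variables are set."
fi
```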

Configure the cluster’s OAuth provider by running the following command. If you enabled group claims, ensure that you use the --groups-claims groups argument.

If you enabled group claims, run the following command:

$ rosa create idp \
    --cluster ${CLUSTER_NAME} \
    --type openid \
    --name ${IDP_NAME} \
    --client-id ${APP_ID} \
    --client-secret ${CLIENT_SECRET} \
    --issuer-url https://login.microsoftonline.com/${TENANT_ID}/v2.0 \
    --email-claims email \
    --name-claims name \
    --username-claims preferred_username \
    --extra-scopes email,profile \
    --groups-claims groups

If you did not enable group claims, run the following command:

$ rosa create idp \
    --cluster ${CLUSTER_NAME} \
    --type openid \
    --name ${IDP_NAME} \
    --client-id ${APP_ID} \
    --client-secret ${CLIENT_SECRET} \
    --issuer-url https://login.microsoftonline.com/${TENANT_ID}/v2.0 \
    --email-claims email \
    --name-claims name \
    --username-claims preferred_username \
    --extra-scopes email,profile
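The two command variants differ only in the final groups argument, so they can be expressed as one command with an optional flag. The following is a minimal sketch that prints the command for review instead of running it. The helper name `idp_command` is hypothetical, the variable values are the placeholders used in this tutorial, and the client secret is deliberately printed as a variable reference rather than its value.

```shell
#!/bin/sh
# Hypothetical helper: print the rosa command for review without running it.
# $1 is "true" when group claims were enabled in Entra ID.
idp_command() {
  cmd="rosa create idp --cluster ${CLUSTER_NAME} --type openid --name ${IDP_NAME}"
  # Keep the secret as a literal ${CLIENT_SECRET} reference so it is not echoed.
  cmd="$cmd --client-id ${APP_ID} --client-secret \${CLIENT_SECRET}"
  cmd="$cmd --issuer-url https://login.microsoftonline.com/${TENANT_ID}/v2.0"
  cmd="$cmd --email-claims email --name-claims name"
  cmd="$cmd --username-claims preferred_username --extra-scopes email,profile"
  if [ "$1" = "true" ]; then
    cmd="$cmd --groups-claims groups"
  fi
  printf '%s\n' "$cmd"
}

# Placeholder values from the variable-setting step.
CLUSTER_NAME=example-cluster
IDP_NAME=AAD
APP_ID=yyyyyyyy-yyyy-yyyy-yyyy-yyyyyyyyyyyy
TENANT_ID=zzzzzzzz-zzzz-zzzz-zzzz-zzzzzzzzzzzz

# Print the variant that includes group claims.
idp_command true
```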

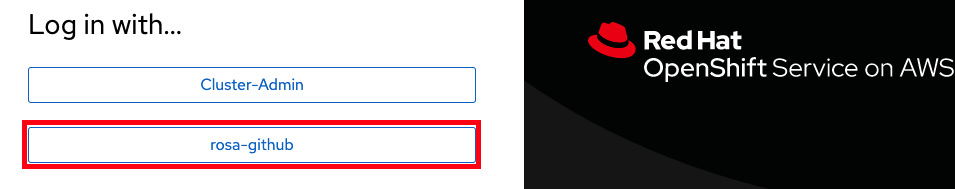

After a few minutes, the cluster authentication Operator reconciles your changes, and you can log in to the cluster by using Entra ID.

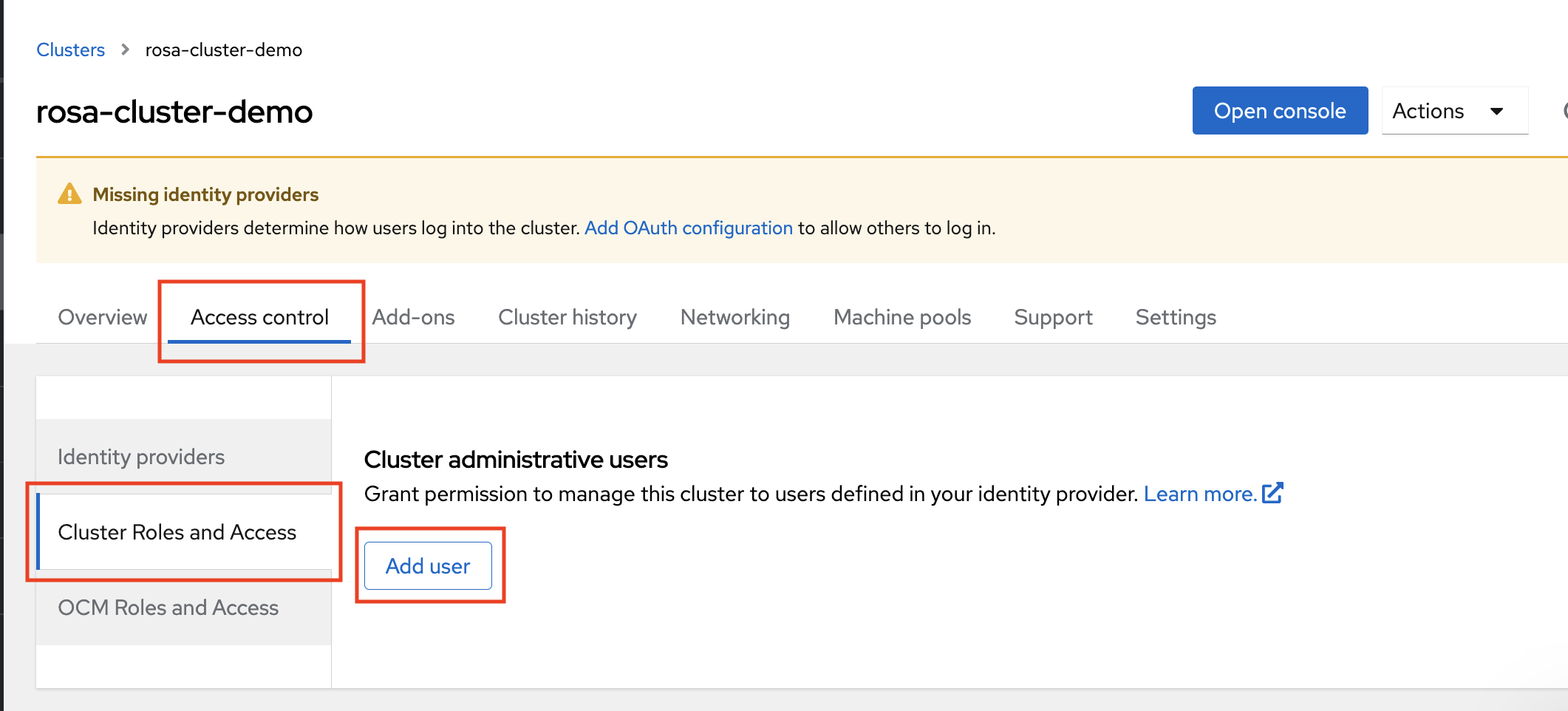

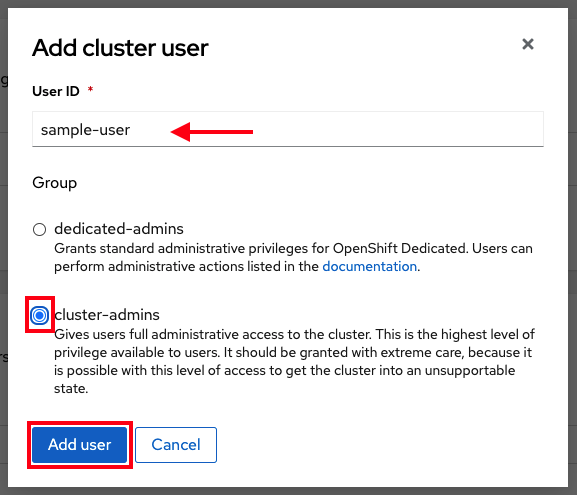

10.5. Granting additional permissions to individual users and groups

When you first log in, you might notice that you have very limited permissions. By default, Red Hat OpenShift Service on AWS only grants you the ability to create new projects, or namespaces, in the cluster. Other projects are restricted from view.

You must grant these additional abilities to individual users and groups.

Granting additional permissions to individual users

Red Hat OpenShift Service on AWS includes a significant number of preconfigured roles, including the cluster-admin role that grants full access and control over the cluster.

Procedure

Grant a user access to the cluster-admin role by running the following command:

$ rosa grant user cluster-admin \
    --user=<USERNAME> \
    --cluster=${CLUSTER_NAME}

Replace <USERNAME> with the Entra ID username that you want to have cluster admin permissions.

Granting additional permissions to individual groups

If you opted to enable group claims, the cluster OAuth provider automatically creates or updates the user’s group memberships by using the group ID. The cluster OAuth provider does not automatically create RoleBindings and ClusterRoleBindings for the groups that are created; you are responsible for creating those bindings by using your own processes.

To grant an automatically generated group access to the cluster-admin role, you must create a ClusterRoleBinding to the group ID.

Procedure

Create the ClusterRoleBinding by running the following command:

$ oc create clusterrolebinding cluster-admin-group \
    --clusterrole=cluster-admin \
    --group=<GROUP_ID>

Replace <GROUP_ID> with the Entra ID group ID that you want to have cluster admin permissions.

Now, any user in the specified group automatically receives cluster-admin access.
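Because the binding must reference the group ID rather than the group's display name, a simple format check can catch a pasted display name before the binding is created. The following is a minimal sketch that assumes the group ID is an Entra ID object ID in GUID form. The helper name `is_guid` and the sample ID are hypothetical.

```shell
#!/bin/sh
# Hypothetical check: Entra ID object IDs are GUIDs (8-4-4-4-12 hex digits).
is_guid() {
  printf '%s\n' "$1" |
    grep -Eq '^[0-9a-fA-F]{8}-[0-9a-fA-F]{4}-[0-9a-fA-F]{4}-[0-9a-fA-F]{4}-[0-9a-fA-F]{12}$'
}

# Placeholder group ID for illustration only.
GROUP_ID=00000000-0000-0000-0000-000000000000

if is_guid "$GROUP_ID"; then
  # Print the command for review instead of running it.
  echo "oc create clusterrolebinding cluster-admin-group --clusterrole=cluster-admin --group=$GROUP_ID"
else
  echo "GROUP_ID does not look like an Entra ID object ID" >&2
fi
```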

10.6. Additional resources

For more information about how to use RBAC to define and apply permissions in Red Hat OpenShift Service on AWS, see the Red Hat OpenShift Service on AWS documentation.

Chapter 11. Tutorial: Using AWS Secrets Manager CSI on ROSA with STS

The AWS Secrets and Configuration Provider (ASCP) provides a way to expose AWS Secrets as Kubernetes storage volumes. With the ASCP, you can store and manage your secrets in Secrets Manager and then retrieve them through your workloads running on Red Hat OpenShift Service on AWS (ROSA).

11.1. Prerequisites

Ensure that you have the following resources and tools before starting this process:

- A ROSA cluster deployed with STS

- Helm 3

- aws CLI

- oc CLI

- jq CLI

Additional environment requirements

Log in to your ROSA cluster by running the following command:

$ oc login --token=<your-token> --server=<your-server-url>

You can find your login token by accessing your cluster in pull secret from Red Hat OpenShift Cluster Manager.

Validate that your cluster has STS by running the following command:

$ oc get authentication.config.openshift.io cluster -o json \
    | jq .spec.serviceAccountIssuer

Example output

"https://xxxxx.cloudfront.net/xxxxx"

If your output is different, do not proceed. See Red Hat documentation on creating an STS cluster before continuing this process.
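If you script this validation, the output can be checked before continuing. The following is a minimal sketch that assumes a quoted HTTPS URL indicates an STS cluster and that `null` or an empty string does not; the exact issuer host can differ from the example output. The helper name `looks_like_sts` is hypothetical.

```shell
#!/bin/sh
# Hypothetical check on the jq output from the validation command.
# A quoted https URL suggests an STS cluster; null or an empty string does not.
looks_like_sts() {
  case "$1" in
    '"https://'*'"') return 0 ;;
    *) return 1 ;;
  esac
}

# Sample value taken from the example output.
issuer='"https://xxxxx.cloudfront.net/xxxxx"'

if looks_like_sts "$issuer"; then
  echo "serviceAccountIssuer is set; the cluster appears to use STS."
else
  echo "serviceAccountIssuer is not set; do not proceed." >&2
fi
```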

Set the