Security hardening

Enhancing security of Red Hat Enterprise Linux 9 systems

Abstract

Making open source more inclusive

Red Hat is committed to replacing problematic language in our code, documentation, and web properties. We are beginning with these four terms: master, slave, blacklist, and whitelist. Because of the enormity of this endeavor, these changes will be implemented gradually over several upcoming releases. For more details, see our CTO Chris Wright's message.

Providing feedback on Red Hat documentation

We appreciate your feedback on our documentation. Let us know how we can improve it.

Submitting comments on specific passages

- View the documentation in the Multi-page HTML format and ensure that you see the Feedback button in the upper right corner after the page fully loads.

- Use your cursor to highlight the part of the text that you want to comment on.

- Click the Add Feedback button that appears near the highlighted text.

- Add your feedback and click Submit.

Submitting feedback through Bugzilla (account required)

- Log in to the Bugzilla website.

- Select the correct version from the Version menu.

- Enter a descriptive title in the Summary field.

- Enter your suggestion for improvement in the Description field. Include links to the relevant parts of the documentation.

- Click Submit Bug.

Chapter 1. Securing RHEL during installation

Security begins even before you start the installation of Red Hat Enterprise Linux. Configuring your system securely from the beginning makes it easier to implement additional security settings later.

1.1. BIOS and UEFI security

Password protection for the BIOS (or BIOS equivalent) and the boot loader can prevent unauthorized users who have physical access to systems from booting using removable media or obtaining root privileges through single user mode. The security measures you should take to protect against such attacks depend both on the sensitivity of the information on the workstation and the location of the machine.

For example, if a machine is used in a trade show and contains no sensitive information, then it may not be critical to prevent such attacks. However, if an employee’s laptop with private, unencrypted SSH keys for the corporate network is left unattended at that same trade show, it could lead to a major security breach with ramifications for the entire company.

If the workstation is located in a place where only authorized or trusted people have access, however, then securing the BIOS or the boot loader may not be necessary.

1.1.1. BIOS passwords

The two primary reasons for password protecting the BIOS of a computer are[1]:

- Preventing changes to BIOS settings — If an intruder has access to the BIOS, they can set it to boot from a CD-ROM or a flash drive. This makes it possible for them to enter rescue mode or single user mode, which in turn allows them to start arbitrary processes on the system or copy sensitive data.

- Preventing system booting — Some BIOSes allow password protection of the boot process. When activated, an attacker is forced to enter a password before the BIOS launches the boot loader.

Because the methods for setting a BIOS password vary between computer manufacturers, consult the computer’s manual for specific instructions.

If you forget the BIOS password, it can either be reset with jumpers on the motherboard or by disconnecting the CMOS battery. For this reason, it is good practice to lock the computer case if possible. However, consult the manual for the computer or motherboard before attempting to disconnect the CMOS battery.

1.1.2. Non-BIOS-based systems security

Other systems and architectures use different programs to perform low-level tasks roughly equivalent to those of the BIOS on x86 systems, for example, the Unified Extensible Firmware Interface (UEFI) shell.

For instructions on password protecting BIOS-like programs, see the manufacturer’s instructions.

1.2. Disk partitioning

Red Hat recommends creating separate partitions for the /boot, /, /home, /tmp, and /var/tmp/ directories.

/boot

This partition is the first partition that is read by the system during boot up. The boot loader and kernel images that are used to boot your system into Red Hat Enterprise Linux 9 are stored in this partition. This partition should not be encrypted. If this partition is included in / and that partition is encrypted or otherwise becomes unavailable, then your system is not able to boot.

/home

When user data (/home) is stored in / instead of in a separate partition, the partition can fill up, causing the operating system to become unstable. Also, upgrading your system to the next version of Red Hat Enterprise Linux 9 is much easier when you keep your data in the /home partition, because it is not overwritten during installation. If the root partition (/) becomes corrupt, your data could be lost forever. A separate partition gives slightly more protection against data loss. You can also target this partition for frequent backups.

/tmp and /var/tmp/

Both the /tmp and /var/tmp/ directories are used to store data that does not need to be stored for a long period of time. However, if a lot of data floods one of these directories, it can consume all of your storage space. If this happens and these directories are stored within /, then your system could become unstable and crash. For this reason, moving these directories into their own partitions is a good idea.

During the installation process, you have an option to encrypt partitions. You must supply a passphrase. This passphrase serves as a key to unlock the bulk encryption key, which is used to secure the partition’s data.

1.3. Restricting network connectivity during the installation process

When installing Red Hat Enterprise Linux 9, the installation medium represents a snapshot of the system at a particular time. Because of this, it may not be up-to-date with the latest security fixes and may be vulnerable to certain issues that were fixed only after the system provided by the installation medium was released.

When installing a potentially vulnerable operating system, always limit exposure only to the closest necessary network zone. The safest choice is the “no network” zone, which means to leave your machine disconnected during the installation process. In some cases, a LAN or intranet connection is sufficient while the Internet connection is the riskiest. To follow the best security practices, choose the closest zone with your repository while installing Red Hat Enterprise Linux 9 from a network.

1.4. Installing the minimum amount of packages required

It is best practice to install only the packages you will use because each piece of software on your computer could possibly contain a vulnerability. If you are installing from the DVD media, take the opportunity to select exactly what packages you want to install during the installation. If you find you need another package, you can always add it to the system later.

1.5. Post-installation procedures

The following steps are the security-related procedures that should be performed immediately after installation of Red Hat Enterprise Linux 9.

- Update your system. Enter the following command as root:

# dnf update

- Even though the firewall service, firewalld, is automatically enabled with the installation of Red Hat Enterprise Linux, there are scenarios where it might be explicitly disabled, for example in the kickstart configuration. In such a case, it is recommended to consider re-enabling the firewall.

To start firewalld, enter the following commands as root:

# systemctl start firewalld
# systemctl enable firewalld

- To enhance security, disable services you do not need. For example, if there are no printers installed on your computer, disable the cups service using the following command:

# systemctl disable cups

To review active services, enter the following command:

$ systemctl list-units | grep service
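The grep filter in the last command also matches lines that merely mention the word service. As a hedged alternative sketch, the helper below (the name running_services is hypothetical) keeps only the units whose SUB state is running. It assumes input in the five-column layout (UNIT, LOAD, ACTIVE, SUB, DESCRIPTION) that systemctl list-units prints with the --no-legend and --plain options.

```shell
#!/bin/sh
# Hypothetical helper: read `systemctl list-units` output on stdin and print
# the names of units whose SUB column (fourth field) is "running".
# Assumes the plain, legend-free column layout: UNIT LOAD ACTIVE SUB DESCRIPTION.
running_services() {
    awk '$4 == "running" { print $1 }'
}
```

A possible invocation is: systemctl list-units --type=service --no-legend --plain | running_services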

Chapter 2. Installing the system in FIPS mode

To enable the cryptographic module self-checks mandated by the Federal Information Processing Standard (FIPS) 140-3, you have to operate RHEL 9 in FIPS mode.

You can achieve this by:

- Starting the installation in FIPS mode.

- Switching the system into FIPS mode after the installation.

To avoid cryptographic key material regeneration and reevaluation of the compliance of the resulting system associated with converting already deployed systems, Red Hat recommends starting the installation in FIPS mode.

The cryptographic modules of RHEL 9 are not yet certified for the FIPS 140-3 requirements.

2.1. Federal Information Processing Standard (FIPS)

The Federal Information Processing Standard (FIPS) Publication 140-3 is a computer security standard developed by the U.S. Government and industry working group to validate the quality of cryptographic modules. See the official FIPS publications at NIST Computer Security Resource Center.

The FIPS 140-3 standard ensures that cryptographic tools implement their algorithms correctly. One of the mechanisms for that is runtime self-checks. See the full FIPS 140-3 standard at FIPS PUB 140-3 for further details and other specifications of the FIPS standard.

To learn about compliance requirements, see the Red Hat Government Standards page.

2.2. Installing the system with FIPS mode enabled

To enable the cryptographic module self-checks mandated by the Federal Information Processing Standard (FIPS) Publication 140-3, enable FIPS mode during the system installation.

Red Hat recommends installing RHEL with FIPS mode enabled, as opposed to enabling FIPS mode later. Enabling FIPS mode during the installation ensures that the system generates all keys with FIPS-approved algorithms and continuous monitoring tests in place.

Procedure

- Add the fips=1 option to the kernel command line during the system installation.

- During the software selection stage, do not install any third-party software.

- After the installation, the system starts in FIPS mode automatically.

Verification

- After the system starts, check that FIPS mode is enabled:

$ fips-mode-setup --check
FIPS mode is enabled.

Additional resources

- Editing boot options section in the Boot options for RHEL Installer document

2.3. Additional resources

Chapter 3. Using system-wide cryptographic policies

The system-wide cryptographic policies component configures the core cryptographic subsystems, covering the TLS, IPsec, SSH, DNSSec, and Kerberos protocols. It provides a small set of policies, which the administrator can select.

3.1. System-wide cryptographic policies

When a system-wide policy is set up, applications in RHEL follow it and refuse to use algorithms and protocols that do not meet the policy, unless you explicitly request the application to do so. That is, the policy applies to the default behavior of applications when running with the system-provided configuration but you can override it if required.

RHEL 9 contains the following predefined policies:

| Policy level | Description |
|---|---|
| DEFAULT | The default system-wide cryptographic policy level offers secure settings for current threat models. It allows the TLS 1.2 and 1.3 protocols, as well as the IKEv2 and SSH2 protocols. The RSA keys and Diffie-Hellman parameters are accepted if they are at least 2048 bits long. |
| LEGACY | This policy ensures maximum compatibility with Red Hat Enterprise Linux 6 and earlier; it is less secure due to an increased attack surface. SHA-1 is allowed to be used as TLS hash, signature, and algorithm. CBC-mode ciphers are allowed to be used with SSH. Applications using GnuTLS allow certificates signed with SHA-1. It allows the TLS 1.2 and 1.3 protocols, as well as the IKEv2 and SSH2 protocols. The RSA keys and Diffie-Hellman parameters are accepted if they are at least 2048 bits long. |
| FUTURE | A conservative security level that is believed to withstand any near-term future attacks. This level does not allow the use of SHA-1 in DNSSec or as an HMAC. SHA2-224 and SHA3-224 hashes are disabled. 128-bit ciphers are disabled. CBC-mode ciphers are disabled except in Kerberos. It allows the TLS 1.2 and 1.3 protocols, as well as the IKEv2 and SSH2 protocols. The RSA keys and Diffie-Hellman parameters are accepted if they are at least 3072 bits long. |
| FIPS | A policy level that conforms with the FIPS 140-2 requirements. This is used internally by the fips-mode-setup tool, which switches the RHEL system into FIPS mode. |

Red Hat continuously adjusts all policy levels so that all libraries, except when using the LEGACY policy, provide secure defaults. Even though the LEGACY profile does not provide secure defaults, it does not include any algorithms that are easily exploitable. As such, the set of enabled algorithms or acceptable key sizes in any provided policy may change during the lifetime of Red Hat Enterprise Linux.

Such changes reflect new security standards and new security research. If you must ensure interoperability with a specific system for the whole lifetime of Red Hat Enterprise Linux, you should opt out of the system-wide cryptographic policies for components that interact with that system or re-enable specific algorithms using custom policies.

Because a cryptographic key used by a certificate on the Customer Portal API does not meet the requirements by the FUTURE system-wide cryptographic policy, the redhat-support-tool utility does not work with this policy level at the moment.

To work around this problem, use the DEFAULT crypto policy while connecting to the Customer Portal API.

The specific algorithms and ciphers described in the policy levels as allowed are available only if an application supports them.

Tool for managing crypto policies

To view or change the current system-wide cryptographic policy, use the update-crypto-policies tool, for example:

$ update-crypto-policies --show
DEFAULT
# update-crypto-policies --set FUTURE
Setting system policy to FUTURE

To ensure that the change of the cryptographic policy is applied, restart the system.

Strong crypto defaults by removing insecure cipher suites and protocols

The following list contains cipher suites and protocols removed from the core cryptographic libraries in Red Hat Enterprise Linux 9. They are not present in the sources, or their support is disabled during the build, so applications cannot use them.

- DES (since RHEL 7)

- All export grade cipher suites (since RHEL 7)

- MD5 in signatures (since RHEL 7)

- SSLv2 (since RHEL 7)

- SSLv3 (since RHEL 8)

- All ECC curves < 224 bits (since RHEL 6)

- All binary field ECC curves (since RHEL 6)

Algorithms disabled in all policy levels

The following algorithms are disabled in LEGACY, DEFAULT, FUTURE and FIPS cryptographic policies included in RHEL 9. They can be enabled only by applying a custom cryptographic policy or by an explicit configuration of individual applications, but the resulting configuration would not be considered supported.

- TLS older than version 1.2 (since RHEL 9, was < 1.0 in RHEL 8)

- DTLS older than version 1.2 (since RHEL 9, was < 1.0 in RHEL 8)

- DH with parameters < 2048 bits (since RHEL 9, was < 1024 bits in RHEL 8)

- RSA with key size < 2048 bits (since RHEL 9, was < 1024 bits in RHEL 8)

- DSA (since RHEL 9, was < 1024 bits in RHEL 8)

- 3DES (since RHEL 9)

- RC4 (since RHEL 9)

- FFDHE-1024 (since RHEL 9)

- DHE-DSS (since RHEL 9)

- Camellia (since RHEL 9)

- ARIA

- IKEv1 (since RHEL 8)

Algorithms enabled in the crypto-policies levels

The following table shows the comparison of all four crypto-policies levels with regard to select algorithms.

| | LEGACY | DEFAULT | FIPS | FUTURE |
|---|---|---|---|---|
| IKEv1 | no | no | no | no |
| 3DES | no | no | no | no |
| RC4 | no | no | no | no |
| DH | min. 2048-bit | min. 2048-bit | min. 2048-bit | min. 3072-bit |
| RSA | min. 2048-bit | min. 2048-bit | min. 2048-bit | min. 3072-bit |
| DSA | no | no | no | no |
| TLS v1.1 and older | no | no | no | no |
| TLS v1.2 and newer | yes | yes | yes | yes |
| SHA-1 in digital signatures and certificates | yes | no | no | no |
| CBC mode ciphers | yes | no[a] | no[b] | no[c] |
| Symmetric ciphers with keys < 256 bits | yes | yes | yes | no |

[a] CBC ciphers are disabled for SSH

[b] CBC ciphers are disabled for all protocols except Kerberos

[c] CBC ciphers are disabled for all protocols except Kerberos
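The DH and RSA rows of the comparison table can be read as a simple lookup. As a minimal sketch (the function names min_rsa_bits and rsa_key_accepted are hypothetical helpers, not part of crypto-policies), the following shell functions encode the minimum RSA key sizes from the table and check whether a key of a given size would meet a predefined policy level:

```shell
#!/bin/sh
# Hypothetical helper: print the minimum RSA key size, in bits, that the
# comparison table lists for a predefined policy level.
min_rsa_bits() {
    case "$1" in
        LEGACY|DEFAULT|FIPS) echo 2048 ;;
        FUTURE)              echo 3072 ;;
        *)                   return 1 ;;   # not one of the four predefined levels
    esac
}

# Hypothetical helper: succeed if an RSA key of $2 bits meets the minimum
# for policy level $1; return 2 for an unknown policy level.
rsa_key_accepted() {
    min="$(min_rsa_bits "$1")" || return 2
    [ "$2" -ge "$min" ]
}
```

For example, rsa_key_accepted FUTURE 2048 fails, while rsa_key_accepted DEFAULT 2048 succeeds. Because Red Hat may adjust policy levels over the lifetime of the release, treat the hard-coded values as a snapshot of the table rather than as a stable interface.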

Additional resources

- update-crypto-policies(8) man page

3.2. Switching the system-wide cryptographic policy to a mode compatible with earlier releases

The default system-wide cryptographic policy in Red Hat Enterprise Linux 9 does not allow communication using older, insecure protocols. For environments that require compatibility with Red Hat Enterprise Linux 6, and in some cases also with earlier releases, the less secure LEGACY policy level is available.

Switching to the LEGACY policy level results in a less secure system and applications.

Procedure

- To switch the system-wide cryptographic policy to the LEGACY level, enter the following command as root:

# update-crypto-policies --set LEGACY
Setting system policy to LEGACY

Additional resources

- For the list of available cryptographic policy levels, see the update-crypto-policies(8) man page.

- For defining custom cryptographic policies, see the Custom Policies section in the update-crypto-policies(8) man page and the Crypto Policy Definition Format section in the crypto-policies(7) man page.

3.3. Setting up system-wide cryptographic policies in the web console

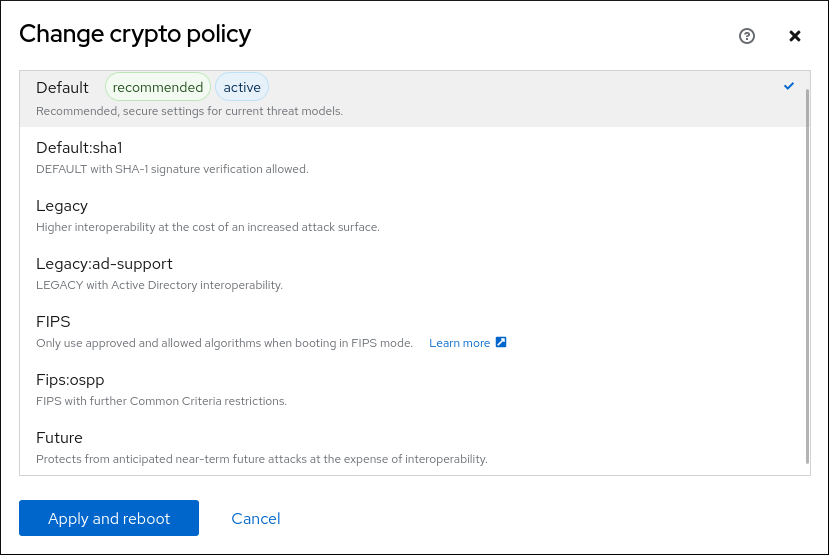

You can set one of the system-wide cryptographic policies and subpolicies directly in the RHEL web console interface. Besides the four predefined system-wide cryptographic policies, you can also apply the following combinations of policies and subpolicies through the graphical interface:

- DEFAULT:SHA1 is the DEFAULT policy with the SHA-1 algorithm enabled.

- LEGACY:AD-SUPPORT is the LEGACY policy with less secure settings that improve interoperability for Active Directory services.

- FIPS:OSPP is the FIPS policy with further restrictions inspired by the Common Criteria for Information Technology Security Evaluation standard.

Prerequisites

- The RHEL 9 web console has been installed. For details, see Installing and enabling the web console.

- You have root privileges or permissions to enter administrative commands with sudo.

Procedure

- Log in to the web console. For more information, see Logging in to the web console.

- In the Configuration card of the Overview page, click your current policy value next to Crypto policy.

- In the Change crypto policy dialog window, click on the policy you want to start using on your system.

- Click the Apply and reboot button.

Verification

- After the restart, log back in to the web console, and check that the Crypto policy value corresponds to the one you selected. Alternatively, you can enter the update-crypto-policies --show command to display the current system-wide cryptographic policy in your terminal.

3.4. Switching the system to FIPS mode

The system-wide cryptographic policies contain a policy level that enables cryptographic modules self-checks in accordance with the requirements by the Federal Information Processing Standard (FIPS) Publication 140-3. The fips-mode-setup tool that enables or disables FIPS mode internally uses the FIPS system-wide cryptographic policy level.

Red Hat recommends installing Red Hat Enterprise Linux 9 with FIPS mode enabled, as opposed to enabling FIPS mode later. Enabling FIPS mode during the installation ensures that the system generates all keys with FIPS-approved algorithms and continuous monitoring tests in place.

The cryptographic modules of RHEL 9 are not yet certified for the FIPS 140-3 requirements.

Procedure

- To switch the system to FIPS mode:

# fips-mode-setup --enable
Kernel initramdisks are being regenerated. This might take some time.
Setting system policy to FIPS
Note: System-wide crypto policies are applied on application start-up.
It is recommended to restart the system for the change of policies
to fully take place.
FIPS mode will be enabled.
Please reboot the system for the setting to take effect.

- Restart your system to allow the kernel to switch to FIPS mode:

# reboot

Verification

- After the restart, you can check the current state of FIPS mode:

# fips-mode-setup --check
FIPS mode is enabled.

Additional resources

- fips-mode-setup(8) man page

- Installing the system in FIPS mode

- Security Requirements for Cryptographic Modules on the National Institute of Standards and Technology (NIST) web site.

3.5. Enabling FIPS mode in a container

To enable the full set of cryptographic module self-checks mandated by the Federal Information Processing Standard Publication 140-2 (FIPS mode), the host system kernel must be running in FIPS mode. The podman utility automatically enables FIPS mode on supported containers.

The fips-mode-setup command does not work correctly in containers, and it cannot be used to enable or check FIPS mode in this scenario.

The cryptographic modules of RHEL 9 are not yet certified for the FIPS 140-3 requirements.

Prerequisites

- The host system must be in FIPS mode.

Procedure

- On systems with FIPS mode enabled, the podman utility automatically enables FIPS mode on supported containers.
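Because fips-mode-setup cannot check FIPS mode in a container, one possible approach is to read the kernel flag directly. The following is a minimal sketch, assuming that /proc/sys/crypto/fips_enabled is readable inside the container and reflects the state of the host kernel; the function name fips_kernel_state is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: report the kernel FIPS flag as enabled, disabled,
# or unknown. The optional first argument overrides the flag file path
# (assumption: the default path is visible inside the container).
fips_kernel_state() {
    flag_file="${1:-/proc/sys/crypto/fips_enabled}"
    if [ ! -r "$flag_file" ]; then
        echo "unknown"
        return 2
    fi
    if [ "$(cat "$flag_file")" = "1" ]; then
        echo "enabled"
    else
        echo "disabled"
        return 1
    fi
}
```

This reports only the kernel flag. It does not confirm that the cryptographic libraries inside a particular container image honor FIPS mode.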

Additional resources

3.6. List of RHEL applications using cryptography that is not compliant with FIPS 140-3

Red Hat recommends utilizing libraries from the core crypto components set, as they are guaranteed to pass all relevant crypto certifications, such as FIPS 140-3, and also follow the RHEL system-wide crypto policies.

See the RHEL core crypto components article for an overview of the core cryptographic components, information about how they are selected, how they are integrated into the operating system, how they support hardware security modules and smart cards, and how cryptographic certifications apply to them.

Table 3.1. List of RHEL 8 applications using cryptography that is not compliant with FIPS 140-3

| Application | Details |
|---|---|
| Bacula | Implements the CRAM-MD5 authentication protocol. |
| Cyrus SASL | Uses the SCRAM-SHA-1 authentication method. |
| Dovecot | Uses SCRAM-SHA-1. |
| Emacs | Uses SCRAM-SHA-1. |
| FreeRADIUS | Uses MD5 and SHA-1 for authentication protocols. |
| Ghostscript | Custom cryptography implementation (MD5, RC4, SHA-2, AES) to encrypt and decrypt documents. |
| GRUB2 | Supports legacy firmware protocols requiring SHA-1 and includes the |
| ipxe | Implements TLS stack. |
| Kerberos | Preserves support for SHA-1 (interoperability with Windows). |
| lasso | The |
| MariaDB, MariaDB Connector | The |
| MySQL | |
| OpenIPMI | The RAKP-HMAC-MD5 authentication method is not approved for FIPS usage and does not work in FIPS mode. |
| Ovmf (UEFI firmware), Edk2, shim | Full crypto stack (an embedded copy of the OpenSSL library). |
| perl-CPAN | Digest MD5 authentication. |
| perl-Digest-HMAC, perl-Digest-SHA | Uses HMAC, HMAC-SHA1, HMAC-MD5, SHA-1, SHA-224, and so on. |
| perl-Mail-DKIM | The Signer class uses the RSA-SHA1 algorithm by default. |
| PKCS #12 file processing (OpenSSL, GnuTLS, NSS, Firefox, Java) | All uses of PKCS #12 are not FIPS-compliant, because the Key Derivation Function (KDF) used for calculating the whole-file HMAC is not FIPS-approved. As such, PKCS #12 files are considered to be plain text for the purposes of FIPS compliance. For key-transport purposes, wrap PKCS #12 (.p12) files using a FIPS-approved encryption scheme. |
| Poppler | Can save PDFs with signatures, passwords, and encryption based on non-allowed algorithms if they are present in the original PDF (for example MD5, RC4, and SHA-1). |
| PostgreSQL | KDF uses SHA-1. |
| QAT Engine | Mixed hardware and software implementation of cryptographic primitives (RSA, EC, DH, AES, …) |
| Ruby | Provides insecure MD5 and SHA-1 library functions. |
| Samba | Preserves support for RC4 and DES (interoperability with Windows). |
| Syslinux | BIOS passwords use SHA-1. |
| Unbound | DNS specification requires that DNSSEC resolvers use a SHA-1-based algorithm in DNSKEY records for validation. |
| Valgrind | AES, SHA hashes.[a] |

[a] Re-implements in software hardware-offload operations, such as AES-NI or SHA-1 and SHA-2 on ARM.

3.7. Excluding an application from following system-wide crypto policies

You can customize cryptographic settings used by your application preferably by configuring supported cipher suites and protocols directly in the application.

You can also remove a symlink related to your application from the /etc/crypto-policies/back-ends directory and replace it with your customized cryptographic settings. This configuration prevents the use of system-wide cryptographic policies for applications that use the excluded back end. Furthermore, this modification is not supported by Red Hat.

3.7.1. Examples of opting out of system-wide crypto policies

wget

To customize cryptographic settings used by the wget network downloader, use --secure-protocol and --ciphers options. For example:

$ wget --secure-protocol=TLSv1_1 --ciphers="SECURE128" https://example.com

See the HTTPS (SSL/TLS) Options section of the wget(1) man page for more information.

curl

To specify ciphers used by the curl tool, use the --ciphers option and provide a colon-separated list of ciphers as a value. For example:

$ curl https://example.com --ciphers '@SECLEVEL=0:DES-CBC3-SHA:RSA-DES-CBC3-SHA'

See the curl(1) man page for more information.

Firefox

Even though you cannot opt out of system-wide cryptographic policies in the Firefox web browser, you can further restrict supported ciphers and TLS versions in Firefox’s Configuration Editor. Type about:config in the address bar and change the value of the security.tls.version.min option as required. Setting security.tls.version.min to 1 allows TLS 1.0 as the minimum required, security.tls.version.min 2 enables TLS 1.1, and so on.
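The numbering of security.tls.version.min can be summarized as a lookup. The following is a small sketch, assuming the usual Firefox mapping in which the values 3 and 4 continue the sequence as TLS 1.2 and TLS 1.3; the helper name tls_min_label is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: translate a security.tls.version.min value into the
# minimum TLS version it selects. Values 3 and 4 are an assumption that
# extends the sequence described in the text.
tls_min_label() {
    case "$1" in
        1) echo "TLS 1.0" ;;
        2) echo "TLS 1.1" ;;
        3) echo "TLS 1.2" ;;
        4) echo "TLS 1.3" ;;
        *) echo "unknown"; return 1 ;;
    esac
}
```

Because Firefox still follows the system-wide policy, lowering the preference below what the active policy allows is not expected to re-enable older TLS versions.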

OpenSSH

To opt out of system-wide crypto policies for your OpenSSH client, perform one of the following tasks:

- For a given user, override the global ssh_config with a user-specific configuration in the ~/.ssh/config file.

- For the entire system, specify the crypto policy in a drop-in configuration file located in the /etc/ssh/ssh_config.d/ directory, with a two-digit number prefix smaller than 50, so that it lexicographically precedes the 50-redhat.conf file, and with a .conf suffix, for example, 49-crypto-policy-override.conf.

See the ssh_config(5) man page for more information.
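The naming rule for the system-wide drop-in file can be checked before the file is created. The following is a minimal sketch, assuming that byte-wise (C locale) ordering matches the order in which the drop-in files are read; the helper name precedes_redhat_conf is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: succeed if a drop-in file name has the .conf suffix
# and sorts before 50-redhat.conf in byte-wise (C locale) order.
precedes_redhat_conf() {
    name="$1"
    case "$name" in
        *.conf) ;;             # drop-in files need the .conf suffix
        *)      return 1 ;;
    esac
    # Sort the candidate together with 50-redhat.conf and keep the first name.
    first="$(printf '%s\n' "$name" "50-redhat.conf" | LC_ALL=C sort | head -n 1)"
    [ "$first" = "$name" ] && [ "$name" != "50-redhat.conf" ]
}
```

For example, precedes_redhat_conf 49-crypto-policy-override.conf succeeds, while precedes_redhat_conf 60-local.conf fails.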

Libreswan

See the Configuring IPsec connections that opt out of the system-wide crypto policies section in the Securing networks document for detailed information.

Additional resources

- update-crypto-policies(8) man page

3.8. Customizing system-wide cryptographic policies with subpolicies

Use this procedure to adjust the set of enabled cryptographic algorithms or protocols.

You can either apply custom subpolicies on top of an existing system-wide cryptographic policy or define such a policy from scratch.

The concept of scoped policies allows enabling different sets of algorithms for different back ends. You can limit each configuration directive to specific protocols, libraries, or services.

Furthermore, directives can use asterisks for specifying multiple values using wildcards.

The /etc/crypto-policies/state/CURRENT.pol file lists all settings in the currently applied system-wide cryptographic policy after wildcard expansion. To make your cryptographic policy more strict, consider using values listed in the /usr/share/crypto-policies/policies/FUTURE.pol file.

You can find example subpolicies in the /usr/share/crypto-policies/policies/modules/ directory. The subpolicy files in this directory also contain descriptions in commented-out lines.

Procedure

- Change to the /etc/crypto-policies/policies/modules/ directory:

# cd /etc/crypto-policies/policies/modules/

- Create subpolicies for your adjustments, for example:

# touch MYCRYPTO-1.pmod
# touch SCOPES-AND-WILDCARDS.pmod

Important: Use upper-case letters in file names of policy modules.

- Open the policy modules in a text editor of your choice and insert options that modify the system-wide cryptographic policy, for example:

# vi MYCRYPTO-1.pmod

min_rsa_size = 3072
hash = SHA2-384 SHA2-512 SHA3-384 SHA3-512

# vi SCOPES-AND-WILDCARDS.pmod

# Disable the AES-128 cipher, all modes
cipher = -AES-128-*

# Disable CHACHA20-POLY1305 for the TLS protocol (OpenSSL, GnuTLS, NSS, and OpenJDK)
cipher@TLS = -CHACHA20-POLY1305

# Allow using the FFDHE-1024 group with the SSH protocol (libssh and OpenSSH)
group@SSH = FFDHE-1024+

# Disable all CBC mode ciphers for the SSH protocol (libssh and OpenSSH)
cipher@SSH = -*-CBC

# Allow the AES-256-CBC cipher in applications using libssh
cipher@libssh = AES-256-CBC+

- Save the changes in the module files.

- Apply your policy adjustments to the DEFAULT system-wide cryptographic policy level:

# update-crypto-policies --set DEFAULT:MYCRYPTO-1:SCOPES-AND-WILDCARDS

- To make your cryptographic settings effective for already running services and applications, restart the system:

# reboot

Verification

- Check that the /etc/crypto-policies/state/CURRENT.pol file contains your changes, for example:

$ cat /etc/crypto-policies/state/CURRENT.pol | grep rsa_size
min_rsa_size = 3072
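The grep check matches any line that contains rsa_size. As a slightly stricter sketch, the hypothetical helper pol_value below prints the value of exactly one key, assuming the key = value line format shown in the example output.

```shell
#!/bin/sh
# Hypothetical helper: print the value of one "key = value" setting from a
# policy file. The second argument defaults to the CURRENT.pol state file.
pol_value() {
    key="$1"
    pol_file="${2:-/etc/crypto-policies/state/CURRENT.pol}"
    # Match only lines that start with "<key> = ", so that min_rsa_size does
    # not also match a longer key that merely contains the same text.
    awk -v key="$key" '
        index($0, key " = ") == 1 { print substr($0, length(key) + 4); exit }
    ' "$pol_file"
}
```

For example, after applying the MYCRYPTO-1 subpolicy from this procedure, pol_value min_rsa_size is expected to print 3072.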

Additional resources

- Custom Policies section in the update-crypto-policies(8) man page

- Crypto Policy Definition Format section in the crypto-policies(7) man page

- How to customize crypto policies in RHEL 8.2 Red Hat blog article

3.9. Re-enabling SHA-1

The use of the SHA-1 algorithm for creating and verifying signatures is restricted in the DEFAULT cryptographic policy. If your scenario requires the use of SHA-1 for verifying existing or third-party cryptographic signatures, you can enable it by applying the SHA1 subpolicy, which RHEL 9 provides by default. Note that it weakens the security of the system.

Prerequisites

- The system uses the DEFAULT system-wide cryptographic policy.

Procedure

- Apply the SHA1 subpolicy to the DEFAULT cryptographic policy:

# update-crypto-policies --set DEFAULT:SHA1
Setting system policy to DEFAULT:SHA1
Note: System-wide crypto policies are applied on application start-up.
It is recommended to restart the system for the change of policies
to fully take place.

- Restart the system:

# reboot

Verification

- Display the current cryptographic policy:

# update-crypto-policies --show
DEFAULT:SHA1
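In scripts, the output of update-crypto-policies --show can be checked for a specific subpolicy. The following is a minimal sketch, assuming the colon-separated POLICY:SUBPOLICY form shown in the output above; the helper name has_subpolicy is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: succeed if the policy string in $1 (for example
# DEFAULT:SHA1) includes the subpolicy named in $2. The first
# colon-separated field is the base policy and is never treated as a subpolicy.
has_subpolicy() {
    policy="$1"
    wanted="$2"
    case "$policy" in
        *:*) subs="${policy#*:}" ;;   # strip the base policy
        *)   return 1 ;;              # no subpolicies applied
    esac
    # Surround the list with colons so that only whole names match.
    case ":$subs:" in
        *":$wanted:"*) return 0 ;;
        *)             return 1 ;;
    esac
}
```

A possible use is: has_subpolicy "$(update-crypto-policies --show)" SHA1 && echo "SHA1 subpolicy is applied"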

Switching to the LEGACY cryptographic policy by using the update-crypto-policies --set LEGACY command also enables SHA-1 for signatures. However, the LEGACY cryptographic policy makes your system much more vulnerable by also enabling other weak cryptographic algorithms. Use this workaround only for scenarios that require the enablement of other legacy cryptographic algorithms than SHA-1 signatures.

Additional resources

3.10. Creating and setting a custom system-wide cryptographic policy

The following steps demonstrate customizing the system-wide cryptographic policies by a complete policy file.

Procedure

Create a policy file for your customizations:

# cd /etc/crypto-policies/policies/
# touch MYPOLICY.pol

Alternatively, start by copying one of the four predefined policy levels:

# cp /usr/share/crypto-policies/policies/DEFAULT.pol /etc/crypto-policies/policies/MYPOLICY.pol

Edit the file with your custom cryptographic policy in a text editor of your choice to fit your requirements, for example:

# vi /etc/crypto-policies/policies/MYPOLICY.pol

Switch the system-wide cryptographic policy to your custom level:

# update-crypto-policies --set MYPOLICY

To make your cryptographic settings effective for already running services and applications, restart the system:

# reboot

Additional resources

- Custom Policies section in the update-crypto-policies(8) man page and the Crypto Policy Definition Format section in the crypto-policies(7) man page

- How to customize crypto policies in RHEL Red Hat blog article

Chapter 4. Setting a custom cryptographic policy by using the crypto-policies RHEL System Role

As an administrator, you can use the crypto_policies RHEL System Role to quickly and consistently configure custom cryptographic policies across many different systems by using the Ansible Core package.

4.1. crypto_policies System Role variables and facts

In a crypto_policies System Role playbook, you can define the parameters for the crypto_policies configuration file according to your preferences and limitations.

If you do not configure any variables, the system role does not configure the system and only reports the facts.

Selected variables for the crypto_policies System Role

crypto_policies_policy - Determines the cryptographic policy the system role applies to the managed nodes. For details about the different cryptographic policies, see System-wide cryptographic policies.

crypto_policies_reload - If set to yes, the affected services, currently the ipsec, bind, and sshd services, reload after applying a cryptographic policy. Defaults to yes.

crypto_policies_reboot_ok - If set to yes, and a reboot is necessary after the system role changes the cryptographic policy, it sets crypto_policies_reboot_required to yes. Defaults to no.

Facts set by the crypto_policies System Role

crypto_policies_active - Lists the currently selected policy.

crypto_policies_available_policies - Lists all policies available on the system.

crypto_policies_available_subpolicies - Lists all subpolicies available on the system.


4.2. Setting a custom cryptographic policy by using the crypto_policies System Role

You can use the crypto_policies System Role to configure a large number of managed nodes consistently from a single control node.

Prerequisites

- Access and permissions to one or more managed nodes, which are systems you want to configure with the crypto_policies System Role.

- Access and permissions to a control node, which is a system from which Red Hat Ansible Core configures other systems.

On the control node:

- The ansible-core and rhel-system-roles packages are installed.

RHEL 8.0-8.5 provided access to a separate Ansible repository that contains Ansible Engine 2.9 for automation based on Ansible. Ansible Engine contains command-line utilities such as ansible, ansible-playbook, connectors such as docker and podman, and many plugins and modules. For information about how to obtain and install Ansible Engine, see the How to download and install Red Hat Ansible Engine Knowledgebase article.

RHEL 8.6 and 9.0 introduced Ansible Core (provided as the ansible-core package), which contains the Ansible command-line utilities, commands, and a small set of built-in Ansible plugins. RHEL provides this package through the AppStream repository, and it has a limited scope of support. For more information, see the Scope of support for the Ansible Core package included in the RHEL 9 and RHEL 8.6 and later AppStream repositories Knowledgebase article.

- An inventory file that lists the managed nodes.

Procedure

Create a new playbook.yml file with the following content:

---
- hosts: all
  tasks:
  - name: Configure crypto policies
    include_role:
      name: rhel-system-roles.crypto_policies
    vars:
      - crypto_policies_policy: FUTURE
      - crypto_policies_reboot_ok: true

You can replace the FUTURE value with your preferred cryptographic policy, for example: DEFAULT, LEGACY, and FIPS:OSPP.

The crypto_policies_reboot_ok: true variable causes the system to reboot after the system role changes the cryptographic policy.

For more details, see crypto_policies System Role variables and facts.

Optional: Verify the playbook syntax:

# ansible-playbook --syntax-check playbook.yml

Run the playbook on your inventory file:

# ansible-playbook -i inventory_file playbook.yml

Verification

On the control node, create another playbook named, for example, verify_playbook.yml:

- hosts: all
  tasks:
  - name: Verify active crypto policy
    include_role:
      name: rhel-system-roles.crypto_policies

  - debug:
      var: crypto_policies_active

This playbook does not change any configuration on the system. It only reports the active policy on the managed nodes.

Run the playbook on the same inventory file:

# ansible-playbook -i inventory_file verify_playbook.yml
TASK [debug] **************************
ok: [host] => {
    "crypto_policies_active": "FUTURE"
}

The "crypto_policies_active": variable shows the policy that is active on the managed node.

4.3. Additional resources

- /usr/share/ansible/roles/rhel-system-roles.crypto_policies/README.md file.

- ansible-playbook(1) man page.

- Preparing a control node and managed nodes to use RHEL System Roles.

Chapter 5. Configuring applications to use cryptographic hardware through PKCS #11

Separating parts of your secret information on dedicated cryptographic devices, such as smart cards and cryptographic tokens for end-user authentication and hardware security modules (HSM) for server applications, provides an additional layer of security. In RHEL, support for cryptographic hardware through the PKCS #11 API is consistent across different applications, and the isolation of secrets on cryptographic hardware is not a complicated task.

5.1. Cryptographic hardware support through PKCS #11

PKCS #11 (Public-Key Cryptography Standard) defines an application programming interface (API) to cryptographic devices that hold cryptographic information and perform cryptographic functions. These devices are called tokens, and they can be implemented in a hardware or software form.

A PKCS #11 token can store various object types including a certificate; a data object; and a public, private, or secret key. These objects are uniquely identifiable through the PKCS #11 URI scheme.

A PKCS #11 URI is a standard way to identify a specific object in a PKCS #11 module according to the object attributes. This enables you to configure all libraries and applications with the same configuration string in the form of a URI.
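To make the URI structure concrete, the following minimal sketch splits a PKCS #11 URI into its attributes. It assumes the layout defined in RFC 7512: path attributes separated by semicolons, an optional query part after a question mark with attributes separated by ampersands, and %XX escapes in values. The pct_decode and pkcs11_attrs function names are hypothetical, and error handling is intentionally minimal.

```shell
#!/bin/bash
# Sketch: list the attributes of a PKCS #11 URI as "name=value" lines.
# Both function names are hypothetical helpers, not part of p11-kit.

# Decode %XX escapes, for example "SSH%20key" becomes "SSH key".
# Binary values such as id=%01 decode to raw bytes.
pct_decode() {
    printf '%b' "${1//%/\\x}"
}

pkcs11_attrs() {
    local uri=${1#pkcs11:}
    local path=${uri%%\?*} query=""
    local -a attrs
    local attr
    if [[ $uri == *\?* ]]; then
        query=${uri#*\?}
    fi
    # Path attributes identify the object (token, object, id, type, ...).
    IFS=';' read -r -a attrs <<< "$path"
    for attr in "${attrs[@]}"; do
        if [ -n "$attr" ]; then
            printf '%s=%s\n' "${attr%%=*}" "$(pct_decode "${attr#*=}")"
        fi
    done
    # Query attributes carry module or PIN hints (module-path, pin-source, ...).
    IFS='&' read -r -a attrs <<< "$query"
    for attr in "${attrs[@]}"; do
        if [ -n "$attr" ]; then
            printf '%s=%s\n' "${attr%%=*}" "$(pct_decode "${attr#*=}")"
        fi
    done
    return 0
}
```

For example, calling pkcs11_attrs with 'pkcs11:token=SSH%20key;object=SIGN%20pubkey' prints one line for the token attribute and one for the object attribute, with the spaces decoded.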

RHEL provides the OpenSC PKCS #11 driver for smart cards by default. However, hardware tokens and HSMs can have their own PKCS #11 modules that do not have their counterpart in the system. You can register such PKCS #11 modules with the p11-kit tool, which acts as a wrapper over the registered smart-card drivers in the system.


You can add your own PKCS #11 module into the system by creating a new text file in the /etc/pkcs11/modules/ directory. For example, the OpenSC configuration file in p11-kit looks as follows:

$ cat /usr/share/p11-kit/modules/opensc.module
module: opensc-pkcs11.so

5.2. Using SSH keys stored on a smart card

Red Hat Enterprise Linux enables you to use RSA and ECDSA keys stored on a smart card on OpenSSH clients. Use this procedure to enable authentication by using a smart card instead of a password.

Prerequisites

- On the client side, the opensc package is installed and the pcscd service is running.

Procedure

List all keys provided by the OpenSC PKCS #11 module, including their PKCS #11 URIs, and save the output to the keys.pub file:

$ ssh-keygen -D pkcs11: > keys.pub
$ ssh-keygen -D pkcs11:
ssh-rsa AAAAB3NzaC1yc2E...KKZMzcQZzx pkcs11:id=%02;object=SIGN%20pubkey;token=SSH%20key;manufacturer=piv_II?module-path=/usr/lib64/pkcs11/opensc-pkcs11.so
ecdsa-sha2-nistp256 AAA...J0hkYnnsM= pkcs11:id=%01;object=PIV%20AUTH%20pubkey;token=SSH%20key;manufacturer=piv_II?module-path=/usr/lib64/pkcs11/opensc-pkcs11.so

To enable authentication by using a smart card on a remote server (example.com), transfer the public key to the remote server. Use the ssh-copy-id command with the keys.pub file created in the previous step:

$ ssh-copy-id -f -i keys.pub username@example.com

To connect to example.com by using the ECDSA key from the output of the ssh-keygen -D command in the first step, you can use just a subset of the URI that uniquely references your key, for example:

$ ssh -i "pkcs11:id=%01?module-path=/usr/lib64/pkcs11/opensc-pkcs11.so" example.com
Enter PIN for 'SSH key':
[example.com] $

You can use the same URI string in the ~/.ssh/config file to make the configuration permanent:

$ cat ~/.ssh/config
IdentityFile "pkcs11:id=%01?module-path=/usr/lib64/pkcs11/opensc-pkcs11.so"
$ ssh example.com
Enter PIN for 'SSH key':
[example.com] $

Because OpenSSH uses the p11-kit-proxy wrapper and the OpenSC PKCS #11 module is registered to PKCS#11 Kit, you can simplify the previous commands:

$ ssh -i "pkcs11:id=%01" example.com
Enter PIN for 'SSH key':
[example.com] $

If you skip the id= part of a PKCS #11 URI, OpenSSH loads all keys that are available in the proxy module. This can reduce the amount of typing required:

$ ssh -i pkcs11: example.com
Enter PIN for 'SSH key':
[example.com] $

Additional resources

- Fedora 28: Better smart card support in OpenSSH

- p11-kit(8), opensc.conf(5), pcscd(8), ssh(1), and ssh-keygen(1) man pages

5.3. Configuring applications to authenticate using certificates from smart cards

Authentication using smart cards in applications may increase security and simplify automation.

- The wget network downloader enables you to specify PKCS #11 URIs instead of paths to locally stored private keys, and thus simplifies creating scripts for tasks that require safely stored private keys and certificates. For example:

$ wget --private-key 'pkcs11:token=softhsm;id=%01;type=private?pin-value=111111' --certificate 'pkcs11:token=softhsm;id=%01;type=cert' https://example.com/

See the wget(1) man page for more information.

- Specifying PKCS #11 URI for use by the curl tool is analogous:

$ curl --key 'pkcs11:token=softhsm;id=%01;type=private?pin-value=111111' --cert 'pkcs11:token=softhsm;id=%01;type=cert' https://example.com/

See the curl(1) man page for more information.

Note: Because a PIN is a security measure that controls access to keys stored on a smart card and the configuration file contains the PIN in the plain-text form, consider additional protection to prevent an attacker from reading the PIN. For example, you can use the pin-source attribute and provide a file: URI for reading the PIN from a file. See RFC 7512: PKCS #11 URI Scheme Query Attribute Semantics for more information. Note that using a command path as a value of the pin-source attribute is not supported.

- The Firefox web browser automatically loads the p11-kit-proxy module. This means that every supported smart card in the system is automatically detected. For using TLS client authentication, no additional setup is required and keys from a smart card are automatically used when a server requests them.
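As an illustration of the pin-source recommendation in the note above, the following minimal sketch stores a PIN in a file that only its owner can read and builds a private-key URI that refers to that file instead of embedding the PIN. The function names, the token name, and the file path are assumptions made for this example. Whether a given application honors pin-source depends on the PKCS #11 support of the library it uses.

```shell
#!/bin/bash
# Sketch: use pin-source=file:... instead of pin-value=... in a URI.
# write_pin_file and key_uri_with_pin_source are hypothetical helpers.

# Store the PIN in a file readable and writable by the owner only.
write_pin_file() {
    local pin_file=$1 pin=$2
    (
        umask 077
        printf '%s' "$pin" > "$pin_file" && chmod 600 "$pin_file"
    )
}

# Build a private-key URI that reads the PIN from the given file.
key_uri_with_pin_source() {
    local token=$1 id=$2 pin_file=$3
    printf 'pkcs11:token=%s;id=%s;type=private?pin-source=file:%s\n' \
        "$token" "$id" "$pin_file"
}

# Possible use (not executed here; the path is illustrative):
#   write_pin_file "$HOME/.config/token.pin" 111111
#   curl --key "$(key_uri_with_pin_source softhsm %01 "$HOME/.config/token.pin")" \
#        --cert 'pkcs11:token=softhsm;id=%01;type=cert' https://example.com/
```

Keeping the PIN in a separate file means that the URI can appear in scripts, configuration files, and process listings without exposing the PIN itself.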

Using PKCS #11 URIs in custom applications

If your application uses the GnuTLS or NSS library, support for PKCS #11 URIs is ensured by their built-in support for PKCS #11. Also, applications relying on the OpenSSL library can access cryptographic hardware modules thanks to the openssl-pkcs11 engine.

With applications that require working with private keys on smart cards and that do not use NSS, GnuTLS, or OpenSSL, use p11-kit to implement registering PKCS #11 modules.

Additional resources

- p11-kit(8) man page.

5.4. Using HSMs protecting private keys in Apache

The Apache HTTP server can work with private keys stored on hardware security modules (HSMs), which helps to prevent the keys' disclosure and man-in-the-middle attacks. Note that this usually requires high-performance HSMs for busy servers.

For secure communication in the form of the HTTPS protocol, the Apache HTTP server (httpd) uses the OpenSSL library. OpenSSL does not support PKCS #11 natively. To use HSMs, you have to install the openssl-pkcs11 package, which provides access to PKCS #11 modules through the engine interface. You can use a PKCS #11 URI instead of a regular file name to specify a server key and a certificate in the /etc/httpd/conf.d/ssl.conf configuration file, for example:

SSLCertificateFile    "pkcs11:id=%01;token=softhsm;type=cert"
SSLCertificateKeyFile "pkcs11:id=%01;token=softhsm;type=private?pin-value=111111"

Install the httpd-manual package to obtain complete documentation for the Apache HTTP Server, including TLS configuration. The directives available in the /etc/httpd/conf.d/ssl.conf configuration file are described in detail in the /usr/share/httpd/manual/mod/mod_ssl.html file.

5.5. Using HSMs protecting private keys in Nginx

The Nginx HTTP server can work with private keys stored on hardware security modules (HSMs), which helps to prevent the keys' disclosure and man-in-the-middle attacks. Note that this usually requires high-performance HSMs for busy servers.

Because Nginx also uses the OpenSSL for cryptographic operations, support for PKCS #11 must go through the openssl-pkcs11 engine. Nginx currently supports only loading private keys from an HSM, and a certificate must be provided separately as a regular file. Modify the ssl_certificate and ssl_certificate_key options in the server section of the /etc/nginx/nginx.conf configuration file:

ssl_certificate     /path/to/cert.pem;
ssl_certificate_key "engine:pkcs11:pkcs11:token=softhsm;id=%01;type=private?pin-value=111111";

Note that the engine:pkcs11: prefix is needed for the PKCS #11 URI in the Nginx configuration file. This is because the other pkcs11 prefix refers to the engine name.

5.6. Additional resources

- pkcs11.conf(5) man page.

Chapter 6. Controlling access to smart cards using polkit

To cover possible threats that cannot be prevented by mechanisms built into smart cards, such as PINs, PIN pads, and biometrics, and for more fine-grained control, RHEL uses the polkit framework for controlling access to smart cards.

System administrators can configure polkit to fit specific scenarios, such as smart-card access for non-privileged or non-local users or services.

6.1. Smart-card access control through polkit

The Personal Computer/Smart Card (PC/SC) protocol specifies a standard for integrating smart cards and their readers into computing systems. In RHEL, the pcsc-lite package provides middleware to access smart cards that use the PC/SC API. A part of this package, the pcscd (PC/SC Smart Card) daemon, ensures that the system can access a smart card using the PC/SC protocol.

Because access-control mechanisms built into smart cards, such as PINs, PIN pads, and biometrics, do not cover all possible threats, RHEL uses the polkit framework for more robust access control. The polkit authorization manager can grant access to privileged operations. In addition to granting access to disks, you can use polkit also to specify policies for securing smart cards. For example, you can define which users can perform which operations with a smart card.

After installing the pcsc-lite package and starting the pcscd daemon, the system enforces policies defined in the /usr/share/polkit-1/actions/ directory. The default system-wide policy is in the /usr/share/polkit-1/actions/org.debian.pcsc-lite.policy file. Polkit policy files use the XML format and the syntax is described in the polkit(8) man page.

The polkitd service monitors the /etc/polkit-1/rules.d/ and /usr/share/polkit-1/rules.d/ directories for any changes in rule files stored in these directories. The files contain authorization rules in JavaScript format. System administrators can add custom rule files in both directories, and polkitd reads them in lexical order based on their file name. If two files have the same names, then the file in /etc/polkit-1/rules.d/ is read first.
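The read order described above can be previewed with a short script. The following is a minimal sketch, assuming that lexical order means byte-wise ordering of file names and that rule files end in .rules. The rules_read_order function is a hypothetical helper and does not query polkitd itself.

```shell
#!/bin/bash
# Sketch: print rule files from two directories sorted by file name,
# with files from the first directory listed first when names are equal.
# rules_read_order is a hypothetical helper, not part of polkit.
rules_read_order() {
    local etc_dir=$1 usr_dir=$2 f
    {
        for f in "$etc_dir"/*.rules; do
            # Skip the unexpanded pattern when the directory has no rule files.
            if [ -e "$f" ]; then
                printf '%s\t0\t%s\n' "${f##*/}" "$f"
            fi
        done
        for f in "$usr_dir"/*.rules; do
            if [ -e "$f" ]; then
                printf '%s\t1\t%s\n' "${f##*/}" "$f"
            fi
        done
    } | LC_ALL=C sort -t $'\t' -k1,1 -k2,2n | cut -f3
}

# Possible use on a real system (not executed here):
#   rules_read_order /etc/polkit-1/rules.d /usr/share/polkit-1/rules.d
```

Listing the files this way can help when choosing a numeric prefix for a custom rule file, because it shows where that file falls relative to the rules shipped by packages.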

Additional resources

- polkit(8), polkitd(8), and pcscd(8) man pages.

6.2. Troubleshooting problems related to PC/SC and polkit

Polkit policies that are automatically enforced after you install the pcsc-lite package and start the pcscd daemon may ask for authentication in the user’s session even if the user does not directly interact with a smart card. In GNOME, you can see the following error message:

Authentication is required to access the PC/SC daemon

Note that the system can install the pcsc-lite package as a dependency when you install other packages related to smart cards such as opensc.

If your scenario does not require any interaction with smart cards and you want to prevent displaying authorization requests for the PC/SC daemon, you can remove the pcsc-lite package. Keeping the minimum of necessary packages is a good security practice anyway.

If you use smart cards, start troubleshooting by checking the rules in the system-provided policy file at /usr/share/polkit-1/actions/org.debian.pcsc-lite.policy. You can add your custom rule files to the policy in the /etc/polkit-1/rules.d/ directory, for example, 03-allow-pcscd.rules. Note that the rule files use the JavaScript syntax, whereas the policy file is in the XML format.

To understand what authorization requests the system displays, check the Journal log, for example:

$ journalctl -b | grep pcsc

...

Process 3087 (user: 1001) is NOT authorized for action: access_pcsc

...

The previous log entry means that the user is not authorized to perform an action by the policy. You can solve this denial by adding a corresponding rule to /etc/polkit-1/rules.d/.
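When many such entries appear, it can help to extract the process ID, user ID, and action from each one before writing a rule. The following minimal sketch assumes that denial lines have exactly the wording shown in the log entry above. The parse_pcsc_denial function name is hypothetical.

```shell
#!/bin/bash
# Sketch: extract the PID, UID, and action from a denial line such as
# "Process 3087 (user: 1001) is NOT authorized for action: access_pcsc".
# parse_pcsc_denial is a hypothetical helper name.
parse_pcsc_denial() {
    local line=$1
    local re='Process ([0-9]+) \(user: ([0-9]+)\) is NOT authorized for action: ([A-Za-z_]+)'
    if [[ $line =~ $re ]]; then
        printf 'pid=%s uid=%s action=%s\n' \
            "${BASH_REMATCH[1]}" "${BASH_REMATCH[2]}" "${BASH_REMATCH[3]}"
    else
        # The line is not a denial entry in the expected format.
        return 1
    fi
}

# Possible use on a real system (not executed here):
#   journalctl -b | grep pcsc | while IFS= read -r line; do
#       parse_pcsc_denial "$line"
#   done
```

The extracted user ID can then be mapped to a user name, for example with getent passwd, to decide which users or groups a custom rule should cover.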

You can also search for log entries related to the polkitd unit, for example:

$ journalctl -u polkit

...

polkitd[NNN]: Error compiling script /etc/polkit-1/rules.d/00-debug-pcscd.rules

...

polkitd[NNN]: Operator of unix-session:c2 FAILED to authenticate to gain authorization for action org.debian.pcsc-lite.access_pcsc for unix-process:4800:14441 [/usr/libexec/gsd-smartcard] (owned by unix-user:group)

...

In the previous output, the first entry means that the rule file contains a syntax error. The second entry means that the user failed to gain access to pcscd.

You can also list all applications that use the PC/SC protocol by using a short script. Create an executable file, for example, pcsc-apps.sh, and insert the following code:

#!/bin/bash

cd /proc

# Check every numeric directory, one per running process.
for p in [0-9]*
do
	# A process uses PC/SC if it has the pcsc-lite client library mapped.
	if grep libpcsclite.so.1.0.0 "$p/maps" &> /dev/null
	then
		echo -n "process: "
		cat "$p/cmdline"
		echo " ($p)"
	fi
done

Run the script as root:

# ./pcsc-apps.sh

process: /usr/libexec/gsd-smartcard (3048)

enable-sync --auto-ssl-client-auth --enable-crashpad (4828)

...

Additional resources

- journalctl, polkit(8), polkitd(8), and pcscd(8) man pages.

6.3. Displaying more detailed information about polkit authorization to PC/SC

In the default configuration, the polkit authorization framework sends only limited information to the Journal log. You can extend polkit log entries related to the PC/SC protocol by adding new rules.

Prerequisites

- You have installed the pcsc-lite package on your system.

- The pcscd daemon is running.

Procedure

Create a new file in the /etc/polkit-1/rules.d/ directory:

# touch /etc/polkit-1/rules.d/00-test.rules

Edit the file in an editor of your choice, for example:

# vi /etc/polkit-1/rules.d/00-test.rules

Insert the following lines:

polkit.addRule(function(action, subject) {
  if (action.id == "org.debian.pcsc-lite.access_pcsc" ||
      action.id == "org.debian.pcsc-lite.access_card") {
    polkit.log("action=" + action);
    polkit.log("subject=" + subject);
  }
});

Save the file, and exit the editor.

Restart the pcscd and polkit services:

# systemctl restart pcscd.service pcscd.socket polkit.service

Verification

Make an authorization request for pcscd. For example, open the Firefox web browser or use the pkcs11-tool -L command provided by the opensc package.

Display the extended log entries, for example:

# journalctl -u polkit --since "1 hour ago"
polkitd[1224]: <no filename>:4: action=[Action id='org.debian.pcsc-lite.access_pcsc']
polkitd[1224]: <no filename>:5: subject=[Subject pid=2020481 user='user' groups=user,wheel,mock,wireshark seat=null session=null local=true active=true]

Additional resources

- polkit(8) and polkitd(8) man pages.

6.4. Additional resources

- Controlling access to smart cards Red Hat Blog article.

Chapter 7. Scanning the system for configuration compliance and vulnerabilities

A compliance audit is a process of determining whether a given object follows all the rules specified in a compliance policy. The compliance policy is defined by security professionals who specify the required settings, often in the form of a checklist, that a computing environment should use.

Compliance policies can vary substantially across organizations and even across different systems within the same organization. Differences among these policies are based on the purpose of each system and its importance for the organization. Custom software settings and deployment characteristics also raise a need for custom policy checklists.

7.1. Configuration compliance tools in RHEL

Red Hat Enterprise Linux provides tools that enable you to perform a fully automated compliance audit. These tools are based on the Security Content Automation Protocol (SCAP) standard and are designed for automated tailoring of compliance policies.

- SCAP Workbench - The scap-workbench graphical utility is designed to perform configuration and vulnerability scans on a single local or remote system. You can also use it to generate security reports based on these scans and evaluations.

- OpenSCAP - The OpenSCAP library, with the accompanying oscap command-line utility, is designed to perform configuration and vulnerability scans on a local system, to validate configuration compliance content, and to generate reports and guides based on these scans and evaluations.

You can experience memory-consumption problems while using OpenSCAP, which can cause the program to stop prematurely and prevent it from generating any result files. See the OpenSCAP memory-consumption problems Knowledgebase article for details.

- SCAP Security Guide (SSG) - The scap-security-guide package provides the latest collection of security policies for Linux systems. The guidance consists of a catalog of practical hardening advice, linked to government requirements where applicable. The project bridges the gap between generalized policy requirements and specific implementation guidelines.

- Script Check Engine (SCE) - SCE is an extension to the SCAP protocol that enables administrators to write their security content using a scripting language, such as Bash, Python, and Ruby. The SCE extension is provided in the openscap-engine-sce package. The SCE itself is not part of the SCAP standard.

To perform automated compliance audits on multiple systems remotely, you can use the OpenSCAP solution for Red Hat Satellite.

Additional resources

- oscap(8), scap-workbench(8), and scap-security-guide(8) man pages

- Red Hat Security Demos: Creating Customized Security Policy Content to Automate Security Compliance

- Red Hat Security Demos: Defend Yourself with RHEL Security Technologies

- Security Compliance Management in the Administering Red Hat Satellite Guide.

7.2. Vulnerability scanning

7.2.1. Red Hat Security Advisories OVAL feed

Red Hat Enterprise Linux security auditing capabilities are based on the Security Content Automation Protocol (SCAP) standard. SCAP is a multi-purpose framework of specifications that supports automated configuration, vulnerability and patch checking, technical control compliance activities, and security measurement.

SCAP specifications create an ecosystem where the format of security content is well-known and standardized although the implementation of the scanner or policy editor is not mandated. This enables organizations to build their security policy (SCAP content) once, no matter how many security vendors they employ.

The Open Vulnerability Assessment Language (OVAL) is the essential and oldest component of SCAP. Unlike other tools and custom scripts, OVAL describes a required state of resources in a declarative manner. OVAL code is never executed directly. Instead, it is evaluated by an OVAL interpreter tool called a scanner. The declarative nature of OVAL ensures that the state of the assessed system is not accidentally modified.

Like all other SCAP components, OVAL is based on XML. The SCAP standard defines several document formats. Each of them includes a different kind of information and serves a different purpose.

Red Hat Product Security helps customers evaluate and manage risk by tracking and investigating all security issues affecting Red Hat customers. It provides timely and concise patches and security advisories on the Red Hat Customer Portal. Red Hat creates and supports OVAL patch definitions, providing machine-readable versions of our security advisories.

Because of differences between platforms, versions, and other factors, Red Hat Product Security qualitative severity ratings of vulnerabilities do not directly align with the Common Vulnerability Scoring System (CVSS) baseline ratings provided by third parties. Therefore, we recommend that you use the RHSA OVAL definitions instead of those provided by third parties.

The RHSA OVAL definitions are available individually and as a complete package, and are updated within an hour of a new security advisory being made available on the Red Hat Customer Portal.

Each OVAL patch definition maps one-to-one to a Red Hat Security Advisory (RHSA). Because an RHSA can contain fixes for multiple vulnerabilities, each vulnerability is listed separately by its Common Vulnerabilities and Exposures (CVE) name and has a link to its entry in our public bug database.

The RHSA OVAL definitions are designed to check for vulnerable versions of RPM packages installed on a system. It is possible to extend these definitions to include further checks, for example, to find out if the packages are being used in a vulnerable configuration. These definitions are designed to cover software and updates shipped by Red Hat. Additional definitions are required to detect the patch status of third-party software.

The Red Hat Insights for Red Hat Enterprise Linux compliance service helps IT security and compliance administrators to assess, monitor, and report on the security policy compliance of Red Hat Enterprise Linux systems. You can also create and manage your SCAP security policies entirely within the compliance service UI.


7.2.2. Scanning the system for vulnerabilities

The oscap command-line utility enables you to scan local systems, validate configuration compliance content, and generate reports and guides based on these scans and evaluations. This utility serves as a front end to the OpenSCAP library and groups its functionalities to modules (sub-commands) based on the type of SCAP content it processes.

Prerequisites

- The openscap-scanner and bzip2 packages are installed.

Procedure

Download the latest RHSA OVAL definitions for your system:

# wget -O - https://www.redhat.com/security/data/oval/v2/RHEL9/rhel-9.oval.xml.bz2 | bzip2 --decompress > rhel-9.oval.xml

Scan the system for vulnerabilities and save results to the vulnerability.html file:

# oscap oval eval --report vulnerability.html rhel-9.oval.xml

Verification

Check the results in a browser of your choice, for example:

$ firefox vulnerability.html &

Additional resources

- oscap(8) man page

- Red Hat OVAL definitions

- OpenSCAP memory consumption problems

7.2.3. Scanning remote systems for vulnerabilities

You can also check remote systems for vulnerabilities with the OpenSCAP scanner by using the oscap-ssh tool over the SSH protocol.

Prerequisites

- The openscap-utils and bzip2 packages are installed on the system you use for scanning.

- The openscap-scanner package is installed on the remote systems.

- The SSH server is running on the remote systems.

Procedure

Download the latest RHSA OVAL definitions for your system:

# wget -O - https://www.redhat.com/security/data/oval/v2/RHEL9/rhel-9.oval.xml.bz2 | bzip2 --decompress > rhel-9.oval.xml

Scan a remote system with the machine1 host name, SSH running on port 22, and the joesec user name for vulnerabilities and save results to the remote-vulnerability.html file:

# oscap-ssh joesec@machine1 22 oval eval --report remote-vulnerability.html rhel-9.oval.xml
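To scan several remote systems with the same definitions, the command above can be generated once per host. The following minimal sketch only prints the commands so that you can review them before running anything. The remote_scan_cmds function name, the input format of one "user@host port" pair per line, and the per-host report file names are assumptions made for this example.

```shell
#!/bin/bash
# Sketch: print one oscap-ssh command per target read from standard input.
# Each input line is "user@host [port]"; the port defaults to 22.
# remote_scan_cmds is a hypothetical helper and does not run any scan.
remote_scan_cmds() {
    local oval=$1 target port
    while read -r target port; do
        # Ignore empty lines in the target list.
        if [ -z "$target" ]; then
            continue
        fi
        # Name each report after the host part of the target.
        printf 'oscap-ssh %s %s oval eval --report %s-vulnerability.html %s\n' \
            "$target" "${port:-22}" "${target#*@}" "$oval"
    done
}

# Possible use (not executed here; targets.txt is an illustrative file name):
#   remote_scan_cmds rhel-9.oval.xml < targets.txt
```

Printing the commands instead of executing them keeps the sketch free of side effects. After reviewing the output, you can run the commands manually or pass them to a shell.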


7.3. Configuration compliance scanning

7.3.1. Configuration compliance in RHEL

You can use configuration compliance scanning to conform to a baseline defined by a specific organization. For example, if you work with the US government, you might have to align your systems with the Operating System Protection Profile (OSPP), and if you are a payment processor, you might have to align your systems with the Payment Card Industry Data Security Standard (PCI-DSS). You can also perform configuration compliance scanning to harden your system security.

Red Hat recommends you follow the Security Content Automation Protocol (SCAP) content provided in the SCAP Security Guide package because it is in line with Red Hat best practices for affected components.

The SCAP Security Guide package provides content which conforms to the SCAP 1.2 and SCAP 1.3 standards. The openscap scanner utility is compatible with both SCAP 1.2 and SCAP 1.3 content provided in the SCAP Security Guide package.

Performing a configuration compliance scan does not guarantee that the system is compliant.

The SCAP Security Guide suite provides profiles for several platforms in the form of data stream documents. A data stream is a file that contains definitions, benchmarks, profiles, and individual rules. Each rule specifies the applicability and requirements for compliance. RHEL provides several profiles for compliance with security policies. In addition to the industry standard, Red Hat data streams also contain information for remediation of failed rules.

Structure of compliance scanning resources

Data stream
├── xccdf
|   ├── benchmark
|       ├── profile
|       |    ├──rule reference
|       |    └──variable
|       ├── rule
|           ├── human readable data
|           ├── oval reference
├── oval          ├── ocil reference
├── ocil          ├── cpe reference
└── cpe           └── remediation

A profile is a set of rules based on a security policy, such as OSPP, PCI-DSS, and Health Insurance Portability and Accountability Act (HIPAA). This enables you to audit the system in an automated way for compliance with security standards.

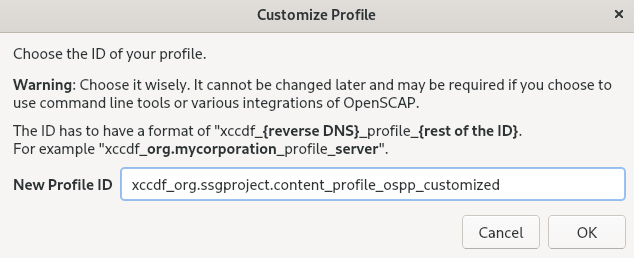

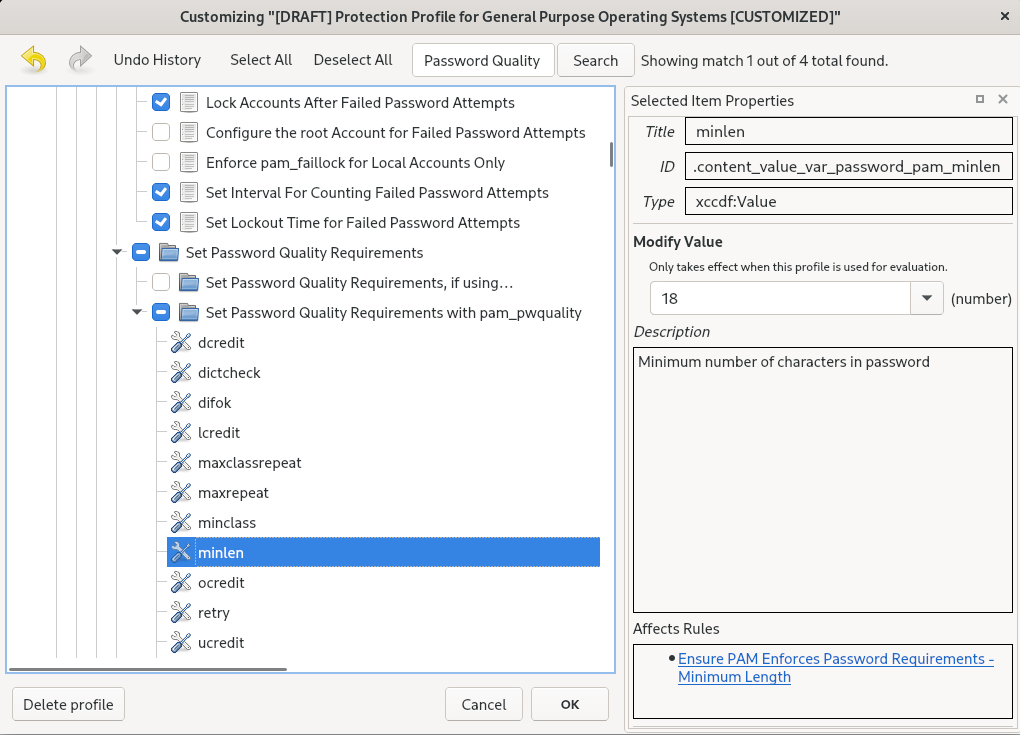

You can modify (tailor) a profile to customize certain rules, for example, password length. For more information about profile tailoring, see Customizing a security profile with SCAP Workbench.

7.3.2. Possible results of an OpenSCAP scan

Depending on various properties of your system and the data stream and profile applied to an OpenSCAP scan, each rule may produce a specific result. This is a list of possible results with brief explanations of what they mean.

Table 7.1. Possible results of an OpenSCAP scan

| Result | Explanation |
|---|---|
| Pass | The scan did not find any conflicts with this rule. |
| Fail | The scan found a conflict with this rule. |
| Not checked | OpenSCAP does not perform an automatic evaluation of this rule. Check whether your system conforms to this rule manually. |
| Not applicable | This rule does not apply to the current configuration. |
| Not selected | This rule is not part of the profile. OpenSCAP does not evaluate this rule and does not display these rules in the results. |
| Error | The scan encountered an error. For additional information, you can enter the |
| Unknown | The scan encountered an unexpected situation. For additional information, you can enter the |
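When scan results are saved to an XCCDF results file, the outcomes listed in the table can be tallied from the command line. The following is a minimal sketch rather than part of the OpenSCAP tooling: the tally_results function name is hypothetical, and the sketch assumes a results file written with the --results option in which each rule outcome is stored as an unprefixed result element holding an XCCDF token such as pass, fail, notchecked, notapplicable, notselected, error, or unknown.

```shell
#!/usr/bin/env bash
# Hypothetical helper: count rule outcomes in an XCCDF results file.
# Assumption: outcomes are stored as <result>TOKEN</result> without a
# namespace prefix; adjust the pattern if your file differs.
tally_results() {
    local results_file=$1
    grep -o '<result>[a-z]*</result>' "$results_file" \
        | sed -e 's/<result>//' -e 's/<\/result>//' \
        | sort \
        | uniq -c \
        | awk '{ print $2 "=" $1 }'
}

# Example (the file name matches the one used later in this chapter):
#   tally_results hipaa-results.xml
```

The output is one TOKEN=COUNT line per outcome, sorted by token, which makes it easy to compare two scans of the same system.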

7.3.3. Viewing profiles for configuration compliance

Before you decide to use profiles for scanning or remediation, you can list them and check their detailed descriptions using the oscap info subcommand.

Prerequisites

- The openscap-scanner and scap-security-guide packages are installed.

Procedure

1. List all available files with security compliance profiles provided by the SCAP Security Guide project:

        $ ls /usr/share/xml/scap/ssg/content/
        ssg-rhel9-ds.xml

2. Display detailed information about a selected data stream using the oscap info subcommand. XML files containing data streams are indicated by the -ds string in their names. In the Profiles section, you can find a list of available profiles and their IDs:

        $ oscap info /usr/share/xml/scap/ssg/content/ssg-rhel9-ds.xml
        Profiles:
        ...
          Title: Australian Cyber Security Centre (ACSC) Essential Eight
            Id: xccdf_org.ssgproject.content_profile_e8
          Title: Health Insurance Portability and Accountability Act (HIPAA)
            Id: xccdf_org.ssgproject.content_profile_hipaa
          Title: PCI-DSS v3.2.1 Control Baseline for Red Hat Enterprise Linux 9
            Id: xccdf_org.ssgproject.content_profile_pci-dss
        ...

3. Select a profile from the data-stream file and display additional details about the selected profile. To do so, use oscap info with the --profile option followed by the last section of the ID displayed in the output of the previous command. For example, the ID of the HIPAA profile is xccdf_org.ssgproject.content_profile_hipaa, and the value for the --profile option is hipaa:

        $ oscap info --profile hipaa /usr/share/xml/scap/ssg/content/ssg-rhel9-ds.xml
        ...
        Profile
          Title: [RHEL9 DRAFT] Health Insurance Portability and Accountability Act (HIPAA)
          Id: xccdf_org.ssgproject.content_profile_hipaa
          Description: The HIPAA Security Rule establishes U.S. national standards to protect individuals’ electronic personal health information that is created, received, used, or maintained by a covered entity. The Security Rule requires appropriate administrative, physical and technical safeguards to ensure the confidentiality, integrity, and security of electronic protected health information. This profile configures Red Hat Enterprise Linux 9 to the HIPAA Security Rule identified for securing of electronic protected health information. Use of this profile in no way guarantees or makes claims against legal compliance against the HIPAA Security Rule(s).
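The mapping from a full profile ID to the value expected by the --profile option can be scripted. The following sketch reads oscap info output on standard input and prints only the short profile names. The list_profile_names function name is hypothetical, and the sketch assumes that profile IDs carry the xccdf_org.ssgproject.content_profile_ prefix shown in the output above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `oscap info` output on standard input and print
# the short names that the --profile option accepts.
# Assumption: profile IDs start with xccdf_org.ssgproject.content_profile_.
list_profile_names() {
    sed -n 's/^[[:space:]]*Id: xccdf_org\.ssgproject\.content_profile_//p'
}

# Example:
#   oscap info /usr/share/xml/scap/ssg/content/ssg-rhel9-ds.xml | list_profile_names
```

Lines whose Id field does not carry the profile prefix, such as benchmark or data stream identifiers, are ignored.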

Additional resources

- scap-security-guide(8) man page
- OpenSCAP memory consumption problems

7.3.4. Assessing configuration compliance with a specific baseline

To determine whether your system conforms to a specific baseline, follow these steps.

Prerequisites

- The openscap-scanner and scap-security-guide packages are installed.
- You know the ID of the profile within the baseline with which the system should comply. To find the ID, see Viewing profiles for configuration compliance.

Procedure

1. Evaluate the compliance of the system with the selected profile and save the scan results in the report.html HTML file, for example:

        $ oscap xccdf eval --report report.html --profile hipaa /usr/share/xml/scap/ssg/content/ssg-rhel9-ds.xml

2. Optional: Scan a remote system with the machine1 host name, SSH running on port 22, and the joesec user name for compliance and save results to the remote_report.html file:

        $ oscap-ssh joesec@machine1 22 xccdf eval --report remote_report.html --profile hipaa /usr/share/xml/scap/ssg/content/ssg-rhel9-ds.xml
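In scripted scans, the exit status of oscap can separate a clean scan from one that found problems. The sketch below assumes the exit codes described in the oscap(8) man page: 0 when the operation succeeds, 1 on error, and 2 when the evaluation finishes but the system does not comply. The explain_oscap_status function name is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: translate the exit status of `oscap xccdf eval`
# into a short message. The meanings follow the oscap(8) man page.
explain_oscap_status() {
    case "$1" in
        0) echo "scan finished: no non-compliance reported" ;;
        1) echo "oscap reported an error" ;;
        2) echo "scan finished: system is not compliant with the profile" ;;
        *) echo "unexpected exit status: $1" ;;
    esac
}

# Example:
#   oscap xccdf eval --report report.html --profile hipaa \
#       /usr/share/xml/scap/ssg/content/ssg-rhel9-ds.xml
#   explain_oscap_status "$?"
```

Because status 2 is not an execution error, wrappers that stop on any non-zero status need to treat it separately.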

Additional resources

- scap-security-guide(8) man page
- SCAP Security Guide documentation in the /usr/share/doc/scap-security-guide/ directory
- /usr/share/doc/scap-security-guide/guides/ssg-rhel9-guide-index.html - [Guide to the Secure Configuration of Red Hat Enterprise Linux 9] installed with the scap-security-guide-doc package
- OpenSCAP memory consumption problems

7.4. Remediating the system to align with a specific baseline

Use this procedure to remediate the RHEL system to align with a specific baseline. This example uses the Health Insurance Portability and Accountability Act (HIPAA) profile.

If not used carefully, running the system evaluation with the Remediate option enabled might render the system non-functional. Red Hat does not provide any automated method to revert changes made by security-hardening remediations. Remediations are supported on RHEL systems in the default configuration. If your system has been altered after the installation, running remediation might not make it compliant with the required security profile.

Prerequisites

- The scap-security-guide package is installed on your RHEL system.

Procedure

1. Use the oscap command with the --remediate option:

        # oscap xccdf eval --profile hipaa --remediate /usr/share/xml/scap/ssg/content/ssg-rhel9-ds.xml

2. Restart your system.

Verification

- Evaluate compliance of the system with the HIPAA profile, and save scan results in the hipaa_report.html file:

        $ oscap xccdf eval --report hipaa_report.html --profile hipaa /usr/share/xml/scap/ssg/content/ssg-rhel9-ds.xml
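Because remediation cannot be reverted automatically, it can help to wrap the command so that it never runs by accident. The following is a minimal sketch of such a guard and is not a Red Hat tool: the remediate_profile function and the APPLY variable are hypothetical names. By default the wrapper only prints the command it would run.

```shell
#!/usr/bin/env bash
# Hypothetical guard around the remediation command shown above.
# remediate_profile and APPLY are illustrative names, not oscap features.
DATA_STREAM=/usr/share/xml/scap/ssg/content/ssg-rhel9-ds.xml

remediate_profile() {
    local profile=$1
    if [ "${APPLY:-no}" = "yes" ]; then
        # Changes the system; run as root and only after reviewing the profile.
        oscap xccdf eval --profile "$profile" --remediate "$DATA_STREAM"
    else
        echo "would run: oscap xccdf eval --profile $profile --remediate $DATA_STREAM"
    fi
}

# Example:
#   remediate_profile hipaa             # prints the command only
#   APPLY=yes remediate_profile hipaa   # runs the remediation
```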

Additional resources

- scap-security-guide(8) and oscap(8) man pages

7.5. Remediating the system to align with a specific baseline using an SSG Ansible playbook

Use this procedure to remediate your system with a specific baseline using an Ansible playbook file from the SCAP Security Guide project. This example uses the Health Insurance Portability and Accountability Act (HIPAA) profile.

If not used carefully, running the system evaluation with the Remediate option enabled might render the system non-functional. Red Hat does not provide any automated method to revert changes made by security-hardening remediations. Remediations are supported on RHEL systems in the default configuration. If your system has been altered after the installation, running remediation might not make it compliant with the required security profile.

Prerequisites

- The scap-security-guide package is installed.
- The ansible-core package is installed. See the Ansible Installation Guide for more information.

In RHEL 8.6 and later versions, Ansible Engine is replaced by the ansible-core package, which contains only built-in modules. Note that many Ansible remediations use modules from the community and Portable Operating System Interface (POSIX) collections, which are not included in the built-in modules. In this case, you can use Bash remediations as a substitute for Ansible remediations. The Red Hat Connector in RHEL 9 includes the necessary Ansible modules to enable the remediation playbooks to function with Ansible Core.

Procedure

1. Remediate your system to align with HIPAA using Ansible:

        # ansible-playbook -i localhost, -c local /usr/share/scap-security-guide/ansible/rhel9-playbook-hipaa.yml

2. Restart the system.

Verification

- Evaluate compliance of the system with the HIPAA profile, and save scan results in the hipaa_report.html file:

        # oscap xccdf eval --profile hipaa --report hipaa_report.html /usr/share/xml/scap/ssg/content/ssg-rhel9-ds.xml
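Before applying a playbook, the check mode of ansible-playbook (the --check option) can preview the changes without making them. The sketch below builds the playbook path for a given profile. It assumes that other profiles follow the rhel9-playbook-PROFILE.yml naming that this section shows only for hipaa, and the ssg_playbook_path function name is hypothetical. Not every task supports check mode, so the preview might be incomplete.

```shell
#!/usr/bin/env bash
# Hypothetical helper: build the path of an SSG playbook for a profile.
# Assumption: playbooks for other profiles use the same naming as the
# hipaa playbook shown above.
ssg_playbook_path() {
    printf '/usr/share/scap-security-guide/ansible/rhel9-playbook-%s.yml\n' "$1"
}

# Example: preview the HIPAA remediation without changing the system.
#   ansible-playbook -i localhost, -c local --check "$(ssg_playbook_path hipaa)"
```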

Additional resources

- scap-security-guide(8) and oscap(8) man pages
- Ansible Documentation

7.6. Creating a remediation Ansible playbook to align the system with a specific baseline

You can create an Ansible playbook containing only the remediations that are required to align your system with a specific baseline. This example uses the Health Insurance Portability and Accountability Act (HIPAA) profile. With this procedure, you create a smaller playbook that does not cover already satisfied requirements. These steps do not modify your system in any way; they only prepare a file for later application.

In RHEL 9, Ansible Engine is replaced by the ansible-core package, which contains only built-in modules. Note that many Ansible remediations use modules from the community and Portable Operating System Interface (POSIX) collections, which are not included in the built-in modules. In this case, you can use Bash remediations as a substitute for Ansible remediations. The Red Hat Connector in RHEL 9.0 includes the necessary Ansible modules to enable the remediation playbooks to function with Ansible Core.

Prerequisites

- The scap-security-guide package is installed.

Procedure

1. Scan the system and save the results:

        # oscap xccdf eval --profile hipaa --results hipaa-results.xml /usr/share/xml/scap/ssg/content/ssg-rhel9-ds.xml

2. Generate an Ansible playbook based on the file generated in the previous step:

        # oscap xccdf generate fix --fix-type ansible --profile hipaa --output hipaa-remediations.yml hipaa-results.xml

3. The hipaa-remediations.yml file contains Ansible remediations for rules that failed during the scan performed in step 1. After reviewing this generated file, you can apply it with the ansible-playbook hipaa-remediations.yml command.

Verification

- In a text editor of your choice, review that the hipaa-remediations.yml file contains rules that failed in the scan performed in step 1.
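To support this review, the rules that failed can be listed directly from the results file and compared with the generated playbook. The following sketch is an illustration, not an OpenSCAP feature: the failed_rules function name is hypothetical, and it assumes that each rule-result element carries an idref attribute on its opening line and is followed by an unprefixed result element, as in results written with the --results option.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the IDs of rules whose result is "fail".
# Assumption: <rule-result idref="..."> opens each rule entry and the
# matching <result>fail</result> appears on the same or a later line.
failed_rules() {
    awk '
        /<rule-result / {
            id = ""
            if (match($0, /idref="[^"]*"/)) {
                # Skip the 7 characters of idref=" and drop the closing quote.
                id = substr($0, RSTART + 7, RLENGTH - 8)
            }
        }
        /<result>fail<\/result>/ && id != "" { print id }
    ' "$1"
}

# Example:
#   failed_rules hipaa-results.xml
```

This line-based parsing is deliberately simple; a results file with a different layout needs an XML-aware tool instead.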

Additional resources

- scap-security-guide(8) and oscap(8) man pages
- Ansible Documentation

7.7. Creating a remediation Bash script for a later application

Use this procedure to create a Bash script containing remediations that align your system with a security profile such as HIPAA. These steps do not modify your system; they only prepare a file for later application.

Prerequisites

- The scap-security-guide package is installed on your RHEL system.

Procedure

1. Use the oscap command to scan the system and to save the results to an XML file. In the following example, oscap evaluates the system against the hipaa profile:

        # oscap xccdf eval --profile hipaa --results hipaa-results.xml /usr/share/xml/scap/ssg/content/ssg-rhel9-ds.xml

2. Generate a Bash script based on the results file generated in the previous step:

        # oscap xccdf generate fix --profile hipaa --fix-type bash --output hipaa-remediations.sh hipaa-results.xml

3. The hipaa-remediations.sh file contains remediations for rules that failed during the scan performed in step 1. After reviewing this generated file, you can apply it with the ./hipaa-remediations.sh command when you are in the same directory as this file.

Verification

- In a text editor of your choice, review that the hipaa-remediations.sh file contains rules that failed in the scan performed in step 1.
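As a complement to the manual review, the generated script can be parsed without being executed. The sketch below uses the -n option of bash, which reads commands and checks their syntax but does not run them. The syntax_check function name is hypothetical, and a passing check says nothing about whether the remediations are appropriate for your system.

```shell
#!/usr/bin/env bash
# Hypothetical helper: parse a script with bash -n, which checks syntax
# without executing any command. This does not replace a manual review.
syntax_check() {
    if bash -n "$1" 2>/dev/null; then
        echo "syntax ok: $1"
    else
        echo "syntax error: $1"
        return 1
    fi
}

# Example:
#   syntax_check hipaa-remediations.sh
```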

Additional resources

- scap-security-guide(8), oscap(8), and bash(1) man pages

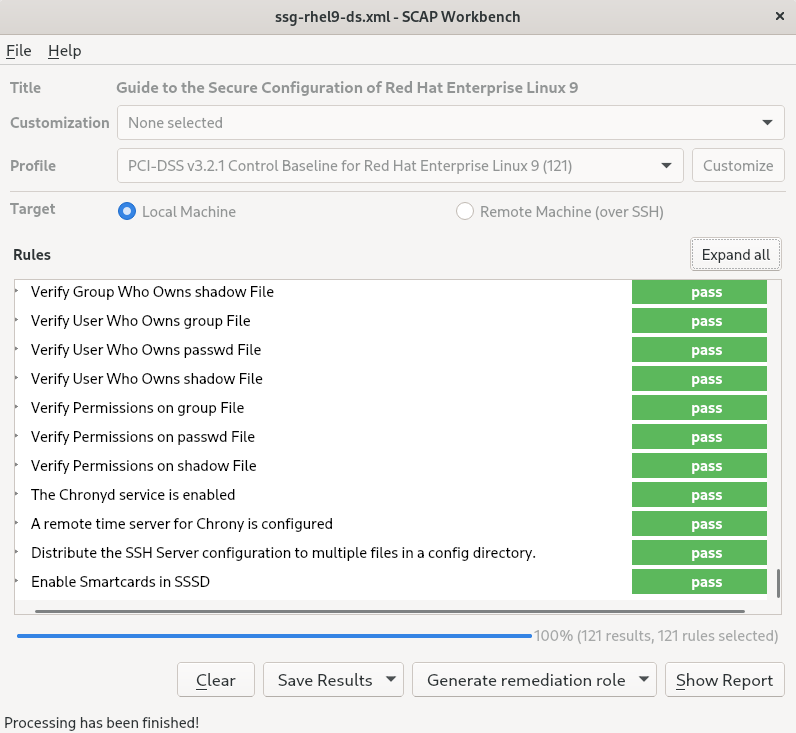

7.8. Scanning the system with a customized profile using SCAP Workbench

SCAP Workbench, which is contained in the scap-workbench package, is a graphical utility that enables users to perform configuration and vulnerability scans on a single local or a remote system, perform remediation of the system, and generate reports based on scan evaluations. Note that SCAP Workbench has limited functionality compared with the oscap command-line utility. SCAP Workbench processes security content in the form of data-stream files.

7.8.1. Using SCAP Workbench to scan and remediate the system

To evaluate your system against the selected security policy, use the following procedure.

Prerequisites

- The scap-workbench package is installed on your system.

Procedure

1. To run SCAP Workbench from the GNOME Classic desktop environment, press the Super key to enter the Activities Overview, type scap-workbench, and then press Enter. Alternatively, use:

        $ scap-workbench &

2. Select a security policy using either of the following options:

   - Load Content button on the starting window
   -