Automation Controller User Guide

User Guide for Automation Controller

Abstract

Preface

Thank you for your interest in Red Hat Ansible Automation Platform automation controller. Automation controller helps teams manage complex multitiered deployments by adding control, knowledge, and delegation to Ansible-powered environments.

The Automation controller User Guide describes all of the functionality available in automation controller. It assumes moderate familiarity with Ansible, including concepts such as playbooks, variables, and tags. For more information about these and other Ansible concepts, see the Ansible documentation.

Making open source more inclusive

Red Hat is committed to replacing problematic language in our code, documentation, and web properties. We are beginning with these four terms: master, slave, blacklist, and whitelist. Because of the enormity of this endeavor, these changes will be implemented gradually over several upcoming releases. For more details, see our CTO Chris Wright’s message.

Providing feedback on Red Hat documentation

If you have a suggestion to improve this documentation, or find an error, please contact technical support at https://access.redhat.com to create an issue on the Ansible Automation Platform Jira project using the docs-product component.

Chapter 1. Automation controller overview

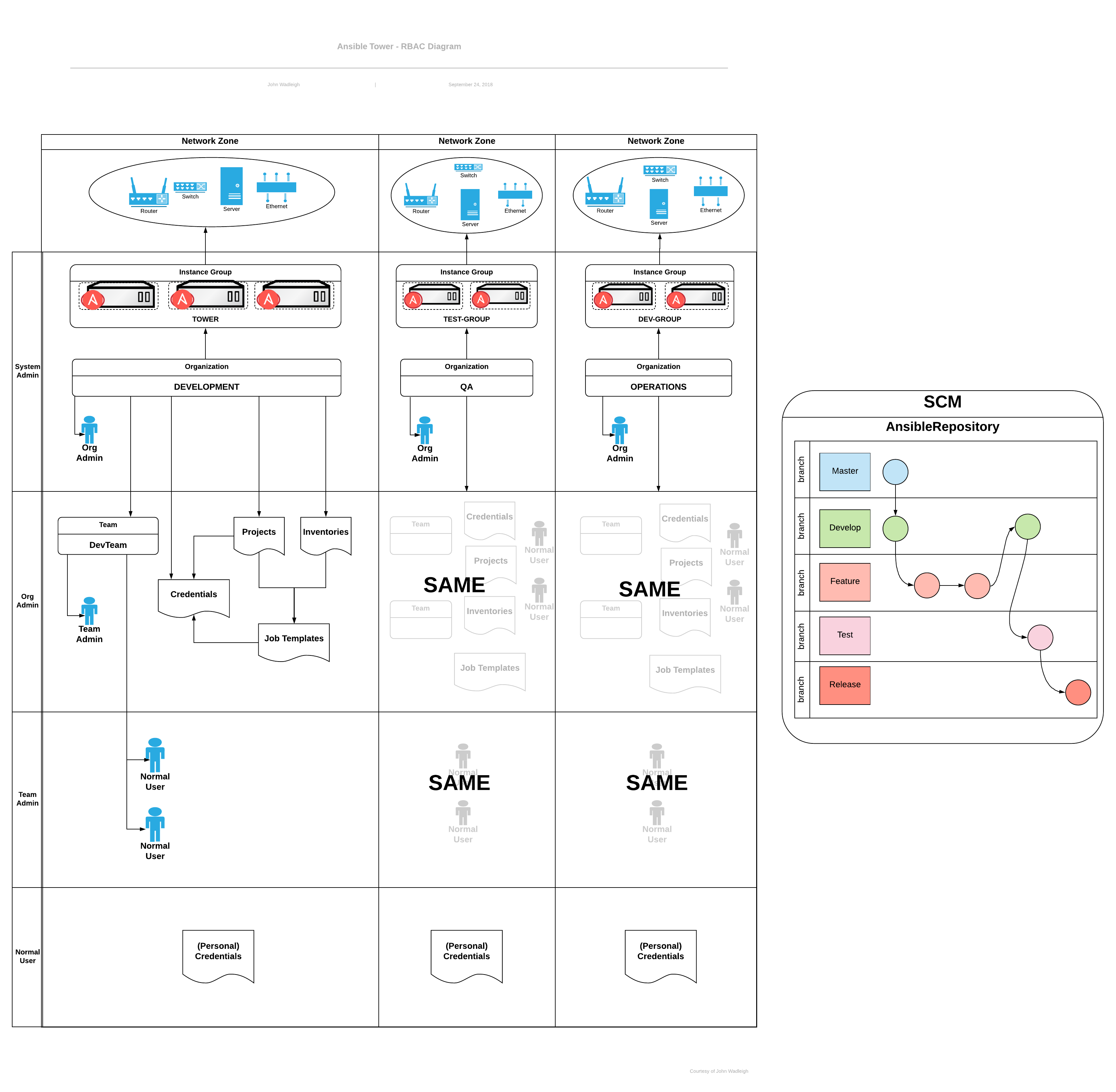

With Ansible Automation Platform, users across an organization can share, vet, and manage automation content by means of a simple, powerful, and agentless technical implementation. IT managers can provide guidelines on how automation is applied to individual teams. Automation developers can write tasks that use existing knowledge, without the operational overhead of conforming to complex tools and frameworks. It is a more secure and stable foundation for deploying end-to-end automation solutions, from hybrid cloud to the edge.

Ansible Automation Platform includes automation controller, which enables users to define, operate, scale, and delegate automation across their enterprise.

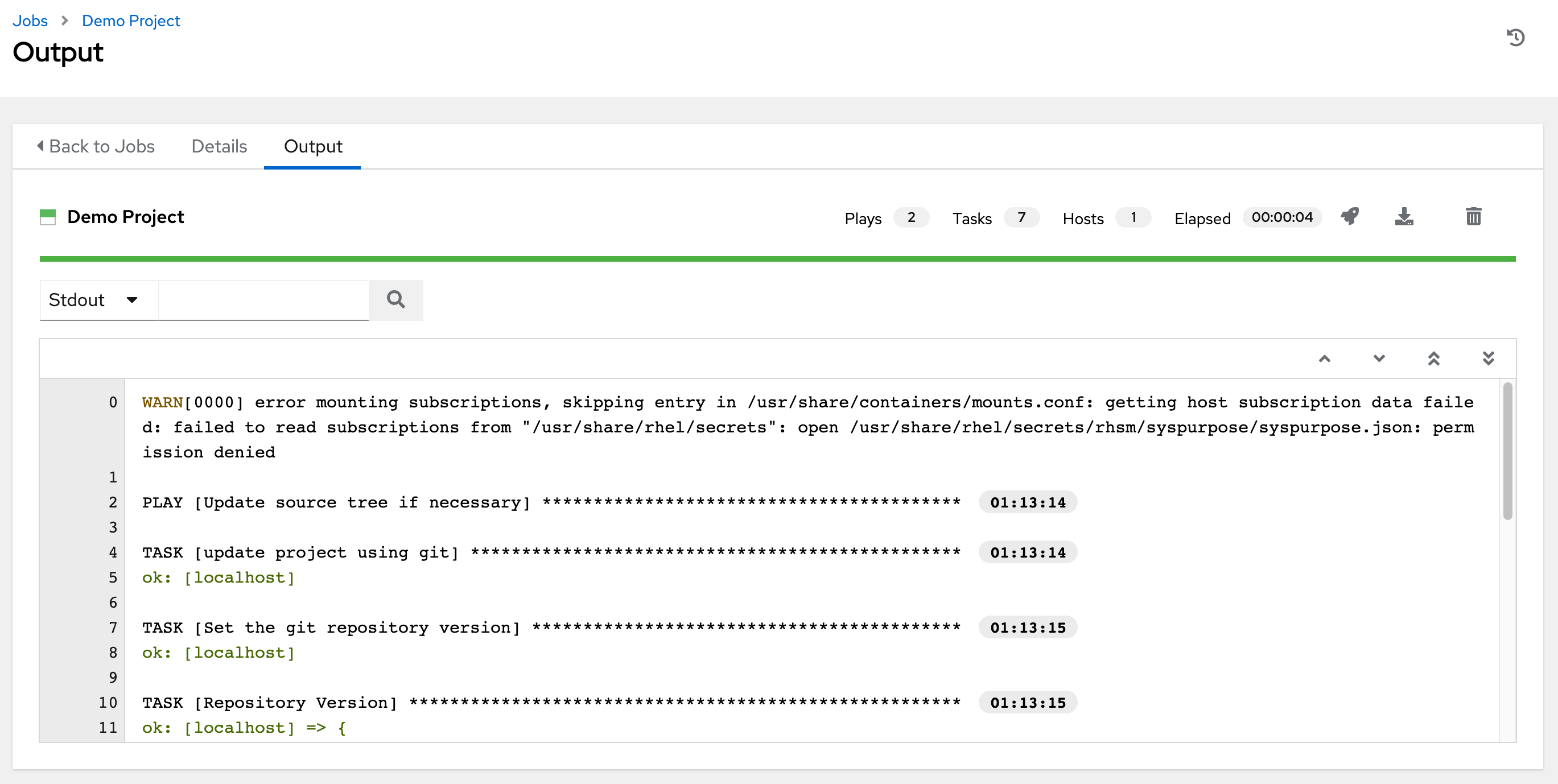

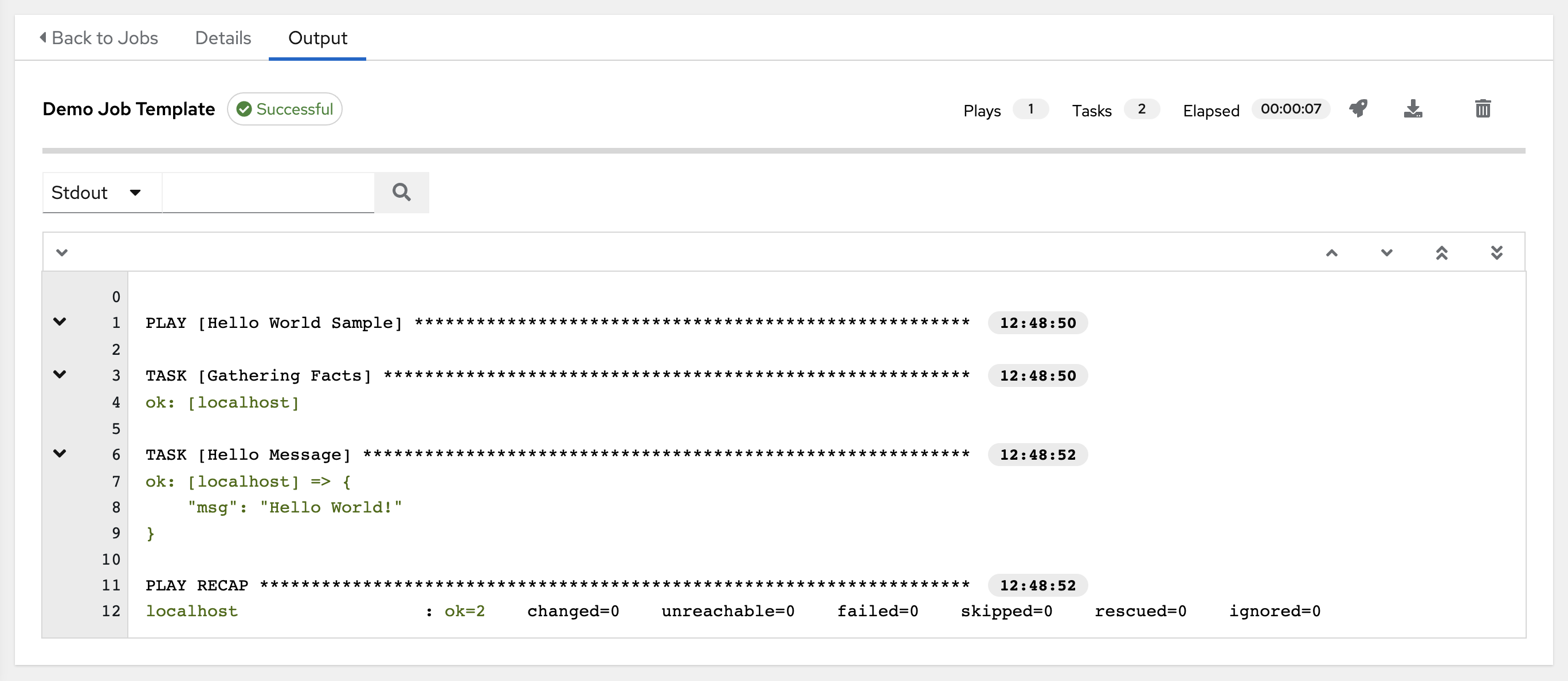

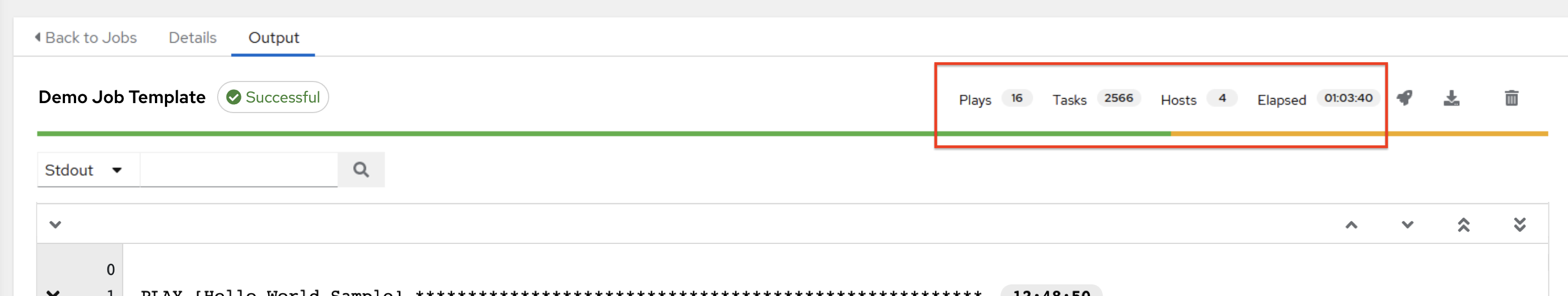

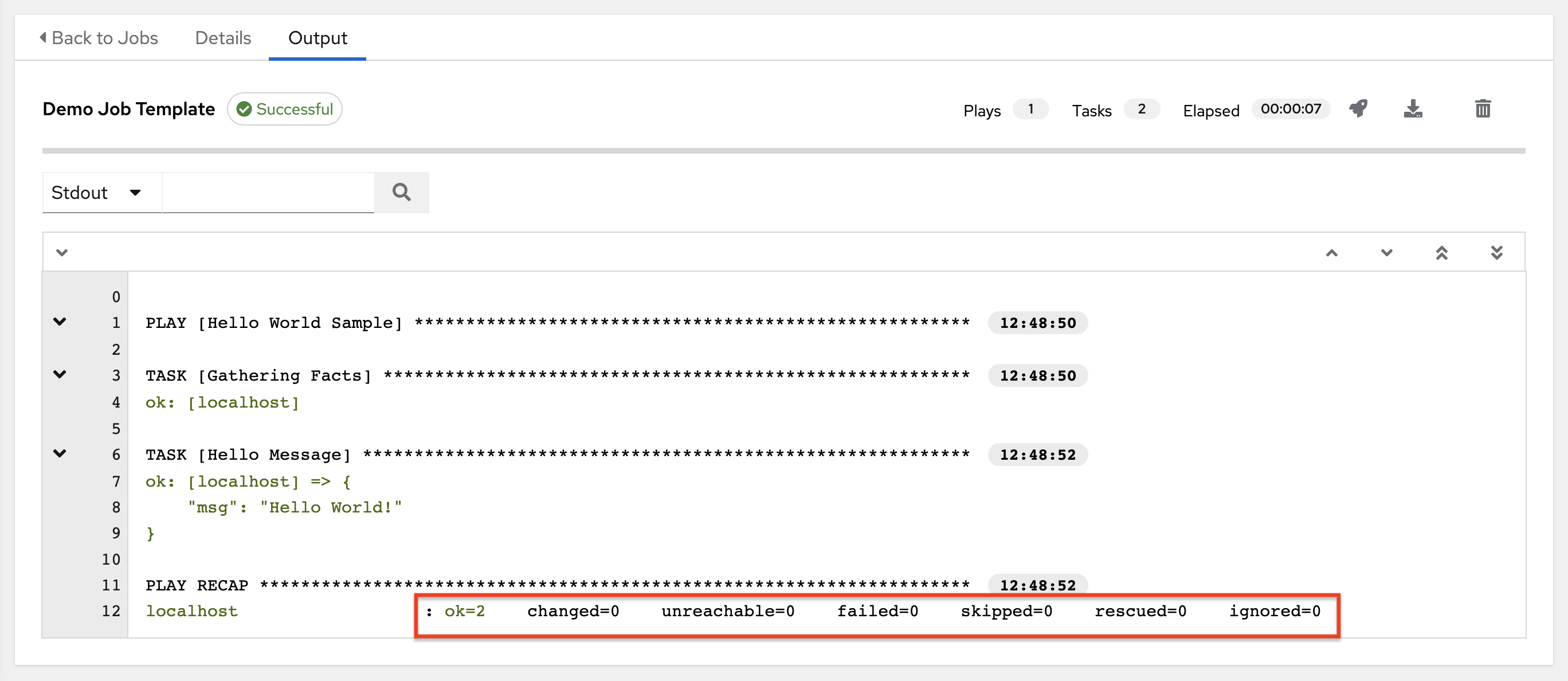

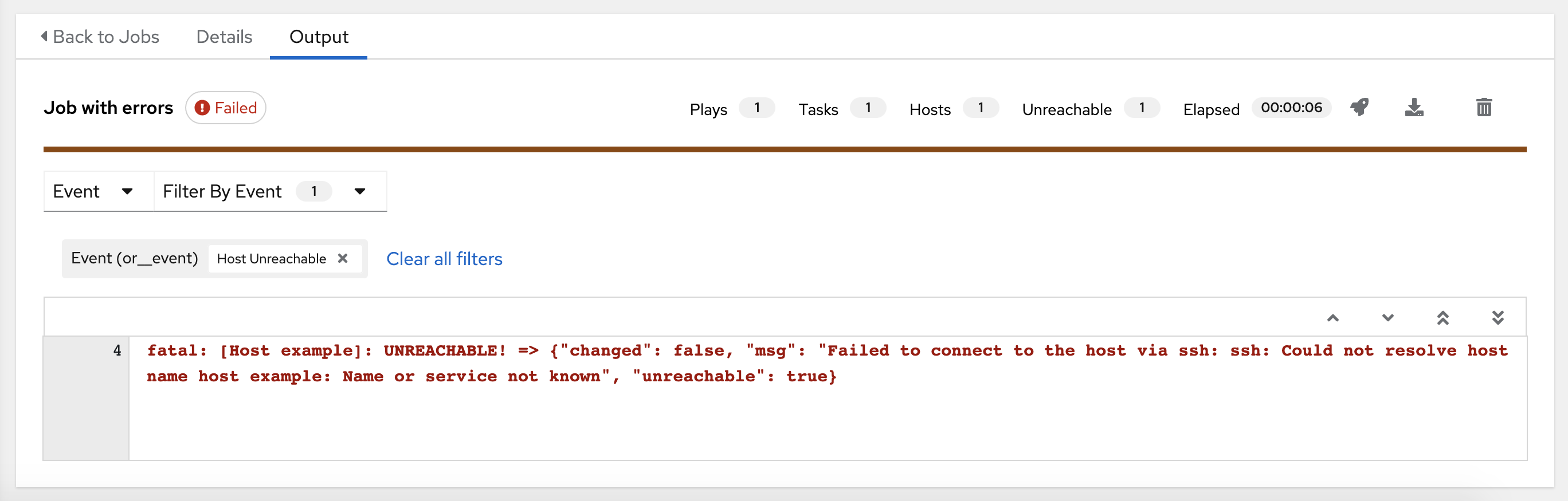

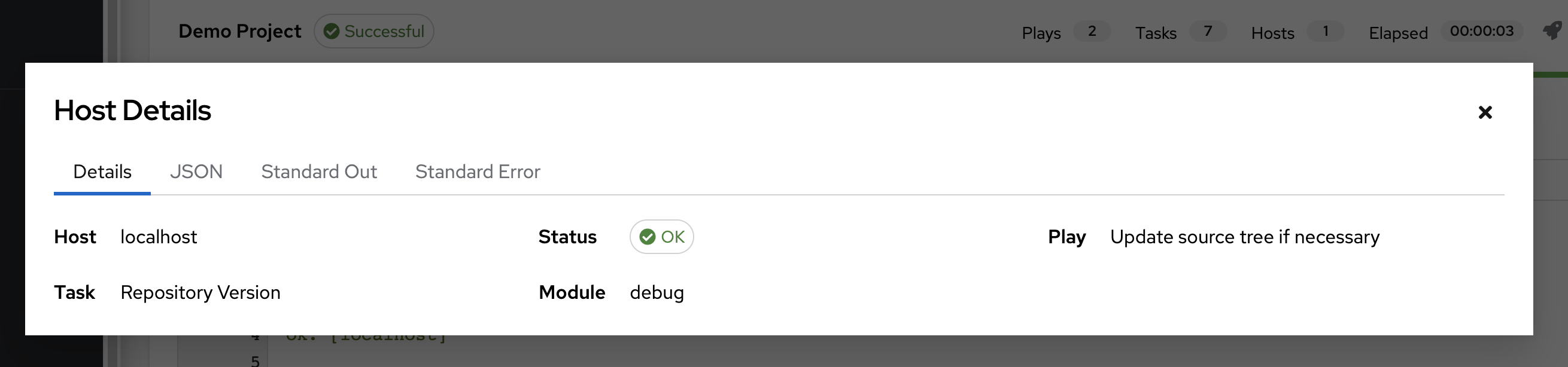

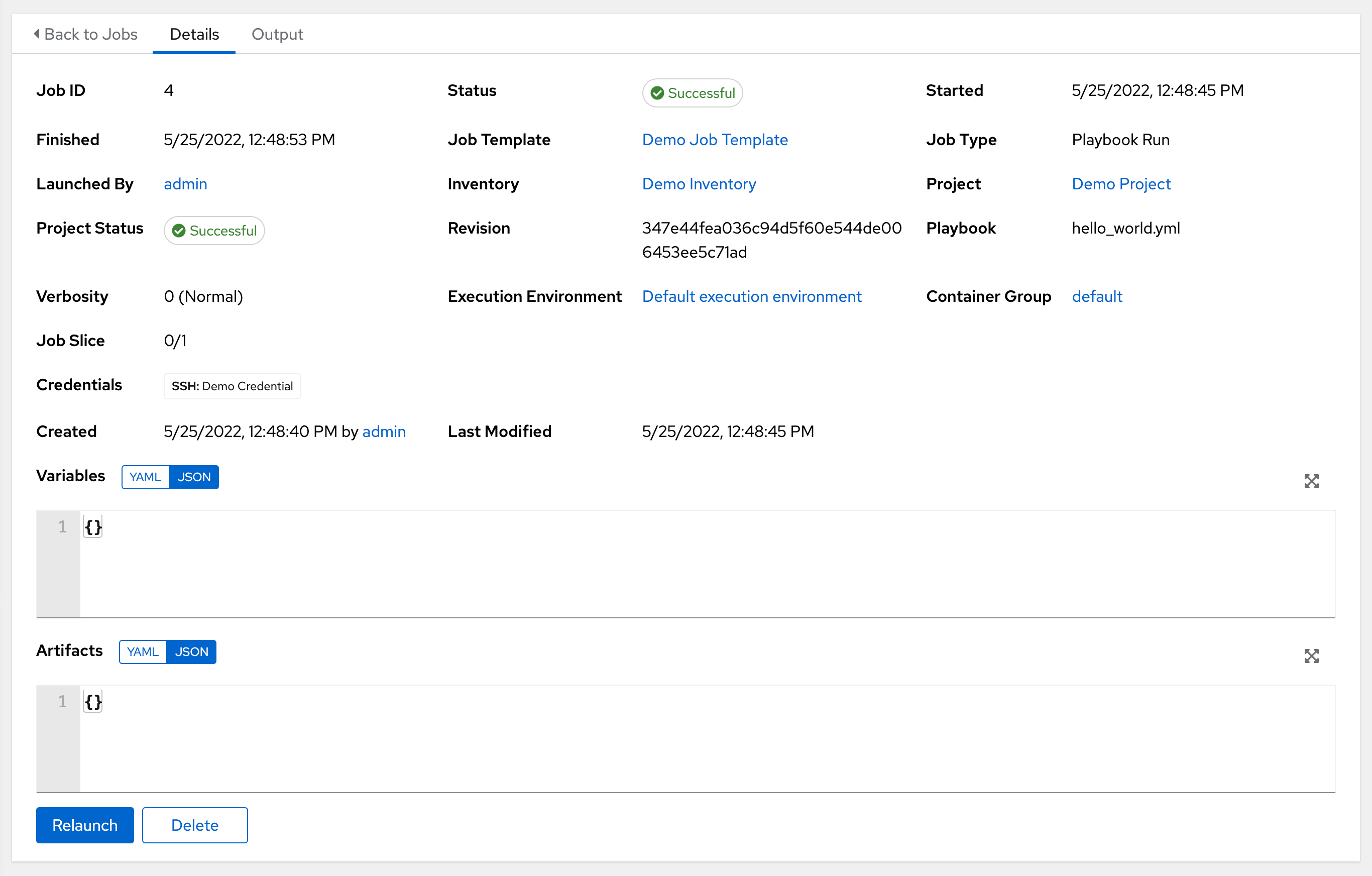

1.1. Real-time playbook output and exploration

Automation controller enables you to watch playbooks run in real time, seeing each host as it checks in. You can go back and explore the results for specific tasks and hosts in great detail, search for specific plays or hosts and see just those results, or locate errors that need to be corrected.

1.2. "Push Button" automation

Automation controller enables you to access your favorite projects and re-trigger execution from the web interface. Automation controller asks for input variables, prompts for your credentials, starts and monitors jobs, and displays results and host history.

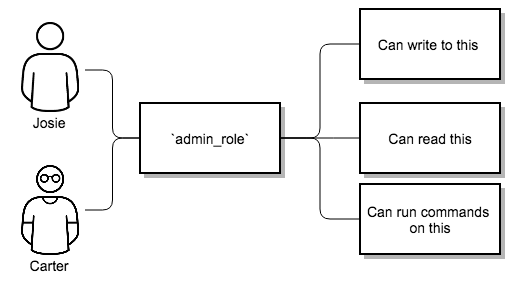

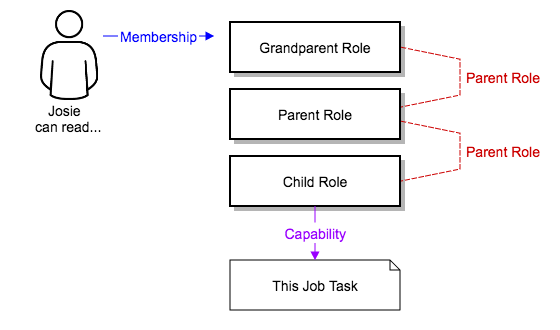

1.3. Simplified role-based access control and auditing

Automation controller enables you to:

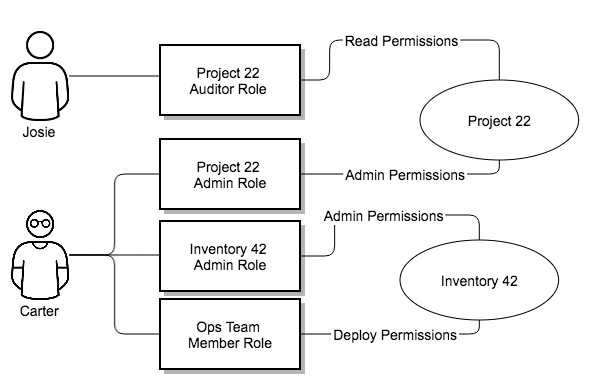

- Grant permissions to perform a specific task to different teams or explicit users through role-based access control (RBAC). Example tasks include viewing, creating, or modifying a file.

- Keep some projects private, while enabling some users to edit inventories, and others to run playbooks against certain systems, either in check (dry run) or live mode.

- Enable certain users to use credentials without exposing the credentials to them.

Automation controller records the history of operations and who made them, including objects edited and jobs launched.

If you want to give any user or team permissions to use a job template, you can assign permissions directly on the job template. Credentials are full objects in the automation controller RBAC system, and can be assigned to multiple users or teams for use.

Automation controller includes an auditor type. A system-level auditor can see all aspects of the system's automation, but does not have permission to run or change automation. An auditor is useful for a service account that scrapes automation information from the REST API.

Additional resources

- For more information about user roles, see Role-Based Access Controls.

1.4. Cloud and autoscaling flexibility

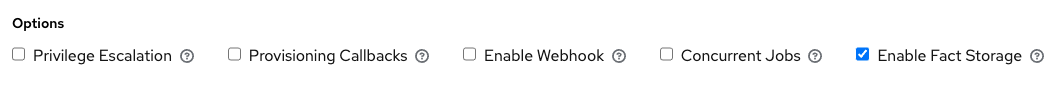

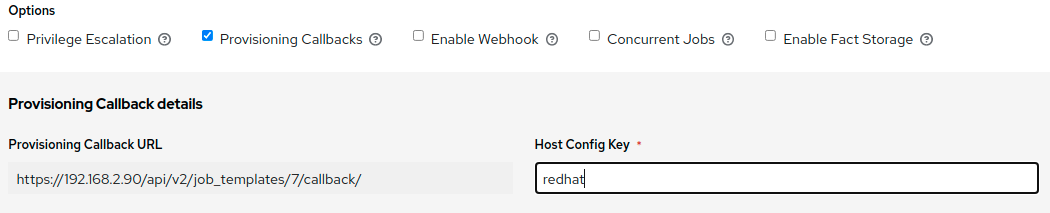

Automation controller includes a powerful optional provisioning callback feature that enables nodes to request configuration on demand. This is an ideal solution for a cloud auto-scaling scenario and includes the following features:

- It integrates with provisioning servers like Cobbler and deals with managed systems with unpredictable uptimes.

- It requires no management software to be installed on remote nodes.

- The callback solution can be triggered by a call to curl or wget, and can be embedded in init scripts, kickstarts, or preseeds.

- You can control access so that only machines listed in the inventory can request configuration.
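The callback request described above could be prepared along the following lines. This is a minimal sketch rather than a definitive implementation: the endpoint path `/api/v2/job_templates/<id>/callback/`, the `host_config_key` form field, and the example server name, template ID, and key are assumptions that do not appear in this guide, so check the provisioning callback details of your job template for the actual values. The request is only built here, not sent.

```python
from urllib.parse import urlencode
from urllib.request import Request


def build_callback_request(server: str, template_id: int, host_config_key: str) -> Request:
    """Build (but do not send) a provisioning callback request.

    Assumption: the callback path and the host_config_key field name
    are illustrative and are not taken from this guide.
    """
    url = f"https://{server}/api/v2/job_templates/{template_id}/callback/"
    body = urlencode({"host_config_key": host_config_key}).encode("ascii")
    return Request(url, data=body, method="POST")


# Hypothetical values for illustration only.
request = build_callback_request("controller.example.com", 7, "example-key")
print(request.get_method(), request.full_url)
```

A node would send an equivalent request with curl or wget from its init script, kickstart, or preseed.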

1.5. The ideal RESTful API

The automation controller REST API is the ideal RESTful API for a systems management application, with all resources fully discoverable, paginated, searchable, and well modeled. A styled API browser enables API exploration from the API root at http://<server name>/api/, showing off every resource and relation. Everything that can be done in the user interface can be done in the API.
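Because every listing is paginated, a client typically follows page links until none remain. The following is a minimal sketch of that pattern, assuming each page is a JSON object with a `results` list and a `next` link (these field names and the `/api/v2/hosts/` path are assumptions, not taken from this guide). An in-memory stand-in replaces the server so that the sketch runs on its own.

```python
from typing import Callable, Dict, Iterator, Optional


def iter_resources(fetch_page: Callable[[str], Dict], first_url: str) -> Iterator[Dict]:
    """Yield every item from a paginated listing by following 'next' links."""
    url: Optional[str] = first_url
    while url:
        page = fetch_page(url)
        yield from page["results"]
        url = page.get("next")  # None on the last page ends the loop


# In-memory stand-in for the API (hypothetical paths and data).
FAKE_PAGES = {
    "/api/v2/hosts/": {
        "results": [{"name": "web1"}, {"name": "web2"}],
        "next": "/api/v2/hosts/?page=2",
    },
    "/api/v2/hosts/?page=2": {"results": [{"name": "db1"}], "next": None},
}

host_names = [
    host["name"]
    for host in iter_resources(FAKE_PAGES.__getitem__, "/api/v2/hosts/")
]
print(host_names)
```

In real use, `fetch_page` would perform an authenticated HTTP GET and decode the JSON response.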

1.6. Backup and restore

Ansible Automation Platform can back up and restore your system or systems, making it easy for you to back up and replicate your instance as required.

1.7. Ansible Galaxy integration

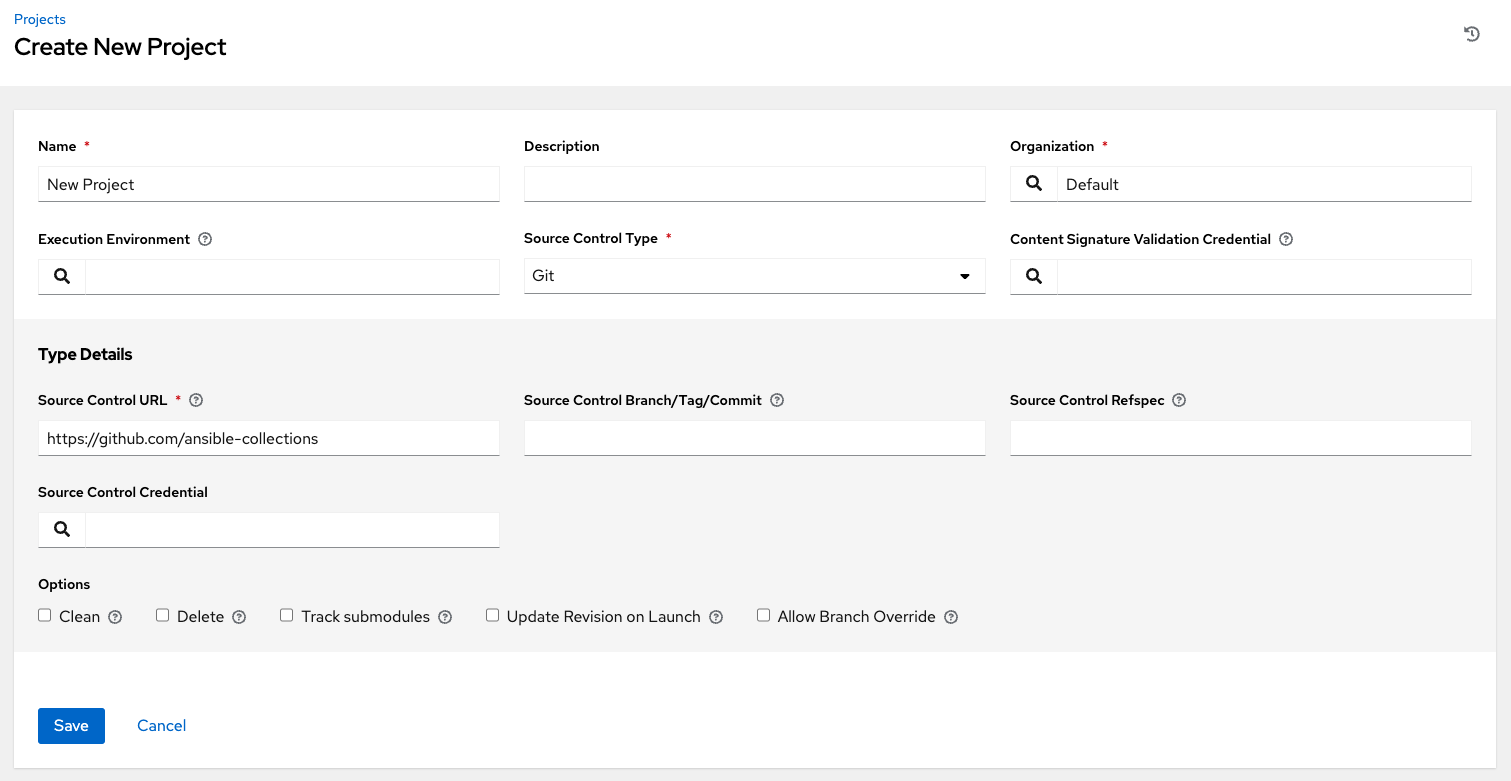

By including an Ansible Galaxy requirements.yml file in your project directory, automation controller automatically fetches the roles your playbook needs from Galaxy, GitHub, or your local source control. For more information, see Ansible Galaxy Support.

1.8. Inventory support for OpenStack

Dynamic inventory support is available for OpenStack. This enables you to target any of the virtual machines or images running in your OpenStack cloud.

For more information, see OpenStack.

1.9. Remote command execution

Use remote command execution to perform simple tasks, such as adding a single user, updating a single security vulnerability, or restarting a failing service. Any task that you can describe as a single Ansible play can be run on a host or group of hosts in your inventory, enabling you to manage your systems quickly and easily. Because of an RBAC engine and detailed audit logging, you know which user has completed a specific task.

1.10. System tracking

You can collect facts using the fact caching feature. For more information, see Fact Caching.

1.11. Integrated notifications

Keep track of the status of your automation.

You can configure the following notifications:

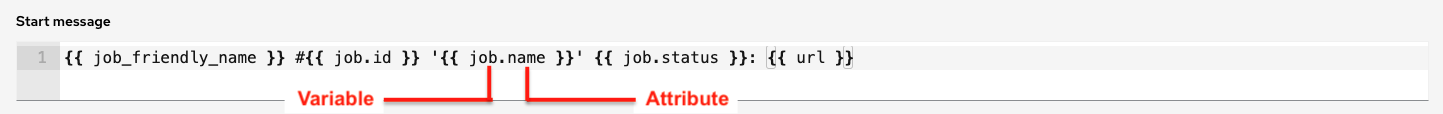

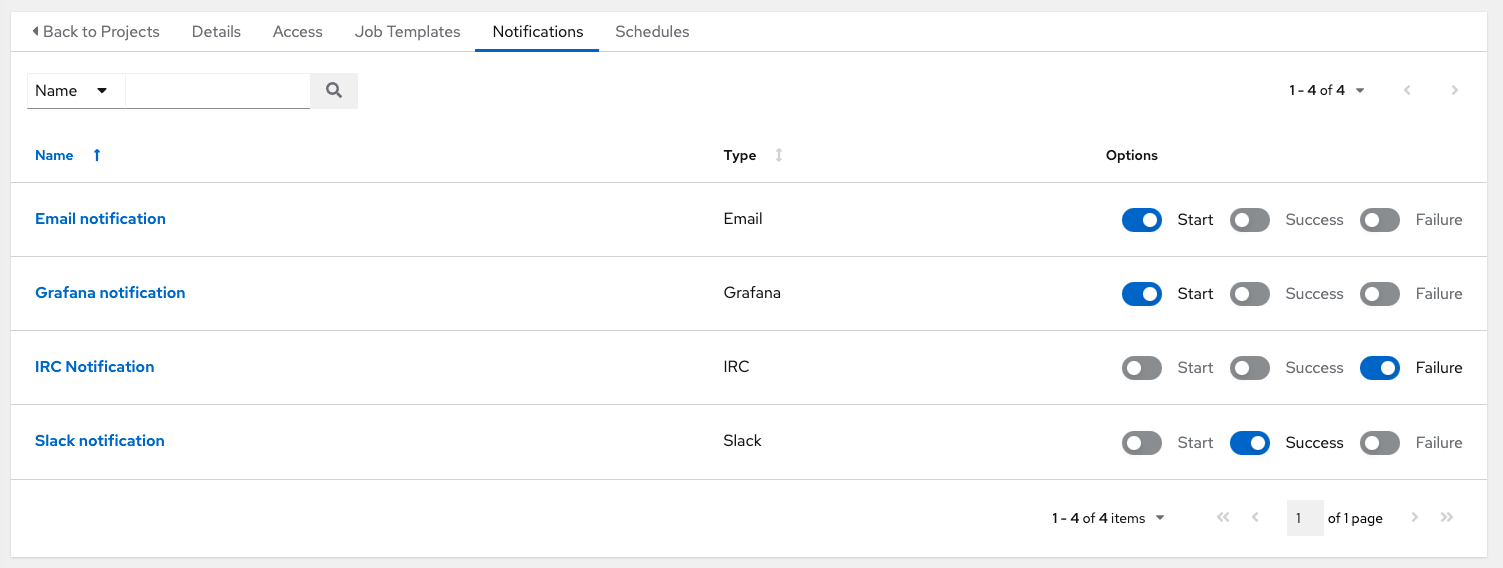

- stackable notifications for job templates, projects, or entire organizations

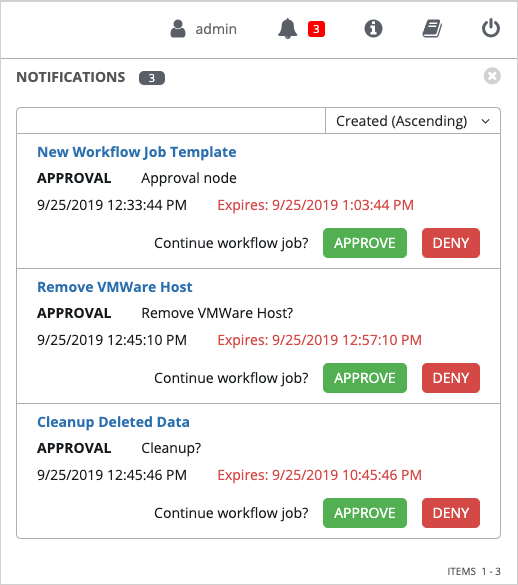

- different notifications for job start, job success, job failure, and job approval (for workflow nodes)

The following notification sources are supported:

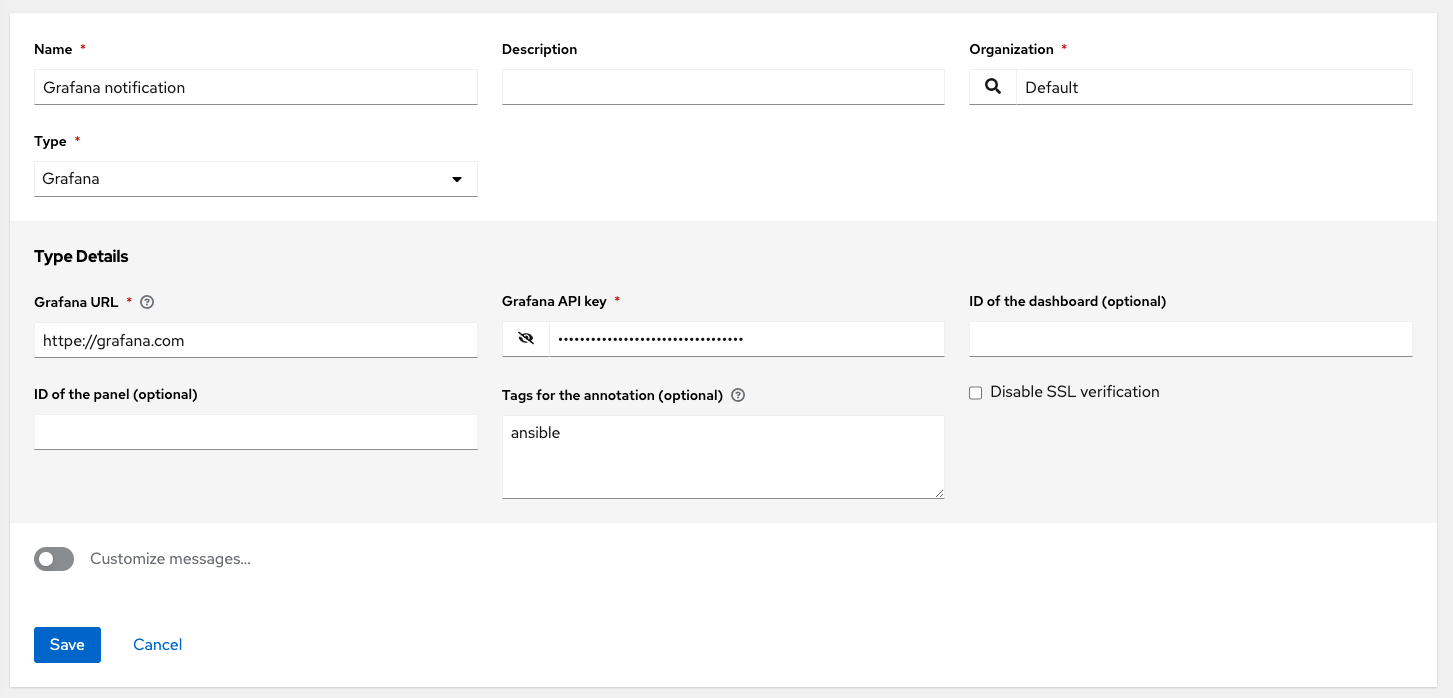

- Grafana

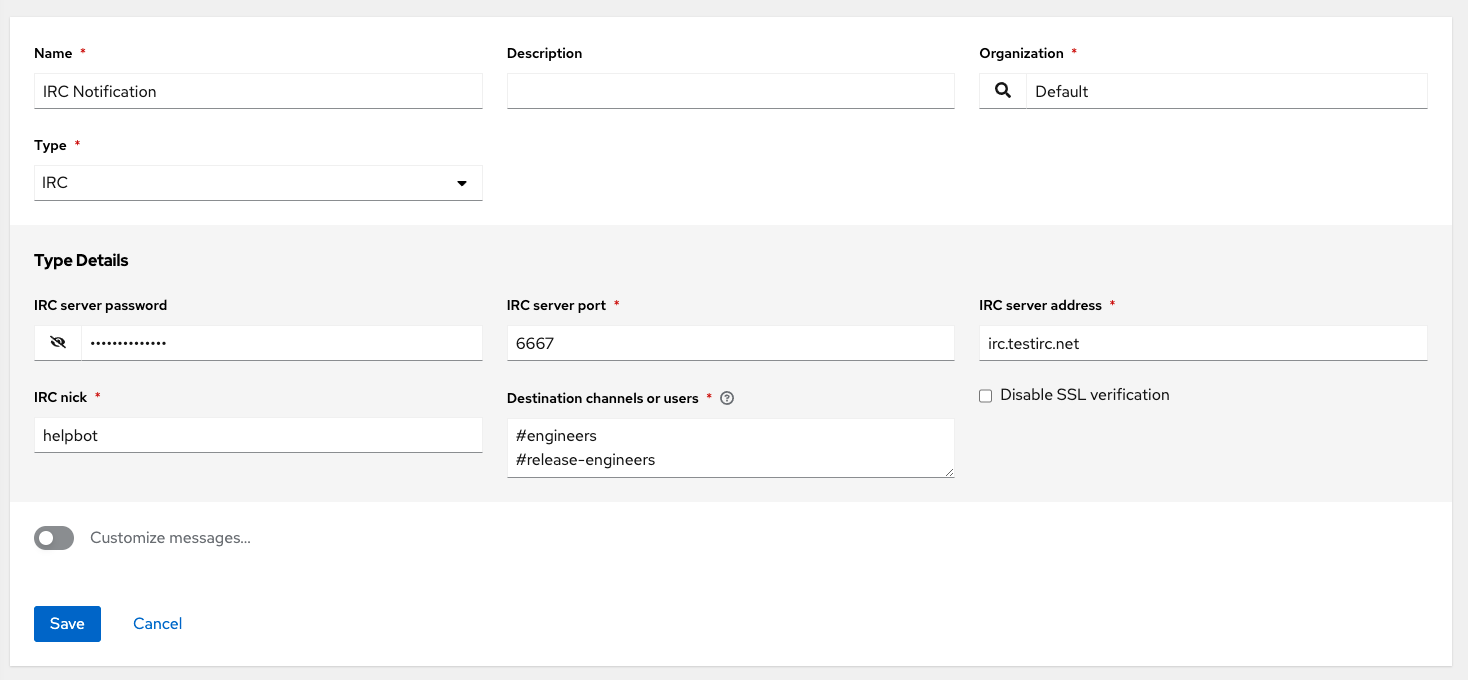

- IRC

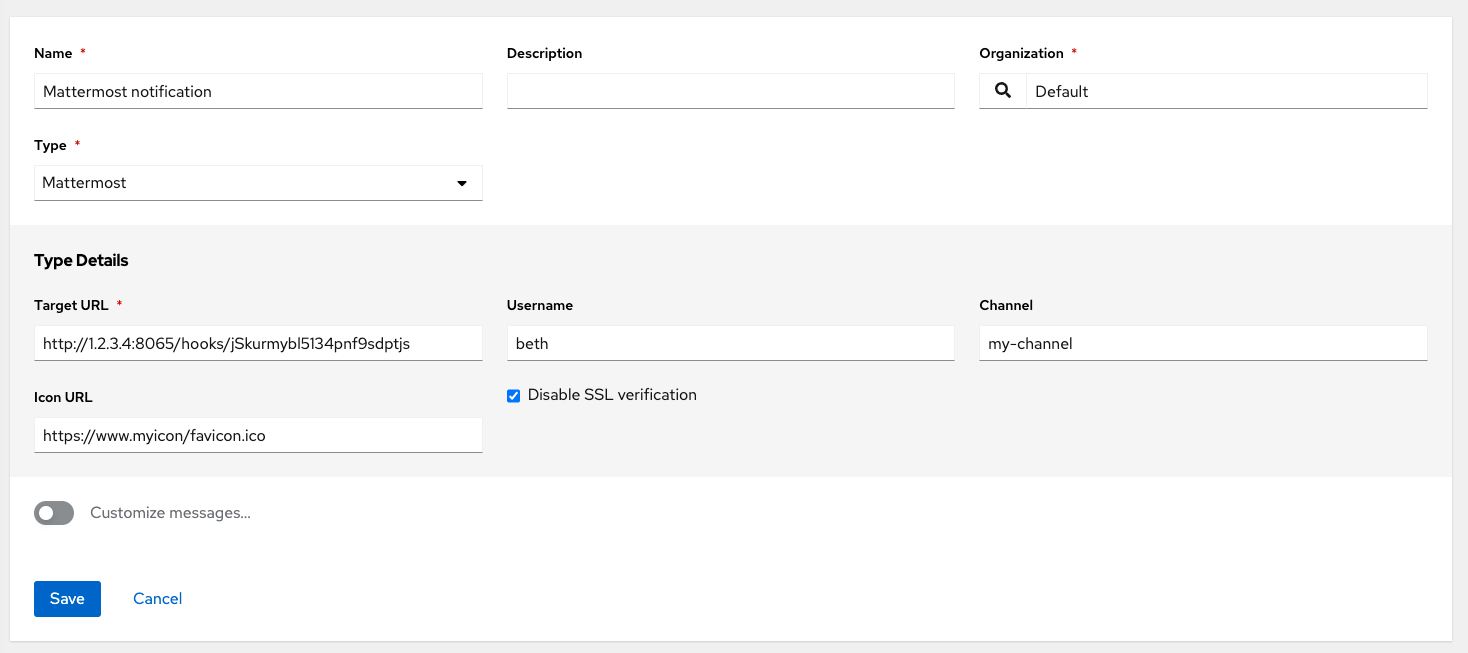

- Mattermost

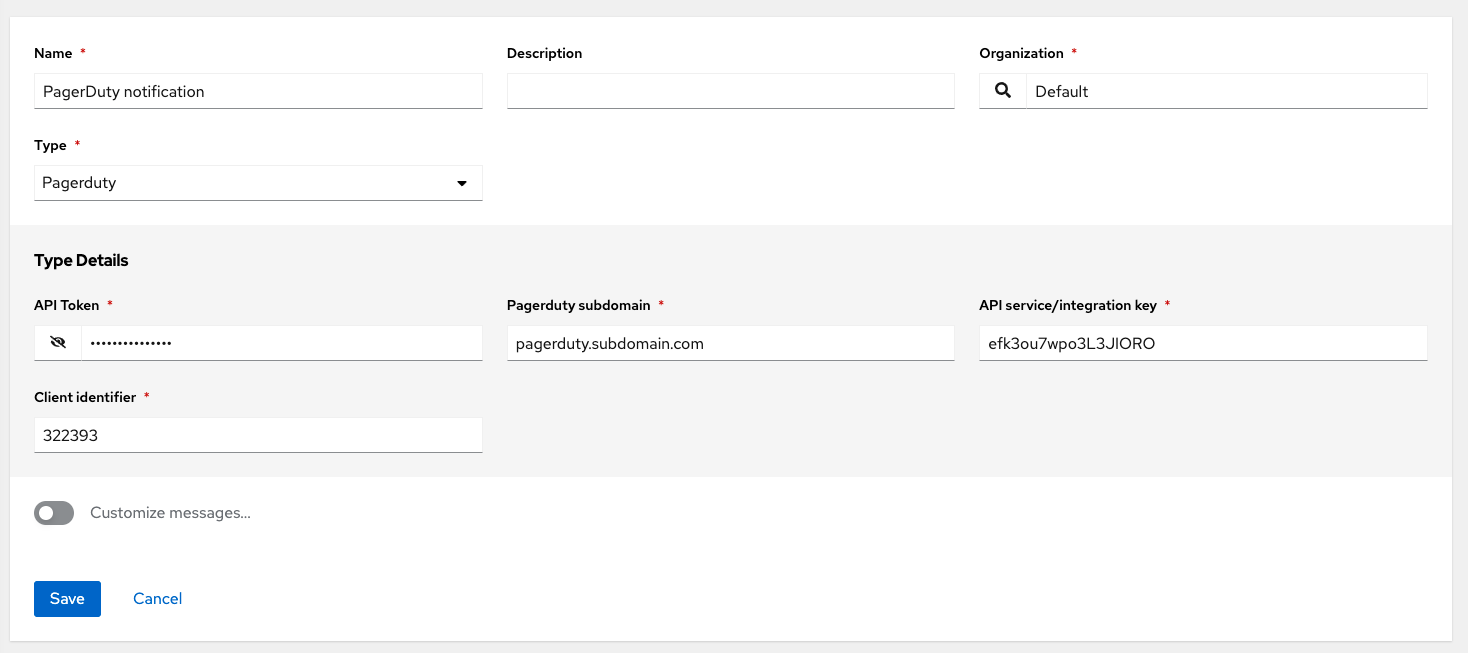

- PagerDuty

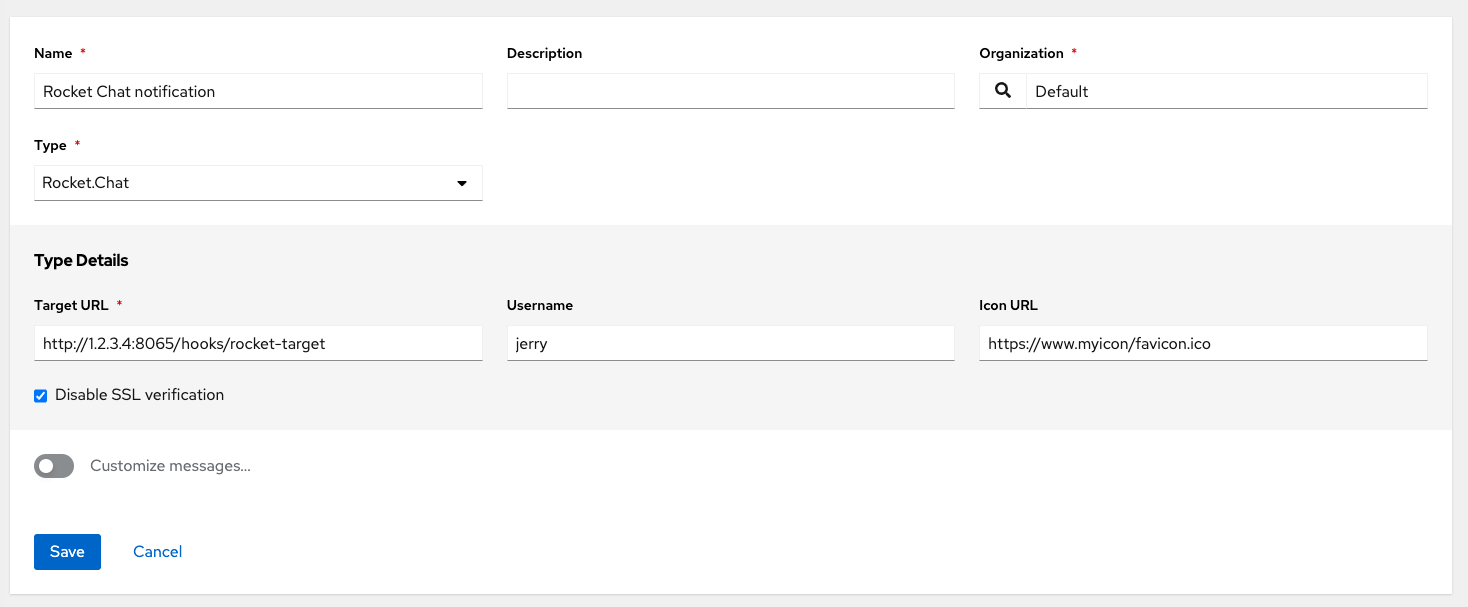

- Rocket.Chat

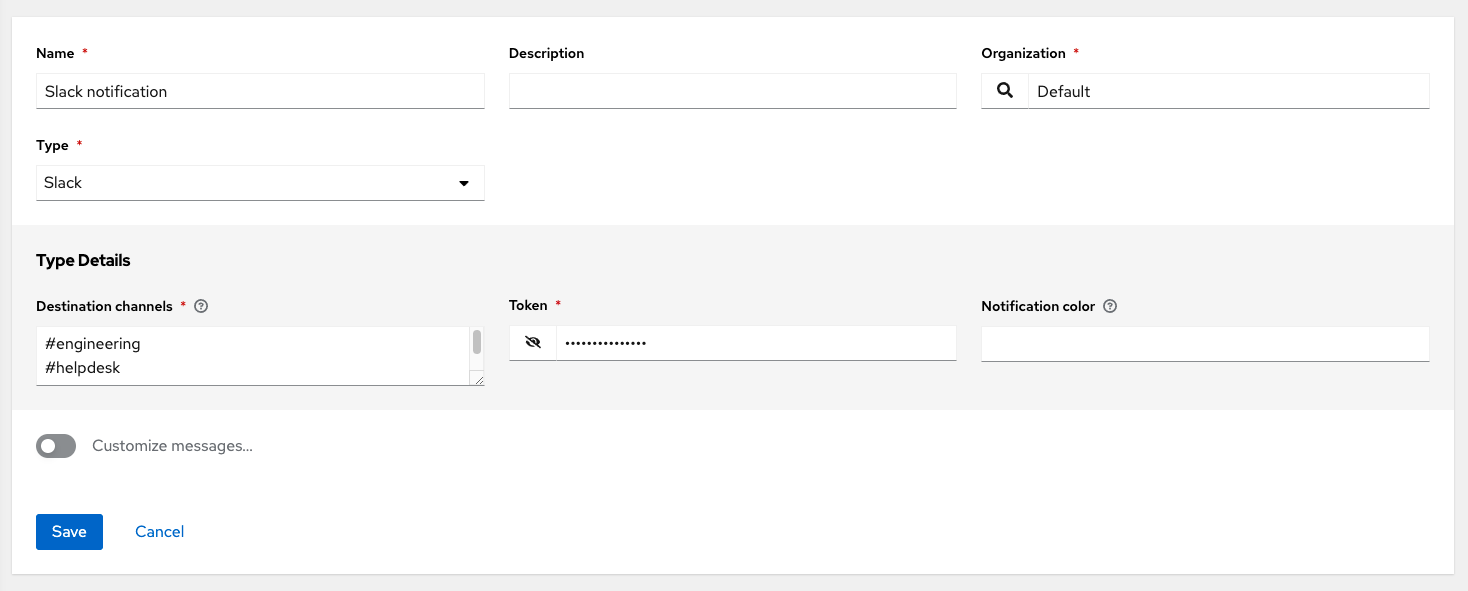

- Slack

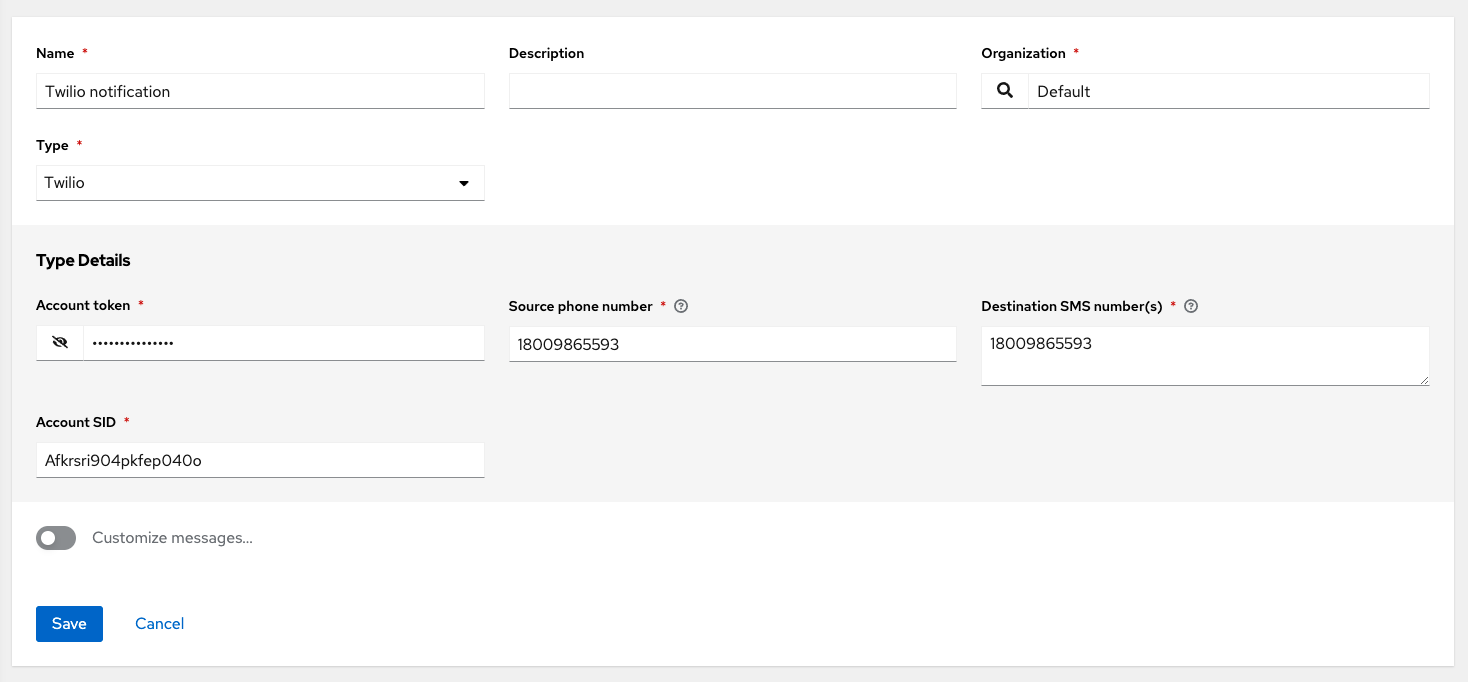

- Twilio

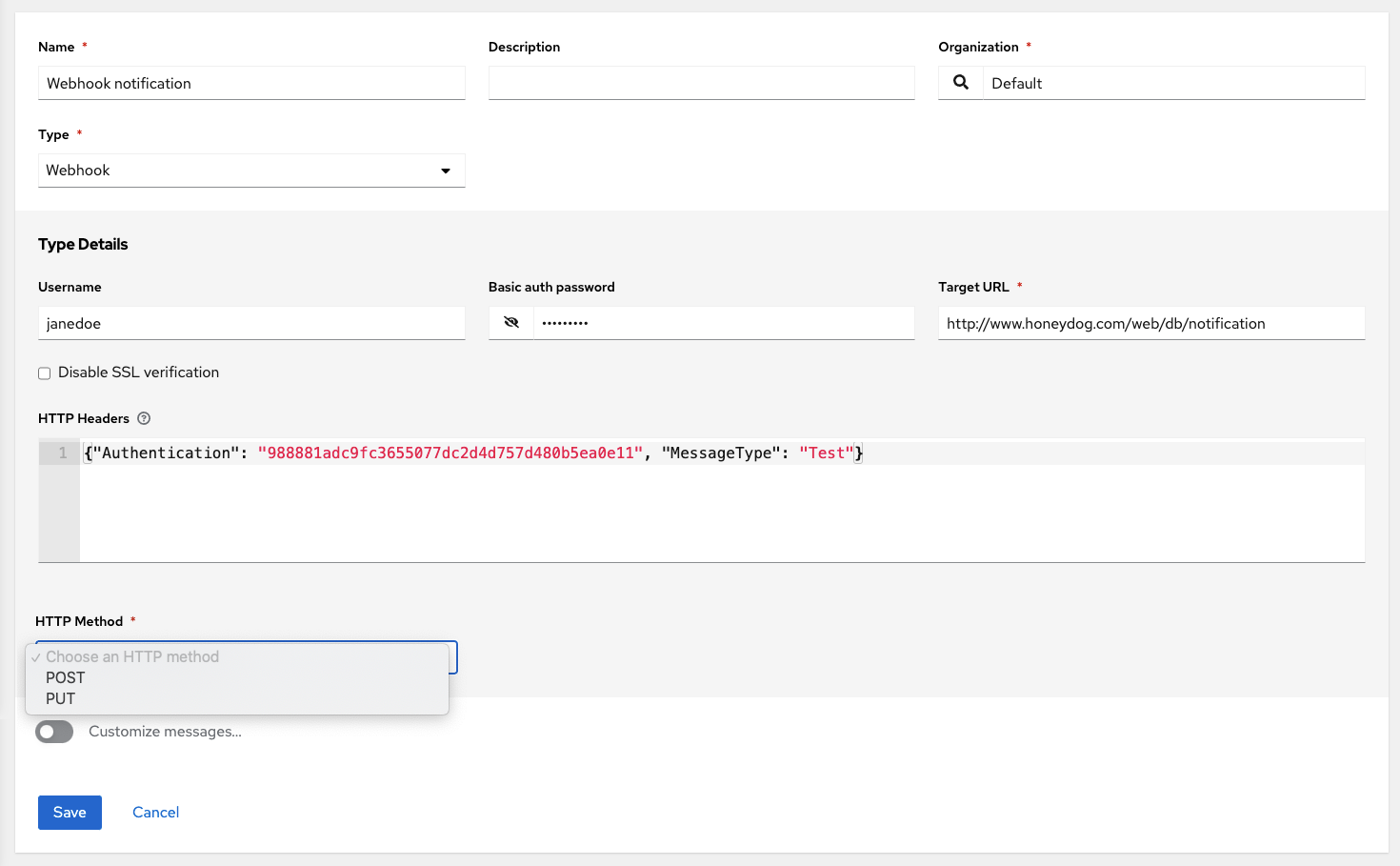

- Webhook (post to an arbitrary webhook, for integration into other tools)

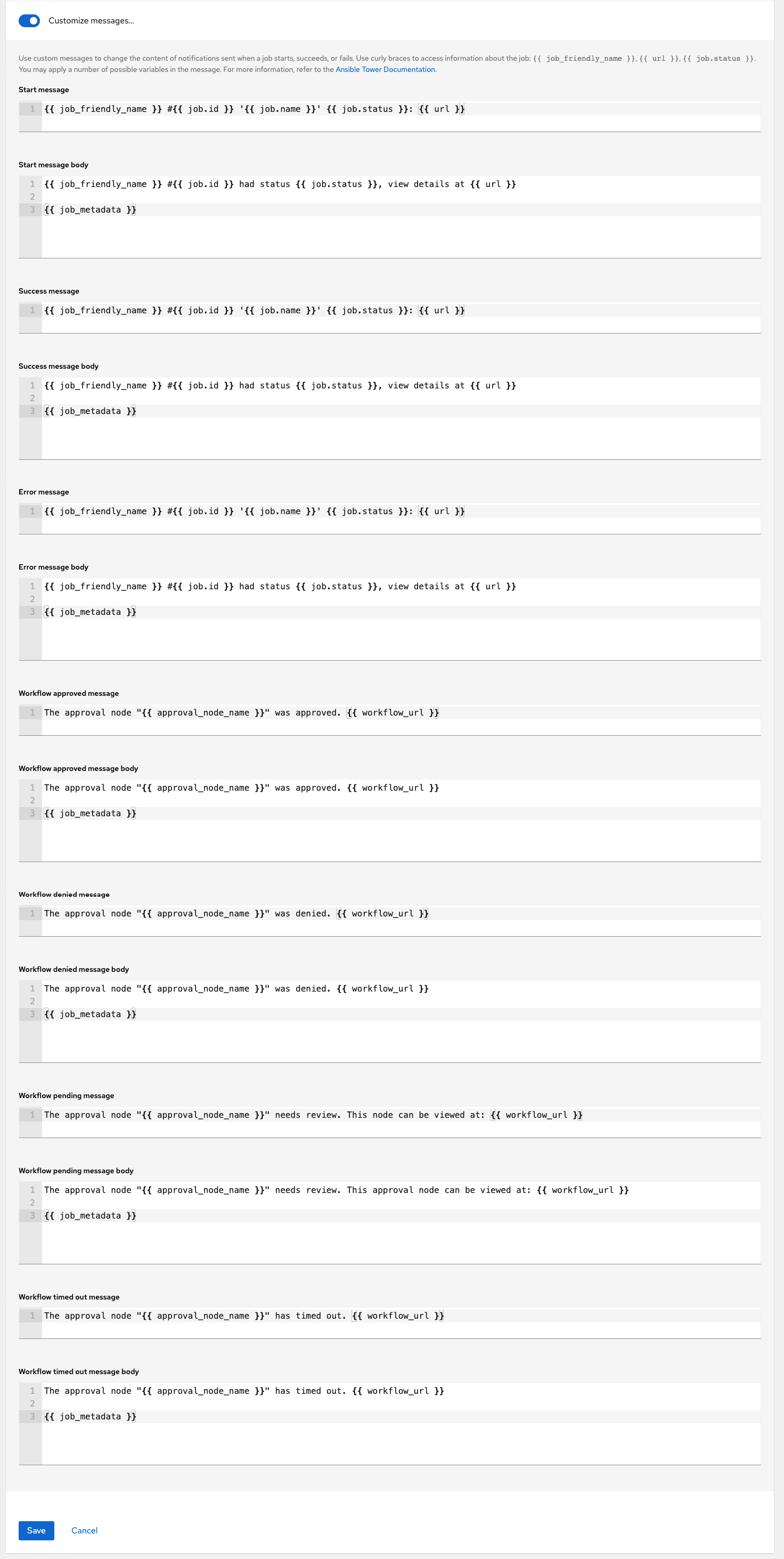

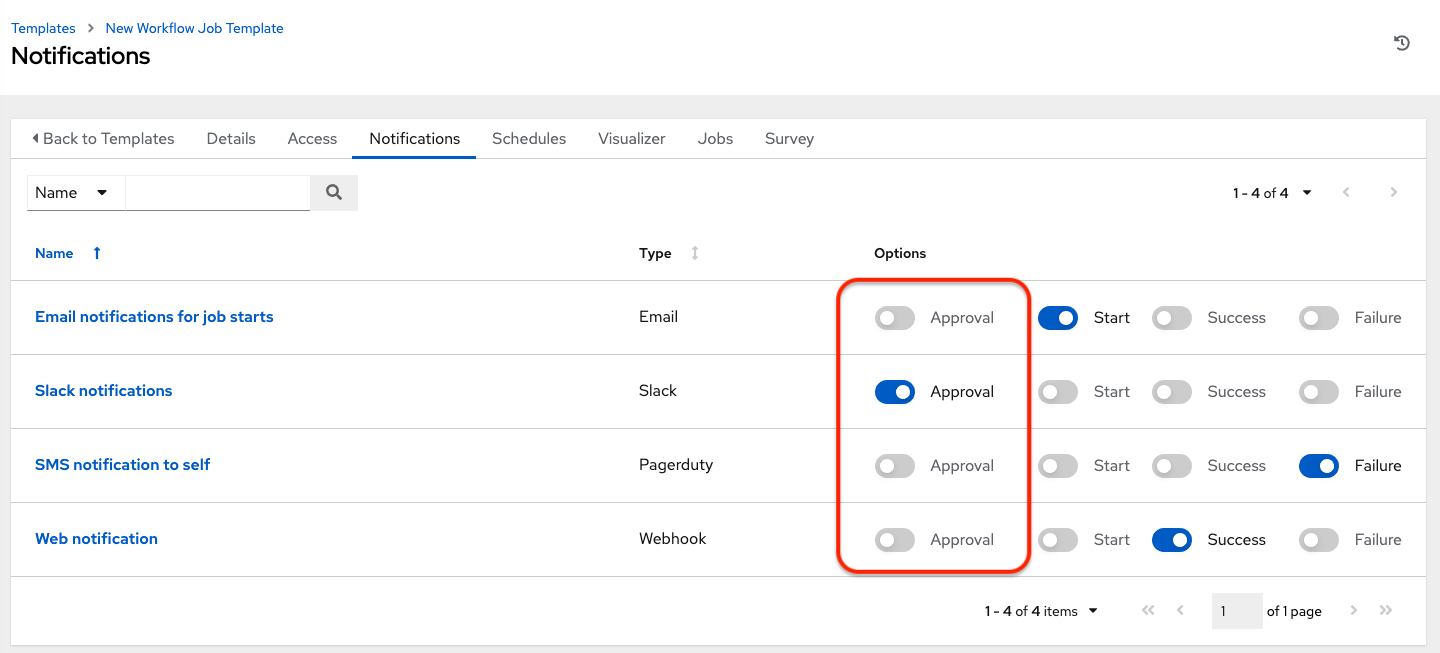

You can also customize notification messages for each of the preceding notification types.

1.12. Integrations

Automation controller supports the following integrations:

- Dynamic inventory sources for Red Hat Satellite 6.

For more information, see Red Hat Satellite 6.

- Red Hat Insights integration, enabling Insights playbooks to be used as an Ansible Automation Platform project.

For more information, see Setting up Insights Remediations.

- Automation hub acts as a content provider for automation controller, requiring both an automation controller deployment and an automation hub deployment running alongside each other.

1.13. Custom Virtual Environments

Custom Ansible environment support enables you to have different Ansible environments and specify custom paths for different teams and jobs.

1.14. Authentication enhancements

Automation controller supports:

- LDAP

- SAML

- token-based authentication

LDAP and SAML support enable you to integrate your enterprise account information in a more flexible manner.

Token-based authentication permits authentication of third-party tools and services with automation controller through integrated OAuth 2 token support.

1.15. Cluster management

Run-time management of cluster groups enables configurable scaling.

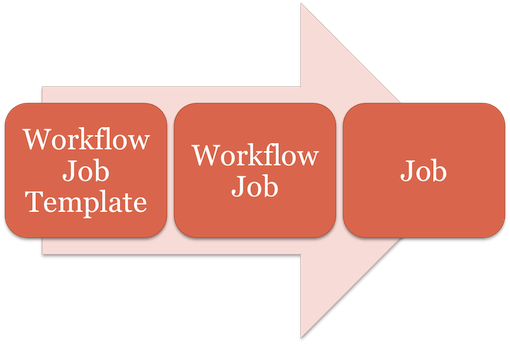

1.16. Workflow enhancements

To model your complex provisioning, deployment, and orchestration workflows, you can use automation controller expanded workflows in several ways:

- Inventory overrides for Workflows: You can override an inventory across a workflow at workflow definition time, or at launch time. Automation controller enables you to define your application deployment workflows, and then re-use them in multiple environments.

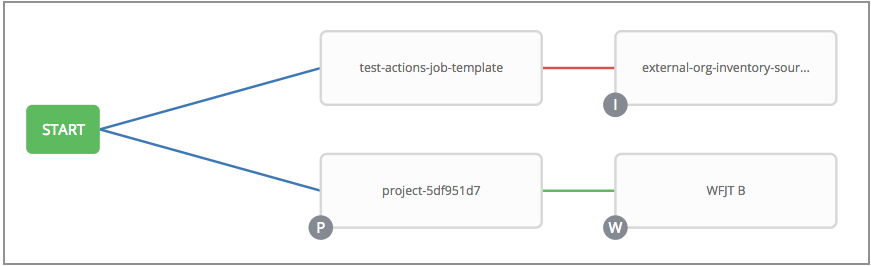

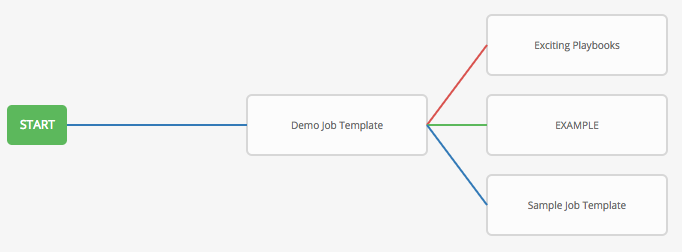

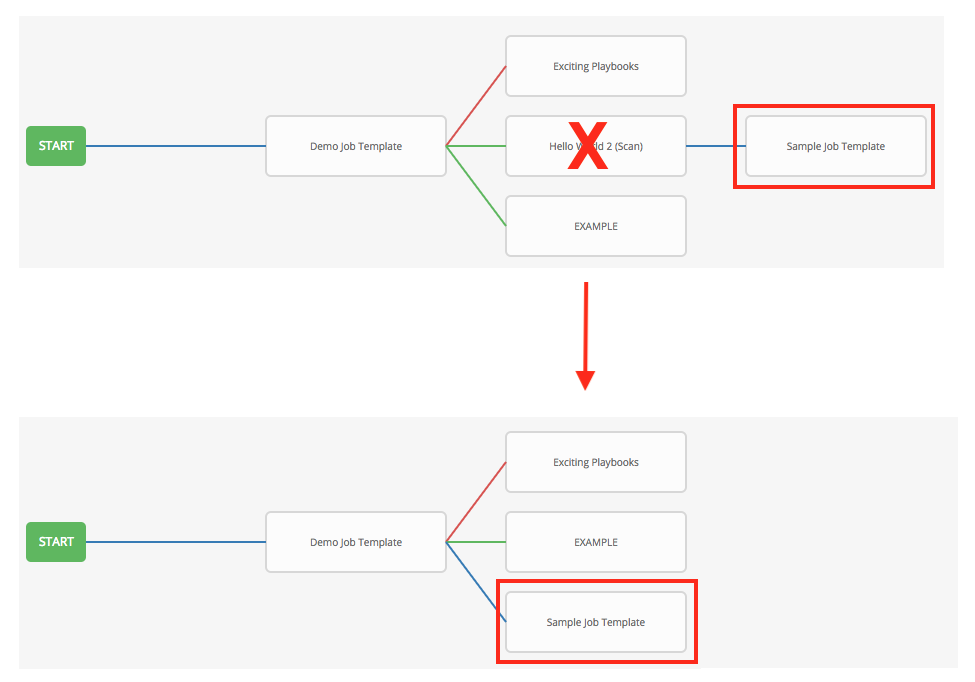

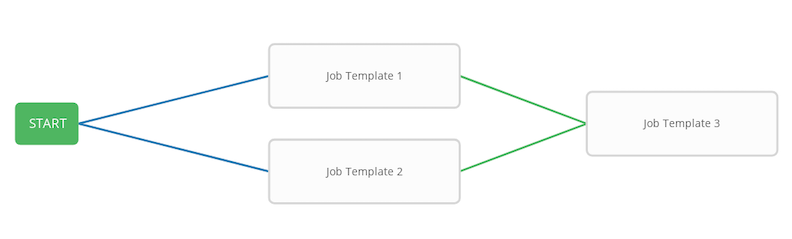

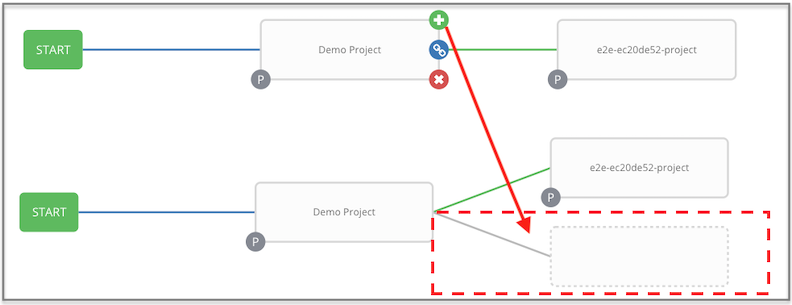

- Convergence nodes for Workflows: When modeling complex processes, you must sometimes wait for multiple steps to finish before proceeding. Automation controller workflows can replicate this; workflow steps can wait for any number of previous workflow steps to complete properly before proceeding.

- Workflow Nesting: You can re-use individual workflows as components of a larger workflow. Examples include combining provisioning and application deployment workflows into a single workflow.

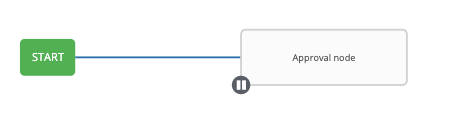

- Workflow Pause and Approval: You can build workflows containing approval nodes that require user intervention. This makes it possible to pause workflows in between playbooks so that a user can give approval (or denial) for continuing on to the next step in the workflow.

For more information, see Workflows in automation controller.

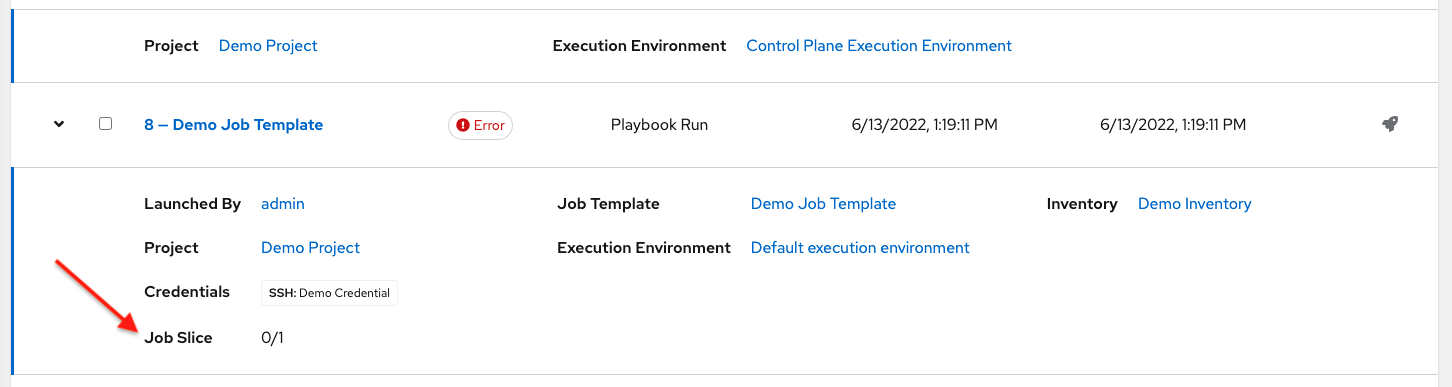

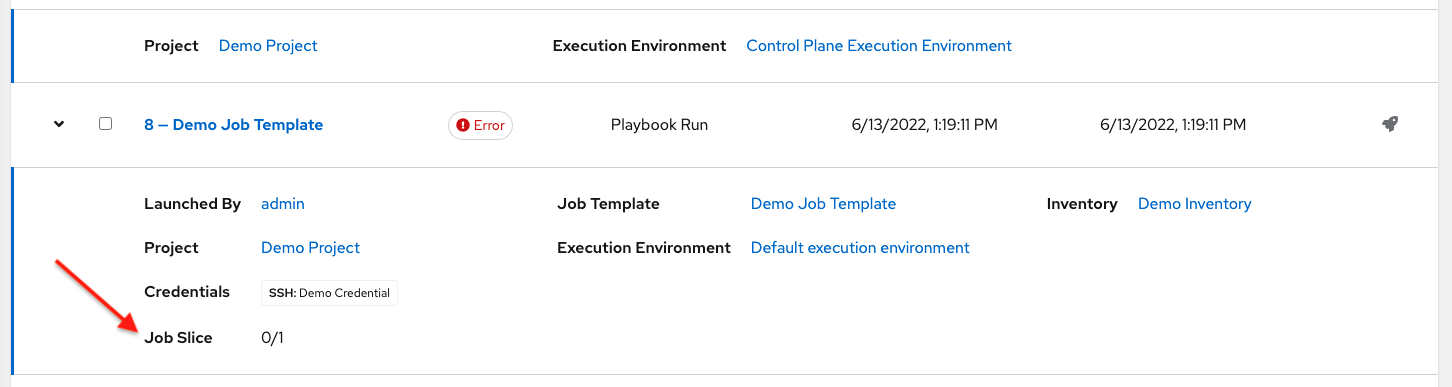

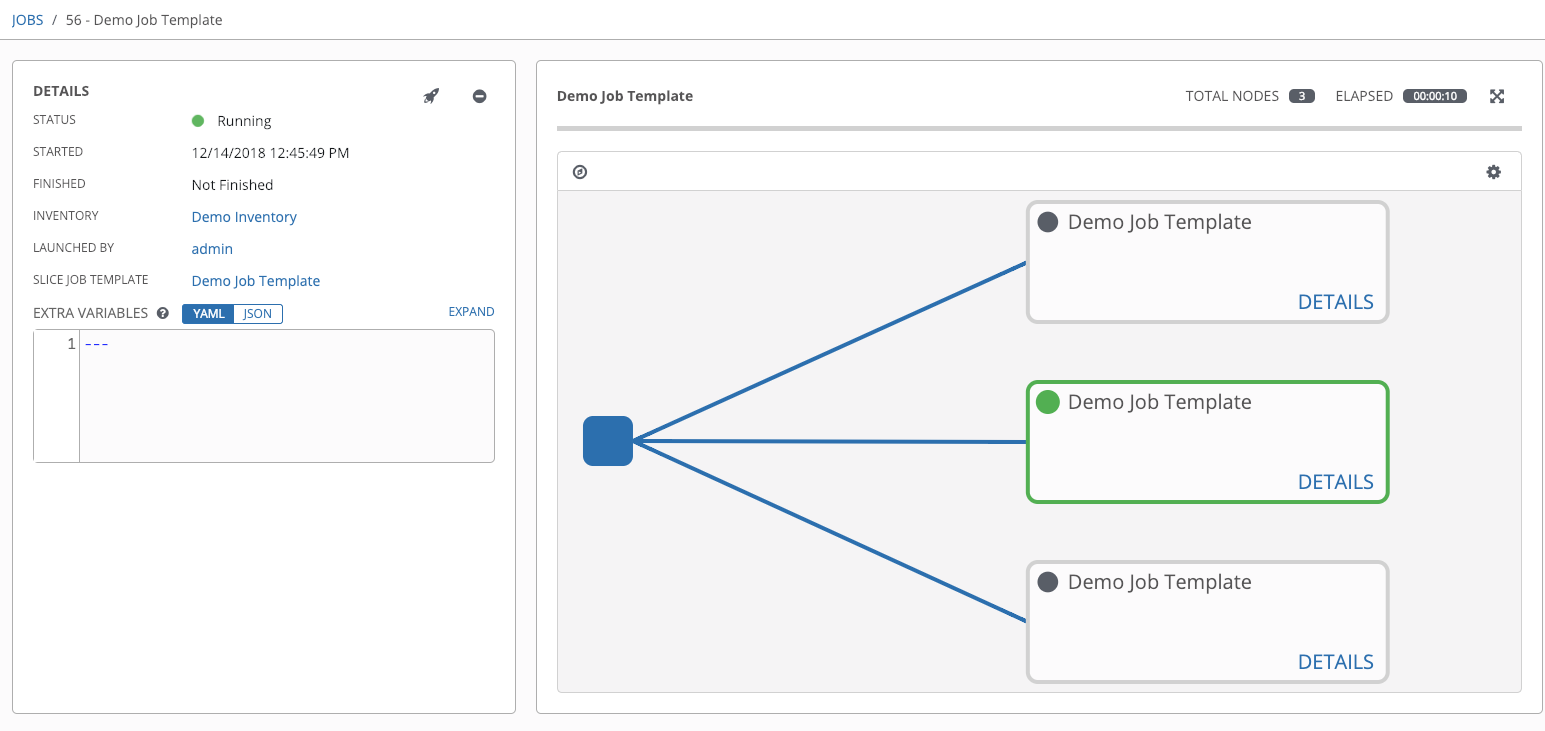

1.17. Job distribution

Take a fact gathering or configuration job running across thousands of machines and divide it into slices that can be distributed across your automation controller cluster for increased reliability, faster job completion, and improved cluster use.

For example, you can change a parameter across 15,000 switches at scale, or gather information across your multi-thousand-node RHEL estate.

For more information, see Job Slicing.
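The idea of dividing one large job into slices can be illustrated as follows. This is a minimal sketch of the concept only, assuming a simple round-robin split; it is not the controller's own slicing logic, and the function name and host names are hypothetical.

```python
from typing import List, Sequence


def slice_hosts(hosts: Sequence[str], slice_count: int) -> List[List[str]]:
    """Split hosts into slice_count groups of near-equal size (round-robin)."""
    if slice_count < 1:
        raise ValueError("slice_count must be at least 1")
    return [list(hosts[offset::slice_count]) for offset in range(slice_count)]


# 15,000 hypothetical switches divided into 4 slices.
hosts = [f"switch{n:05d}" for n in range(15000)]
slices = slice_hosts(hosts, 4)
print([len(s) for s in slices])  # prints [3750, 3750, 3750, 3750]
```

Each slice contains a disjoint subset of the inventory, so the slices can run in parallel on different cluster nodes without any host being processed twice.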

1.18. Support for deployment in a FIPS-enabled environment

Automation controller deploys and runs in restricted modes such as FIPS.

1.19. Limit the number of hosts per organization

Many large organizations have instances shared among many organizations. To ensure that one organization cannot use all the licensed hosts, this feature enables superusers to set a specified upper limit on how many licensed hosts can be allocated to each organization. The automation controller algorithm factors changes in the limit for an organization and the number of total hosts across all organizations. Inventory updates fail if an inventory synchronization brings an organization out of compliance with the policy. Additionally, superusers are able to over-allocate their licenses, with a warning.

1.20. Inventory plugins

The following inventory plugins are used from upstream collections:

- amazon.aws.aws_ec2

- community.vmware.vmware_vm_inventory

- azure.azcollection.azure_rm

- google.cloud.gcp_compute

- theforeman.foreman.foreman

- openstack.cloud.openstack

- ovirt.ovirt.ovirt

- awx.awx.tower

1.21. Secret management system

With a secret management system, external credentials are stored and supplied for use in automation controller so you need not provide them directly.

Chapter 2. Automation controller licensing, updates and support

Automation controller is provided as part of your annual Red Hat Ansible Automation Platform subscription.

Ansible is an open source software project and is licensed under the GNU General Public License version 3, as described in the Ansible Source Code.

You must have valid subscriptions attached before installing Ansible Automation Platform.

For more information, see Attaching Subscriptions.

2.1. Trial and evaluation

You require a license to run automation controller. You can start by using a free trial license.

- Trial licenses for Ansible Automation Platform are available at: http://ansible.com/license

- Support is not included in a trial license or during an evaluation of the automation controller software.

2.2. Component licenses

To view the license information for the components included in automation controller, refer to /usr/share/doc/automation-controller-<version>/README, where <version> is the version of automation controller you have installed.

To view a specific license, refer to /usr/share/doc/automation-controller-<version>/*.txt, where * is the name of the license file.

2.3. Node counting in licenses

The automation controller license defines the number of Managed Nodes that can be managed as part of a Red Hat Ansible Automation Platform subscription.

A typical license says "License Count: 500", which sets the maximum number of Managed Nodes at 500.

For more information on managed node requirements for licensing, see https://access.redhat.com/articles/3331481.

Ansible does not recycle node counts or reset automated hosts.

Chapter 3. Logging into automation controller after installation

After you install automation controller, you must log in.

Procedure

- With the login information provided after your installation completed, open a web browser and log in to the automation controller by navigating to its server URL at: https://<CONTROLLER_SERVER_NAME>/

Use the credentials specified during the installation process to log in:

- The default username is admin.

- The password for admin is the value specified.

- Click the More Actions icon ⋮ next to the desired user.

- Click Edit.

- Edit the required details and click Save.

Chapter 4. Managing your Ansible automation controller subscription

Before you can use automation controller, you must have a valid subscription, which authorizes its use.

4.1. Subscription Types

Red Hat Ansible Automation Platform is provided at various levels of support and number of machines as an annual subscription.

Standard:

- Manage any size environment

- Enterprise 8x5 support and SLA

- Maintenance and upgrades included

- Review the SLA at Product Support Terms of Service

- Review the Red Hat Support Severity Level Definitions

Premium:

- Manage any size environment, including mission-critical environments

- Premium 24x7 support and SLA

- Maintenance and upgrades included

- Review the SLA at Product Support Terms of Service

- Review the Red Hat Support Severity Level Definitions

All subscription levels include regular updates and releases of automation controller, Ansible, and any other components of the Platform.

For more information, contact Ansible through the Red Hat Customer Portal or at http://www.ansible.com/contact-us/.

4.2. Obtaining an authorized Ansible automation controller subscription

If you already have a subscription to a Red Hat product, you can acquire an automation controller subscription through that subscription. If you do not have a subscription to Red Hat Ansible Automation Platform and Red Hat Satellite, you can request a trial subscription.

Procedure

- If you have a Red Hat Ansible Automation Platform subscription, use your Red Hat customer credentials when you launch the automation controller to access your subscription information. See Importing a subscription.

If you have a non-Ansible Red Hat or Satellite subscription, access automation controller with one of these methods:

- Enter your username and password on the license page.

- Obtain a subscriptions manifest from the Subscription Allocations page on the Red Hat Customer Portal. For more information, see Obtaining a subscriptions manifest.

- If you do not have a Red Hat Ansible Automation Platform subscription, go to Try Red Hat Ansible Automation Platform and request a trial subscription.

Additional resources

- To understand what is supported with your subscription, see Automation controller licensing, updates and support.

- If you have issues with your subscription, contact your Sales Account Manager or Red Hat Customer Service at: https://access.redhat.com/support/contact/customerService/.

4.3. Obtaining a subscriptions manifest

To upload a subscriptions manifest, first set up your subscription allocations:

Procedure

- Navigate to https://access.redhat.com/management/subscription_allocations. The Subscriptions Allocations page contains no subscriptions until you create one.

Click Create New subscription allocation.

Note

If Create New subscription allocation does not display, or is disabled, you do not have the proper permissions to create subscription allocations. To create a subscription allocation, you must either be an Administrator on the Customer Portal, or have the Manage Your Subscriptions role. Contact an access.redhat.com administrator, or organization administrator who can grant you permission to manage subscriptions.

Enter a Name for your subscription and select 6.15 from the Type drop-down menu.

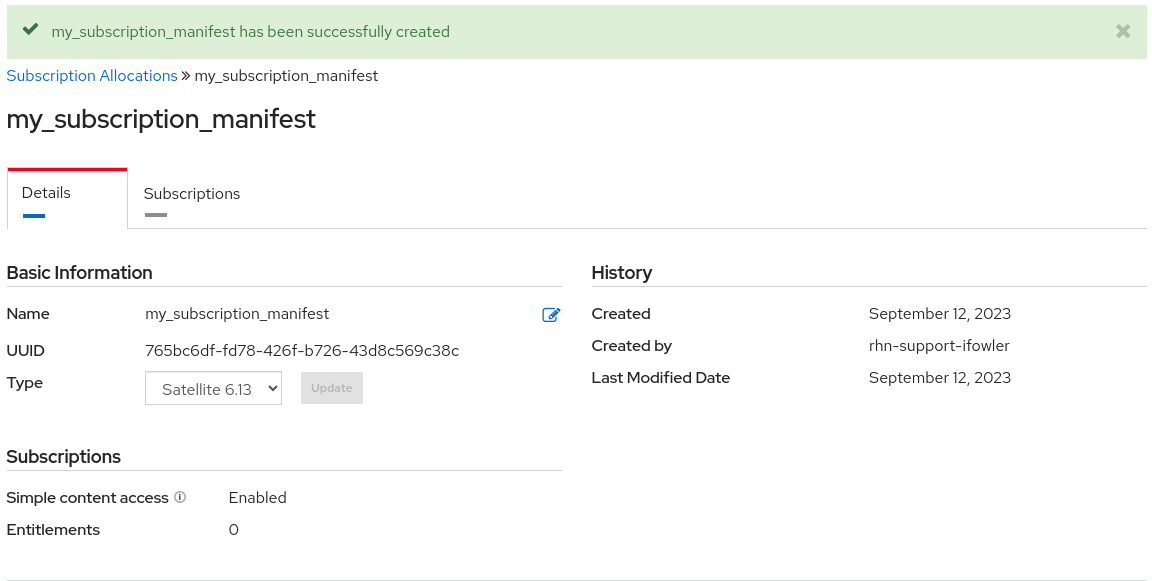

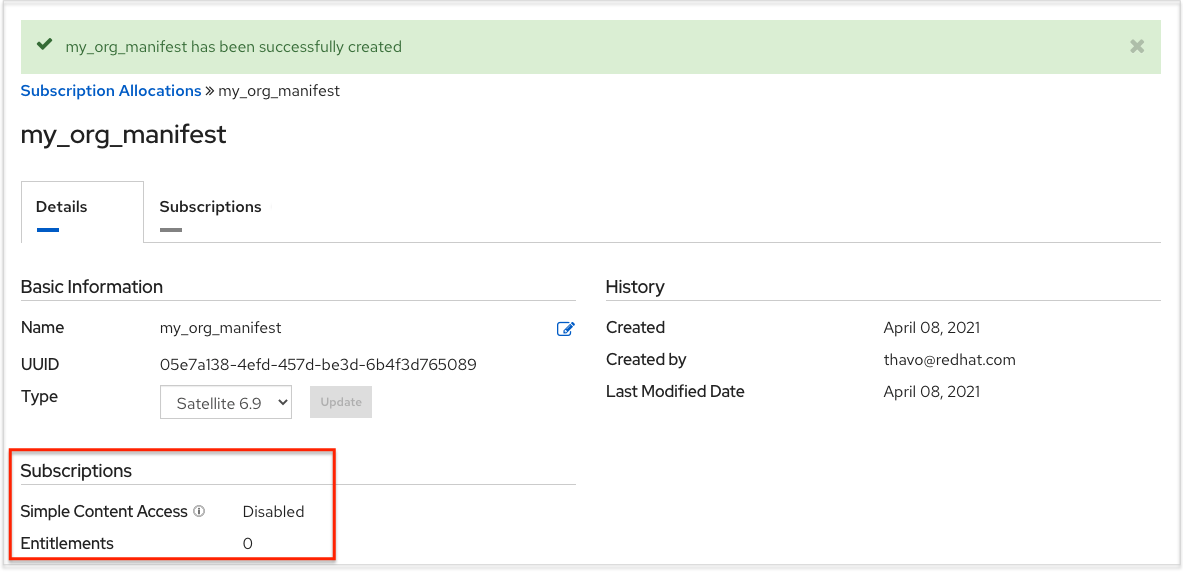

Click Create.

When your subscriptions manifest is successfully created, the number next to Entitlements indicates the number of entitlements associated with your subscription.

4.3.1. Setting up a subscriptions manifest

To obtain a subscriptions manifest, you must add an entitlement to your subscriptions through the Subscriptions tab.

Procedure

- Click the Subscriptions tab.

- If there are no subscriptions to display, click Add Subscriptions.

The following screen enables you to select and add entitlements to put in the manifest file.

You can select multiple Ansible Automation Platform subscriptions in your subscription allocation. Valid Ansible Automation Platform subscriptions commonly go by the name "Red Hat Ansible Automation…".

Specify the number of entitlements or managed nodes to put in the manifest file. This enables you to split up a subscription, for example: 400 nodes on a development cluster and 600 nodes for the production cluster, out of a 1000 node subscription.

Note

You can apply multiple subscriptions to a single installation by adding multiple subscriptions of the same type to a manifest file and uploading them. Similarly, a subset of a subscription can be applied by only allocating a portion of the subscription when creating the manifest.

Click Submit.

The allocations you specified, when successfully added, are displayed in the Subscriptions tab.

- Click the Details tab to access the subscription manifest file.

- Click Export Manifest to export the manifest file for this subscription. A folder pre-pended with manifest_ is downloaded to your local drive. Multiple subscriptions with the same SKU are aggregated.

- When you have a subscription manifest, go to the Subscription screen.

- Click Browse to upload the entire manifest file.

- Navigate to the location where the file is saved. Do not open it or upload individual parts of it.

4.4. Importing a subscription

After you have obtained an authorized Ansible Automation Platform subscription, you must import it into the automation controller system before you can use automation controller.

Prerequisites

- You have obtained a subscriptions manifest. For more information, see Obtaining a subscriptions manifest.

Procedure

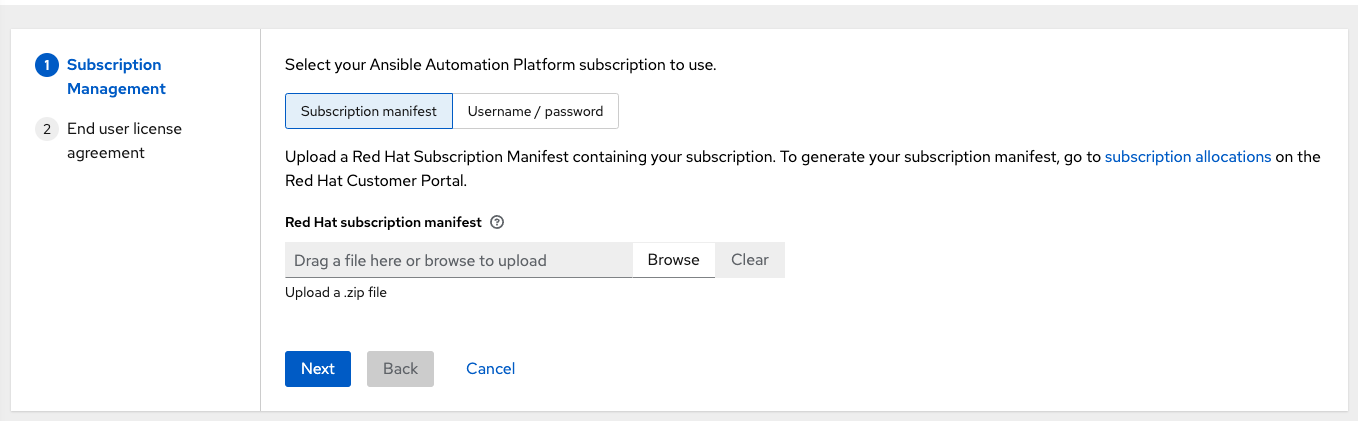

Launch automation controller for the first time. The Subscription Management screen displays.

Retrieve and import your subscription by completing either of the following steps:

If you have obtained a subscription manifest, upload it by navigating to the location where the file is saved. The subscription manifest is the complete .zip file, and not only its component parts.

Note

If the Browse option in the Subscription manifest option is disabled, clear the username and password fields to enable it.

The subscription metadata is then retrieved from the RHSM/Satellite API, or from the manifest provided. If many subscription counts were applied in a single installation, automation controller combines the counts but uses the earliest expiration date as the expiry (at which point you must refresh your subscription).
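The combination rule described above (counts are added, and the earliest expiration date applies) can be illustrated with a short sketch. The `Subscription` class, the `combine` function, and the sample values are hypothetical and only model the rule as stated; they are not part of automation controller.

```python
from dataclasses import dataclass
from datetime import date
from typing import Iterable, Tuple


@dataclass(frozen=True)
class Subscription:
    managed_nodes: int
    expires: date


def combine(subscriptions: Iterable[Subscription]) -> Tuple[int, date]:
    """Return (total node count, earliest expiration date)."""
    subs = list(subscriptions)
    if not subs:
        raise ValueError("at least one subscription is required")
    return sum(s.managed_nodes for s in subs), min(s.expires for s in subs)


# Two hypothetical subscriptions applied to one installation.
total, expiry = combine([
    Subscription(400, date(2025, 6, 30)),
    Subscription(600, date(2025, 3, 31)),
])
print(total, expiry)  # prints 1000 2025-03-31
```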

If you are using your Red Hat customer credentials, enter your username and password on the license page. Use your Satellite username and password if your automation controller cluster nodes are registered to Satellite with Subscription Manager. After you enter your credentials, click Get Subscriptions.

Automation controller retrieves your configured subscription service. Then, it prompts you to select the subscription that you want to run and applies that metadata to automation controller. You can log in over time and retrieve new subscriptions if you have renewed.

Click Next to proceed to the Tracking and Insights page.

Tracking and insights collect data to help Red Hat improve the product and deliver a better user experience. For more information about data collection, see Usability Analytics and Data Collection of the Automation controller Administration Guide.

This option is checked by default, but you can opt out of any of the following:

- User analytics. Collects data from the controller UI.

- Insights Analytics. Provides a high-level analysis of your automation with automation controller. It helps you to identify trends and anomalous use of the controller. For opt-in of Automation Analytics to be effective, your instance of automation controller must be running on Red Hat Enterprise Linux. For more information, see the Automation Analytics section.

Note

You can change your analytics data collection preferences at any time.

- After you have specified your tracking and Insights preferences, click Next to proceed to the End User Agreement.

Review and check the I agree to the End User License Agreement checkbox and click Submit.

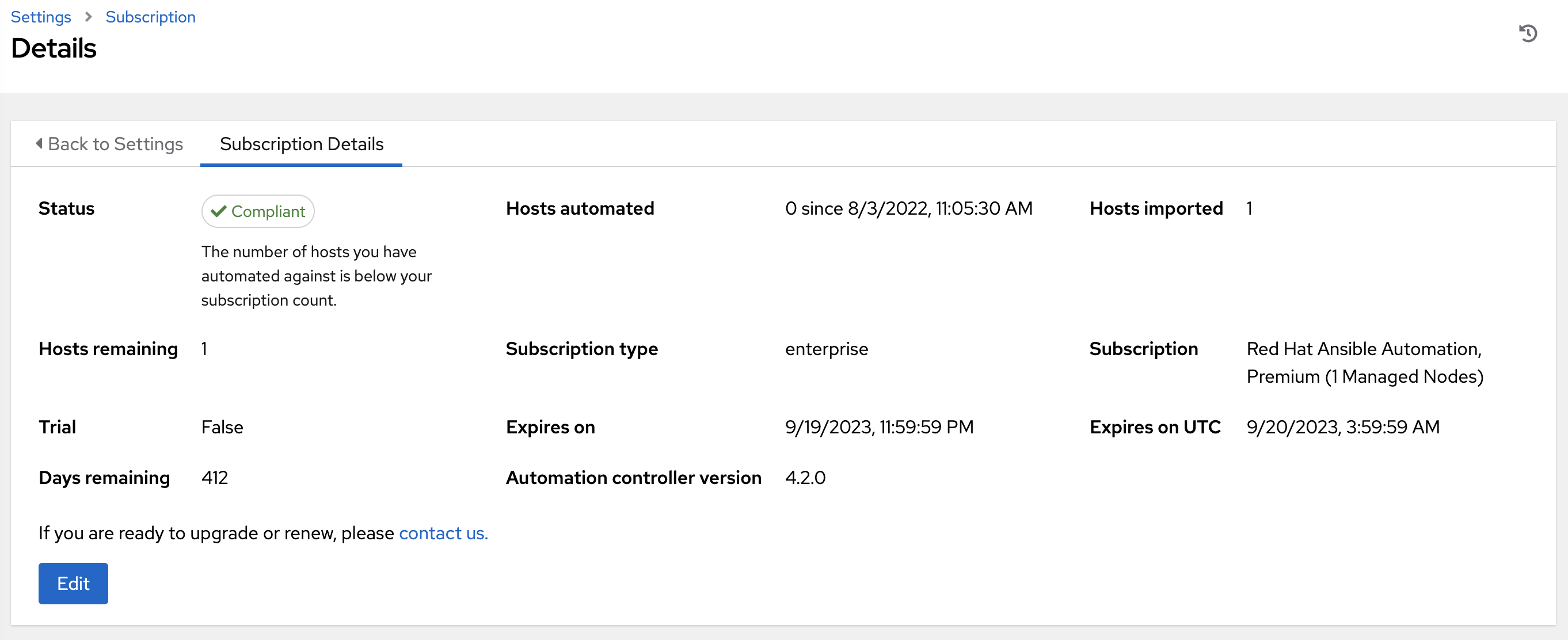

After your subscription is accepted, automation controller displays the subscription details and opens the Dashboard.

Optional: To return to the Subscription settings screen from the Dashboard, select Settings → Subscription settings in the navigation panel.

Troubleshooting your subscription

When your subscription expires (you can check this in the Subscription details of the Subscription settings window), you must renew it in automation controller. You can do this by either importing a new subscription, or setting up a new subscription.

If you encounter the "Error fetching licenses" message, check that you have the proper permissions required for the Satellite user. The automation controller administrator requires this to apply a subscription.

The Satellite username and password are used to query the Satellite API for existing subscriptions. From the Satellite API, the automation controller receives metadata about those subscriptions, then filters through to find valid subscriptions that you can apply. These are then displayed as valid subscription options in the UI.

The following Satellite roles grant proper access:

- Custom with view_subscriptions and view_organizations filter

- Viewer

- Administrator

- Organization Administrator

- Manager

Use the Custom role for your automation controller integration, as it is the most restrictive. For more information, see the Satellite documentation on managing users and roles.

The System Administrator role is not equal to the Administrator user checkbox, and does not offer enough permissions to access the subscriptions API page.

4.5. Add a subscription manually

If you are unable to apply or update the subscription information by using the automation controller user interface, you can upload the subscriptions manifest manually in an Ansible playbook.

Use the license module in the ansible.controller collection:

- name: Set the license using a file
  license:
    manifest: "/tmp/my_manifest.zip"

For more information, see the Automation controller license module.

4.6. Attaching Subscriptions

You must have valid Ansible Automation Platform subscriptions attached before installing Ansible Automation Platform.

Attaching subscriptions is unnecessary if your Red Hat account has enabled Simple Content Access Mode. However, you must register to Red Hat Subscription Management (RHSM) or Red Hat Satellite before installing Ansible Automation Platform.

Procedure

To find the pool_id of your subscription, enter the following command:

# subscription-manager list --available --all | grep "Ansible Automation Platform" -B 3 -A 6

The command returns the following:

Subscription Name: Red Hat Ansible Automation Platform, Premium (5000 Managed Nodes)
Provides: Red Hat Ansible Engine
          Red Hat Single Sign-On
          Red Hat Ansible Automation Platform
SKU: MCT3695
Contract: ********
Pool ID: ********************
Provides Management: No
Available: 4999
Suggested: 1

To attach this subscription, enter the following command:

# subscription-manager attach --pool=<pool_id>

If all nodes have attached, then the repositories are found.

To check whether the subscription attached successfully, enter the following command:

# subscription-manager list --consumed

To remove this subscription, enter the following command:

# subscription-manager remove --pool=<pool_id>

4.7. Troubleshooting: Keep your subscription in compliance

Your subscription has two possible statuses:

- Compliant: Indicates that your subscription is appropriate for the number of hosts that you have automated within your subscription count.

- Out of compliance: Indicates that you have exceeded the number of hosts in your subscription.

Compliance is computed as follows:

managed > manifest_limit => non-compliant

managed <= manifest_limit => compliant

Where: managed is the number of unique managed hosts without deletions, and manifest_limit is the number of managed hosts in the subscription manifest.

Other important information displayed includes:

- Hosts automated: Host count automated by the job, which consumes the license count.

- Hosts imported: Host count considering unique host names across all inventory sources. This number does not impact hosts remaining.

- Hosts remaining: Total host count minus hosts automated.

- Hosts deleted: Hosts that were deleted, freeing the license capacity.

- Active hosts previously deleted: Number of hosts now active that were previously deleted.

For example, if you have a subscription capacity of 10 hosts:

- Starting with 9 hosts, if 2 hosts are added and 3 hosts are deleted, you have 8 hosts (compliant).

- If 3 hosts are then automated again, you have 11 hosts, which puts you over the subscription limit of 10 (non-compliant).

- If you delete hosts, refresh the subscription details to see the change in count and status.
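The compliance rule and the worked example above can be sketched as follows. The function name `is_compliant` is hypothetical; `managed` and `manifest_limit` follow the definitions given in the text.

```python
def is_compliant(managed: int, manifest_limit: int) -> bool:
    """Compliant when managed hosts do not exceed the manifest limit."""
    return managed <= manifest_limit


manifest_limit = 10            # subscription capacity from the example
managed = 9                    # starting host count
managed = managed + 2 - 3      # 2 hosts added, 3 hosts deleted: 8 hosts
first_check = is_compliant(managed, manifest_limit)
managed += 3                   # 3 hosts automated again: 11 hosts
second_check = is_compliant(managed, manifest_limit)
print(first_check, second_check)  # prints True False
```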

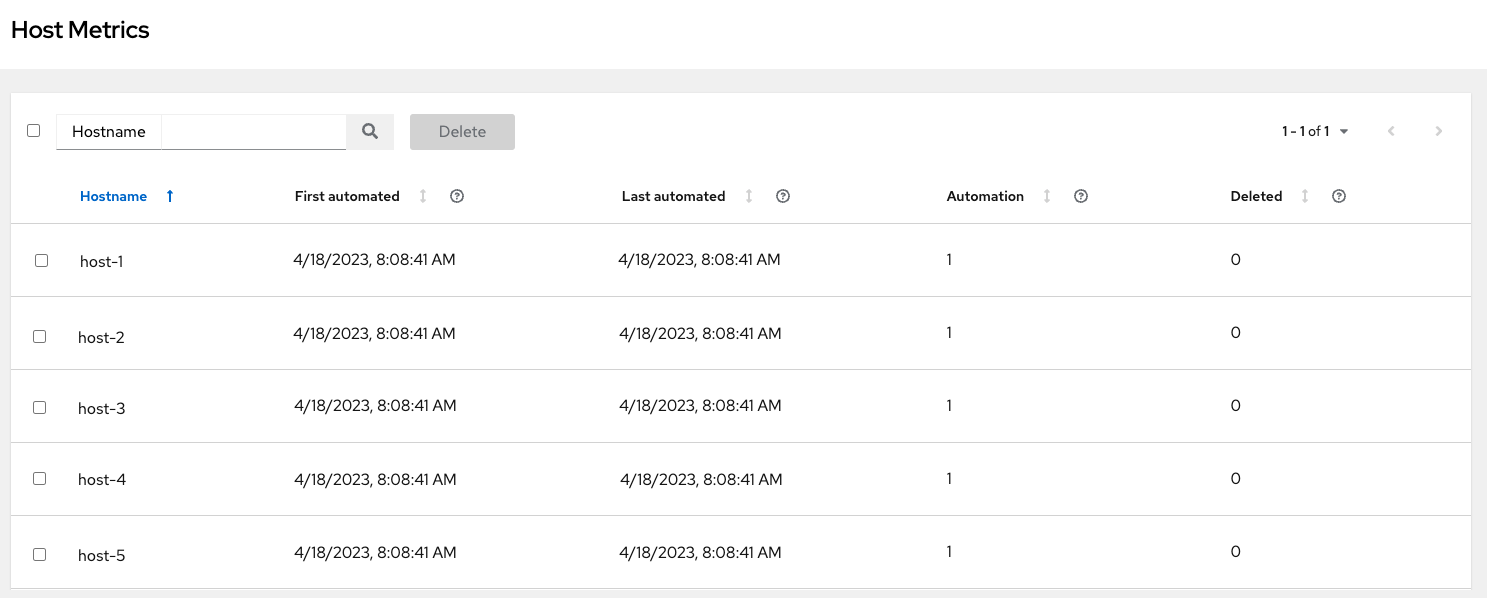

4.8. Viewing the host activity

Procedure

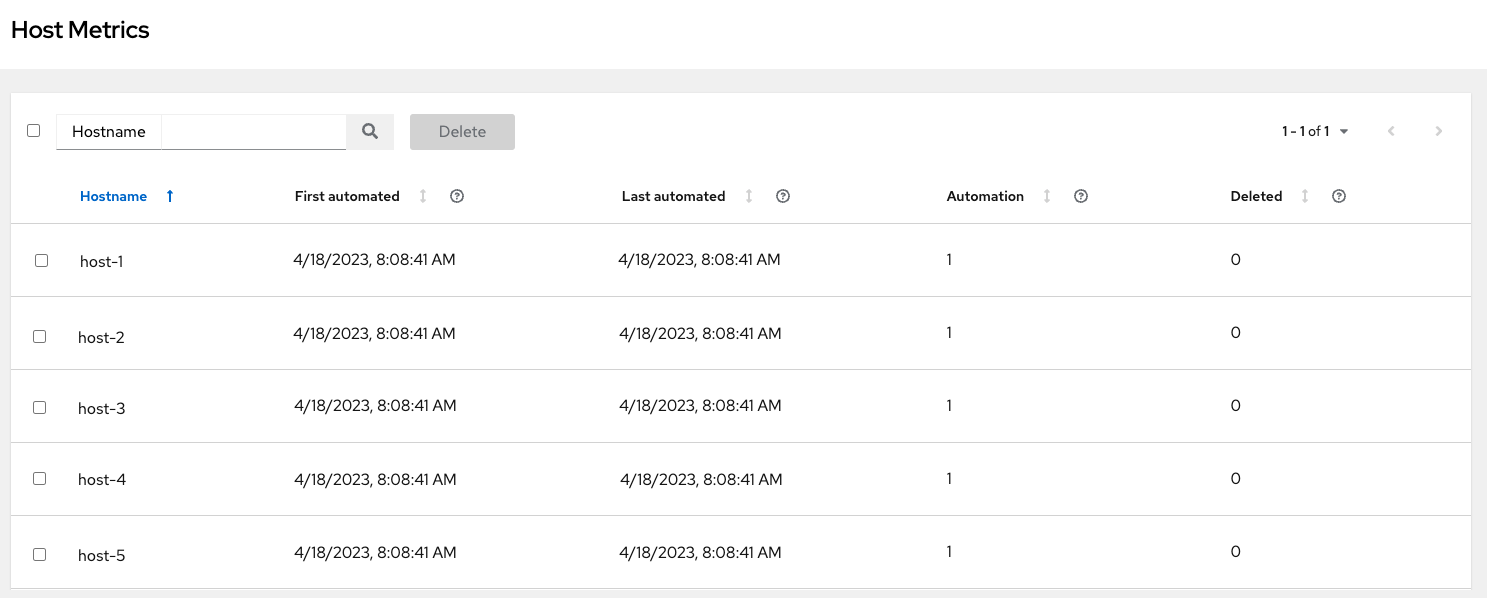

In the navigation panel, select Host Metrics to view the activity associated with hosts, such as those that have been automated and deleted.

Each unique hostname is listed and sorted by the user’s preference.

Note

A scheduled task automatically updates these values on a weekly basis and deletes jobs with hosts that were last automated more than a year ago.

Delete unnecessary hosts directly from the Host Metrics view by selecting the desired hosts and clicking Delete.

These are soft-deleted, meaning their records are not removed, but are not being used and thereby not counted towards your subscription.

4.9. Host metric utilities

Automation controller provides a way to generate a CSV output of the host metric data and host metric summary through the Command Line Interface (CLI). You can also soft delete hosts in bulk through the API.

4.9.1. awx-manage utility

The awx-manage utility supports the following options:

awx-manage host_metric --csv

This command produces host metric data, a host metrics summary file, and a cluster info file. To package all the files into a single tarball for distribution and sharing, use:

awx-manage host_metric --tarball

To specify the number of rows (<n>) to output to each file:

awx-manage host_metric --tarball --rows_per_file <n>

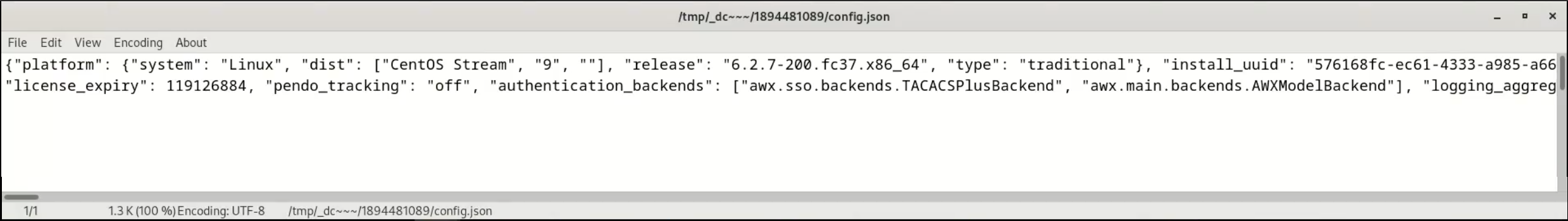

The following is an example of a configuration file:

Automation Analytics receives and uses the JSON file.

4.9.2. API endpoint functions

The API lists only non-deleted records, which are sortable by the last_automation and used_in_inventories columns.

You can use the host metric API endpoint, api/v2/host_metric, to soft delete hosts:

api/v2/host_metric <n> DELETE

A monthly scheduled task automatically deletes jobs that use hosts from the Host Metric table that were last automated more than a year ago.
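A soft delete request against the host metric endpoint could be prepared along the following lines. This is a minimal sketch under stated assumptions: the path api/v2/host_metric is taken from the text, while treating <n> as a record ID appended to that path, the bearer-token header, and the example server name and token are assumptions. The request is only built here, not sent.

```python
from urllib.request import Request


def build_soft_delete_request(server: str, record_id: int, token: str) -> Request:
    """Build (but do not send) a DELETE request for one host metric record.

    Assumption: <n> in the text is read as a record ID in the URL path,
    and authentication uses an OAuth 2 bearer token.
    """
    url = f"https://{server}/api/v2/host_metric/{record_id}/"
    headers = {"Authorization": f"Bearer {token}"}
    return Request(url, method="DELETE", headers=headers)


# Hypothetical values for illustration only.
delete_request = build_soft_delete_request("controller.example.com", 42, "example-token")
print(delete_request.get_method(), delete_request.full_url)
```

Verify the exact endpoint path in the API browser of your installation before sending such a request.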

Chapter 5. The User Interface

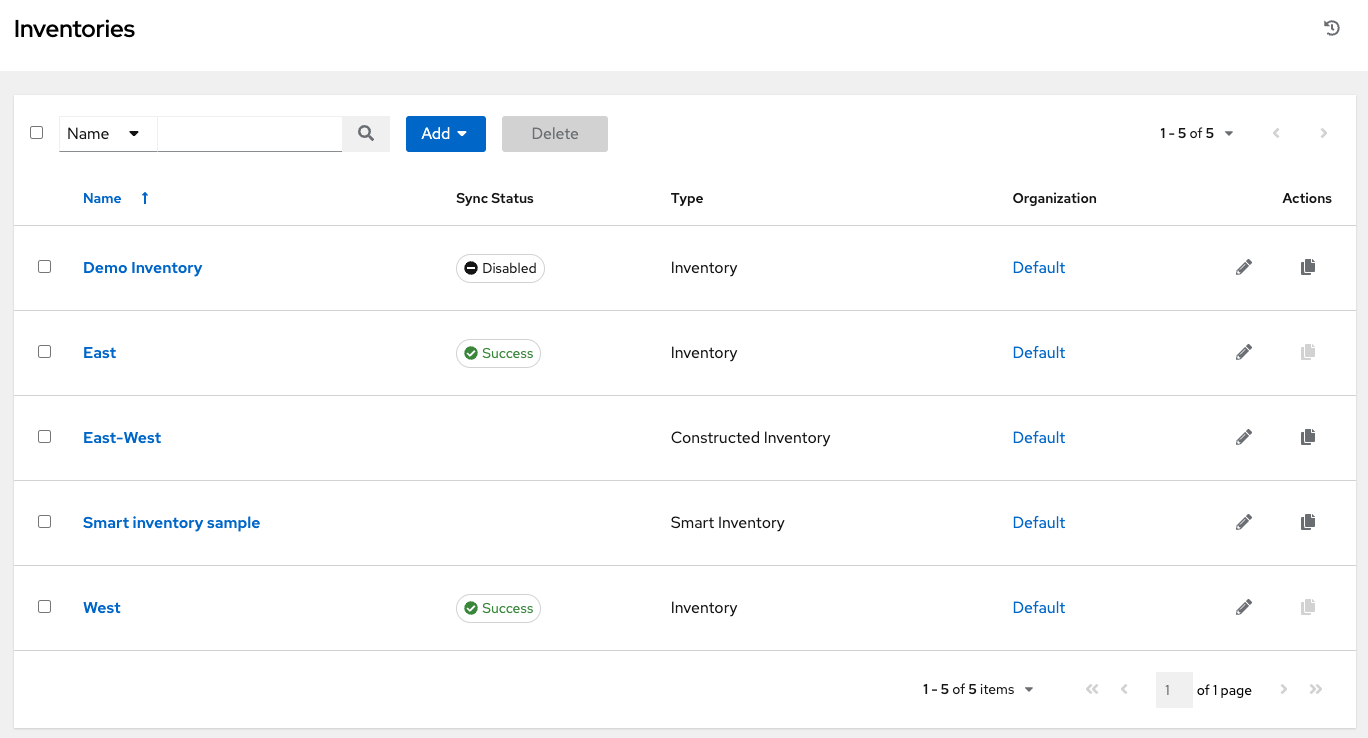

The automation controller User Interface (UI) provides a graphical framework for your IT orchestration requirements. The navigation panel provides quick access to automation controller resources, such as Projects, Inventories, Job Templates, and Jobs.

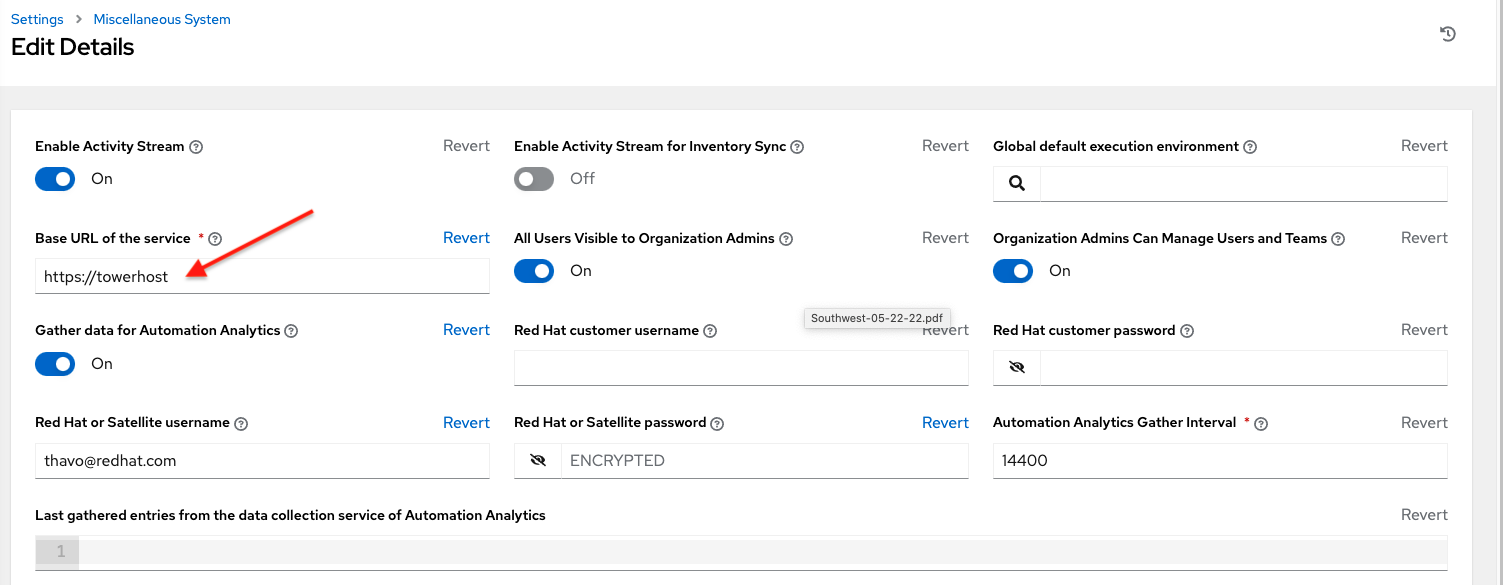

A new automation controller UI is also available as a technical preview and is subject to change in future releases. To preview the new UI, set the Enable Preview of New User Interface toggle to On in the Miscellaneous System option of the Settings menu.

After saving, log out and log back in to access the new UI from the preview banner. To return to the current UI, click the link on the top banner where indicated.

Access your user profile, the About page, view related documentation, or log out using the icons in the page header.

You can view the activity stream for that user by clicking the Activity Stream icon.

5.1. Views

The automation controller UI provides several options for viewing information.

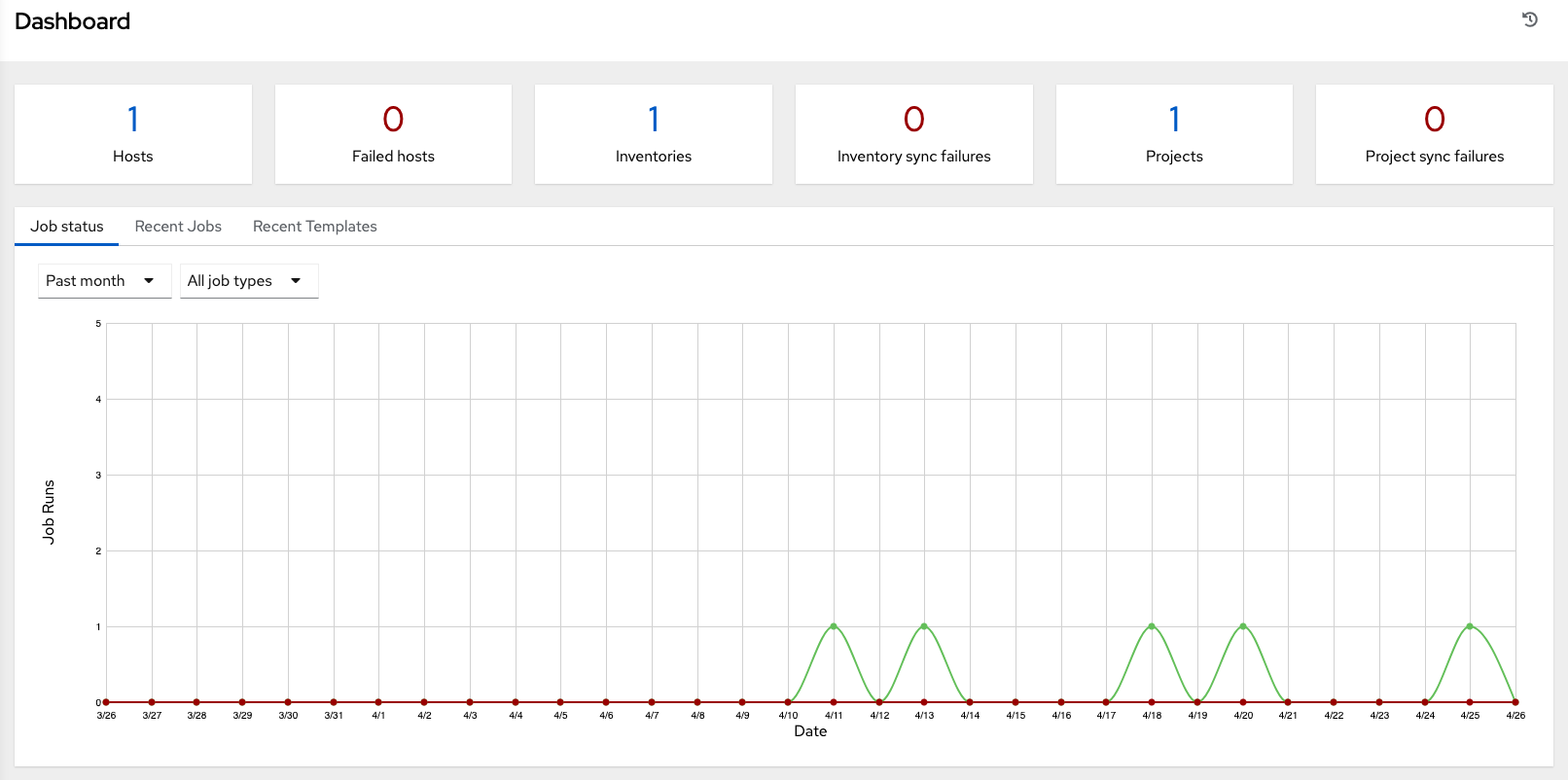

5.1.1. Dashboard View

Use the navigation menu to complete the following tasks:

- Display different views

- Navigate to your resources

- Grant access to users

- Administer automation controller features in the UI

Procedure

- From the navigation panel, select Views to hide or display the Views options.

The dashboard displays a summary of your current Job status.

- You can filter the job status within a period of time or by job type.

- You can also display summaries of Recent Jobs and Recent Templates.

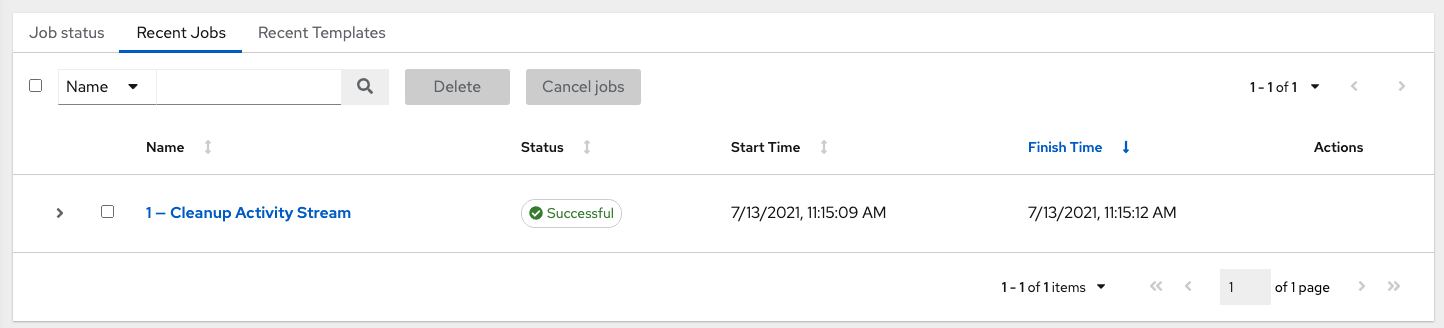

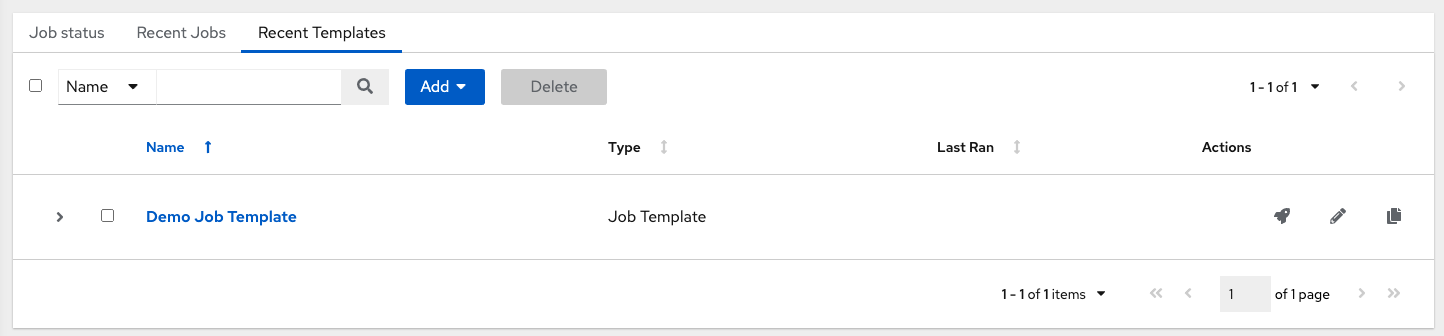

The Recent Jobs tab displays which jobs were most recently run, their status, and the time at which they were run.

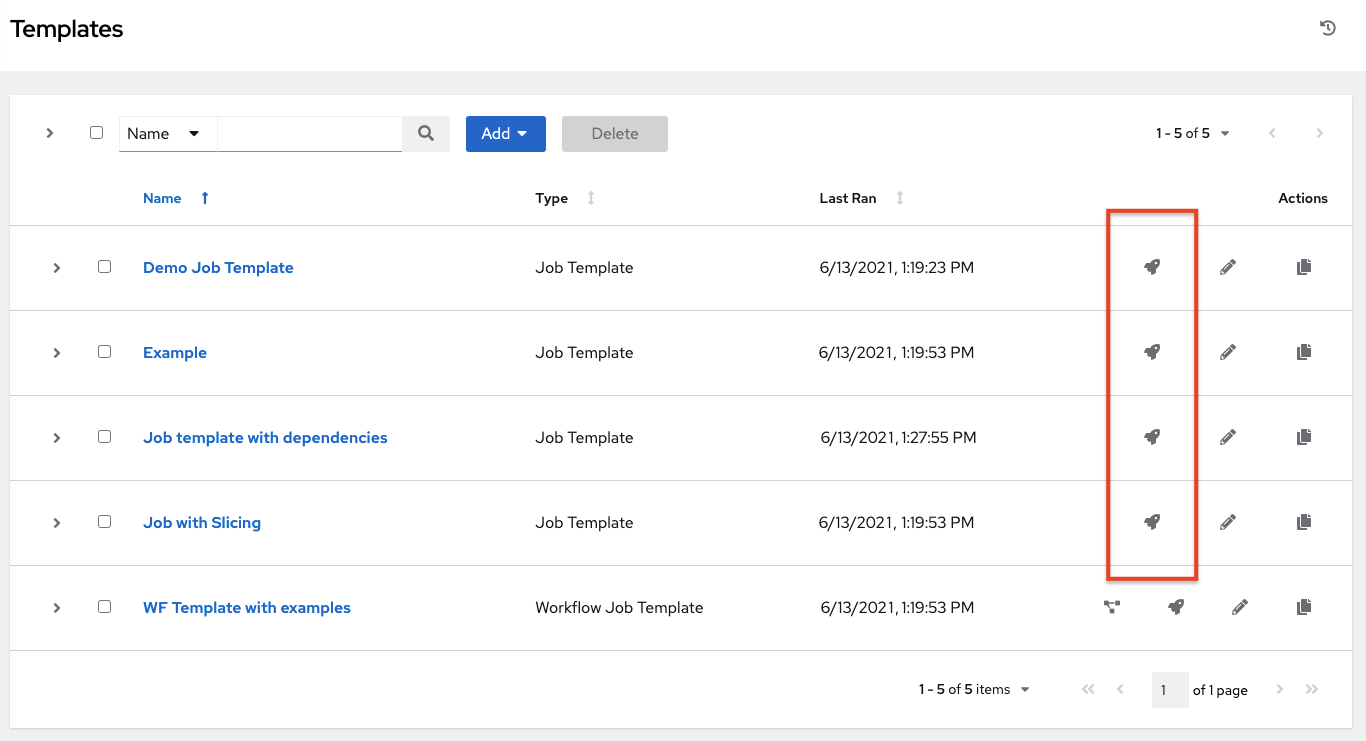

The Recent Templates tab displays a summary of the most recently used templates. You can also access this summary by selecting Resources → Templates from the navigation panel.

Click Views → Dashboard on the navigation panel, or the Ansible Automation Platform logo at any time to return to the Dashboard.

5.1.2. Jobs view

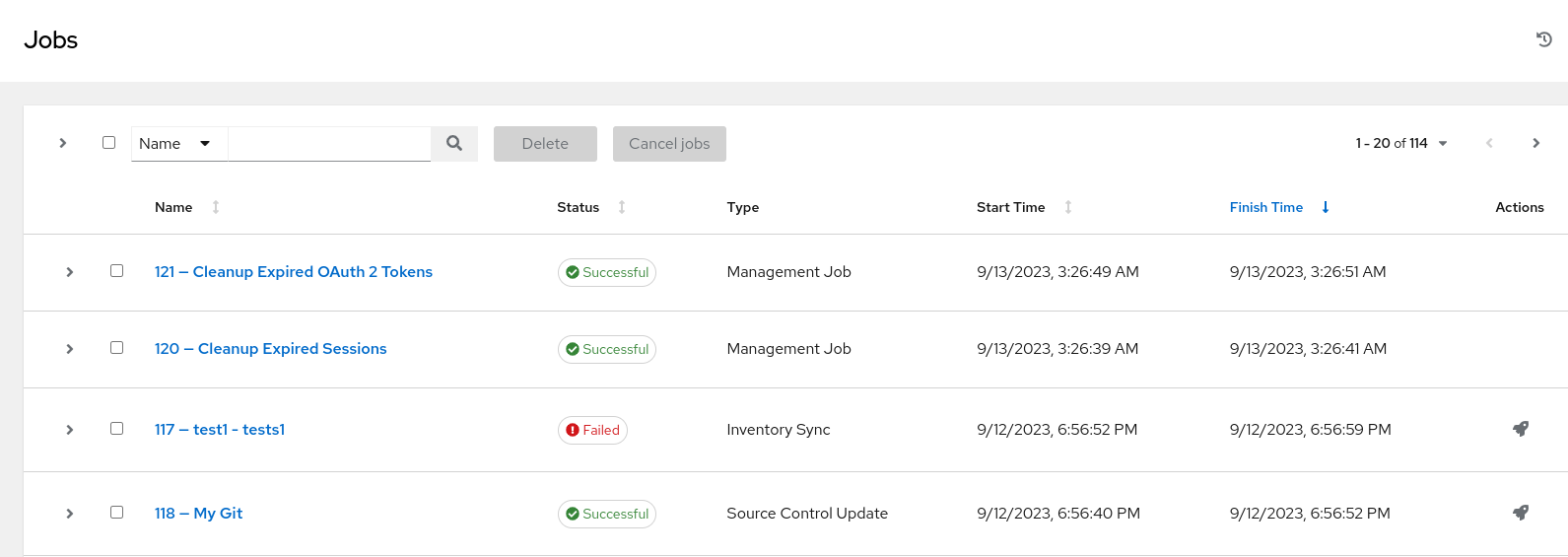

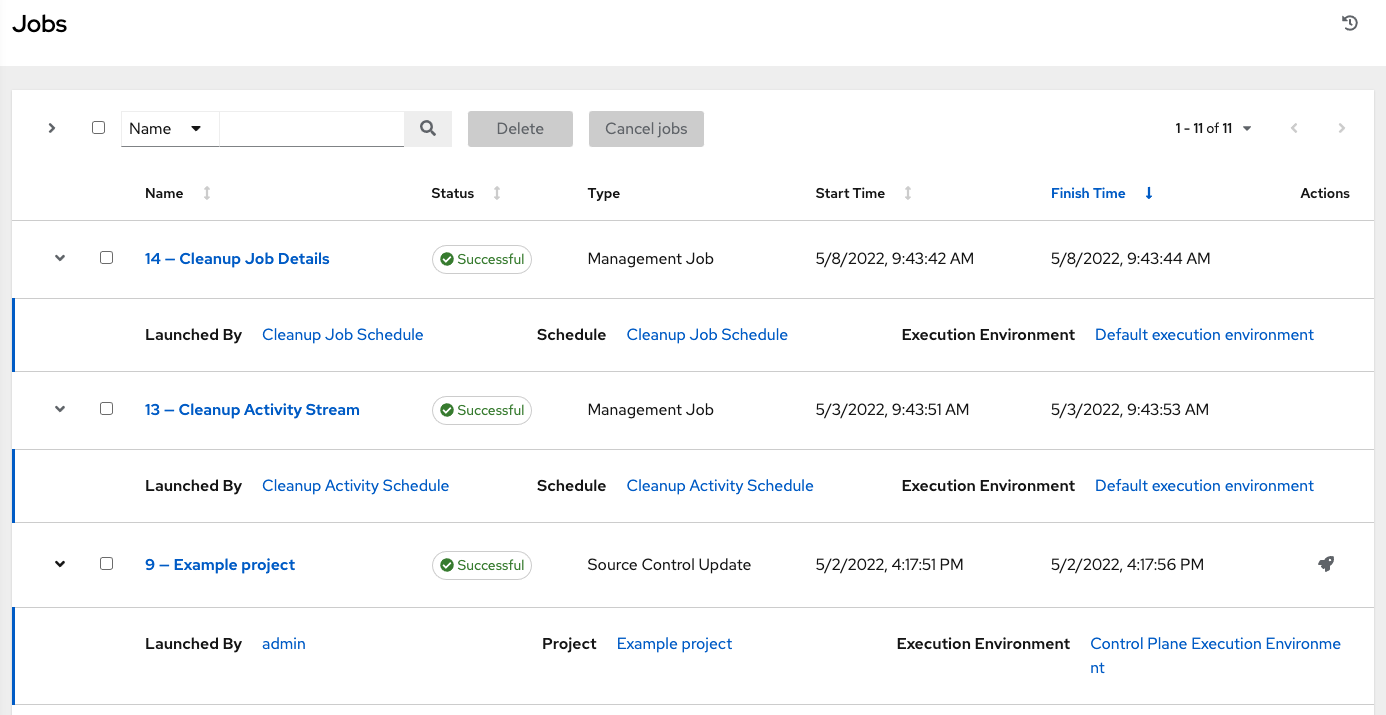

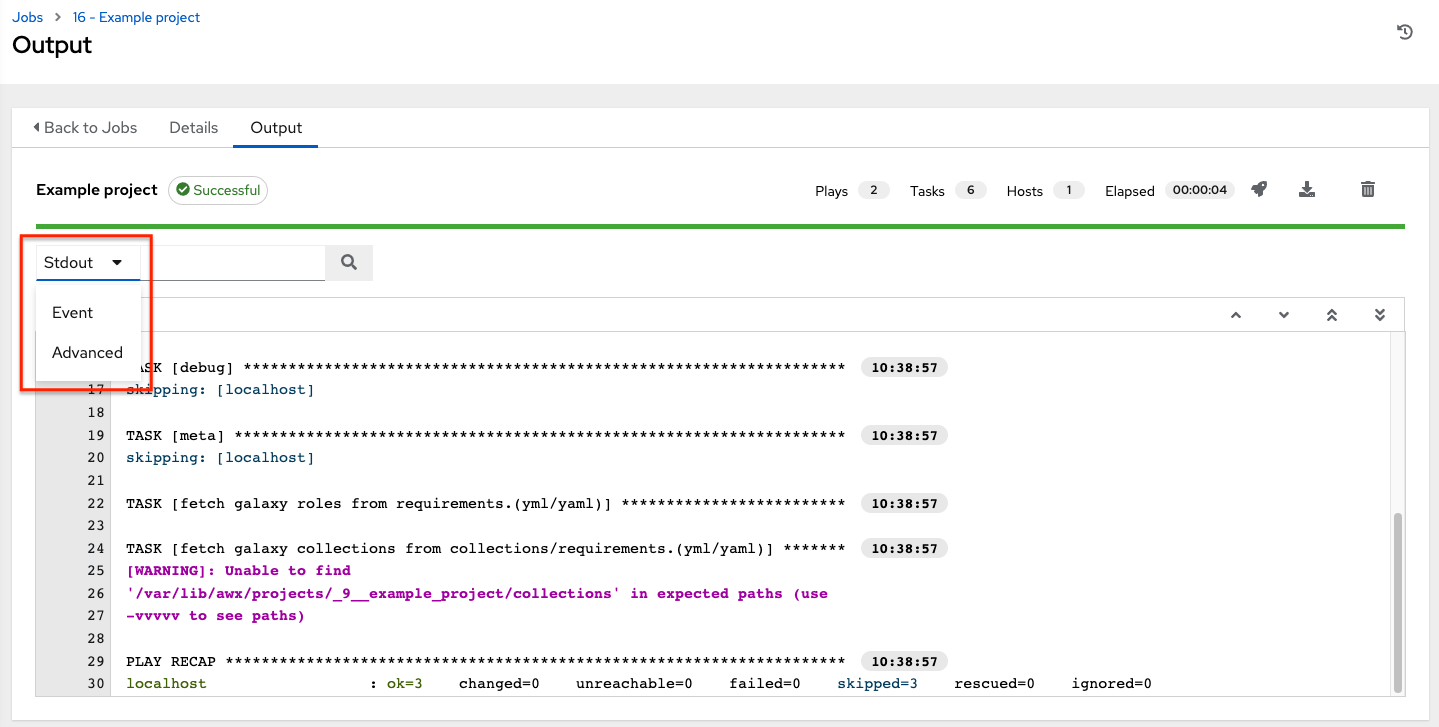

- From the navigation panel, select Views → Jobs. This view displays the jobs that have run, including projects, templates, management jobs, SCM updates, and playbook runs.

5.1.3. Schedules view

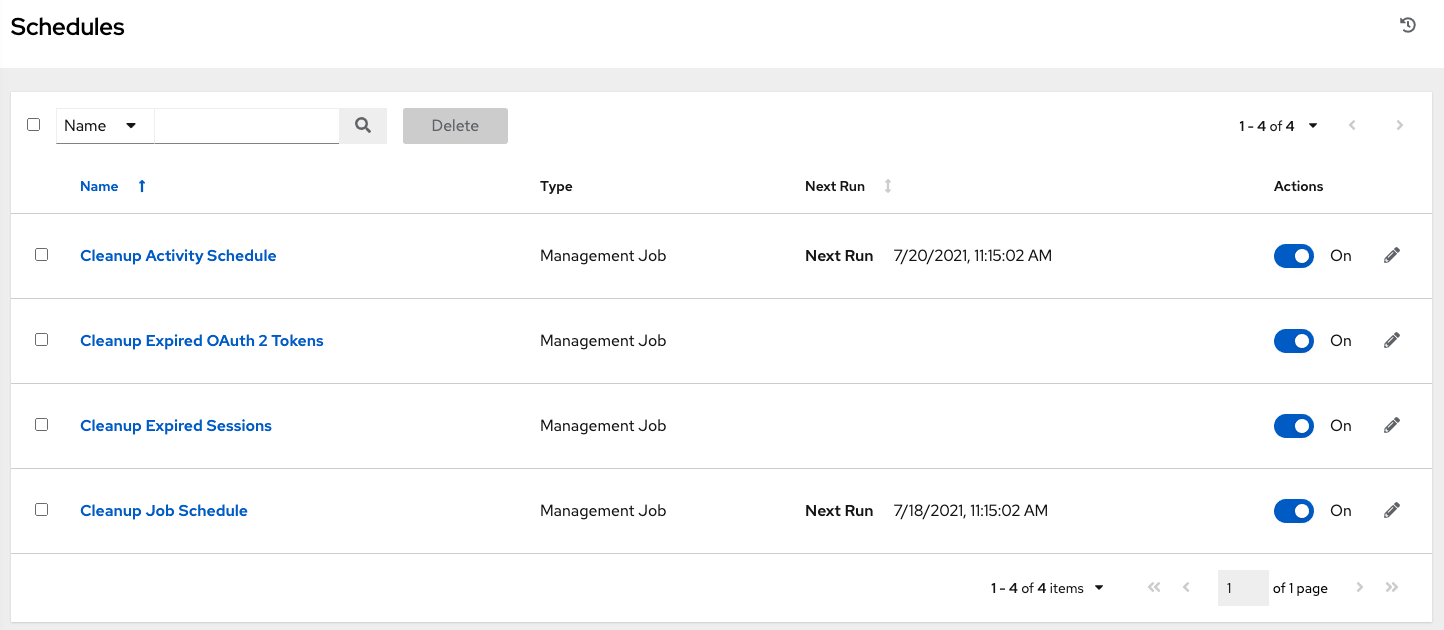

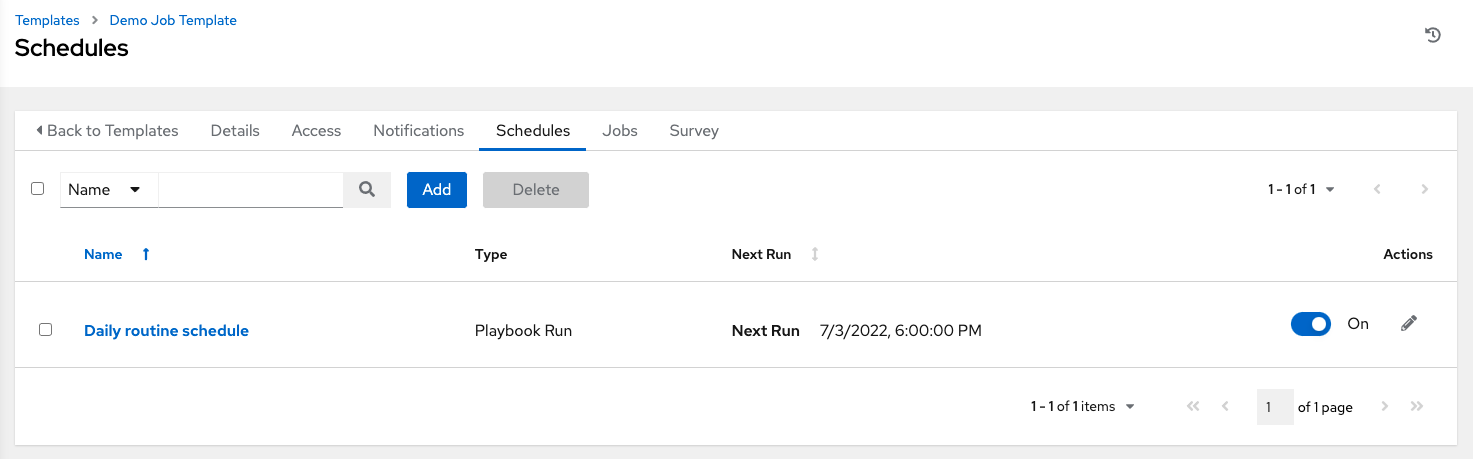

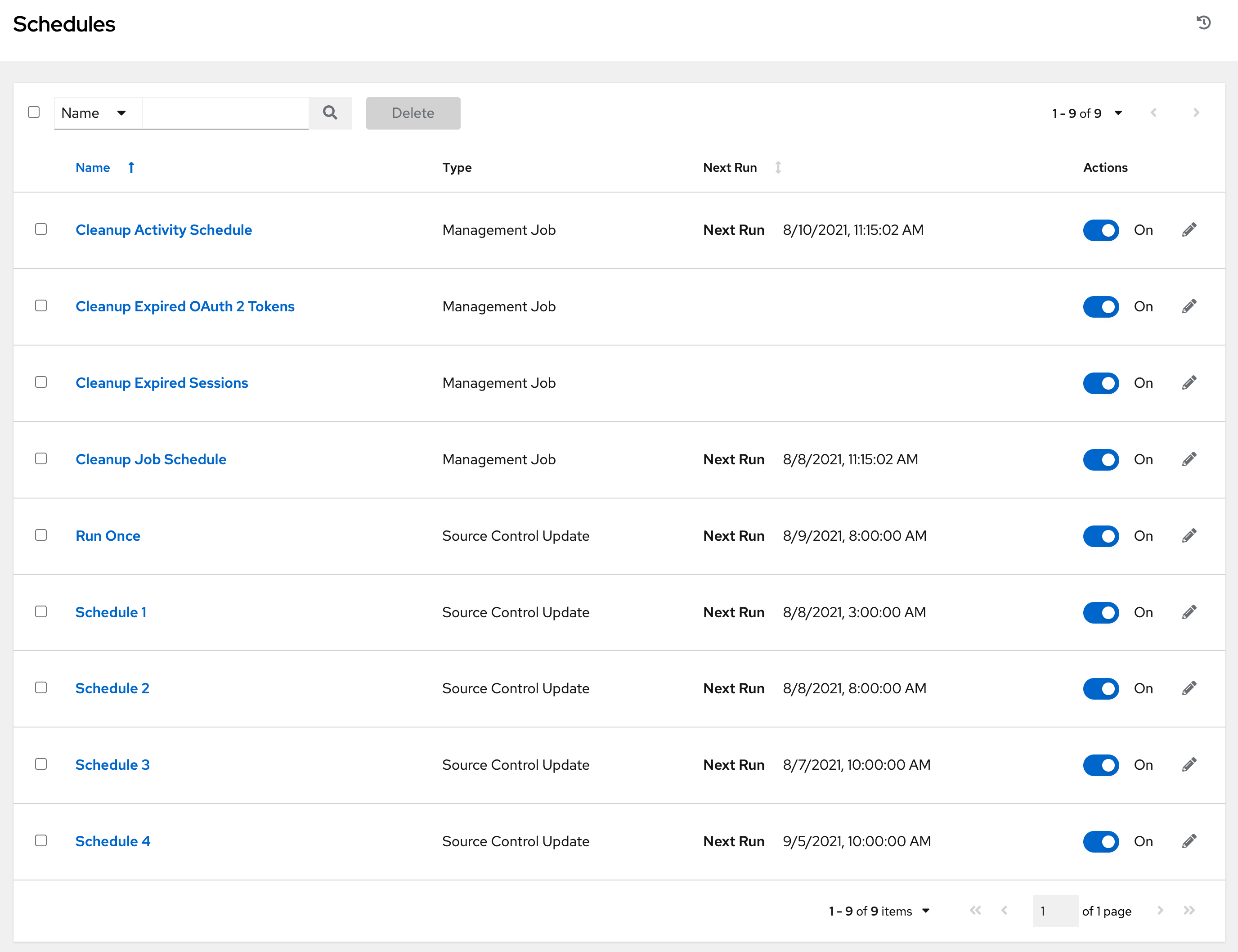

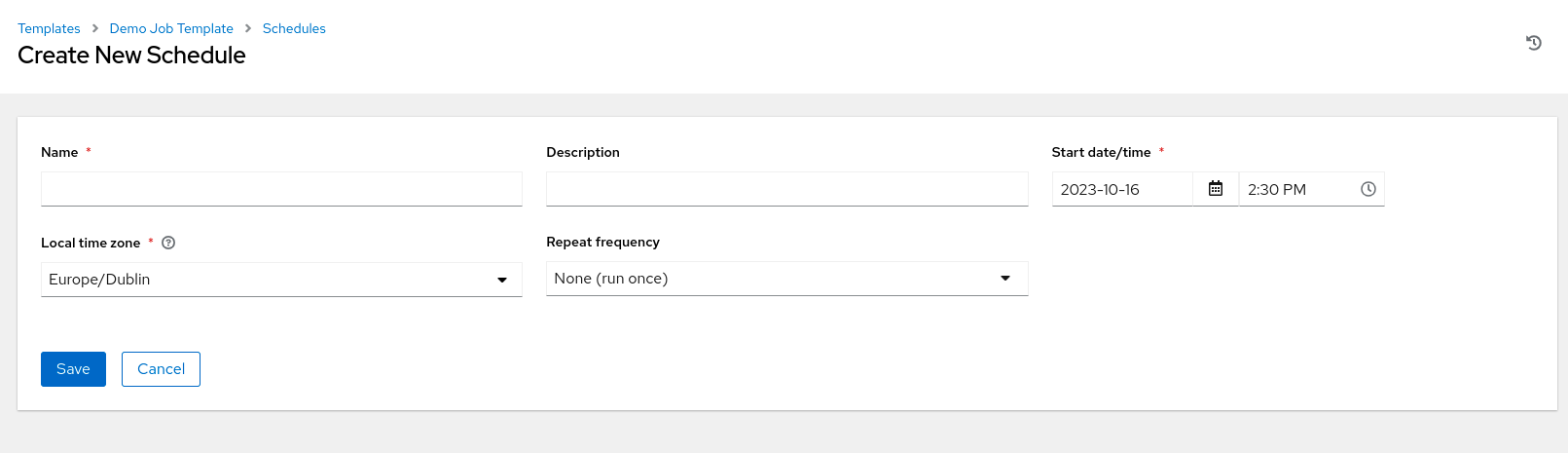

From the navigation panel, select Views → Schedules. This view shows all the scheduled jobs that are configured.

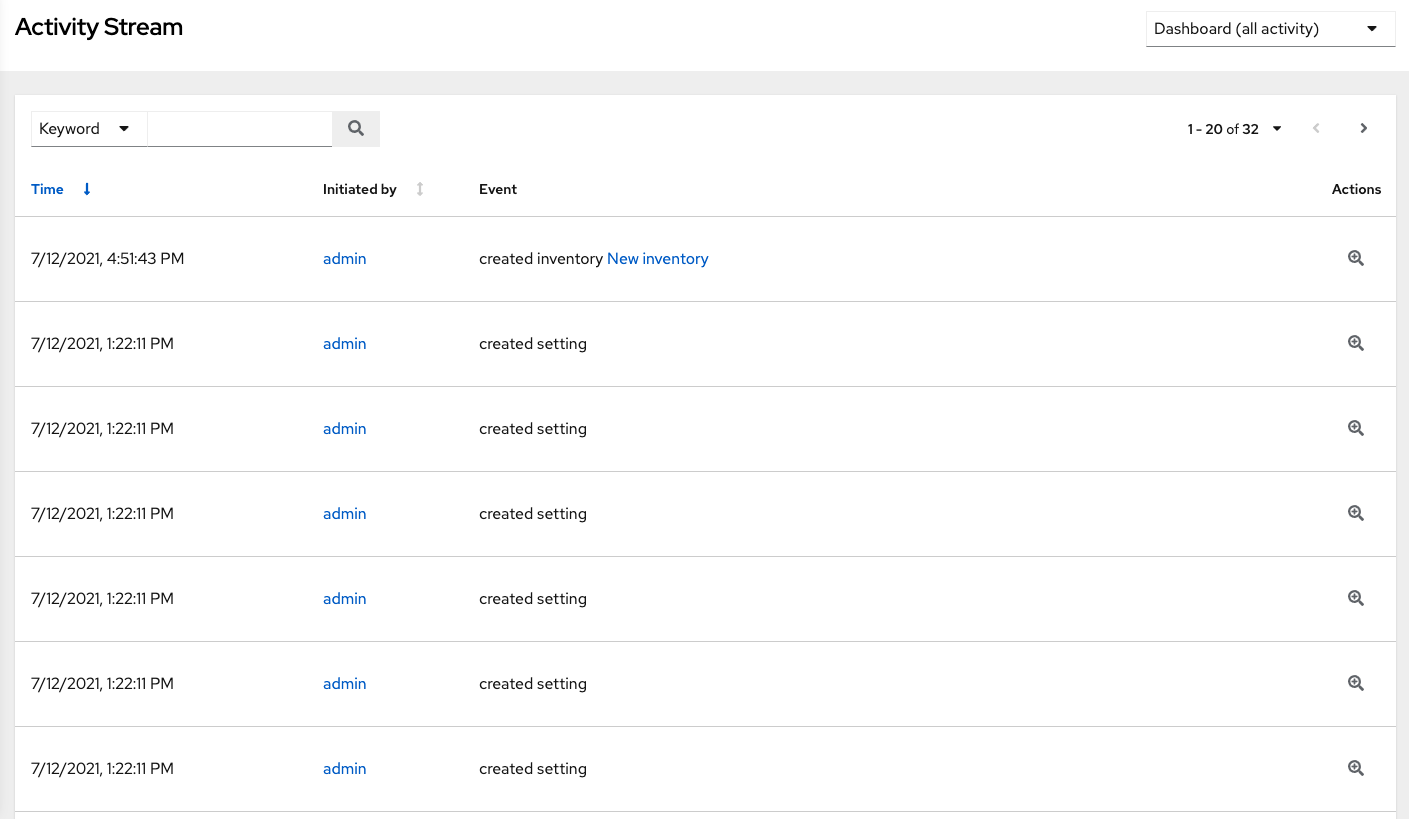

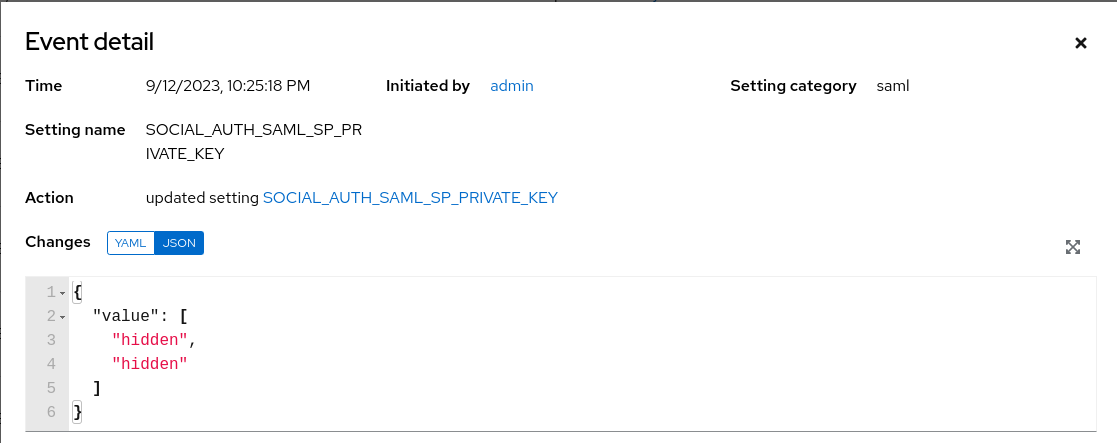

5.1.4. Activity Stream

- From the navigation panel, select Views → Activity Stream to display Activity Streams. Most screens have an Activity Stream icon.

An Activity Stream shows all changes for a particular object. For each change, the Activity Stream shows the time of the event, the user that initiated the event, and the action. The information displayed varies depending on the type of event. Click the Examine icon to display the event log for the change.

You can filter the Activity Stream by the initiating user, by system (if it was system initiated), or by any related object, such as a credential, job template, or schedule.

The Activity Stream on the main Dashboard shows the Activity Stream for the entire instance. Most pages permit viewing an activity stream filtered for that specific object.

5.1.5. Workflow Approvals

- From the navigation panel, select Views → Workflow Approvals to see your workflow approval queue. The list contains actions that require you to approve or deny before a job can proceed.

5.1.6. Host Metrics

- From the navigation panel, select Host Metrics to see the activity associated with hosts, which includes counts of hosts that have been automated, used in inventories, and deleted.

For further information, see Troubleshooting: Keeping your subscription in compliance.

5.2. Resources Menu

The Resources menu provides access to the following components of automation controller:

- Templates

- Credentials

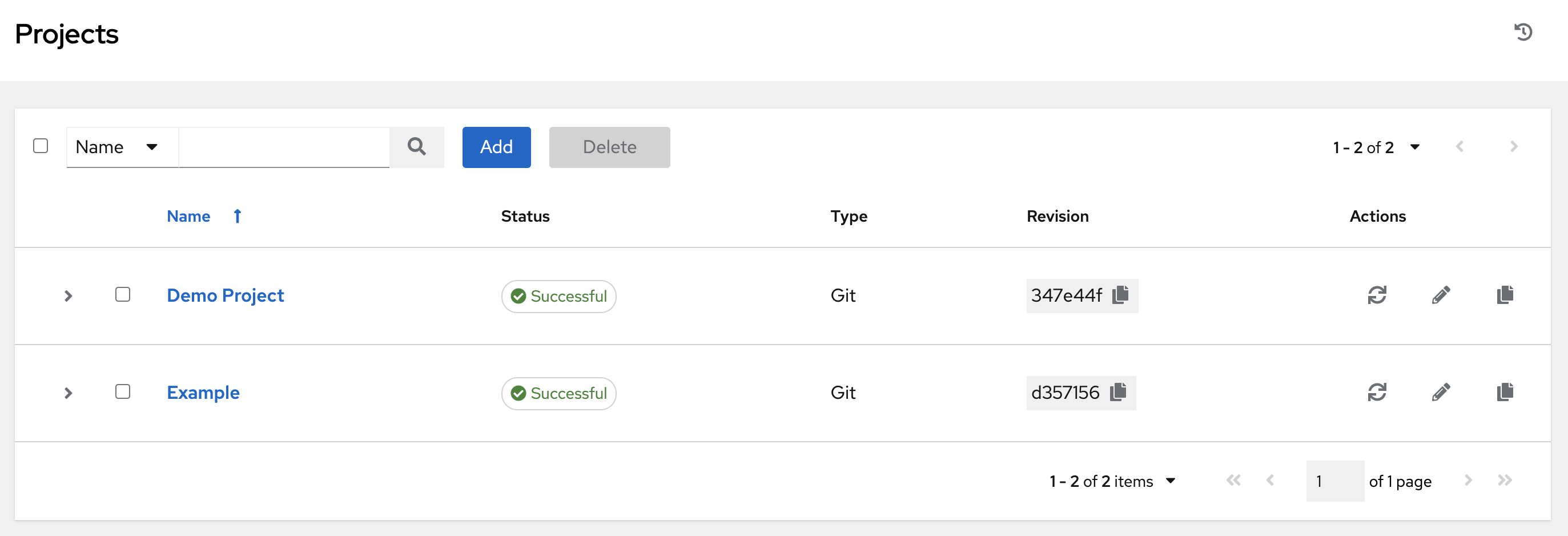

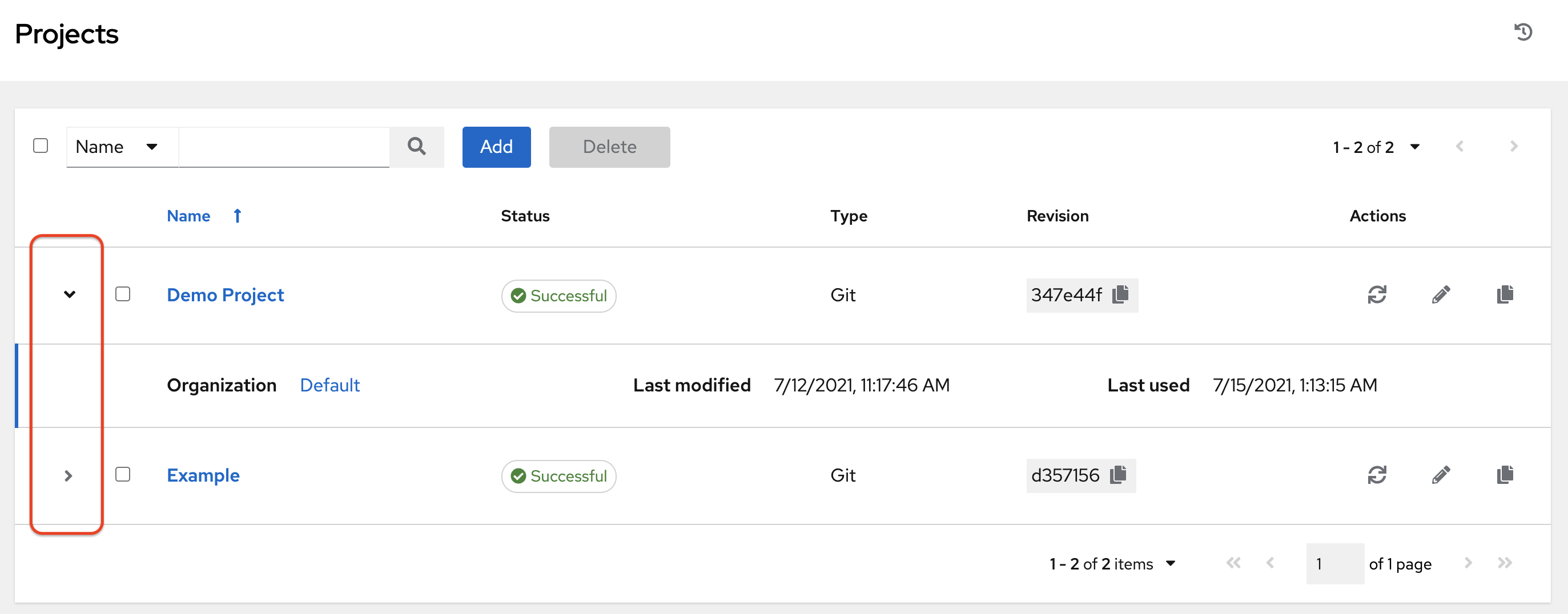

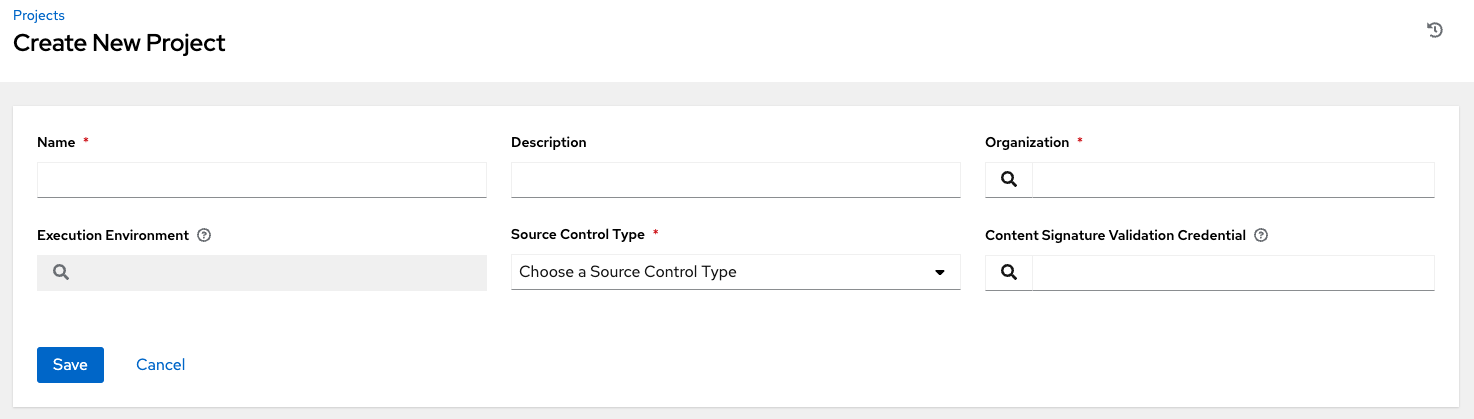

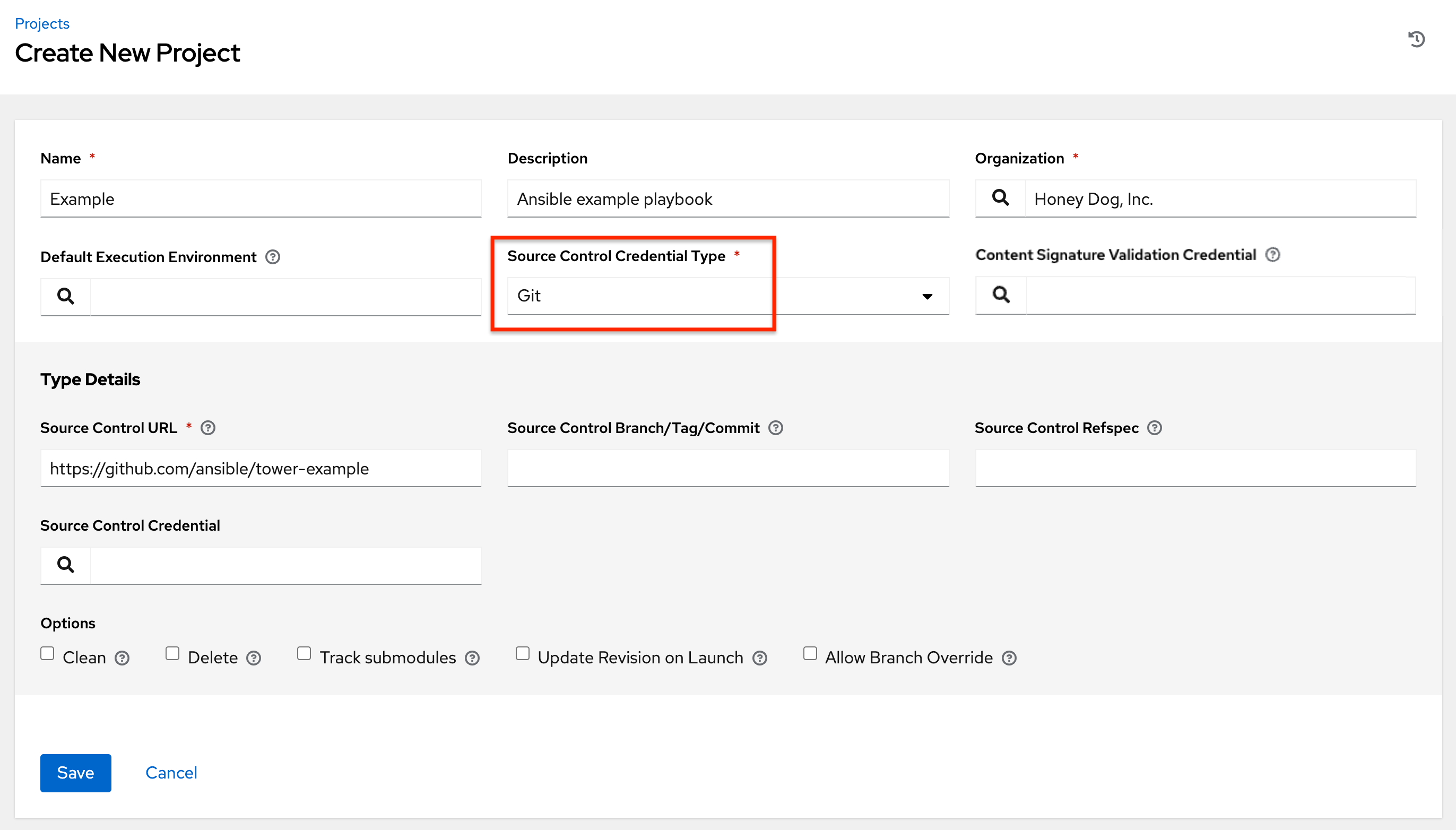

- Projects

- Inventories

- Hosts

5.3. Access Menu

The Access menu enables you to configure who has permissions to automation controller resources:

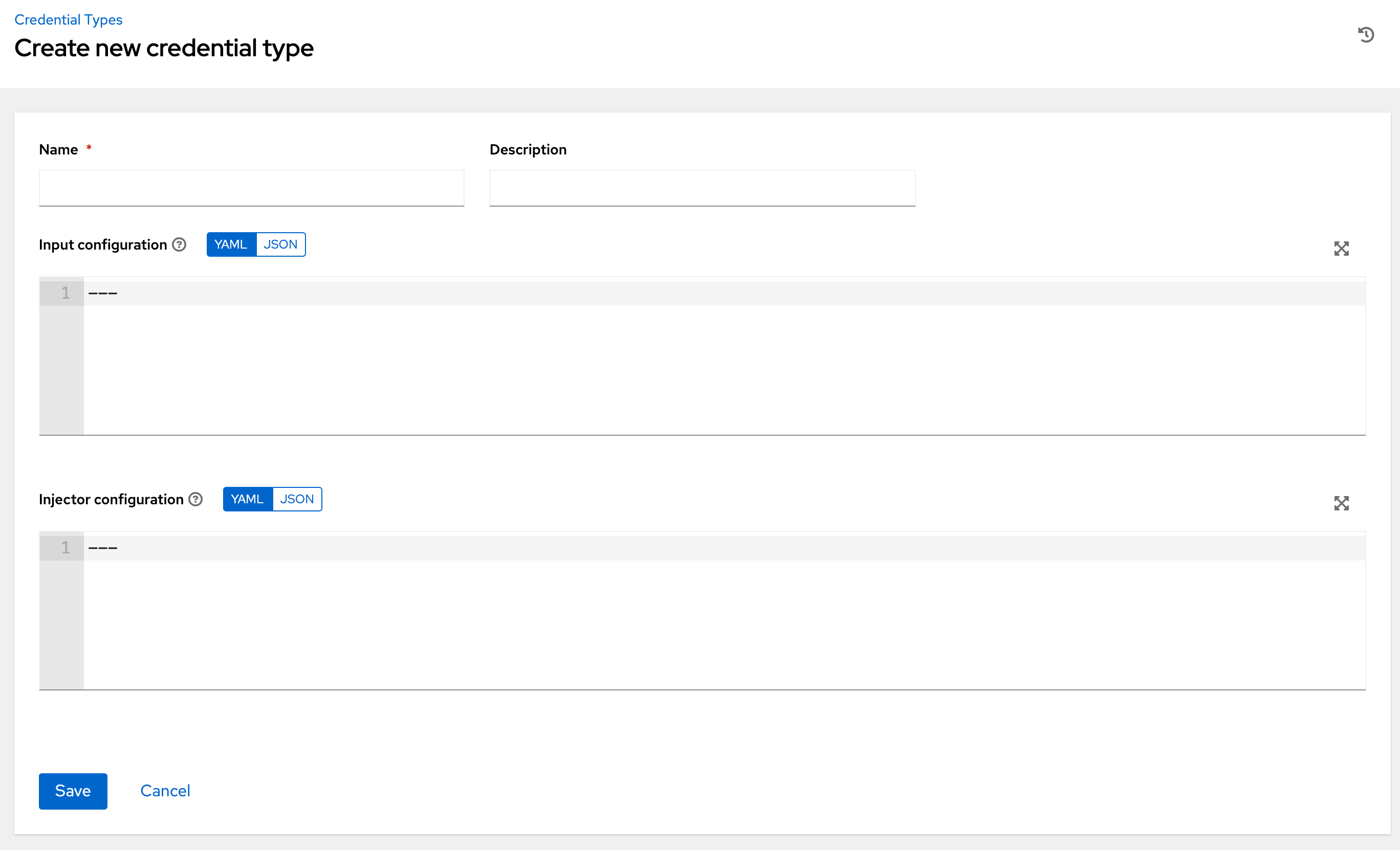

5.4. Administration

The Administration menu provides access to the administrative options of automation controller. From here, you can create, view, and edit:

- Credential types

- Notifications

- Management jobs

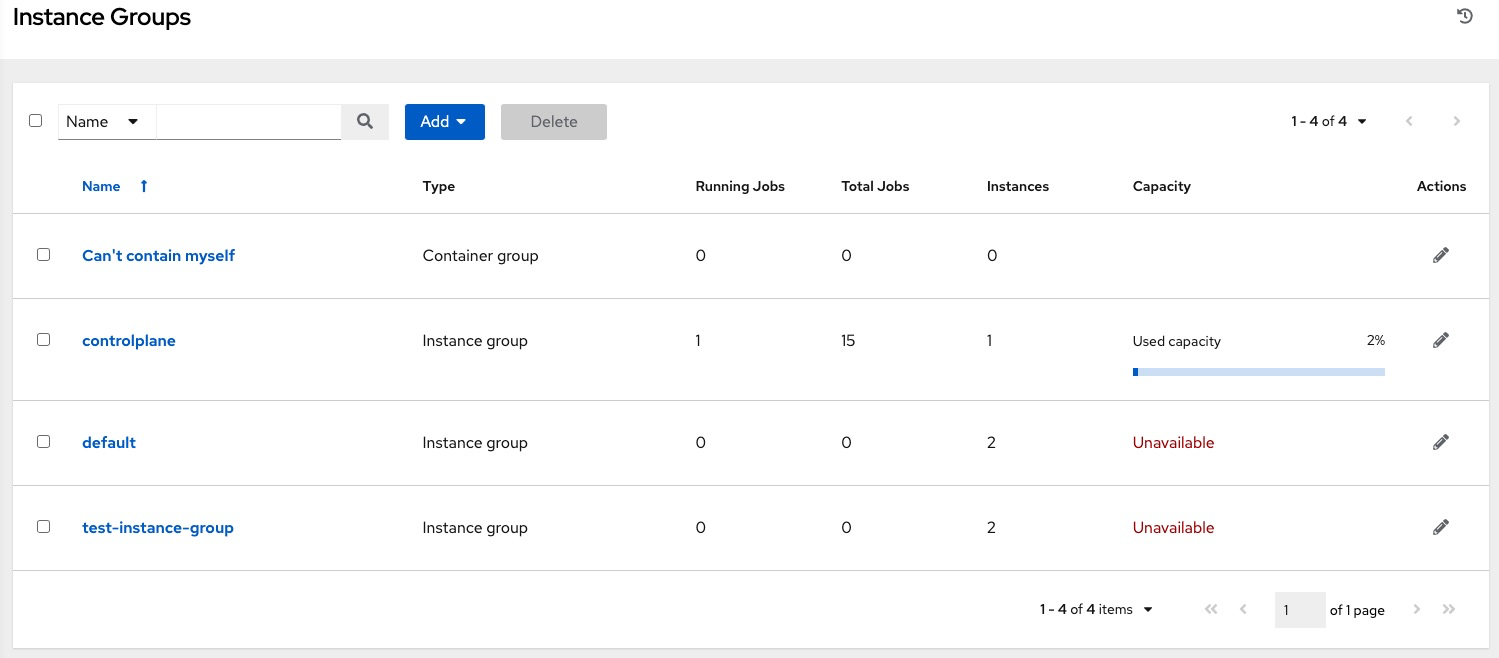

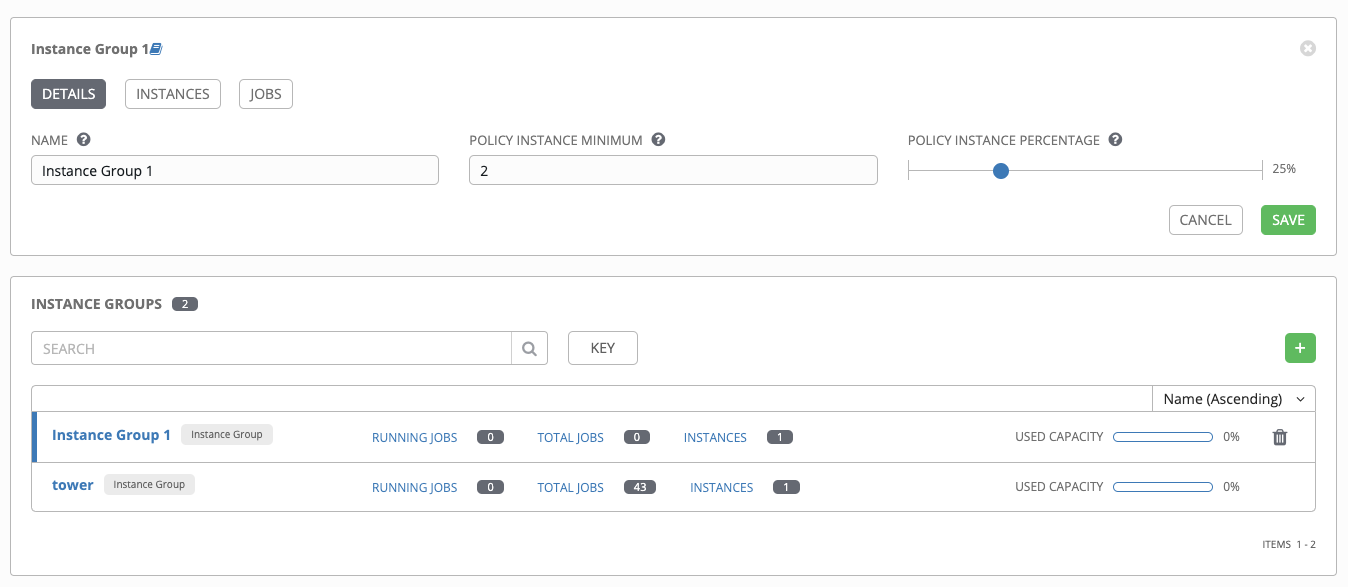

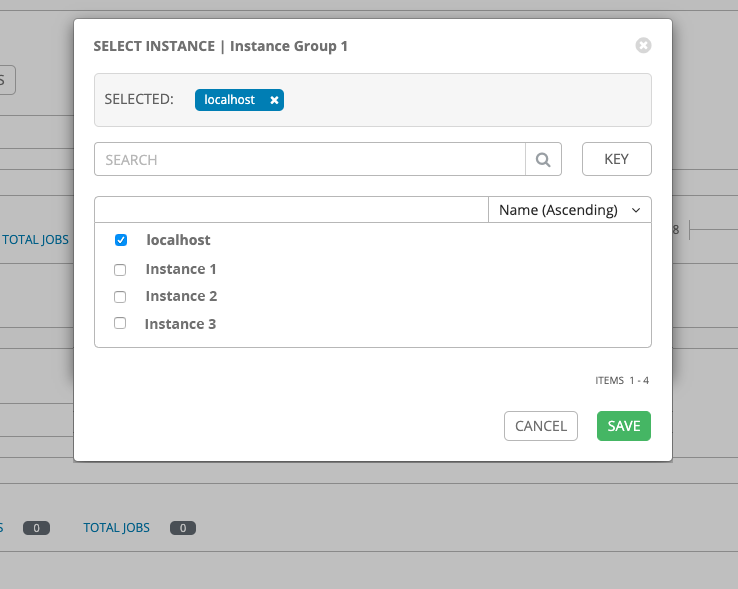

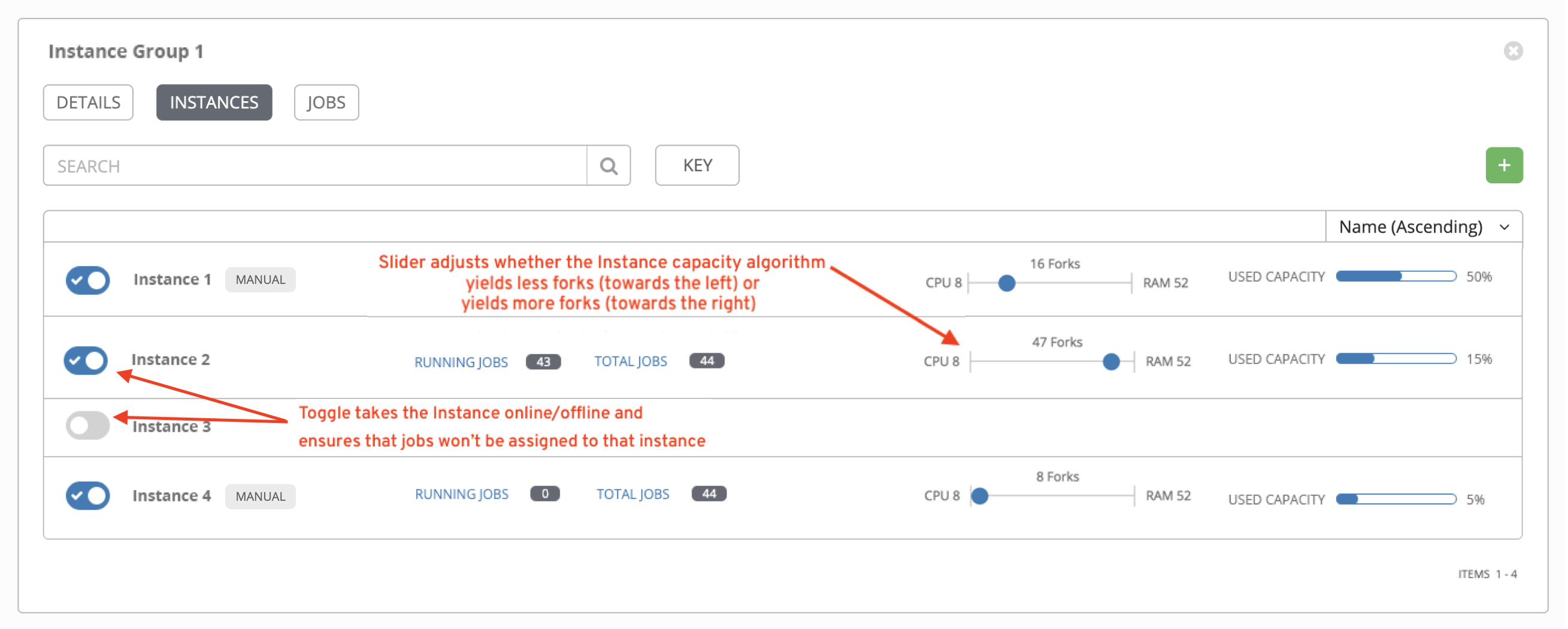

- Instance groups

- Instances

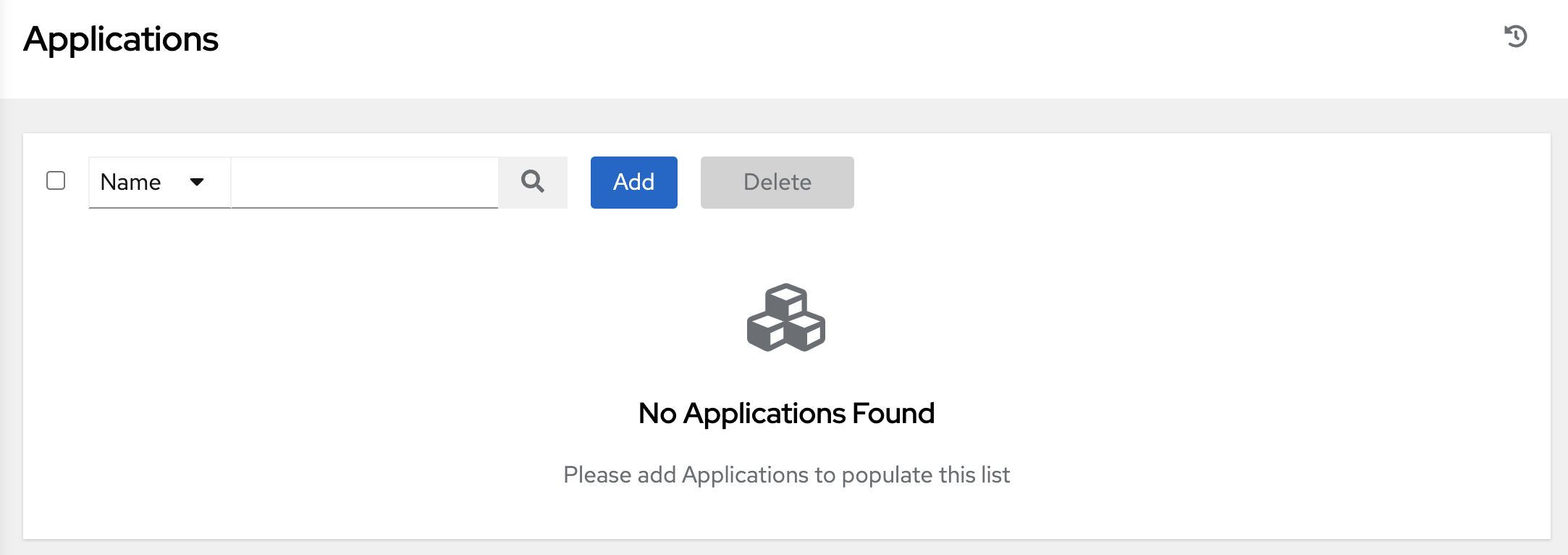

- Applications

- Execution environments

- Topology view

5.5. The Settings menu

Configure global and system-level settings using the Settings menu. The Settings menu provides access to automation controller configuration settings.

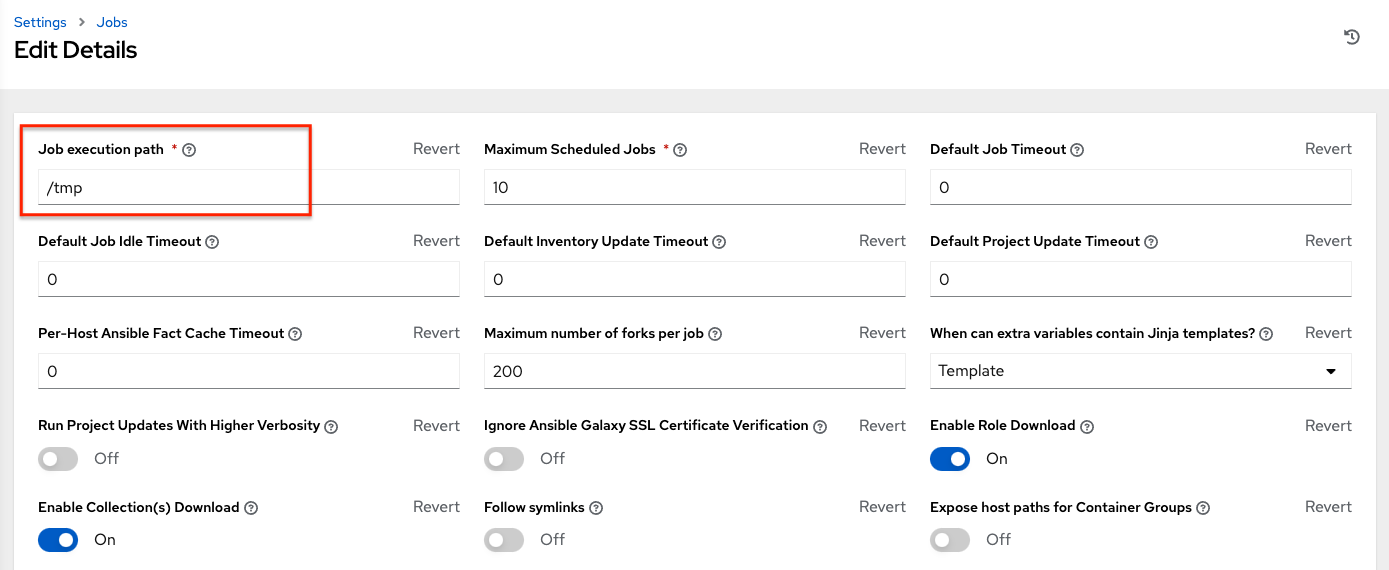

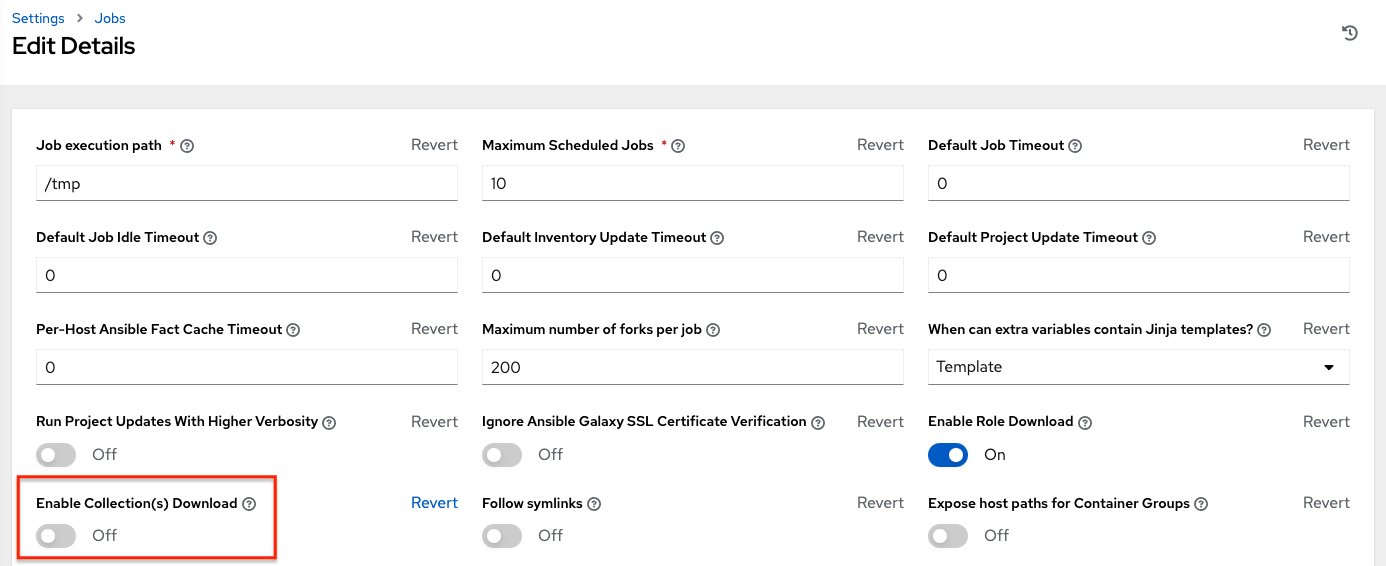

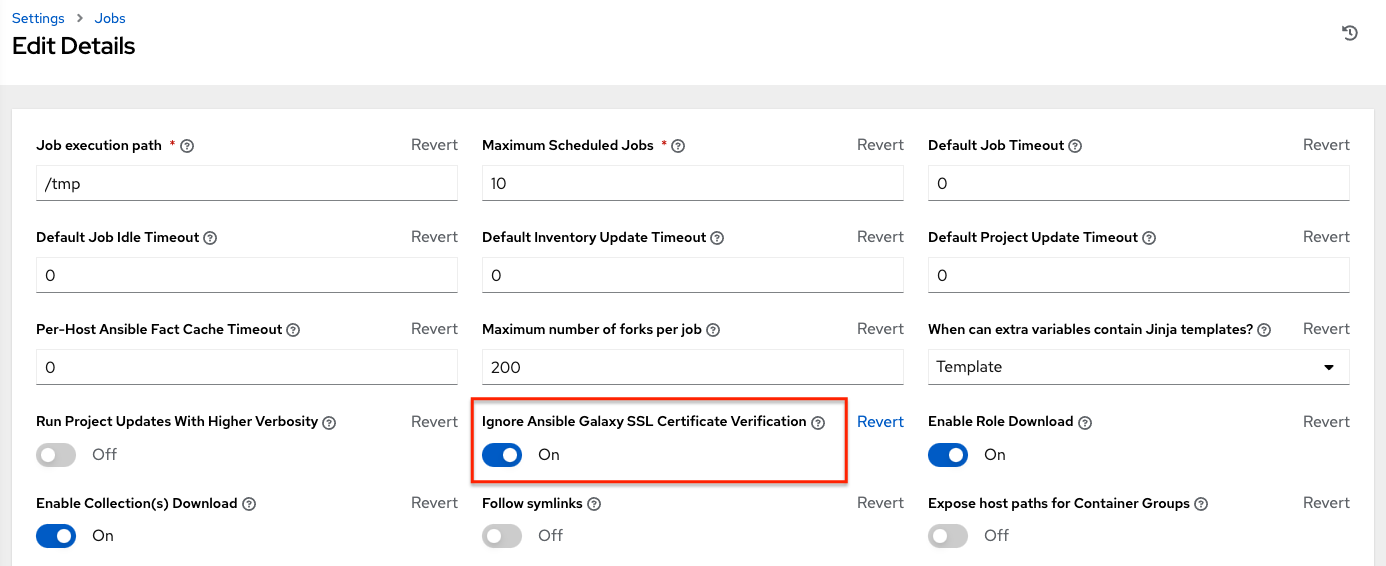

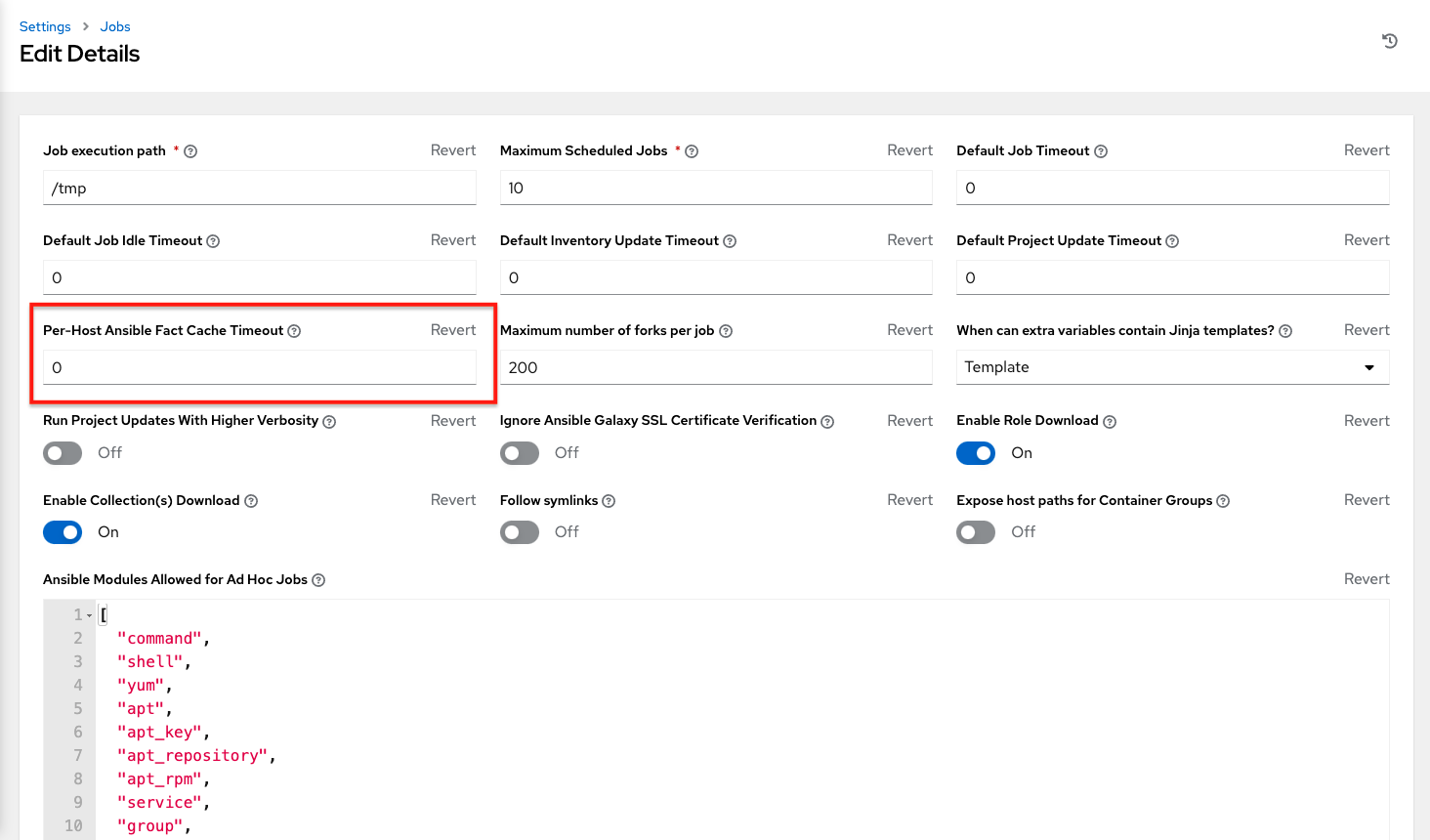

The Settings page enables administrators to configure the following:

- Authentication

- Jobs

- System-level attributes

- Customize the UI

- Product license information

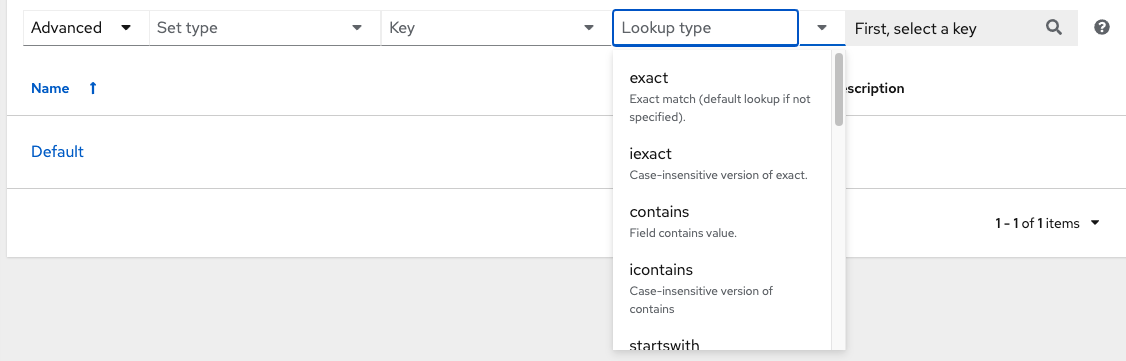

Chapter 6. Search

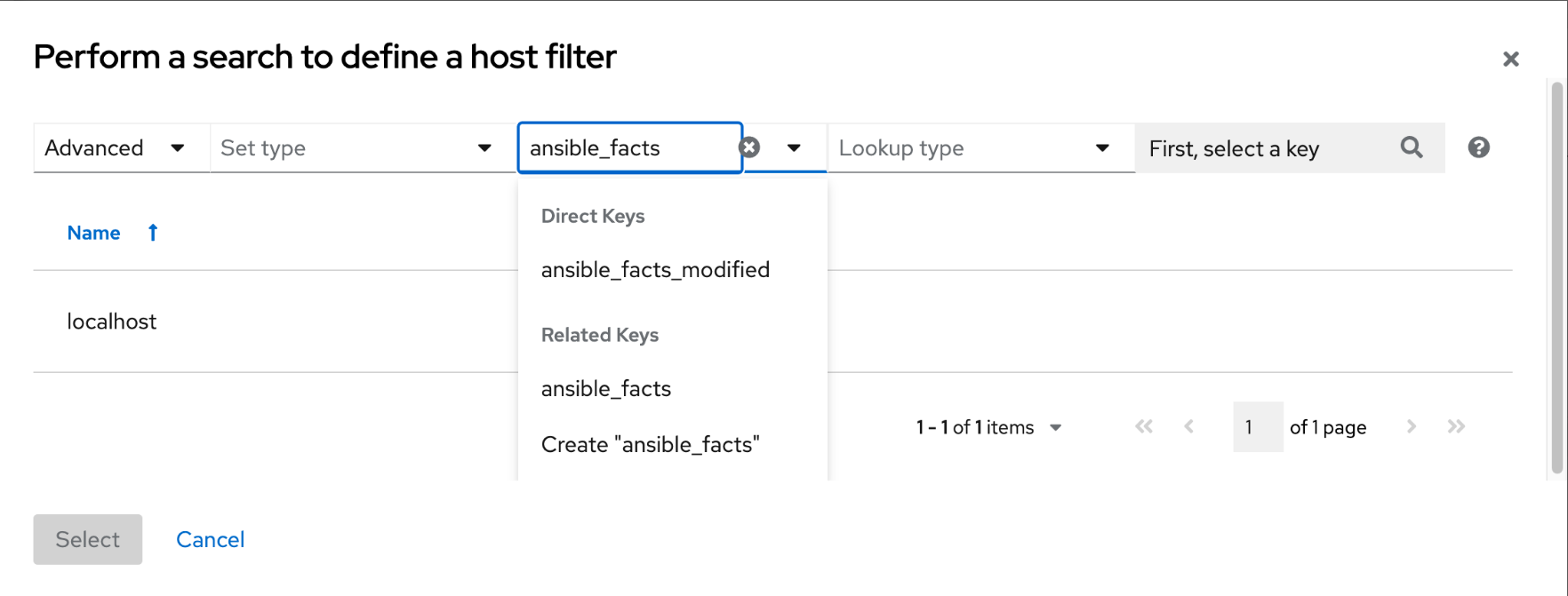

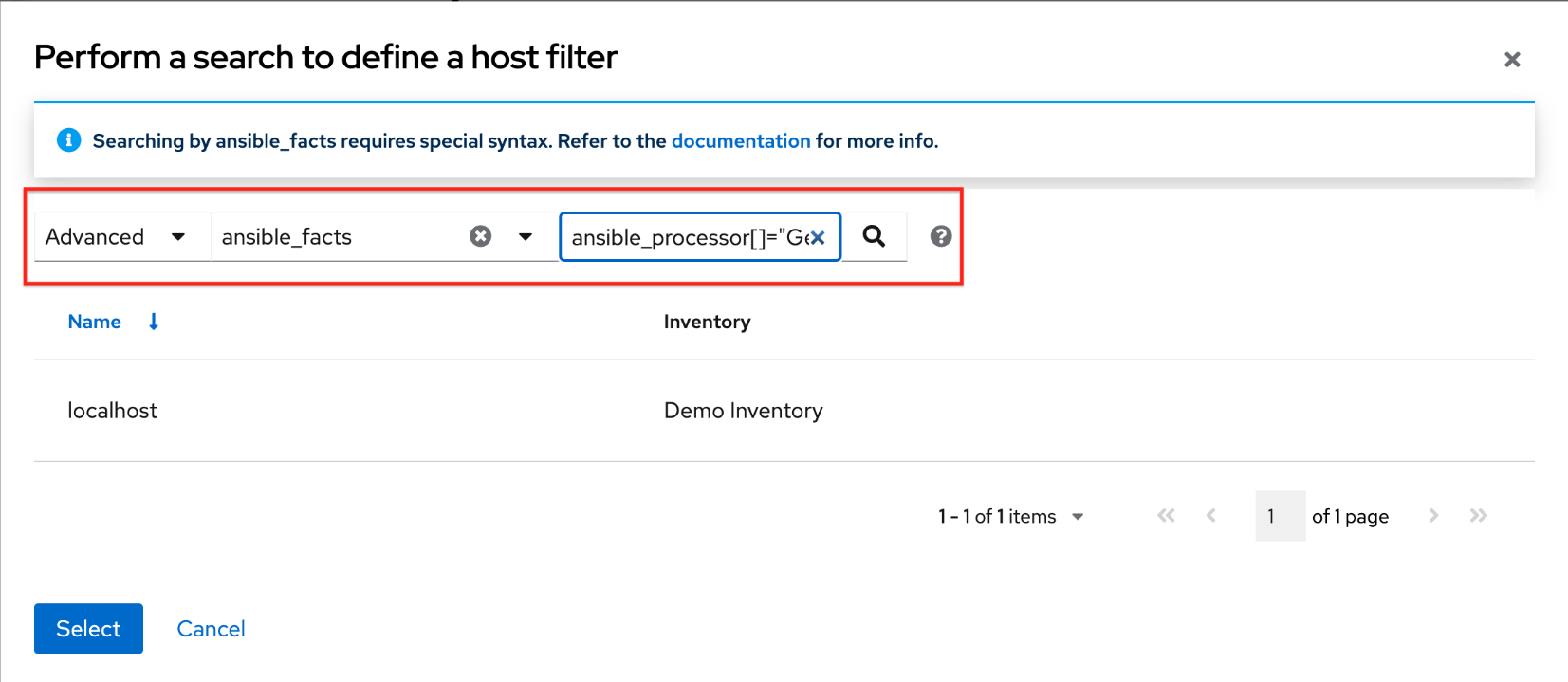

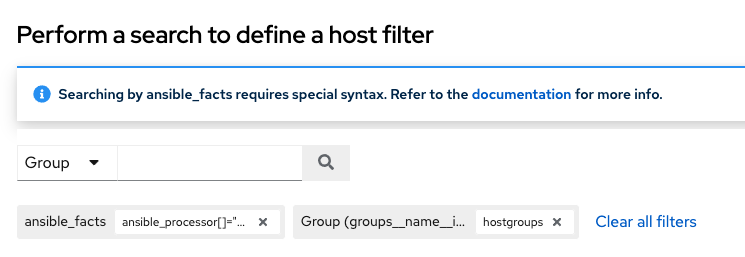

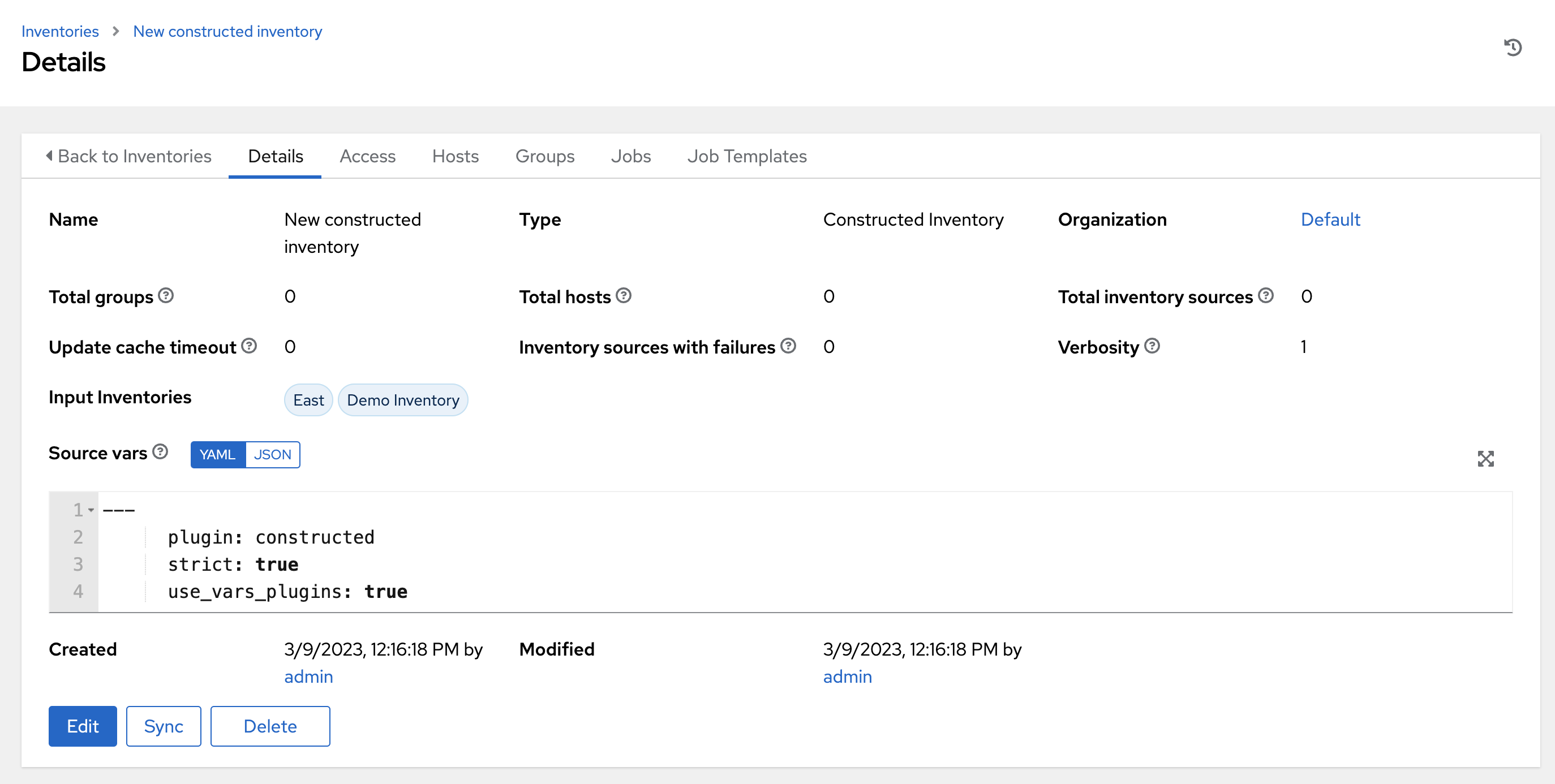

Use automation controller’s search tool for search and filter capabilities across multiple functions. An expandable list of search conditions is available from the Advanced option from the Name menu in the search field.

From there, use the combination of Set Type, Key, and Lookup type to filter.

6.1. Rules for searching

These searching tips assume that you are not searching hosts. Most of this section still applies to hosts but with some subtle differences.

- The typical syntax of a search consists of a field (left-hand side) and a value (right-hand side).

- A colon is used to separate the field that you want to search from the value.

- If the search has no colon (see example 3), it is treated as a simple string search where ?search=foobar is sent.

The following are examples of syntax used for searching:

- name:localhost

  In this example, the user is searching for the string localhost in the name attribute. If that string does not match something from Fields or Related Fields, the entire search is treated as a string.

- organization.name:Default

  This example shows a Related Field search. The period in organization.name separates the model from the field. Depending on how deep or complex the search is, you can have multiple periods in that part of the query.

- foobar

  This is a simple string (key term) search that finds all instances of the search term using an icontains search against the name and description fields. If you use a space between terms, for example foo bar, then results that contain both terms are returned. If the terms are wrapped in quotes, for example "foo bar", automation controller searches for the string with the terms appearing together.

- organization:Default

  This example shows a Related Field search but without specifying a field to go along with the organization. This is supported by the API and is analogous to a simple string search but carried out against the organization (it does an icontains search against both the name and description).

Specific name searches search against the API name. For example, Management job in the user interface is system_job in the API.

6.1.1. Values for search fields

To find values for certain fields, refer to the API endpoint for extensive options and their valid values. For example, if you want to search against the type field of /api/v2/jobs, you can find the values by performing an OPTIONS request to /api/v2/jobs and looking for entries in the API for "type". Additionally, you can view the related searches by scrolling to the bottom of each screen. In the example for /api/v2/jobs, the related search shows:

    "related_search_fields": [
        "modified_by__search",
        "project__search",
        "project_update__search",
        "credentials__search",
        "unified_job_template__search",
        "created_by__search",
        "inventory__search",
        "labels__search",
        "schedule__search",
        "webhook_credential__search",
        "job_template__search",
        "job_events__search",
        "dependent_jobs__search",
        "launch_config__search",
        "unifiedjob_ptr__search",
        "notifications__search",
        "unified_job_node__search",
        "instance_group__search",
        "hosts__search",
        "job_host_summaries__search"
    ]

The values for Fields come from the keys in a GET request. url, related, and summary_fields are not used. The values for Related Fields also come from the OPTIONS response, but from a different attribute. Related Fields is populated by taking all the values from related_search_fields and stripping off the __search from the end.
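As a minimal sketch of the rule above, the following Python function derives the Related Fields list from an OPTIONS response body that has already been parsed into a dictionary. The function name and the trimmed sample payload are illustrative assumptions and are not part of automation controller.

```python
def related_fields(options_body: dict) -> list[str]:
    """Return Related Fields by stripping the trailing "__search" from each entry."""
    suffix = "__search"
    return [
        entry[: -len(suffix)]
        for entry in options_body.get("related_search_fields", [])
        if entry.endswith(suffix)
    ]


# Trimmed sample of the related_search_fields attribute for /api/v2/jobs.
sample = {
    "related_search_fields": [
        "modified_by__search",
        "project__search",
        "job_template__search",
    ]
}

print(related_fields(sample))
```

Each resulting name, such as project or job_template, can then start a Related Field search.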

Any search that does not start with a value from Fields or a value from Related Fields is treated as a generic string search. Searching for localhost, for example, results in the UI sending ?search=localhost as a query parameter to the API endpoint. This is a shortcut for an icontains search on the name and description fields.

6.1.2. Searching using values from related fields

Searching a Related Field requires you to start the search string with the Related Field. The following example describes how to search using values from the Related Field, organization.

The left-hand side of the search string must start with organization, for example, organization:Default. Depending on the related field, you can provide more specific direction for the search by providing secondary and tertiary fields. An example of this is to specify that you want to search for all job templates that use a project matching a certain name. The syntax on this would look like: job_template.project.name:"A Project".

This query executes against the unified_job_templates endpoint which is why it starts with job_template. If you were searching against the job_templates endpoint, then you would not need the job_template portion of the query.

6.1.3. Other search considerations

Be aware of the following issues when searching in automation controller:

- There is currently no supported syntax for OR queries. All search terms are ANDed in the query parameters.

- The left-hand portion of a search parameter can be wrapped in quotes to support searching for strings with spaces. For more information, see Tips for searching.

- Currently, the values in the Fields are direct attributes expected to be returned in a GET request. Whenever you search against one of the values, automation controller carries out an __icontains search. So, for example, name:localhost sends back ?name__icontains=localhost. Automation controller currently performs this search for every Field value, even id.
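The translation from a search term to a query parameter can be sketched in Python as follows. This is an illustration of the rules described in this chapter, not the UI's actual code: the function name and the sample set of Fields are hypothetical, and Related Field searches are deliberately left out.

```python
from urllib.parse import urlencode


def build_query(search: str, fields: set[str]) -> str:
    """Translate one UI search term into an API query string.

    Only direct Fields are handled; Related Fields are out of scope here.
    """
    field, sep, value = search.partition(":")
    if sep and field in fields:
        # Field search: an __icontains lookup is carried out on that field.
        return "?" + urlencode({f"{field}__icontains": value})
    # No colon, or the left-hand side is not a known Field:
    # the whole input is sent as a simple string search.
    return "?" + urlencode({"search": search})


fields = {"id", "name", "description"}  # hypothetical subset of Fields
print(build_query("name:localhost", fields))
print(build_query("foobar", fields))
```

Because all search terms are ANDed, several terms would each contribute their own query parameter.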

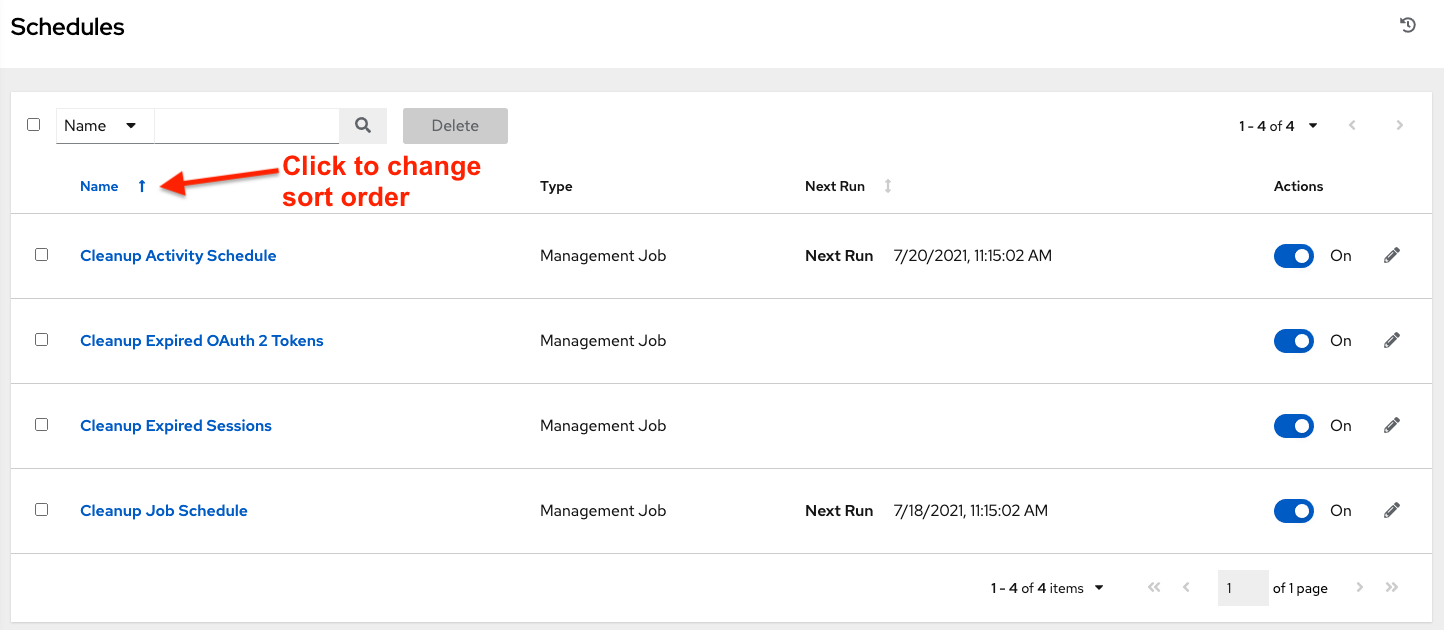

6.2. Sort

Where applicable, use the arrows in each column to sort by ascending order. The following is an example from the schedules list:

The direction of the arrow indicates the sort order of the column.

Chapter 7. Organizations

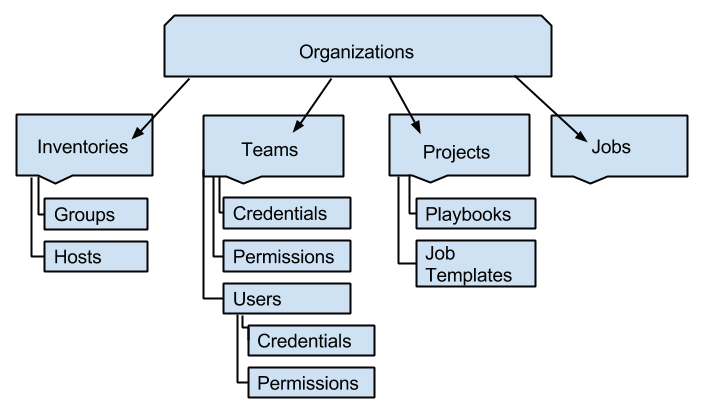

An organization is a logical collection of users, teams, projects, and inventories. It is the highest level object in the controller object hierarchy.

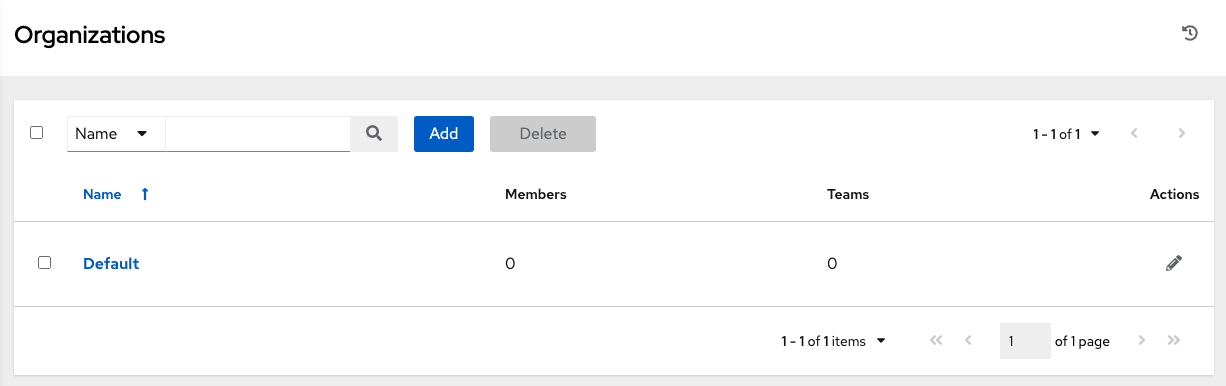

From the navigation menu, select Organizations to display the existing organizations for your installation.

Organizations can be searched by Name or Description.

Modify organizations using the Edit icon. Click Delete to remove a selected organization.

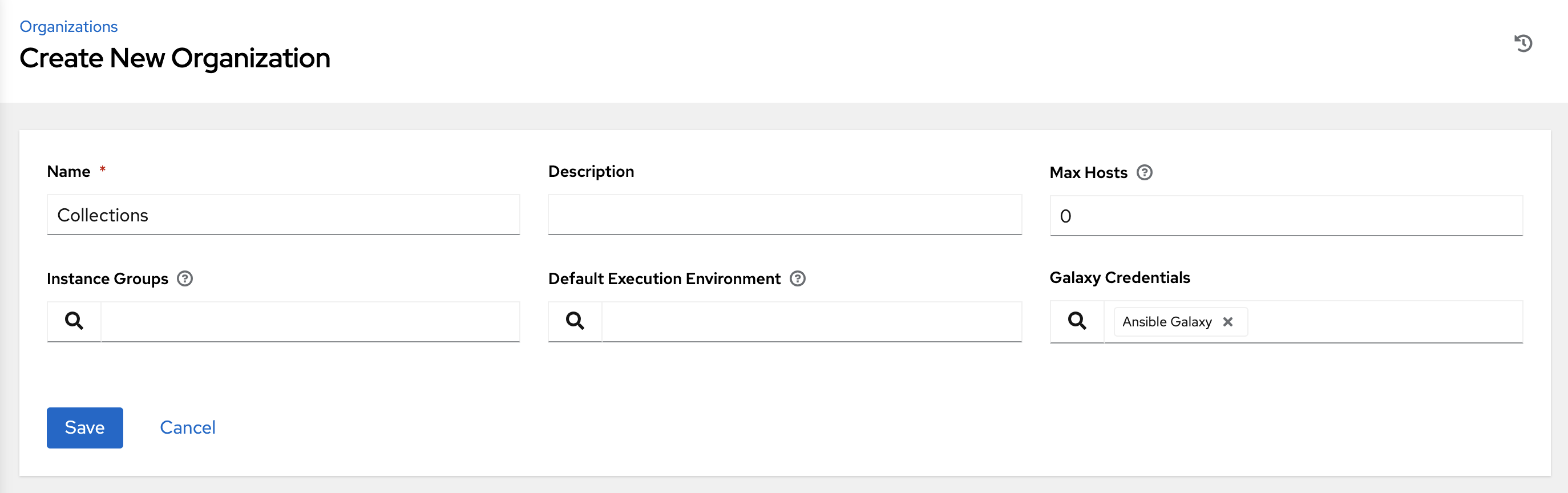

7.1. Creating an organization

Automation controller automatically creates a default organization. If you have a Self-support level license, you have only the default organization available and must not delete it.

You can use the default organization as it is initially set up and edit it later.

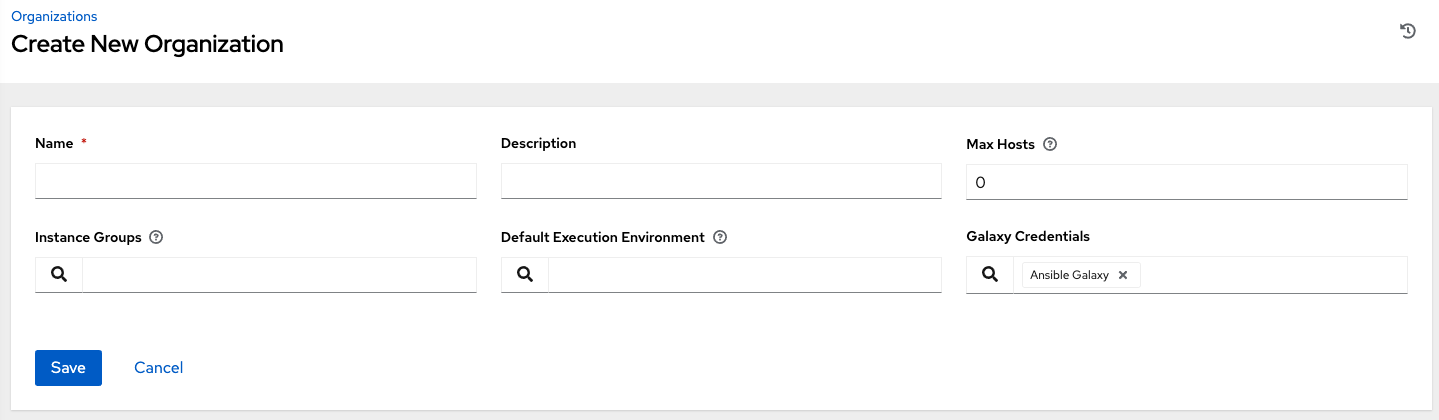

Click Add to create a new organization.

You can configure several attributes of an organization:

- Enter the Name for your organization (required).

- Enter a Description for the organization.

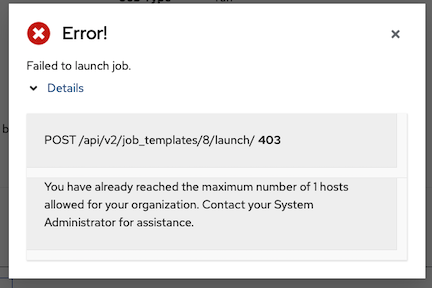

Max Hosts is editable only by a superuser and sets an upper limit on the number of licensed hosts that an organization can have. Setting this value to 0 signifies no limit. If you try to add a host to an organization that has reached or exceeded its cap on hosts, an error message displays:

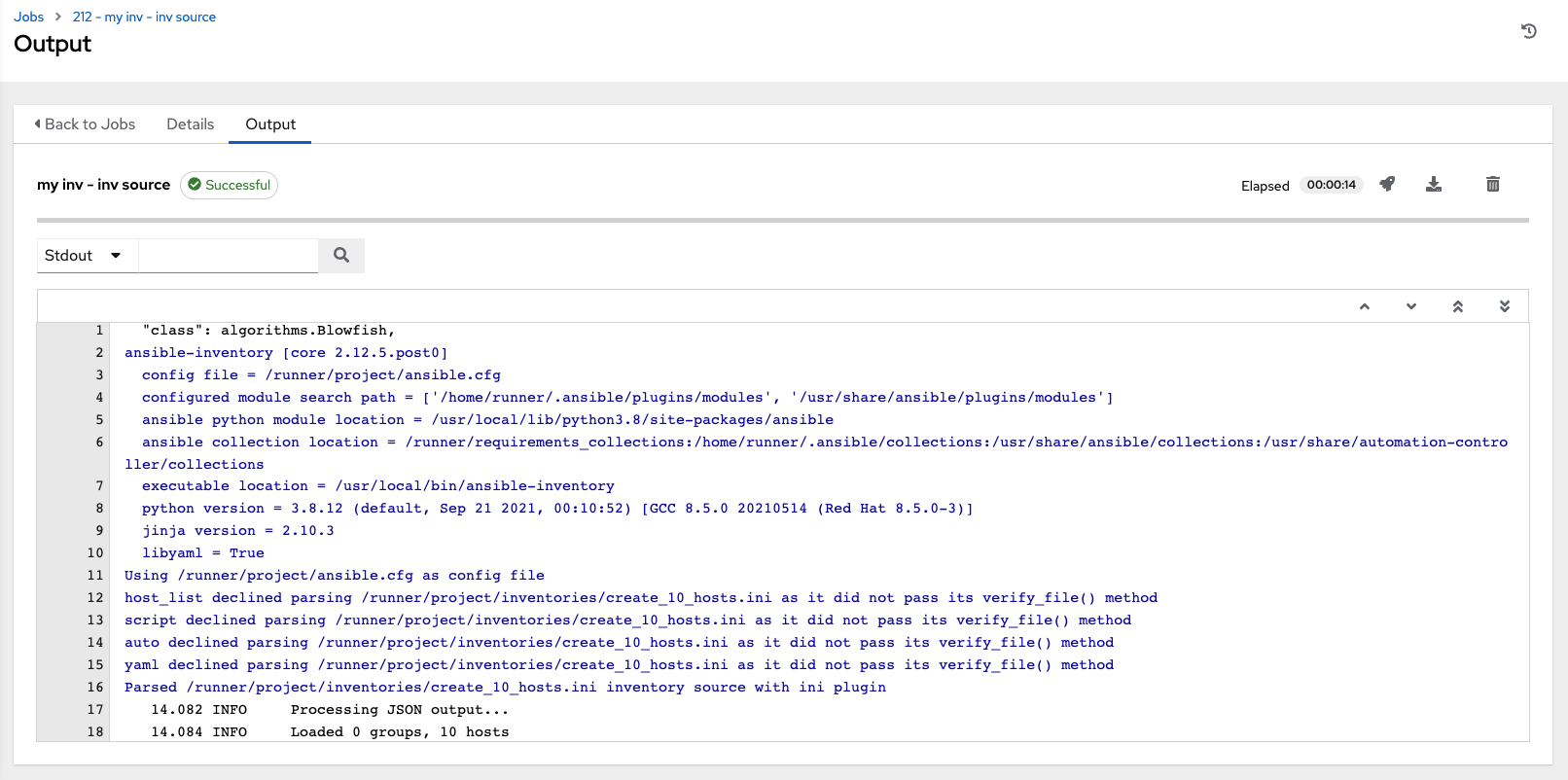

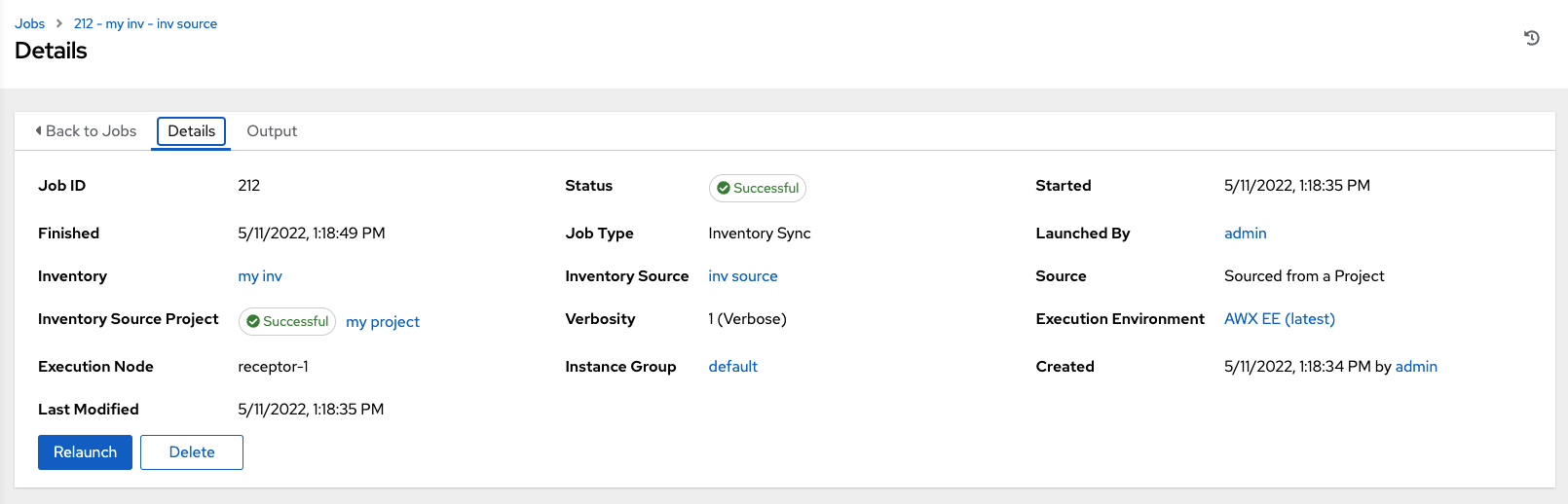

The inventory sync output view also shows the host limit error.

Click Details for additional information about the error.

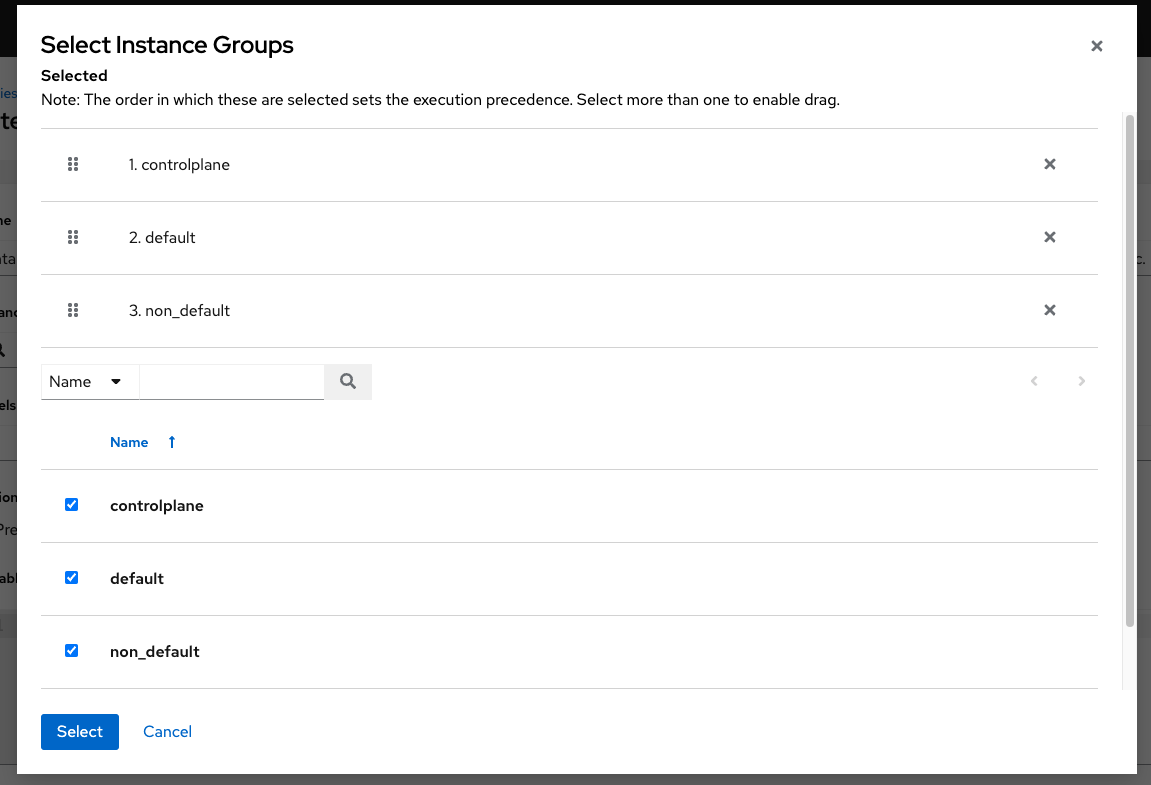

- Enter the name of the Instance Groups on which to run this organization.

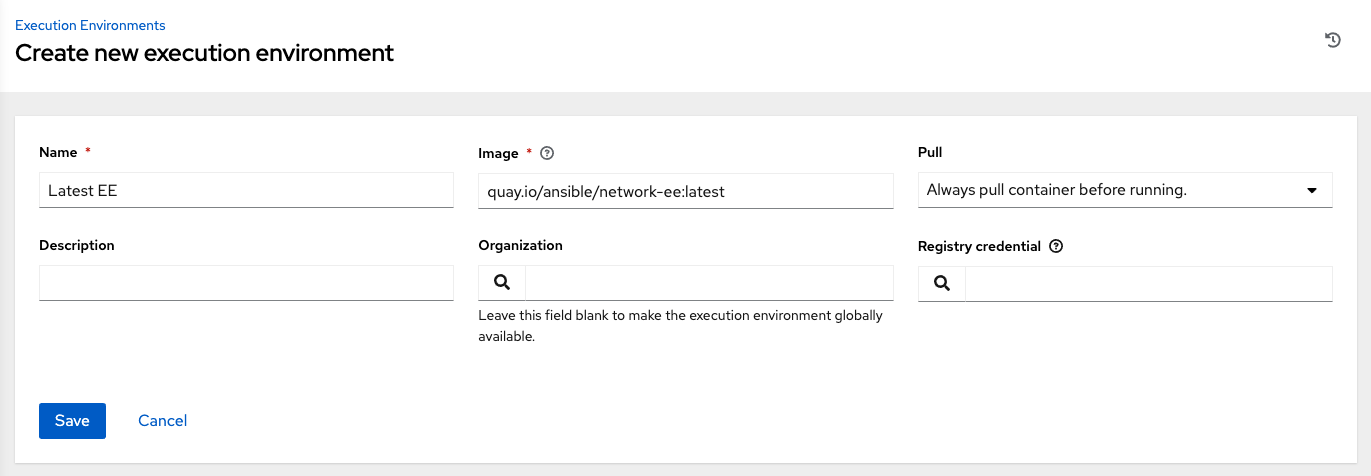

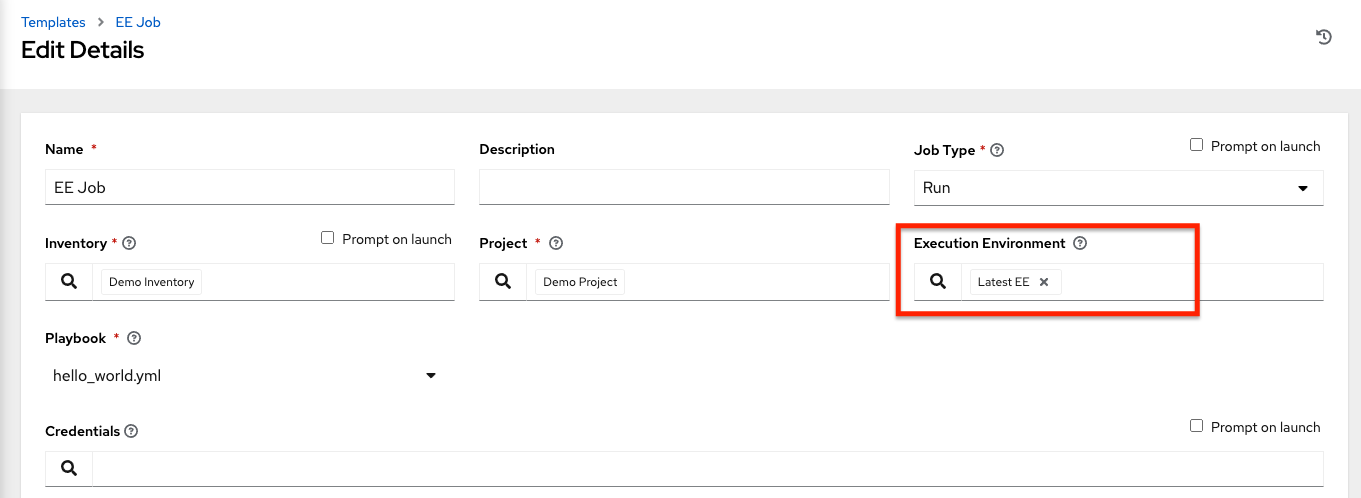

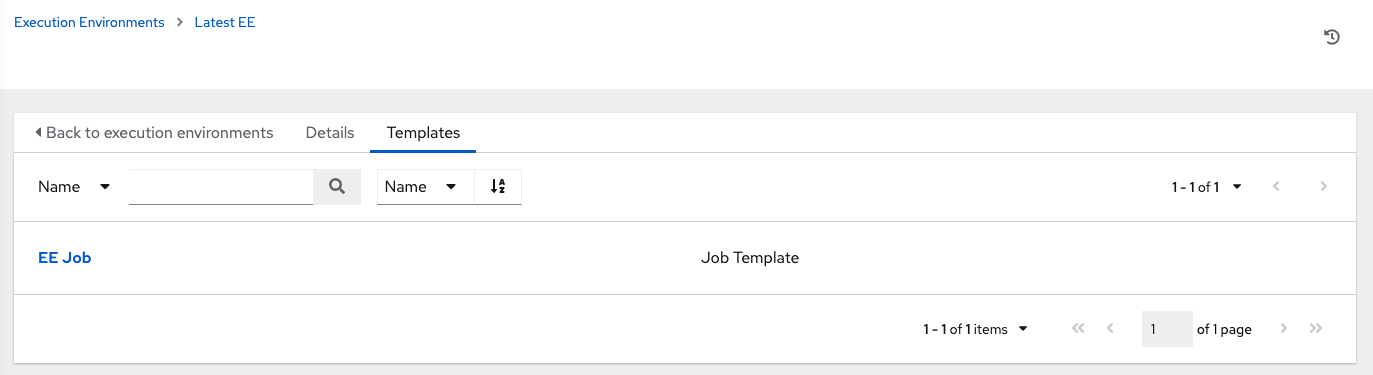

- Enter the name of the execution environment on which to run this organization, or search for an existing one. For more information, see Upgrading to Execution Environments.

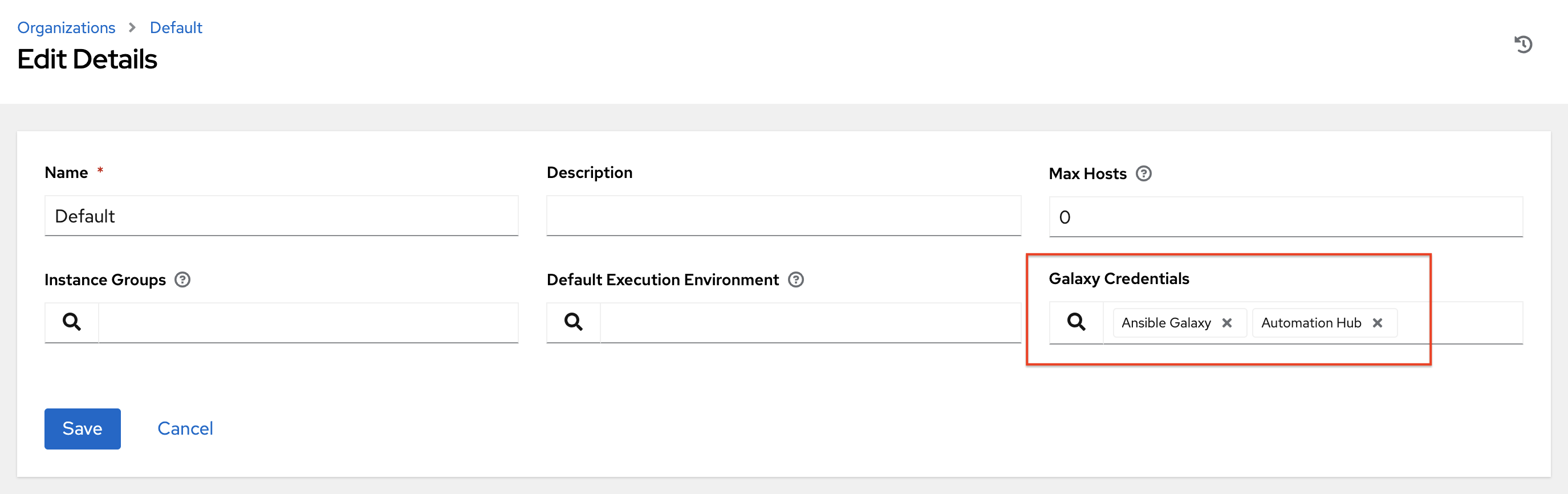

- Optional: Enter the Galaxy Credentials or search from a list of existing ones.

- Click Save to finish creating the organization.

When the organization is created, automation controller displays the Organization details, and enables you to manage access and execution environments for the organization.
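The Max Hosts rule described earlier in this procedure can be expressed as a short check. This is a sketch of the documented behavior only; the function name and arguments are hypothetical and do not correspond to an automation controller API.

```python
def can_add_host(max_hosts: int, current_hosts: int) -> bool:
    """Return True if one more host fits under the organization's Max Hosts cap."""
    if max_hosts == 0:
        # A value of 0 signifies no limit.
        return True
    # Adding fails once the organization has reached or exceeded its cap.
    return current_hosts < max_hosts


print(can_add_host(0, 500))
print(can_add_host(10, 9))
print(can_add_host(10, 10))
```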

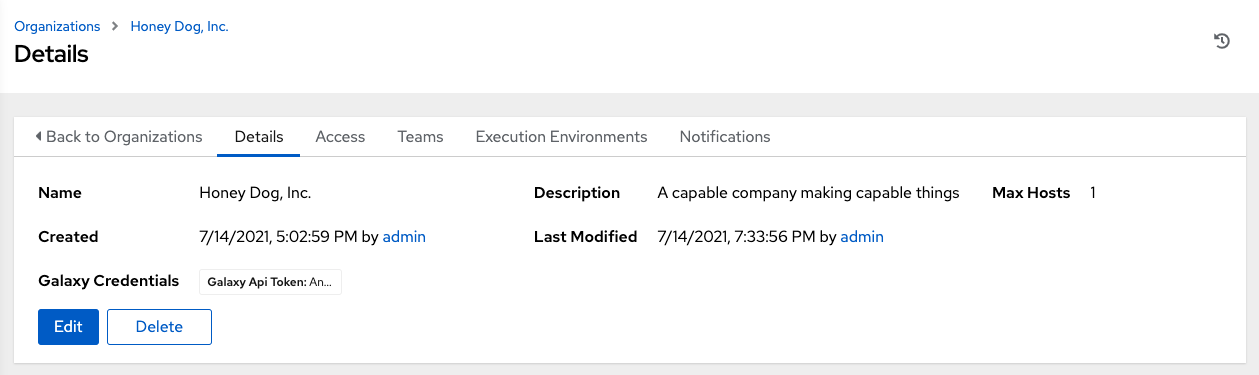

From the Details tab, you can edit or delete the organization.

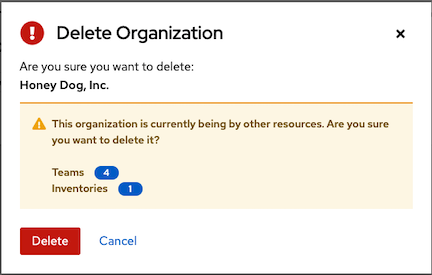

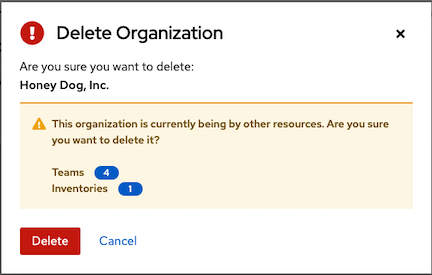

If you attempt to delete items that are used by other work items, a message lists the items that are affected by the deletion and prompts you to confirm the deletion. Some screens contain items that are invalid or have been deleted previously, and will fail to run.

The following is an example of such a message:

7.2. Access to organizations

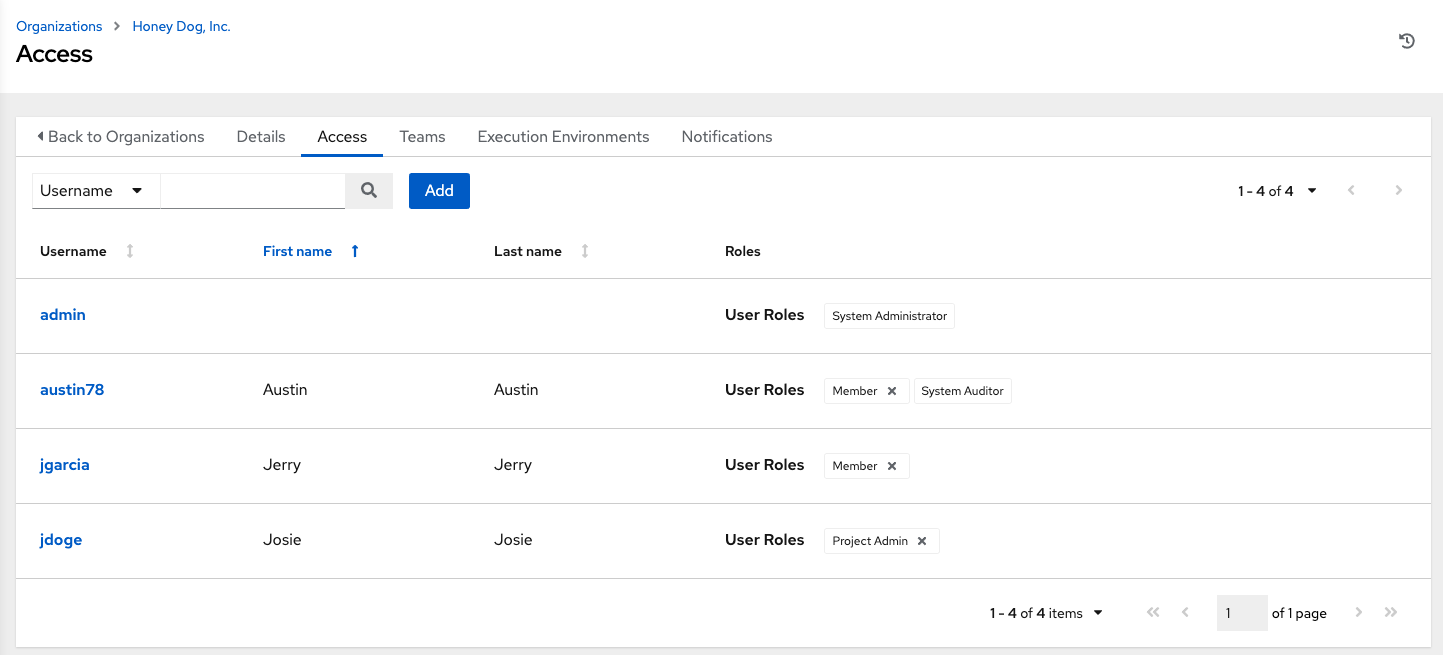

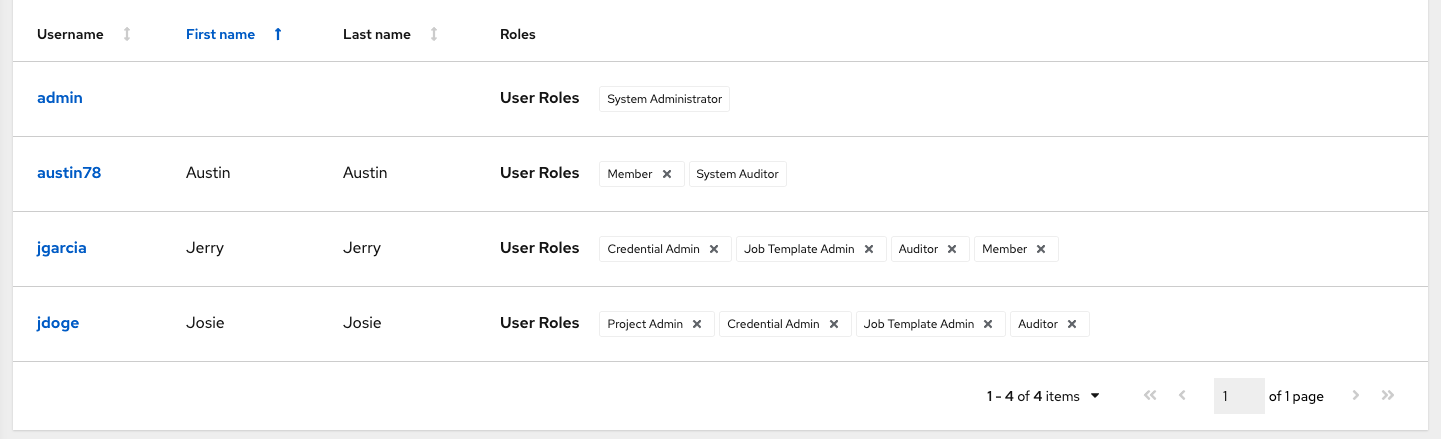

- Select Access when viewing your organization to display the users associated with this organization, and their roles.

Use this page to complete the following tasks:

- Manage the user membership for this organization. Click Users on the navigation panel to manage user membership on a per-user basis from the Users page.

- Assign specific users certain levels of permissions within your organization.

- Enable them to act as an administrator for a particular resource. For more information, see Role-Based Access Controls.

Click a user to display that user’s details. You can review, grant, edit, and remove associated permissions for that user. For more information, see Users.

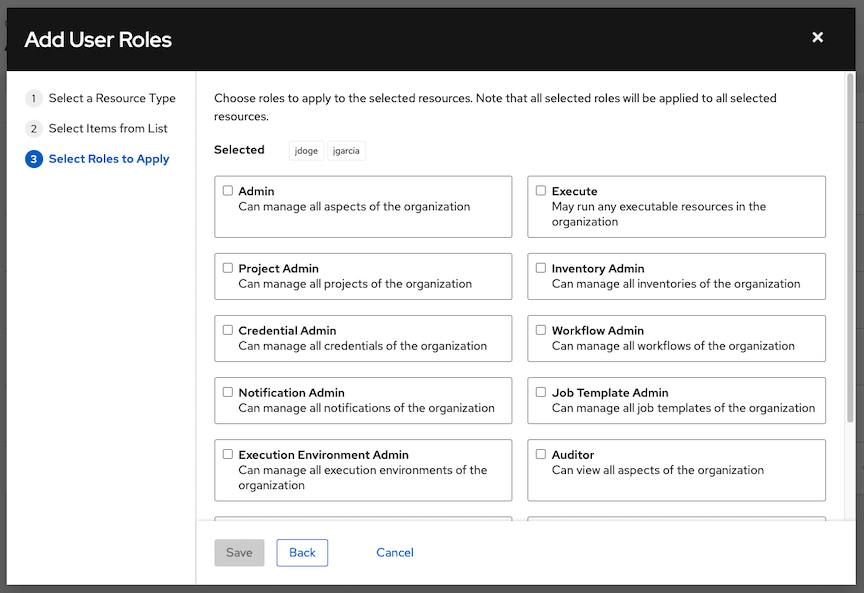

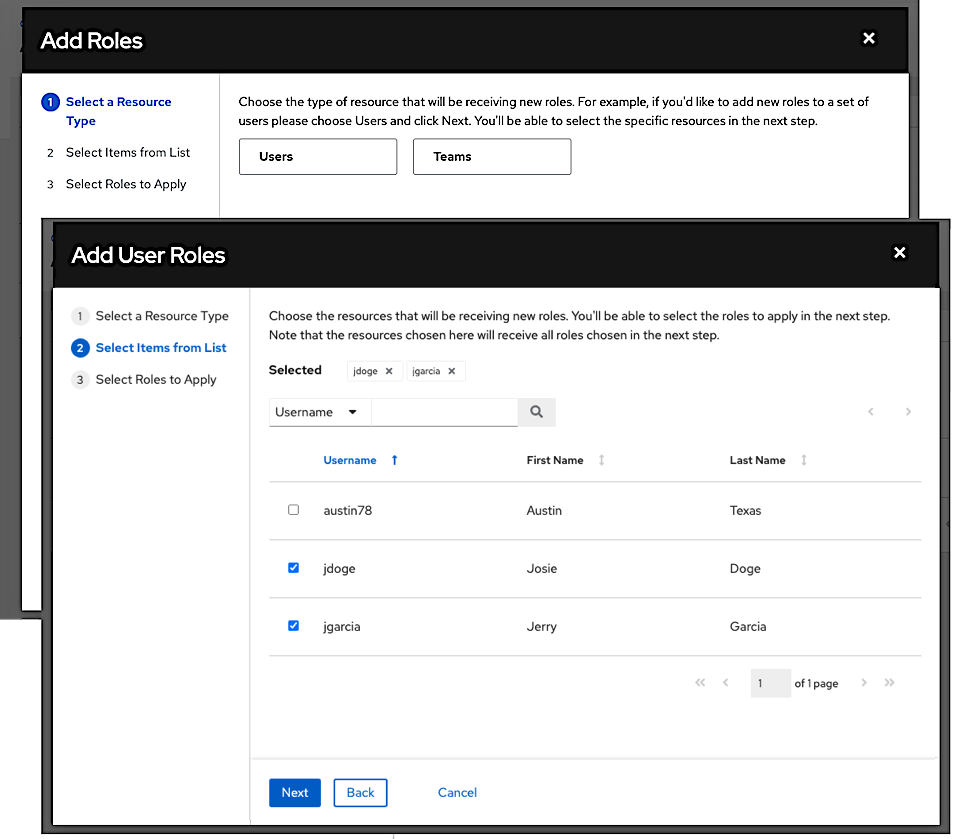

7.2.1. Add a User or Team

To add a user or team to an organization, the user or team must already exist.

For more information, see Creating a User and Creating a Team.

To add existing users or teams to the organization:

Procedure

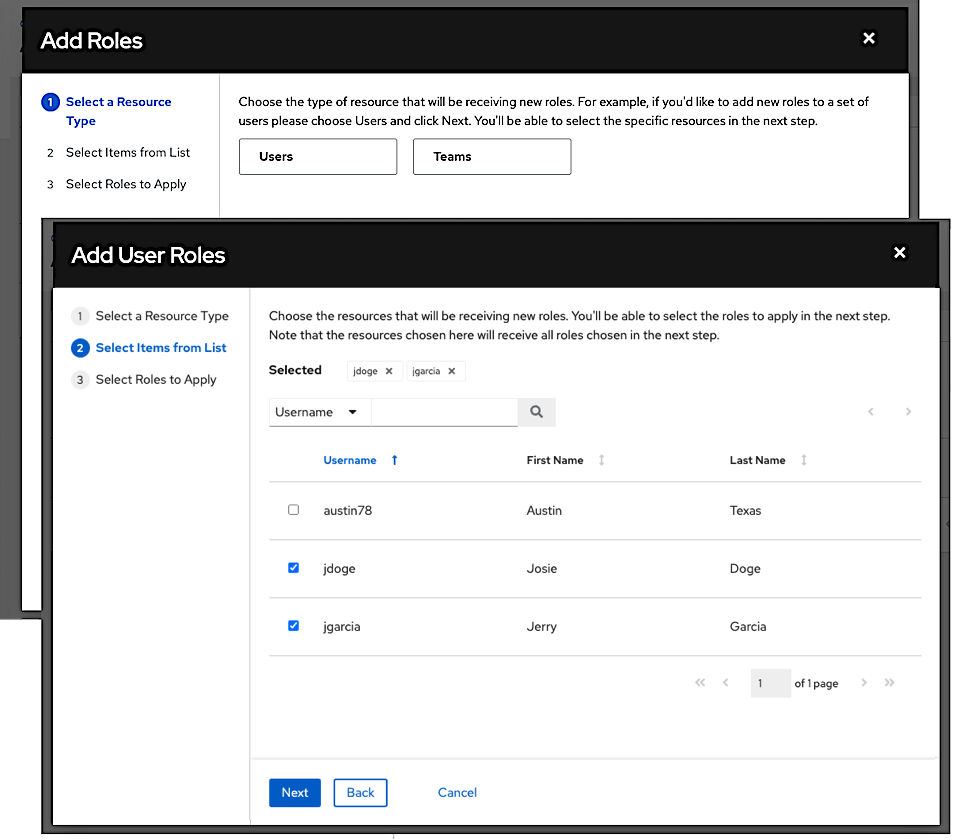

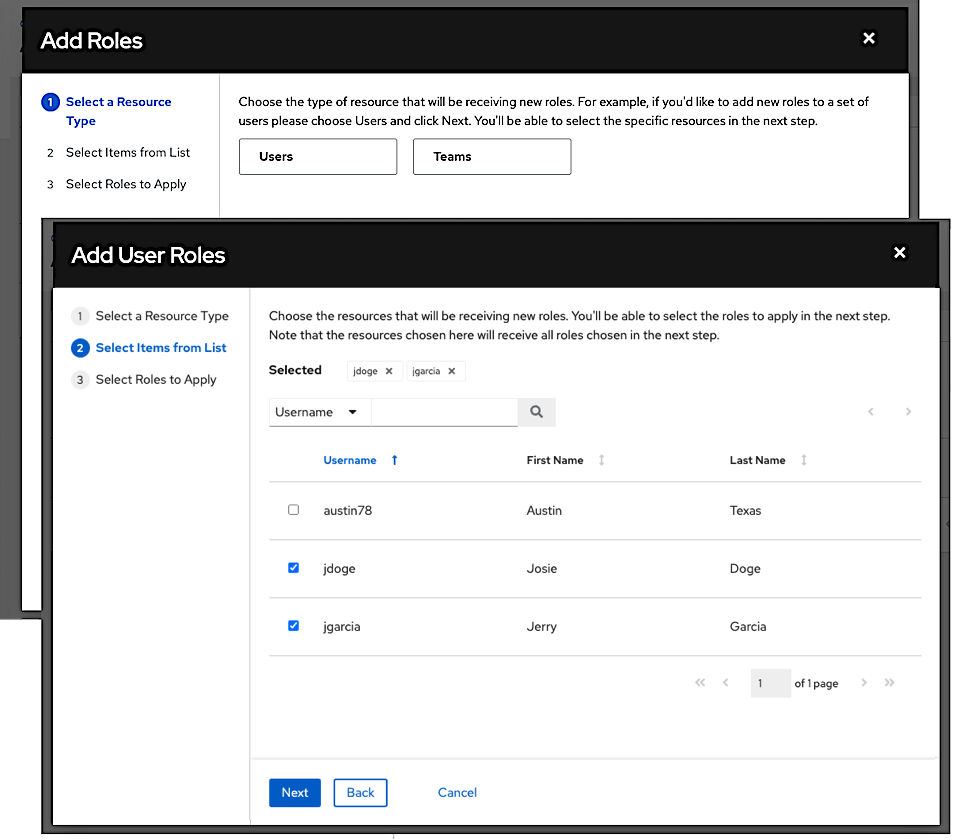

- In the Access tab of the Organization page, click Add.

- Select a user or team to add.

- Click Next.

- Select one or more users or teams from the list by clicking the checkbox next to the name to add them as members.

Click Next.

In this example, two users have been selected.

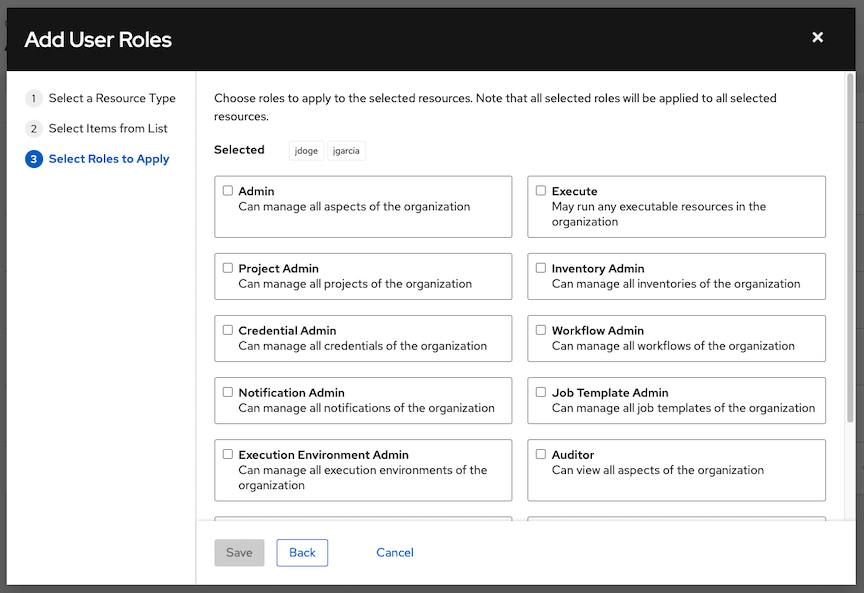

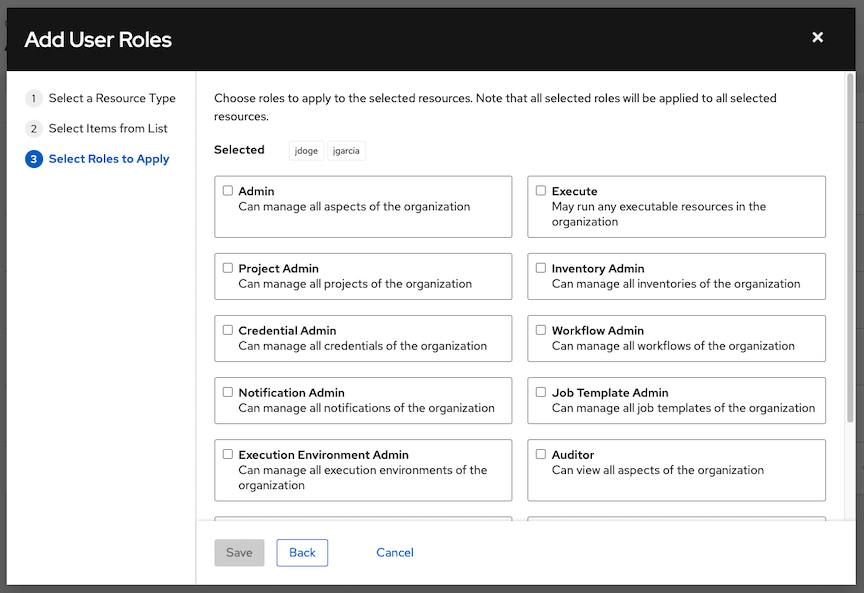

Select the role you want the selected user or team to have. Scroll down for a complete list of roles. Different resources have different options available.

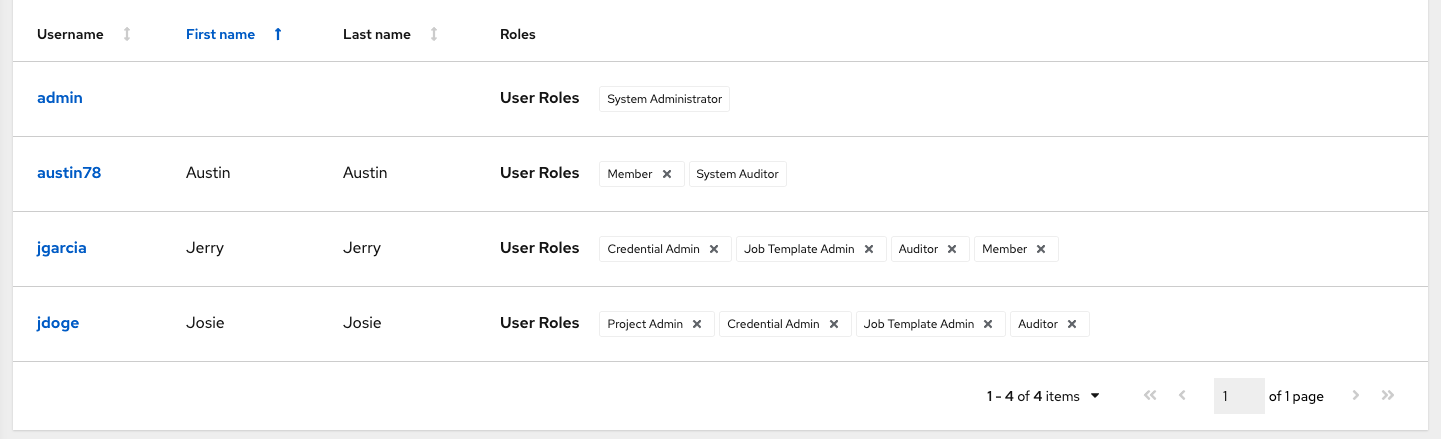

Click Save to apply the roles to the selected user or team, and to add them as members. The Add Users or Add Teams window displays the updated roles assigned for each user and team.

Note

A user or team with associated roles retains them if they are reassigned to another organization.

- To remove roles for a particular user, click the disassociate icon next to its resource. This launches a confirmation dialog, asking you to confirm the disassociation.

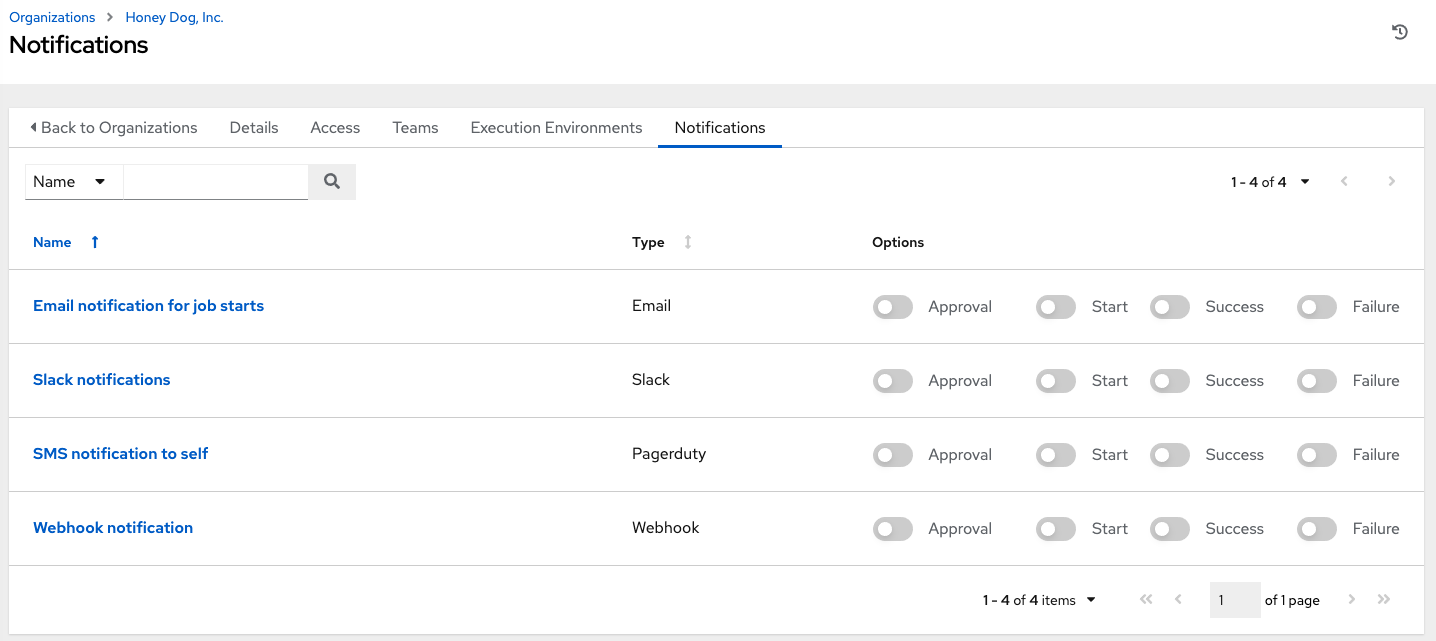

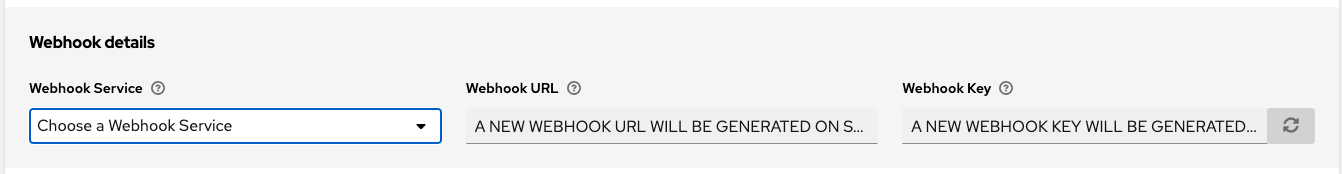

7.2.2. Work with Notifications

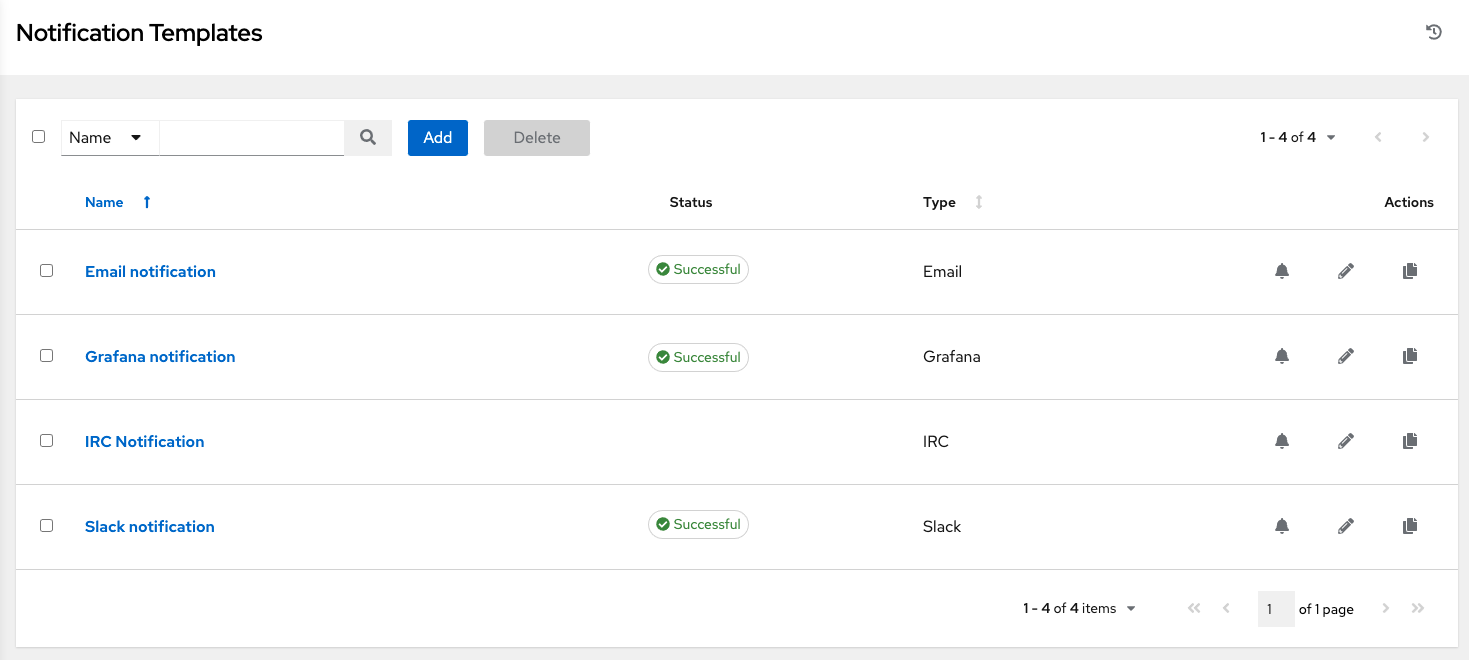

Selecting the Notifications tab on the Organization details page enables you to review any notification integrations you have set up.

Use the toggles to enable or disable the notifications to use with your particular organization. For more information, see Enable and Disable Notifications.

If no notifications have been set up, select Administration → Notifications from the navigation panel.

For information on configuring notification types, see Notification Types.

Chapter 8. Managing Users in automation controller

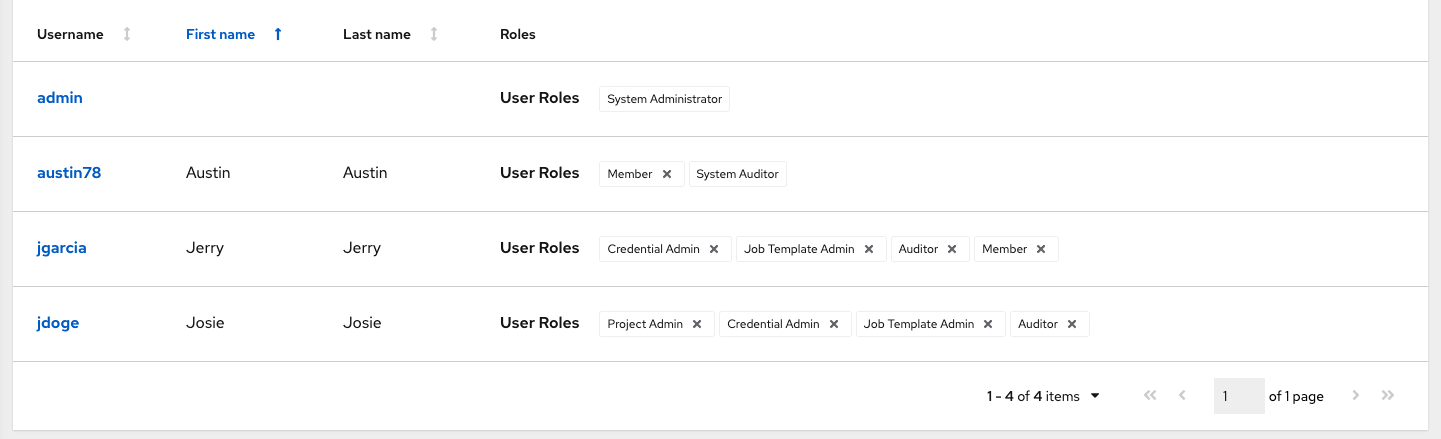

Users associated with an organization are shown in the Access tab of the organization.

Other users can be added to an organization, including a Normal User, System Auditor, or System Administrator, but they must be created first.

You can sort or search the User list by Username, First Name, or Last Name. Click the headers to toggle your sorting preference.

You can view user permissions and user type beside the user name on the Users page.

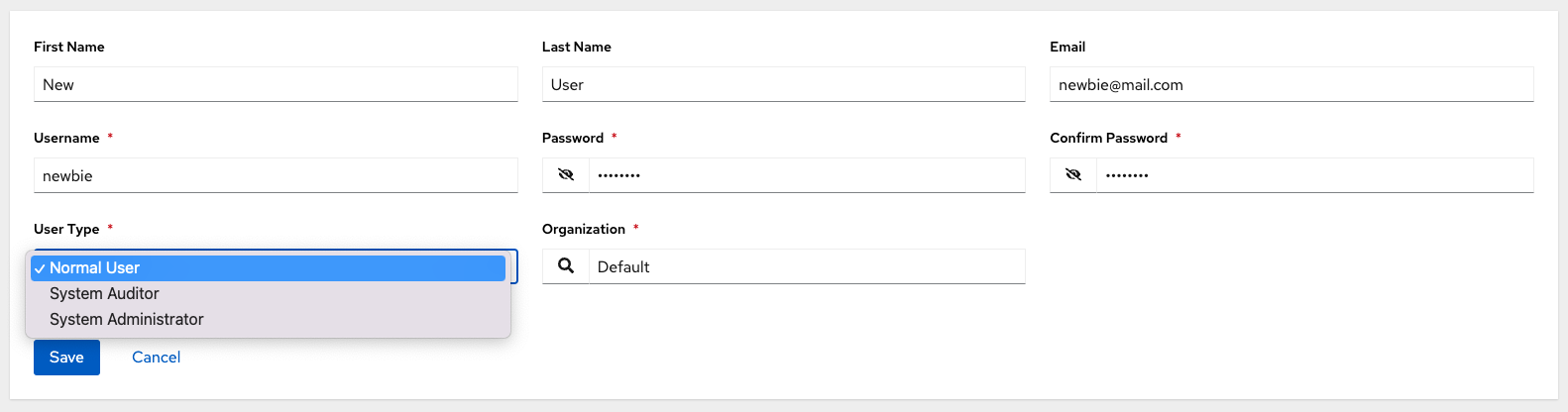

8.1. Creating a user

Use the following procedure to create new users in automation controller and assign them a role.

Procedure

On the Users page, click Add.

The Create User dialog opens.

Enter the appropriate details about your new user. Fields marked with an asterisk (*) are required.

Note

If you are modifying your own password, log out and log back in again for it to take effect.

You can assign three types of users:

- Normal User: Normal Users have read and write access limited to the resources (such as inventory, projects, and job templates) for which that user has been granted the appropriate roles and privileges.

- System Auditor: Auditors inherit the read-only capability for all objects within the environment.

- System Administrator: A System Administrator (also known as a Superuser) has full system administration privileges, with full read and write privileges over the entire installation. A System Administrator is typically responsible for managing all aspects of the installation and delegating responsibilities for day-to-day work to various users.

Note

A default administrator with the role of System Administrator is automatically created during the installation process and is available to all users of automation controller. One System Administrator must always exist. To delete the System Administrator account, you must first create another System Administrator account.

Click Save.

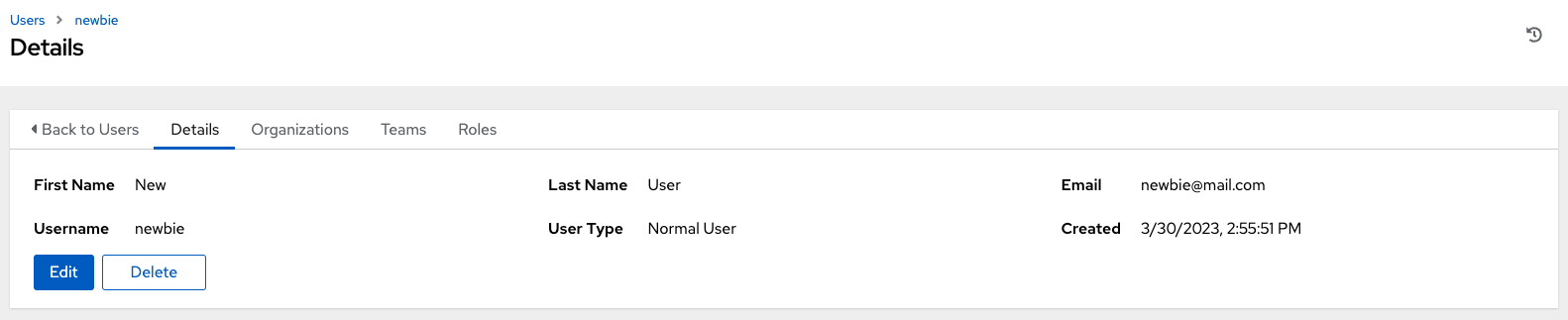

When the user is successfully created, the User dialog opens.

Click Delete to delete the user, or you can delete users from a list of current users. For more information, see Deleting a user.

The same window opens whether you click the user’s name or the Edit icon beside the user. You can use this window to review and modify the User’s Organizations, Teams, Roles, and other user membership details.

If the user is not newly created, the details screen displays the last login activity of that user.

If you log in as yourself, and view the details of your user profile, you can manage tokens from your user profile.

For more information, see Adding a user token.

8.2. Deleting a user

Before you can delete a user, you must have user permissions. When you delete a user account, the name and email of the user are permanently removed from automation controller.

Procedure

- From the navigation panel, select Access → Users.

- Click Users to display a list of the current users.

- Select the check box for the user that you want to remove.

- Click Delete.

- Click Delete in the confirmation warning message to permanently delete the user.

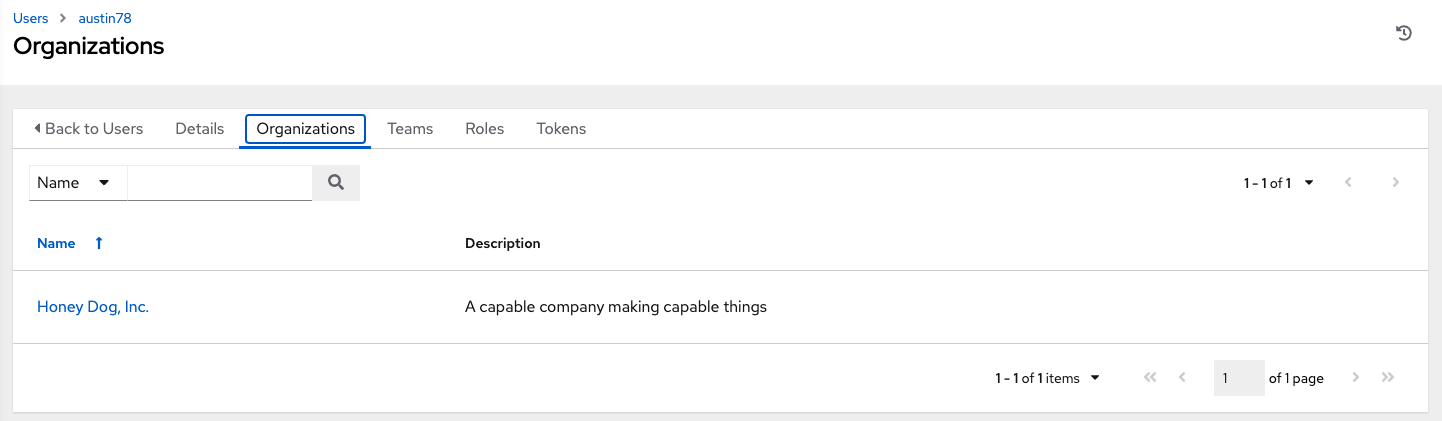

8.3. Displaying user organizations

Select a specific user to display the Details page, then select the Organizations tab to display the list of organizations of which that user is a member.

Organization membership cannot be modified from this display panel.

8.4. Displaying a user’s teams

From the Users > Details page, select the Teams tab to display the list of teams of which that user is a member.

You cannot modify team membership from this display panel. For more information, see Teams.

Until you create a team and assign a user to that team, the assigned team details for that user are displayed as empty.

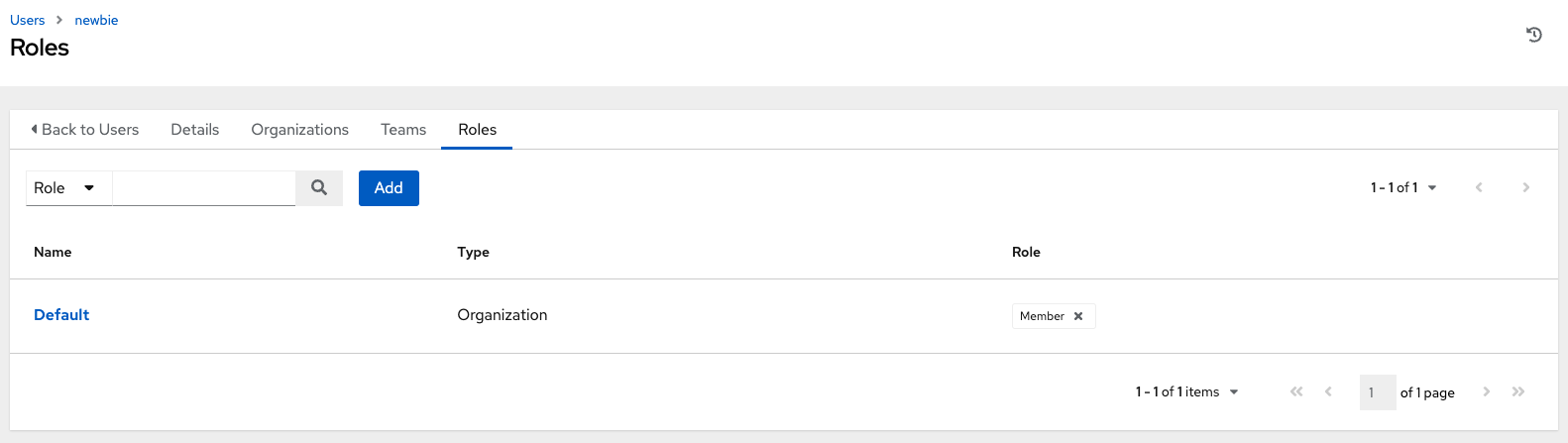

8.5. Displaying a user’s roles

From the Users > Details page, select the Roles tab to display the set of roles assigned to this user. These offer the ability to read, change, and administer projects, inventories, job templates, and other elements.

8.5.1. Adding and removing user permissions

To add permissions to a particular user:

Procedure

- From the Users list view, click on the name of a user.

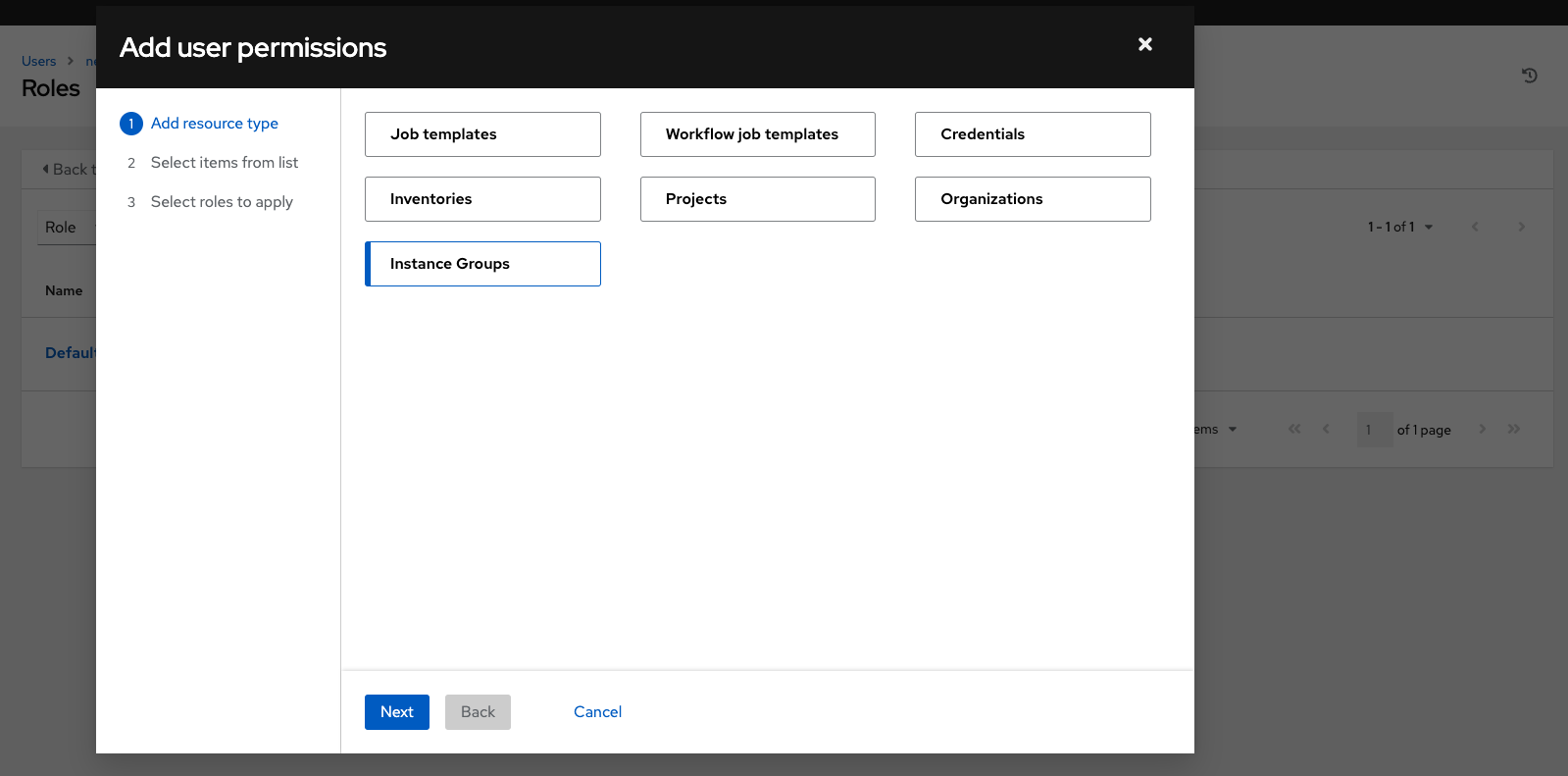

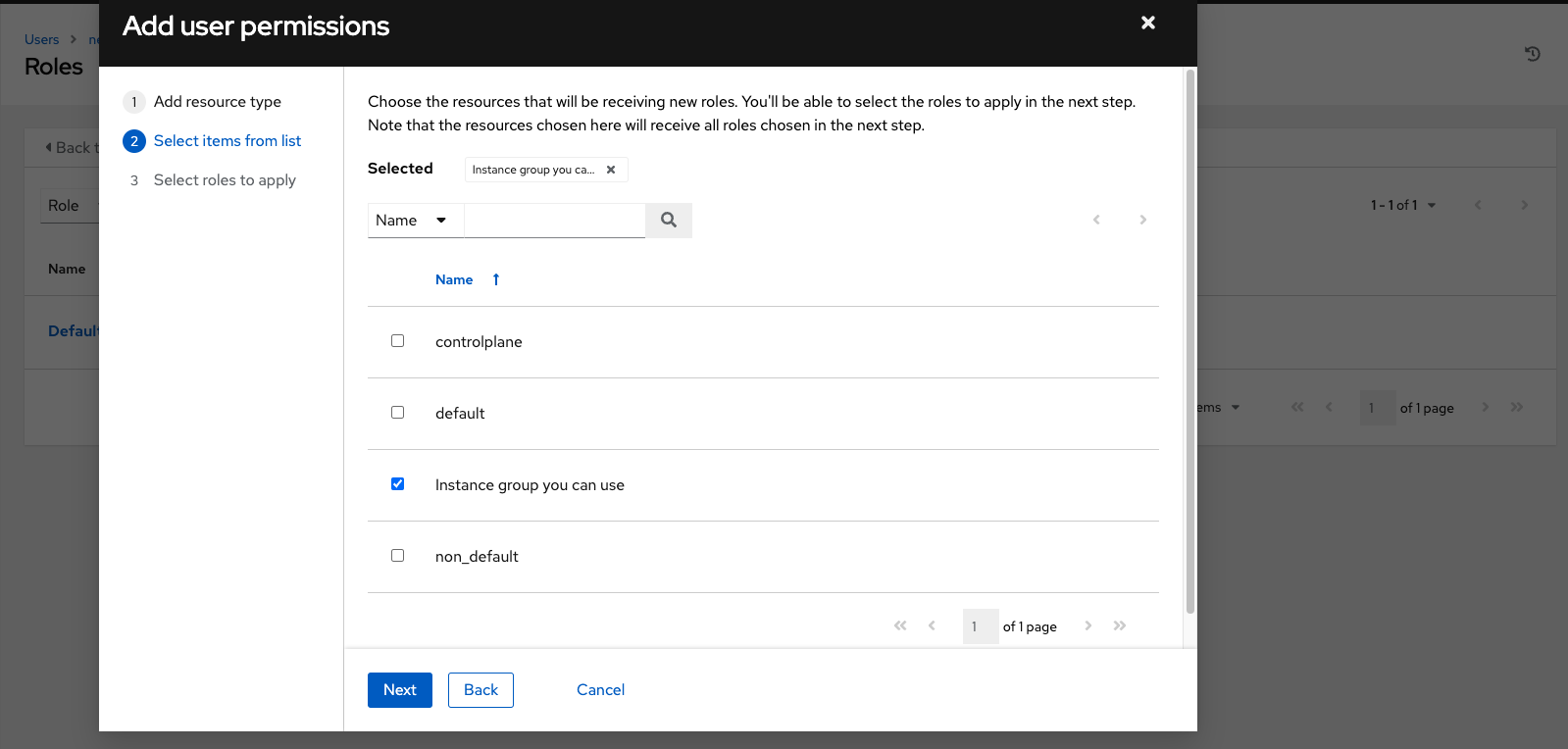

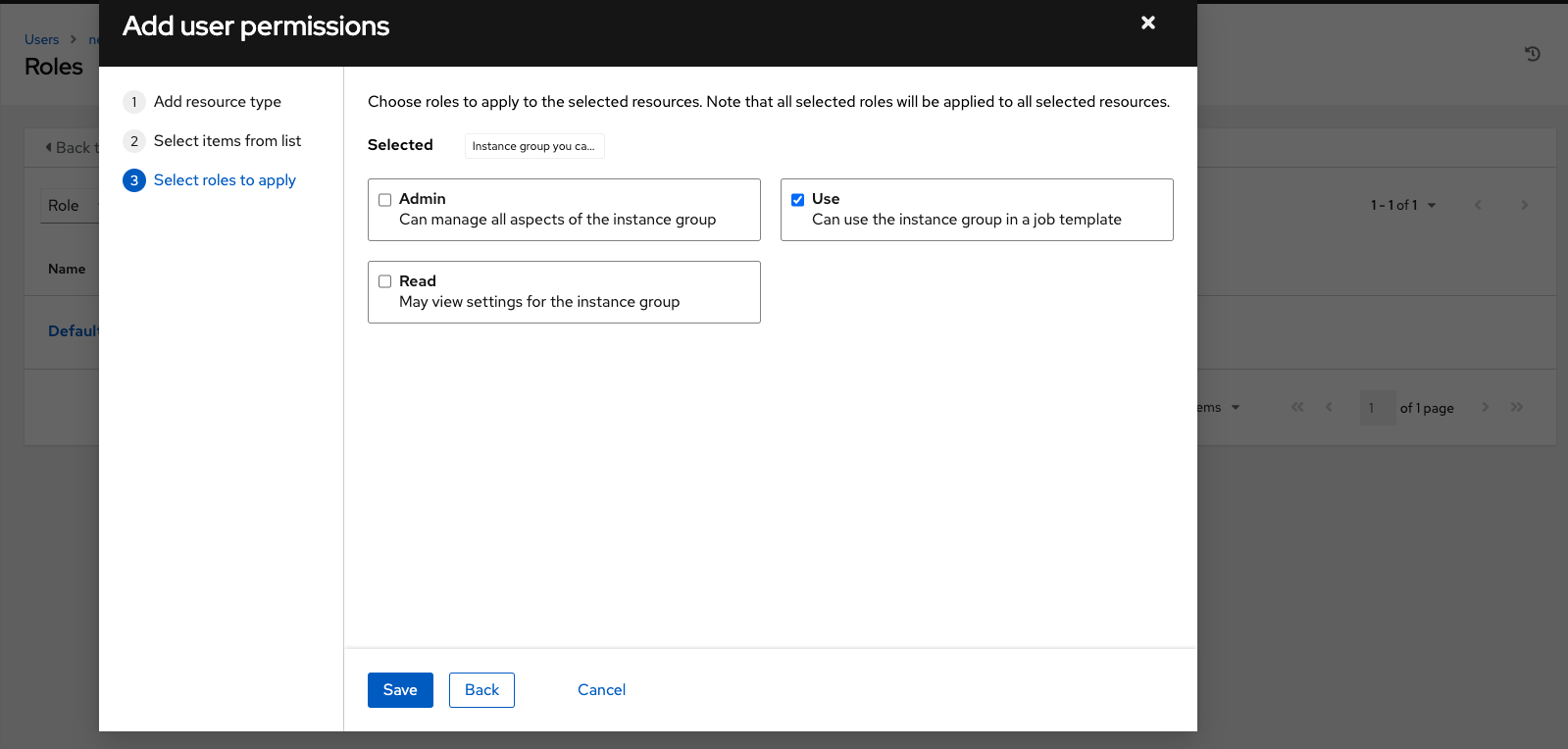

- On the Details page, click Add. This opens the Add user permissions wizard.

- Select the object to which the user will have access and for which you want to assign permissions.

- Click Next.

Select the resource to assign team roles and click Next.

Select the resource you want to assign permissions to. Different resources have different options available.

- Click Save.

- The Roles page displays the updated profile for the user with the permissions assigned for each selected resource.

You can also add teams, individual users, or multiple users and assign them permissions at the object level. This includes templates, credentials, inventories, projects, organizations, or instance groups. This feature reduces the time for an organization to onboard many users at one time.

To remove permissions:

- Click the disassociate icon next to the resource. This launches a confirmation dialog asking you to confirm the disassociation.

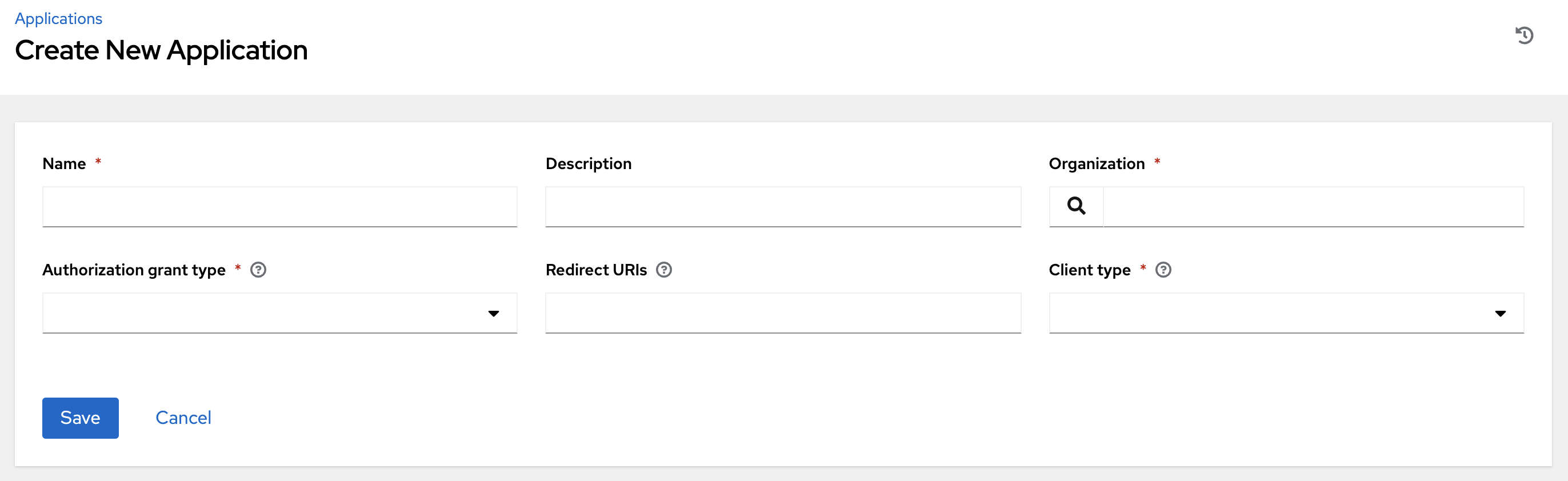

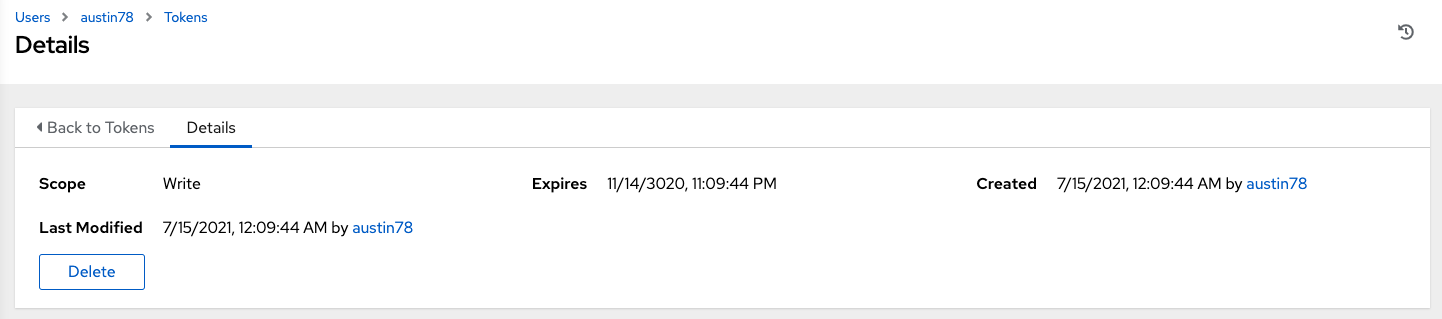

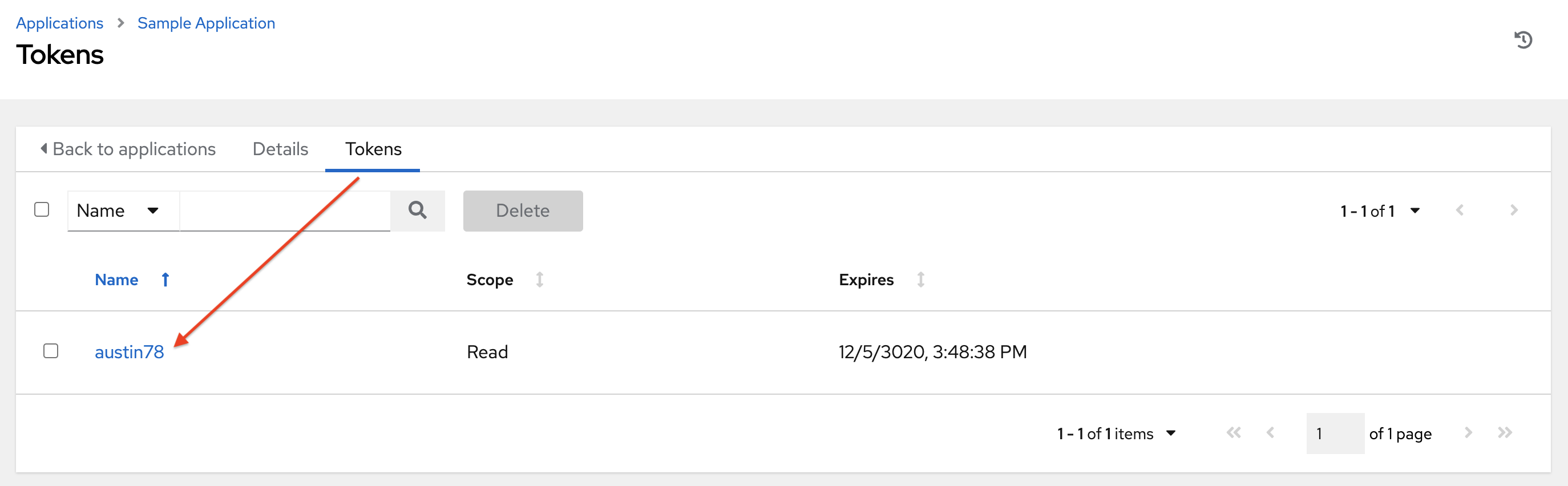

8.6. Creating tokens for a user

The Tokens tab is only present for the user you created for yourself.

Before you add a token for your user, you might want to Create an application if you want to associate your token with it.

You can also create a Personal Access Token (PAT) without associating it with any application.

Procedure

- Select your user from the Users list view to configure your OAuth 2 tokens.

- Select the Tokens tab from your user’s profile.

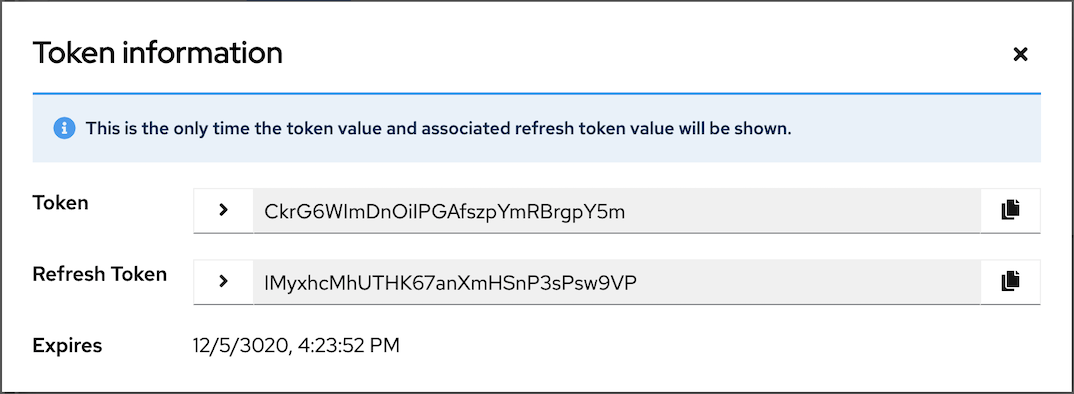

- Click Add to open the Create Token window.

Enter the following information:

Application: Enter the name of the application with which you want to associate your token.

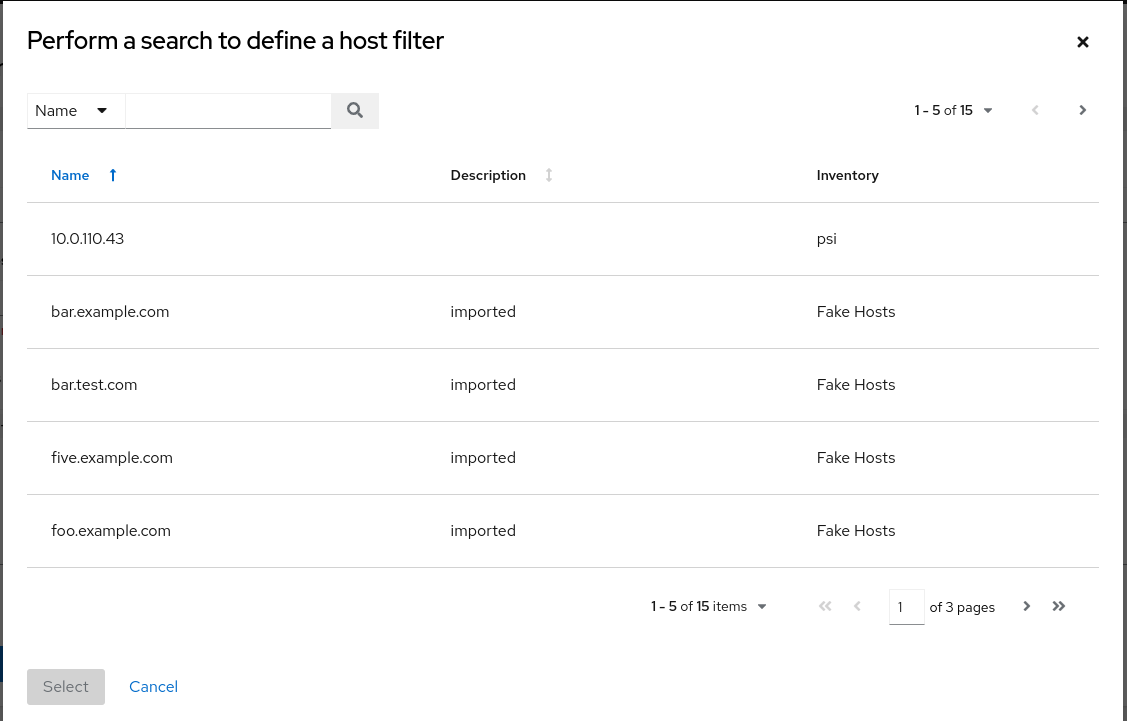

Alternatively, you can search for the application name by clicking the search icon. This opens a separate window that enables you to choose from the available options. Use the Search bar to filter by name if the list is extensive.

Leave this field blank if you want to create a PAT that is not linked to any application.

- Optional: Description: Provide a short description for your token.

- Scope: Specify the level of access you want this token to have.

- Click Save, or click Cancel to abandon your changes.

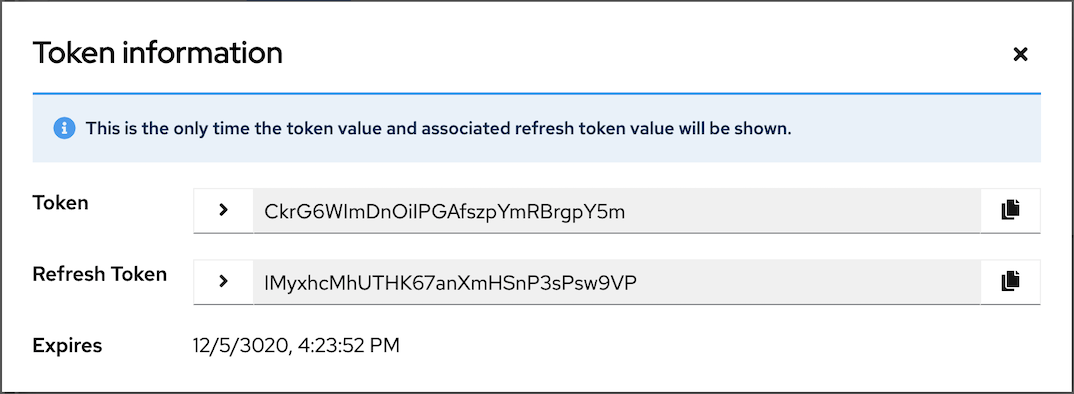

After the token is saved, the newly created token for the user is displayed.

Important

This is the only time the token value and associated refresh token value are ever shown.
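Once you have copied the token value, a client can present it to the API in an Authorization header using the Bearer scheme. The following Python sketch only builds the request and does not send it. The host name and the token placeholder are assumptions for illustration; substitute the values for your own installation.

```python
from urllib.request import Request


def authorized_request(base_url: str, path: str, token: str) -> Request:
    """Build (but do not send) a GET request that carries an OAuth 2 token."""
    url = base_url.rstrip("/") + path
    return Request(url, headers={"Authorization": f"Bearer {token}"})


# Hypothetical host and placeholder token; substitute your own values.
req = authorized_request("https://controller.example.com", "/api/v2/jobs/", "<token-value>")
print(req.full_url)
print(req.get_header("Authorization"))
```

Keeping the token out of source code, for example by reading it from an environment variable, avoids exposing it in version control.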

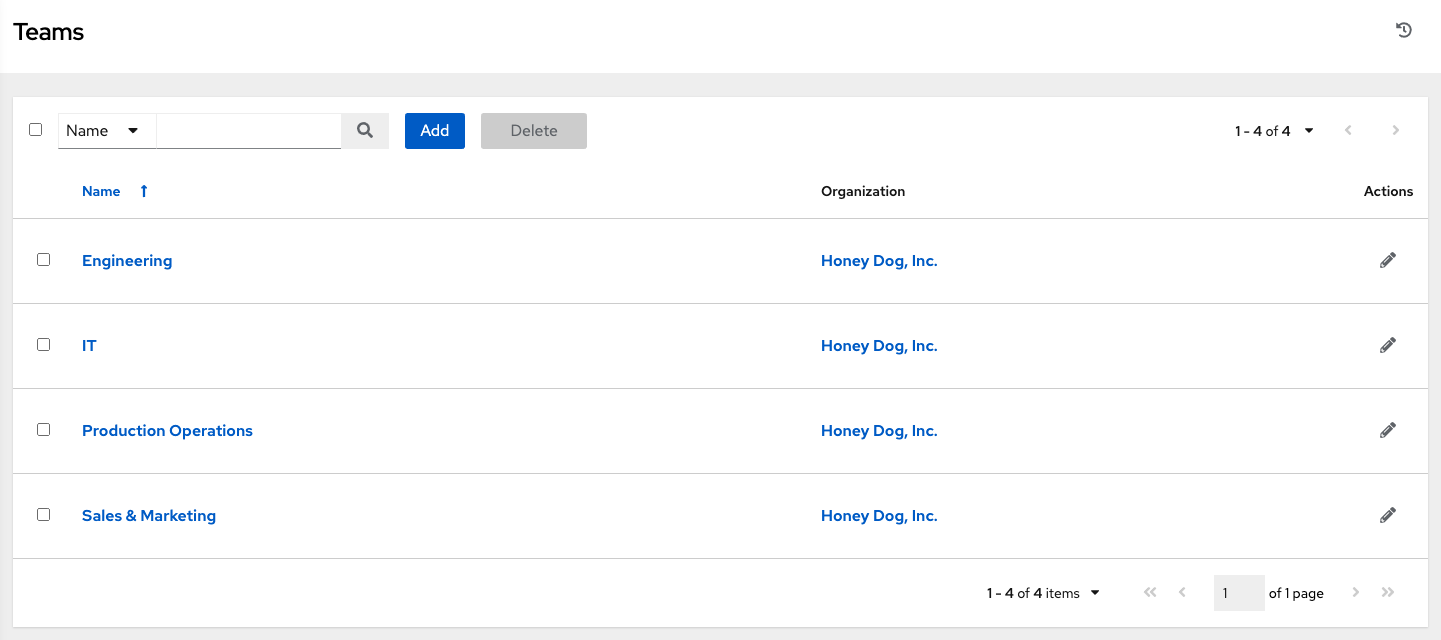

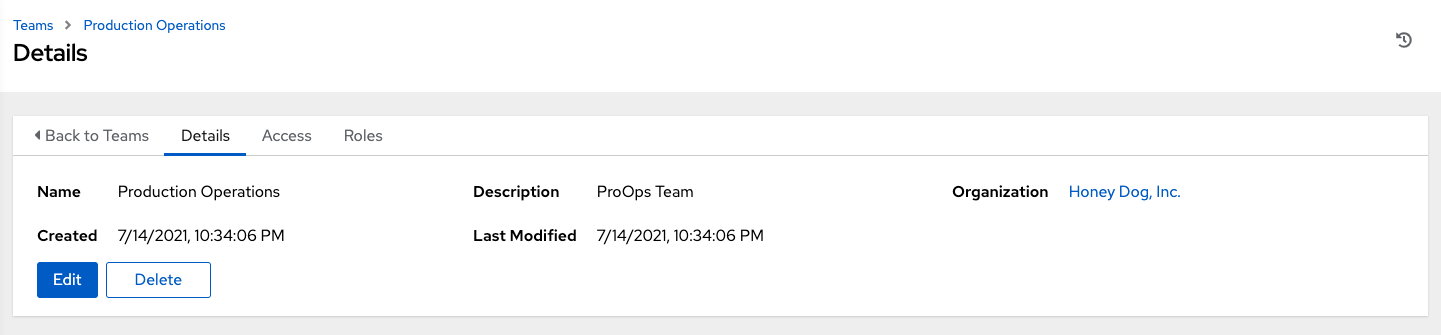

Chapter 9. Managing teams

A Team is a subdivision of an organization with associated users, projects, credentials, and permissions. Teams offer a means to implement role-based access control schemes and delegate responsibilities across organizations. For example, you can grant permissions to a whole team rather than to each user on the team.

From the navigation panel, select Access → Teams.

You can sort and search the team list by Name or Organization.

Click the Edit icon next to the entry to edit information about the team. You can also review Users and Permissions associated with this team.

9.1. Creating a team

You can create as many teams of users as you need for your organization. You can assign permissions to each team, just as with users. Teams can also assign ownership for credentials, minimizing the steps to assign the same credentials to the same user.

Procedure

- On the Teams page, click Add.

Enter the appropriate details into the following fields:

- Name

- Optional: Description

- Organization: You must select an existing organization

- Click Save. The Details dialog opens.

Review and edit your team information.

9.1.1. Adding or removing a user to a team

To add a user to a team, the user must already have been created. For more information, see Creating a user. Adding a user to a team adds them as a member only. Use the Access tab to specify a role for the user on different resources.

Procedure

- In the Access tab of the Details page, click Add.

- Follow the prompts to add a user and assign them to roles.

- Click Save.

9.1.2. Removing roles for a user

Procedure

- To remove roles for a particular user, click the disassociate icon next to its resource.

This launches a confirmation dialog, asking you to confirm the disassociation.

9.1.3. Team access

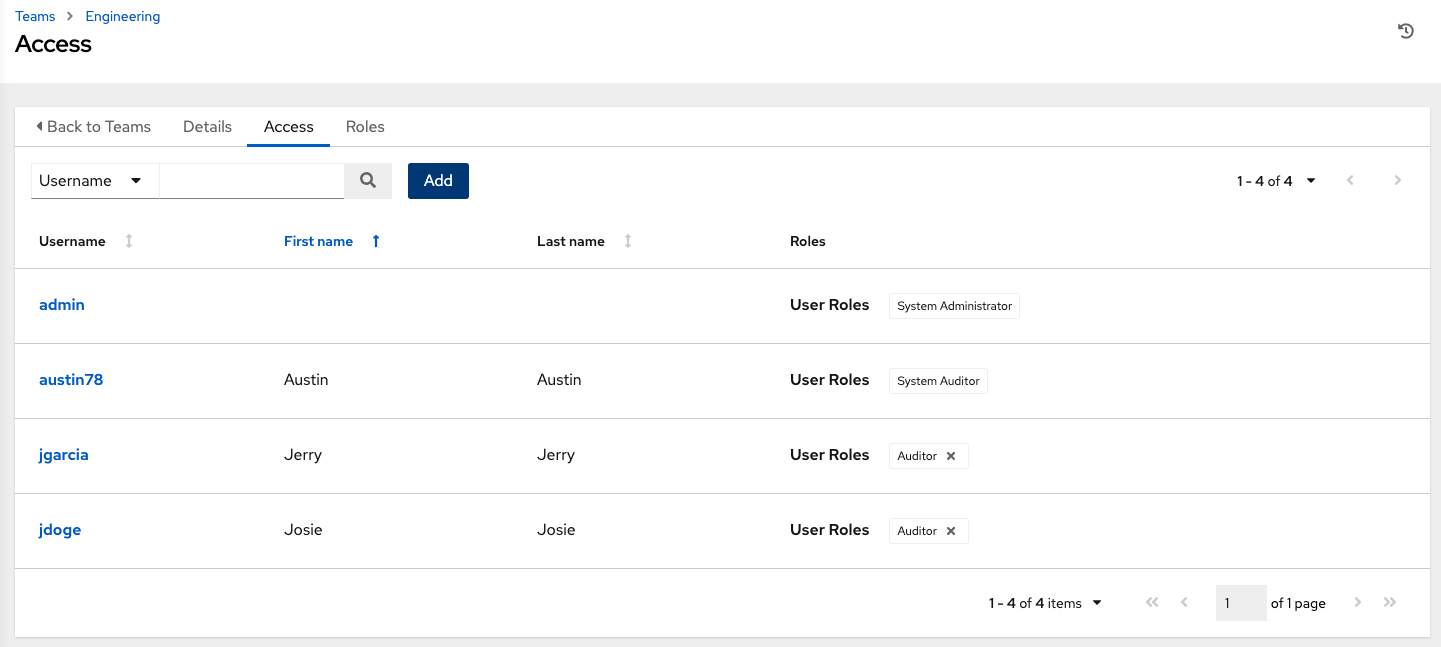

The Access tab displays the list of users that are members of a specific team.

You can search this list by Username, First Name, or Last Name. For more information, see Users.

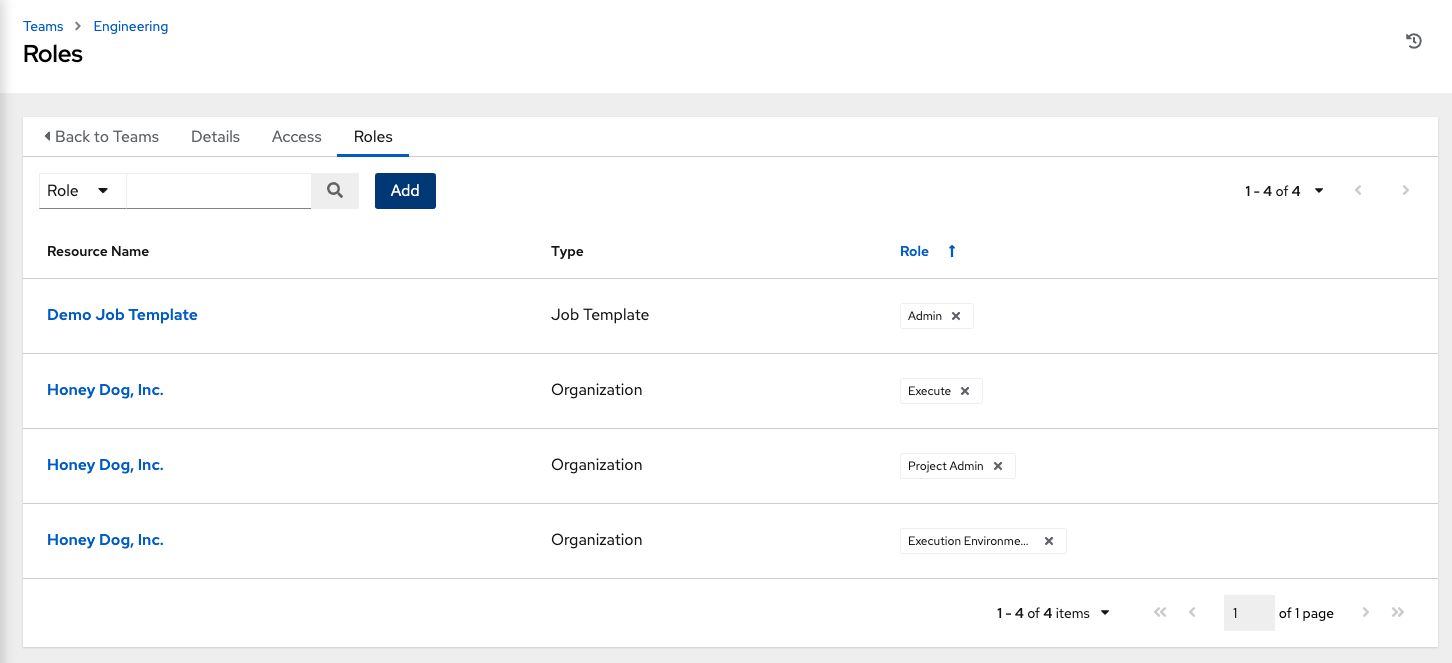

9.1.4. Team roles and permissions

Select the Roles tab on the Roles Details page to display a list of the permissions that are currently available for this team.

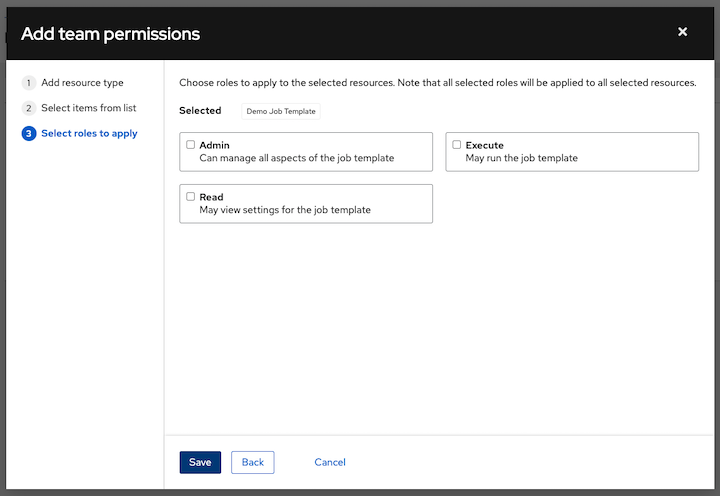

9.1.5. Adding and removing team permissions

By default, all teams that you create have read permissions. You can assign additional permissions, such as the ability to edit and administer projects, inventories, and other elements.

You can set permissions through an inventory, project, job template, or within the Organizations view.

Procedure

- From the Team list view, click the required team.

- On the Details page, click Add. This opens the Add team permissions wizard.

- Select the object to which the team requires access.

- Click Next.

- Select the resource to assign team roles.

- Click Next.

Click the checkbox beside the role to assign that role to your chosen type of resource. Different resources have different options available.

- Click Save.

The updated profile for the user with the roles assigned for each selected resource is displayed.

9.1.5.1. Removing team permissions

- To remove Permissions for a particular resource, click the disassociate icon next to its resource. This launches a confirmation dialog, asking you to confirm the disassociation.

You can also add teams, individual users, or many users and assign them permissions at the object level. This includes projects, inventories, job templates, and workflow templates. This feature reduces the time for an organization to onboard many users at one time.

Chapter 10. Managing user credentials

Credentials authenticate the automation controller user when launching jobs against machines, synchronizing with inventory sources, and importing project content from a version control system.

You can grant users and teams the ability to use these credentials, without exposing the credential to the user. If a user moves to a different team or leaves the organization, you do not have to re-key all of your systems just because that credential was available in automation controller.

Automation controller encrypts passwords and key information in the database and never makes secret information visible through the API. For further information, see the Automation controller Administration Guide.

10.1. How credentials work

Automation controller uses SSH to connect to remote hosts. To pass the key from automation controller to SSH, the key must be decrypted before it can be written to a named pipe. Automation controller uses that pipe to send the key to SSH, so that the key is never written to disk. If passwords are used, automation controller handles them by responding directly to the password prompt and decrypting the password before writing it to the prompt.
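The named-pipe technique mentioned above can be illustrated with a generic Python sketch. It is not automation controller's implementation: the function name, pipe name, and sample bytes are hypothetical, and the example assumes a POSIX system where os.mkfifo is available. The data travels through the pipe from a writer to a reader without being stored in a regular file.

```python
import os
import tempfile
import threading


def pass_secret_through_fifo(secret: bytes) -> bytes:
    """Send a secret from a writer thread to a reader over a named pipe (POSIX only)."""
    with tempfile.TemporaryDirectory() as workdir:
        fifo_path = os.path.join(workdir, "key_pipe")
        os.mkfifo(fifo_path, mode=0o600)  # accessible by the owner only

        def writer() -> None:
            # open() blocks until the reader opens the other end of the pipe.
            with open(fifo_path, "wb") as pipe:
                pipe.write(secret)

        thread = threading.Thread(target=writer)
        thread.start()
        # The reader stands in for the process that consumes the key.
        with open(fifo_path, "rb") as pipe:
            received = pipe.read()
        thread.join()
        return received


print(pass_secret_through_fifo(b"example-key-material") == b"example-key-material")
```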

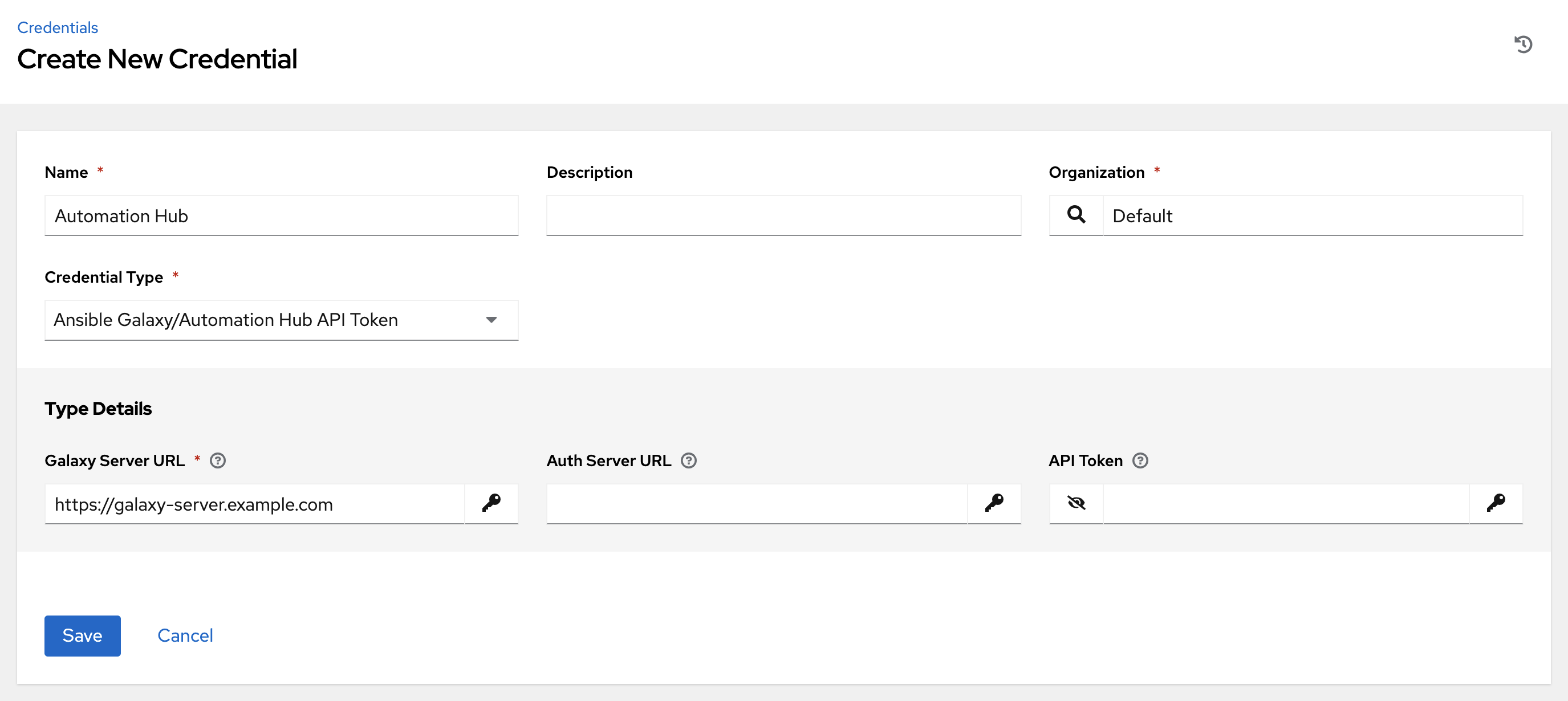

10.2. Creating new credentials

Credentials added to a team are made available to all members of the team. You can also add credentials to individual users.

As part of the initial setup, two credentials are available for your use: Demo Credential and Ansible Galaxy. Use the Ansible Galaxy credential as a template. You can copy this credential, but not edit it. Add more credentials as needed.

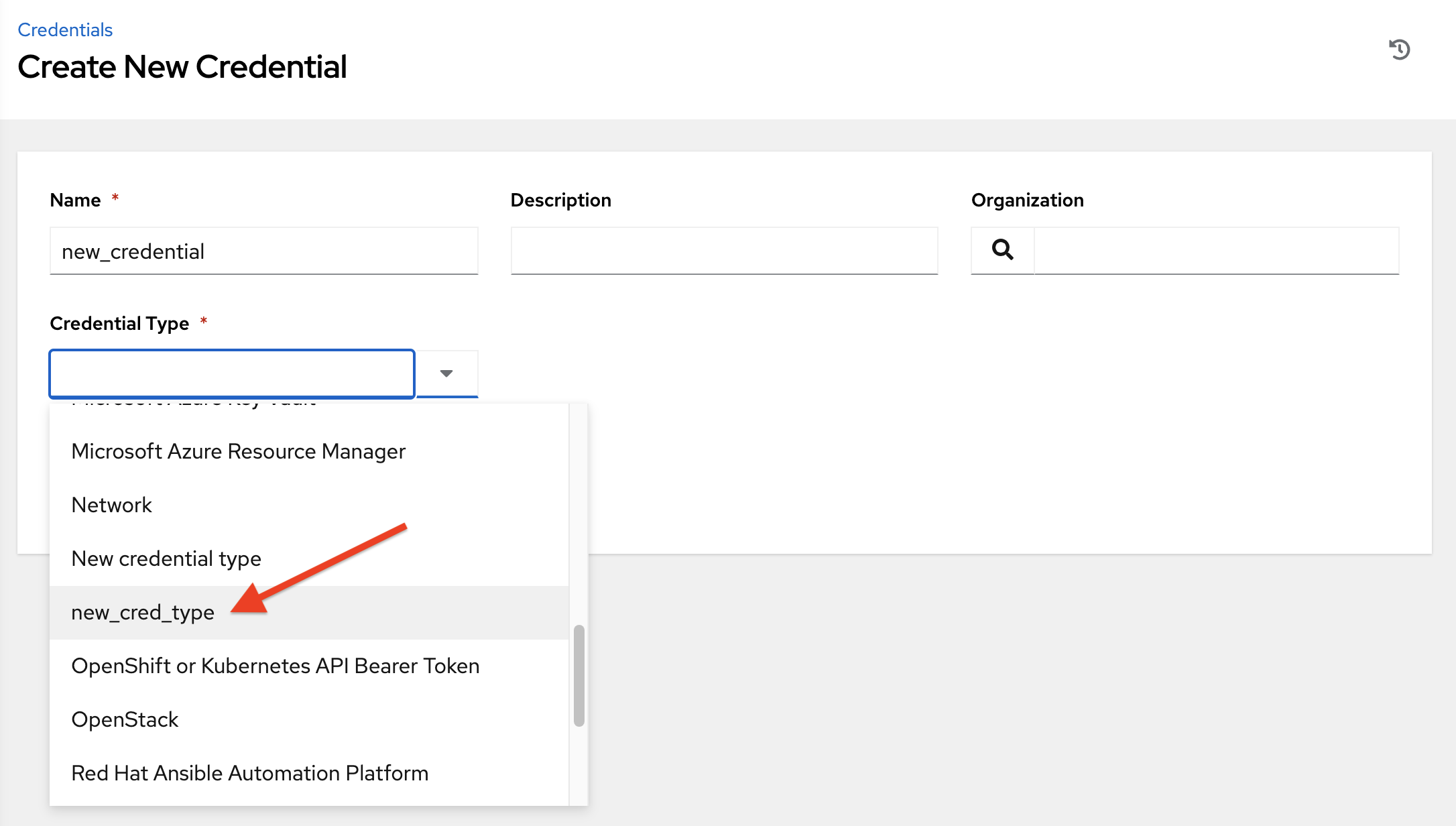

Procedure

- From the navigation panel, select Resources → Credentials.

- Click Add.

Enter the following information:

- The name for your new credential.

- Optional: a description for the new credential.

Optional: The name of the organization with which the credential is associated.

Note

A credential with a set of permissions associated with one organization persists if the credential is reassigned to another organization.

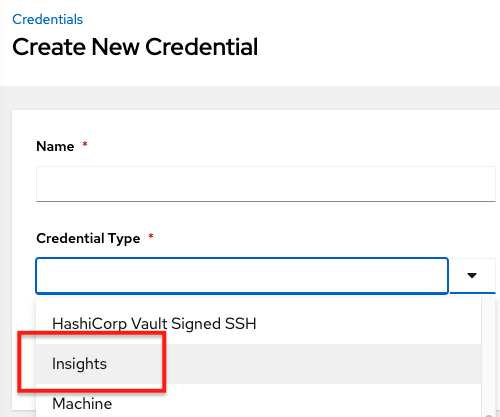

- In the Credential Type field, enter or select the credential type you want to create.

- Enter the appropriate details depending on the type of credential selected, as described in Credential types.

- Click Save.

10.3. Adding new users and job templates to existing credentials

Procedure

- From the navigation panel, select Resources → Credentials.

- Select the credential that you want to assign to additional users.

- Click the Access tab. You can see users and teams associated with this credential and their roles.

- Choose a user and click Add. If no users exist, add them from the Users menu. For more information, see Users.

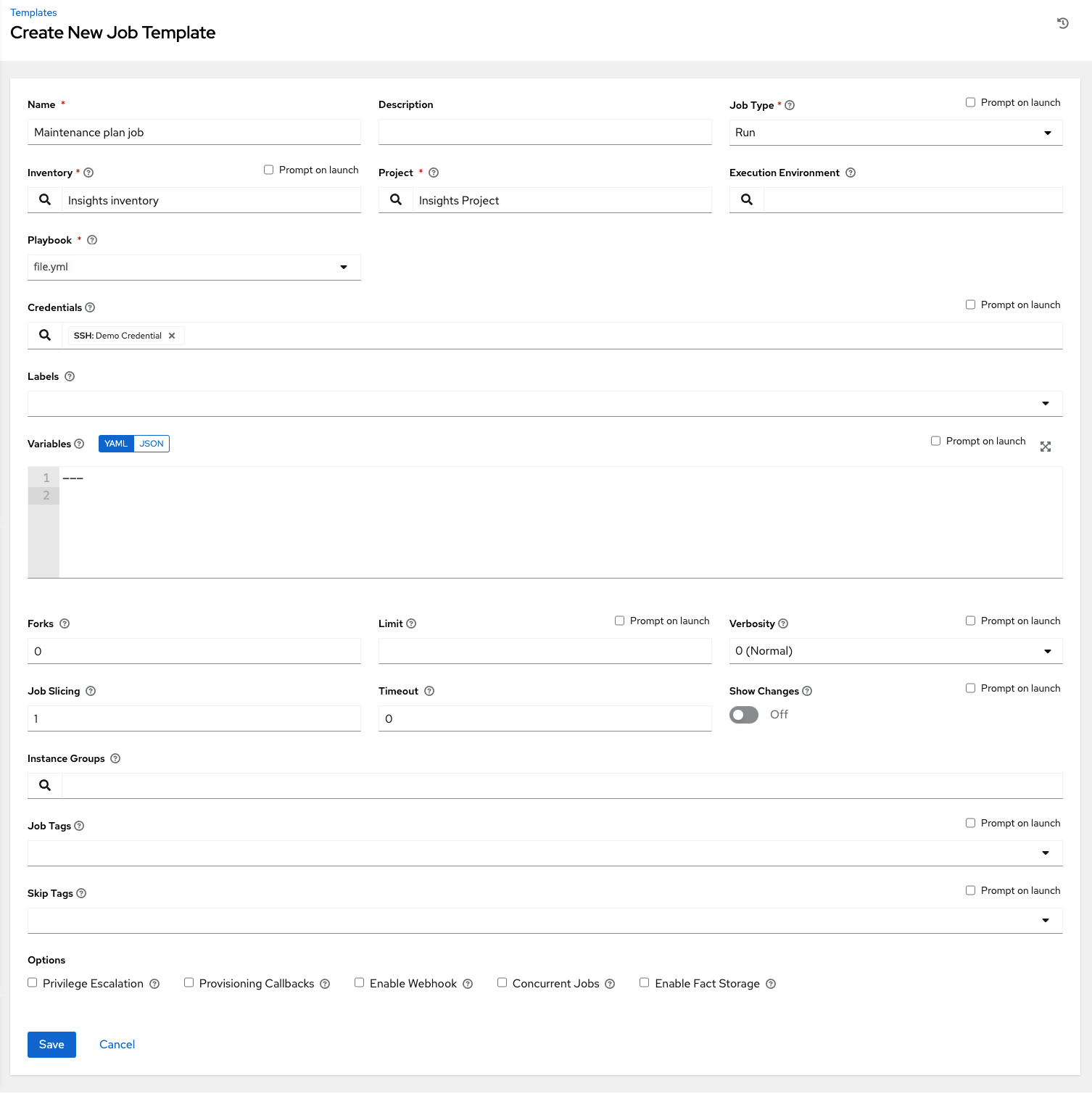

- Select Job Templates to display the job templates associated with this credential, and which jobs have run recently by using this credential.

- Choose a job template and click Add to assign the credential to additional job templates. For more information about creating new job templates, see the Job templates section.

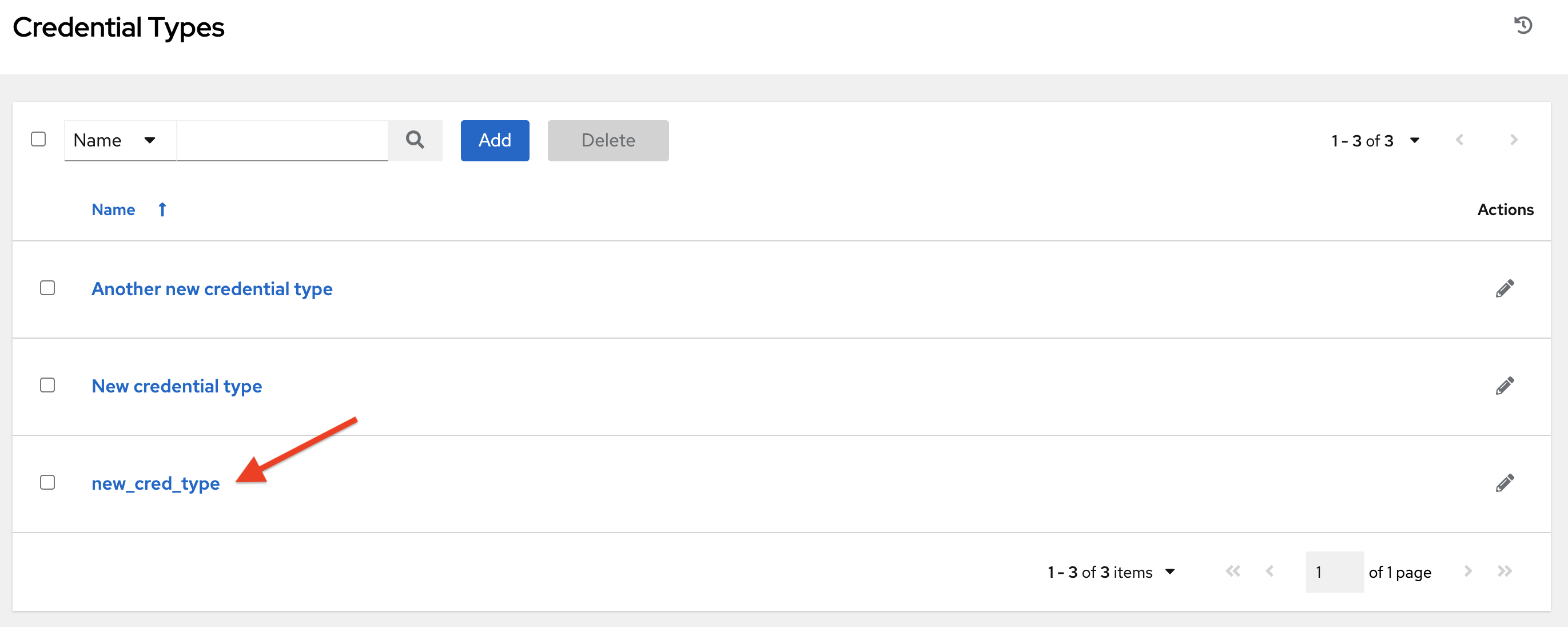

10.4. Credential types

Automation controller supports the following credential types:

- Amazon Web Services

- Ansible Galaxy/Automation Hub API Token

- Centrify Vault Credential Provider Lookup

- Container Registry

- CyberArk Central Credential Provider Lookup

- CyberArk Conjur Secrets Manager Lookup

- GitHub Personal Access Token

- GitLab Personal Access Token

- Google Compute Engine

- GPG Public Key

- HashiCorp Vault Secret Lookup

- HashiCorp Vault Signed SSH

- Insights

- Machine

- Microsoft Azure Key Vault

- Microsoft Azure Resource Manager

- Network

- OpenShift or Kubernetes API Bearer Token

- OpenStack

- Red Hat Ansible Automation Platform

- Red Hat Satellite 6

- Red Hat Virtualization

- Source Control

- Thycotic DevOps Secrets Vault

- Thycotic Secret Server

- Vault

- VMware vCenter

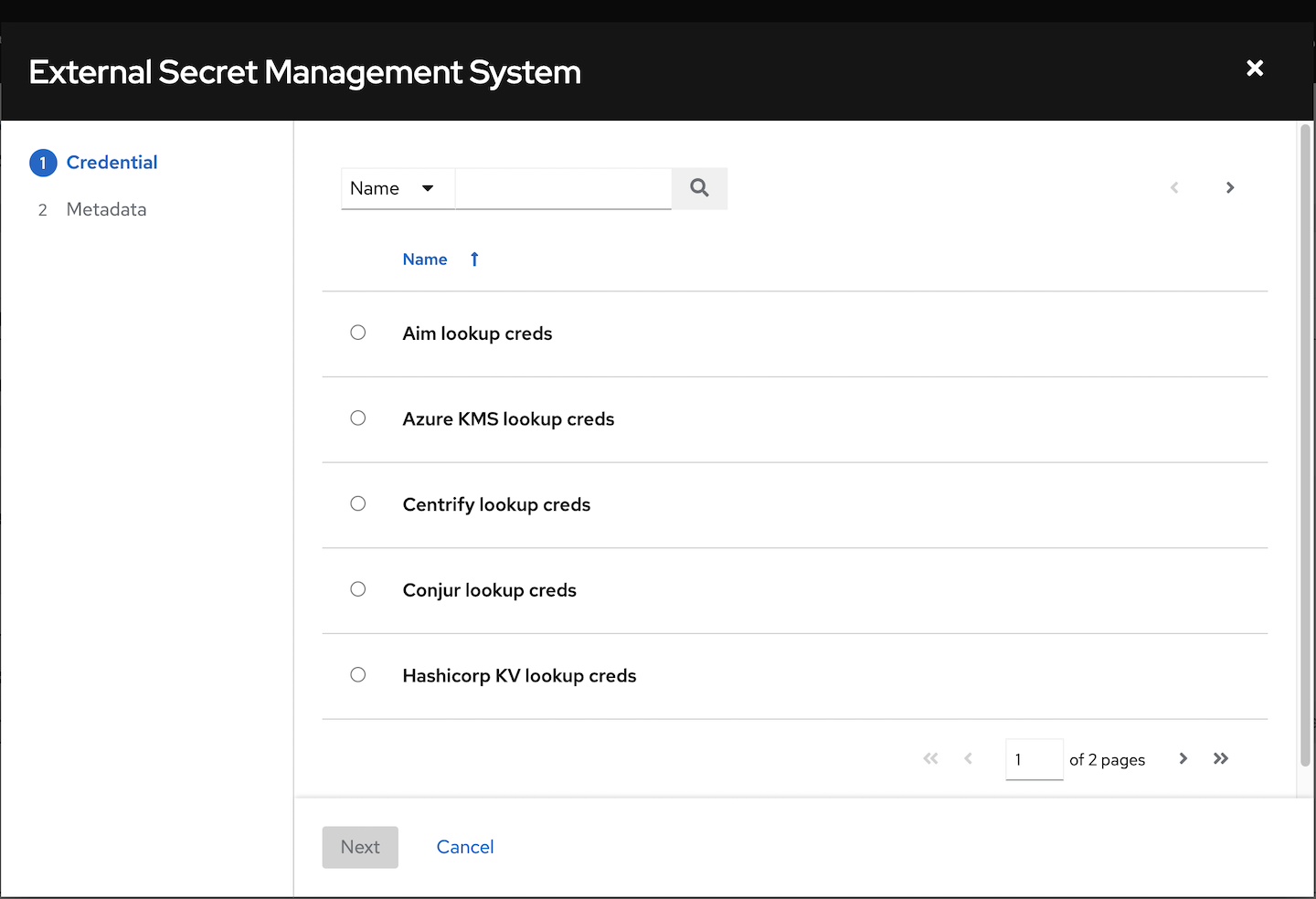

The credential types associated with Centrify, CyberArk, HashiCorp Vault, Microsoft Azure Key Vault, and Thycotic are part of the credential plugins capability that enables an external system to look up your secrets information.

For more information, see Secrets Management System.

10.4.1. Amazon Web Services credential type

Select this credential to enable synchronization of cloud inventory with Amazon Web Services.

Automation controller uses the following environment variables for AWS credentials:

    AWS_ACCESS_KEY_ID
    AWS_SECRET_ACCESS_KEY
    AWS_SECURITY_TOKEN

These are fields prompted in the user interface.

Amazon Web Services credentials consist of the AWS Access Key and Secret Key.
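As an illustration, code running inside a job could read these variables as shown in the following Python sketch. The function name and the placeholder values are hypothetical, and the sketch assumes that AWS_SECURITY_TOKEN is simply absent when no STS token is supplied.

```python
import os


def aws_credentials_from_env(env=None) -> dict:
    """Collect the AWS variables listed above from the job environment."""
    env = os.environ if env is None else env
    return {
        "access_key": env.get("AWS_ACCESS_KEY_ID"),
        "secret_key": env.get("AWS_SECRET_ACCESS_KEY"),
        # Assumed to be unset when no STS token is supplied.
        "security_token": env.get("AWS_SECURITY_TOKEN"),
    }


# Placeholder values for illustration only.
example_env = {"AWS_ACCESS_KEY_ID": "AKIAEXAMPLE", "AWS_SECRET_ACCESS_KEY": "example-secret"}
creds = aws_credentials_from_env(example_env)
print(sorted(name for name, value in creds.items() if value is not None))
```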

Automation controller provides support for EC2 STS tokens, also known as Identity and Access Management (IAM) STS credentials. Security Token Service (STS) is a web service that enables you to request temporary, limited-privilege credentials for AWS IAM users.

If the values of your tags in EC2 contain Booleans (yes/no/true/false), you must quote them.

To use implicit IAM role credentials, do not attach AWS cloud credentials in automation controller when relying on IAM roles to access the AWS API.

Attaching your AWS cloud credential to your job template forces the use of your AWS credentials, not your IAM role credentials.

Additional resources

For more information about the IAM/EC2 STS Token, see Temporary security credentials in IAM.

10.4.1.1. Access Amazon EC2 credentials in an Ansible Playbook

You can get AWS credential parameters from a job runtime environment:

    vars:
      aws:
        access_key: '{{ lookup("env", "AWS_ACCESS_KEY_ID") }}'
        secret_key: '{{ lookup("env", "AWS_SECRET_ACCESS_KEY") }}'
        security_token: '{{ lookup("env", "AWS_SECURITY_TOKEN") }}'

10.4.2. Ansible Galaxy/Automation Hub API token credential type

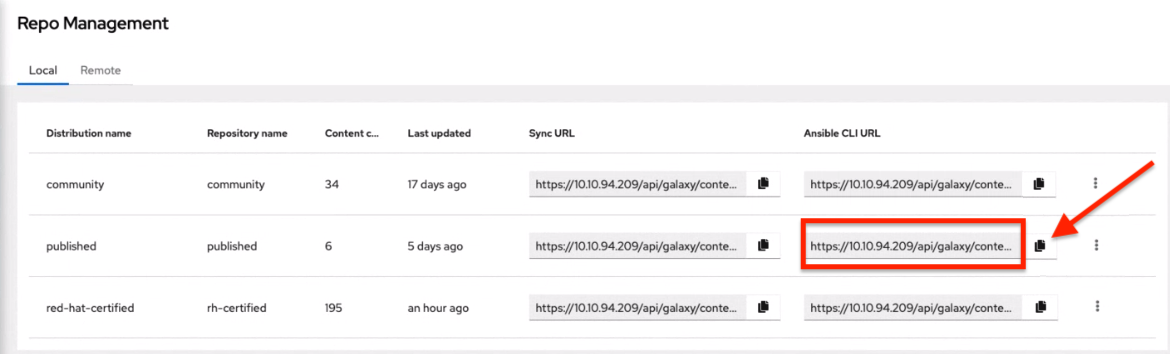

Select this credential to access Ansible Galaxy or use a collection published on an instance of private automation hub.

Enter the Galaxy server URL on this screen.

Populate the Galaxy Server URL field with the contents of the Server URL field at Red Hat Hybrid Cloud Console. Populate the Auth Server URL field with the contents of the SSO URL field at Red Hat Hybrid Cloud Console.

Additional resources

For more information, see Using Collections with automation hub.

10.4.3. Centrify Vault Credential Provider Lookup credential type

This is considered part of the secret management capability. For more information, see Centrify Vault Credential Provider Lookup.

10.4.4. Container Registry credential type

Select this credential to enable automation controller to access a collection of container images. For more information, see What is a container registry?.

You must specify a name. The Authentication URL field is pre-populated with a default value. You can change the value by specifying the authentication endpoint for a different container registry.

10.4.5. CyberArk Central Credential Provider Lookup credential type

This is considered part of the secret management capability.

For more information, see CyberArk Central Credential Provider (CCP) Lookup.

10.4.6. CyberArk Conjur Secrets Manager Lookup credential type

This is considered part of the secret management capability.

For more information, see CyberArk Conjur Secrets Manager Lookup.

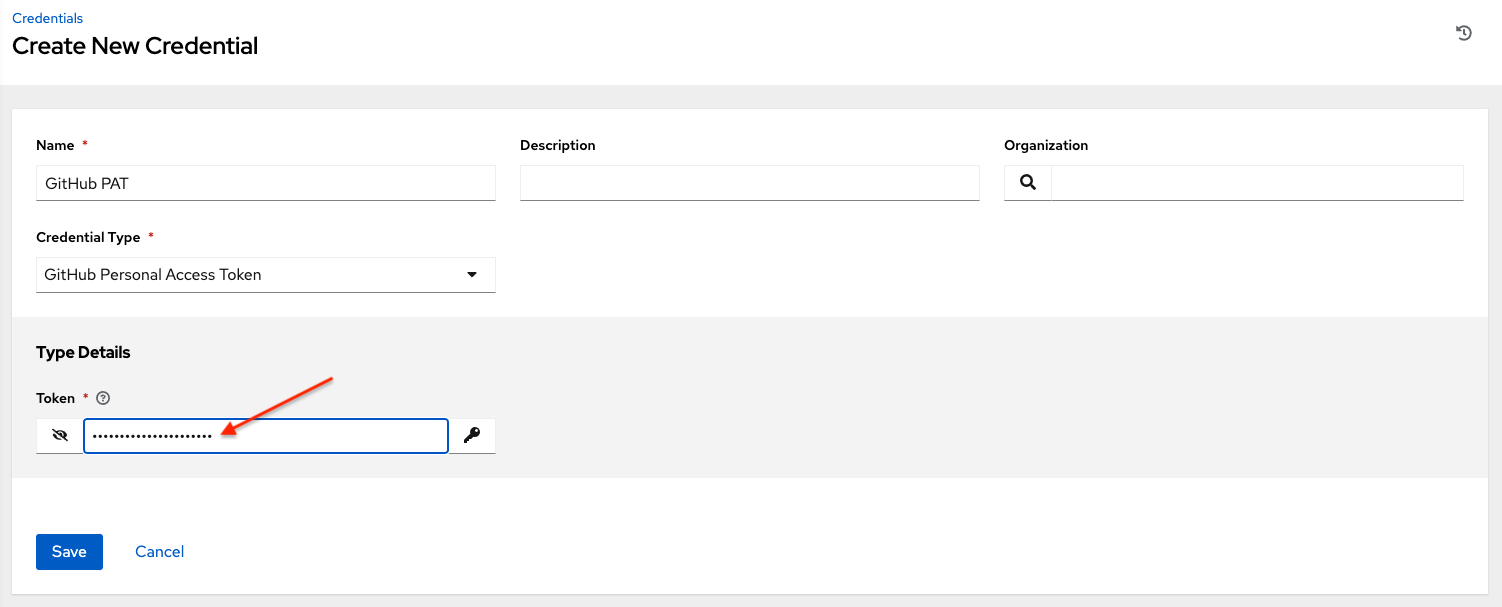

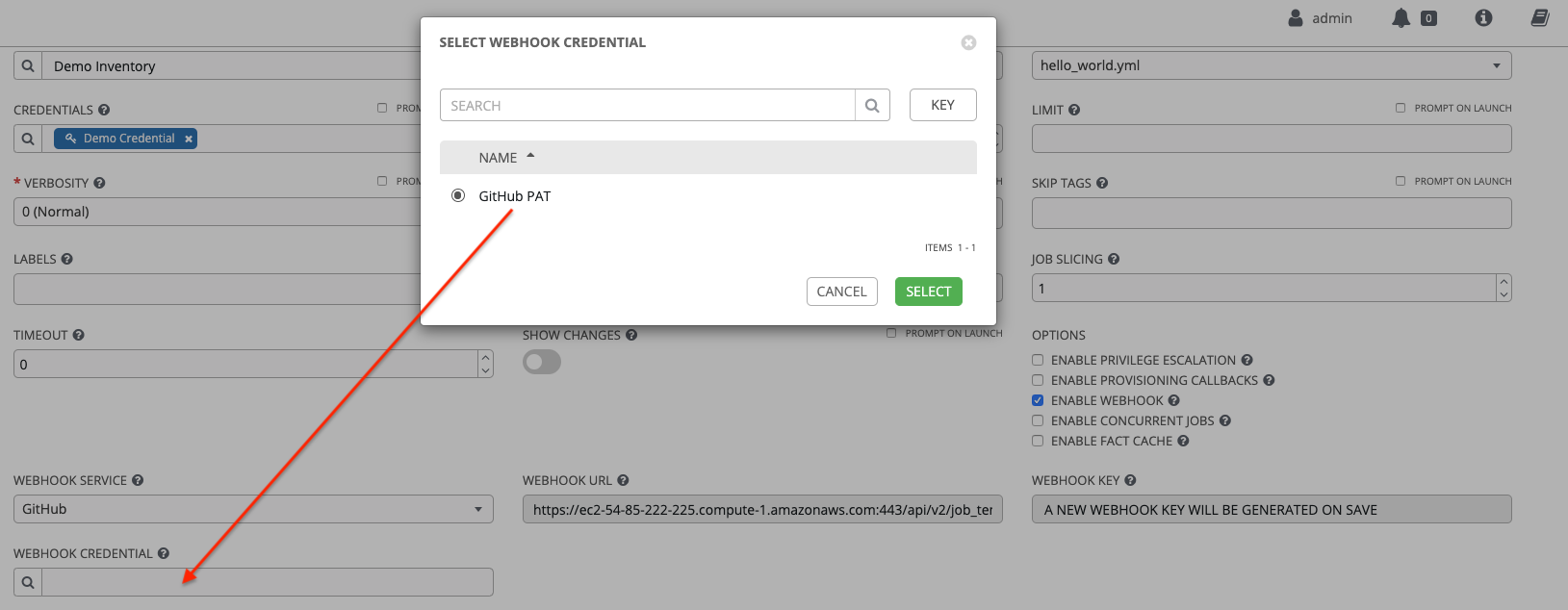

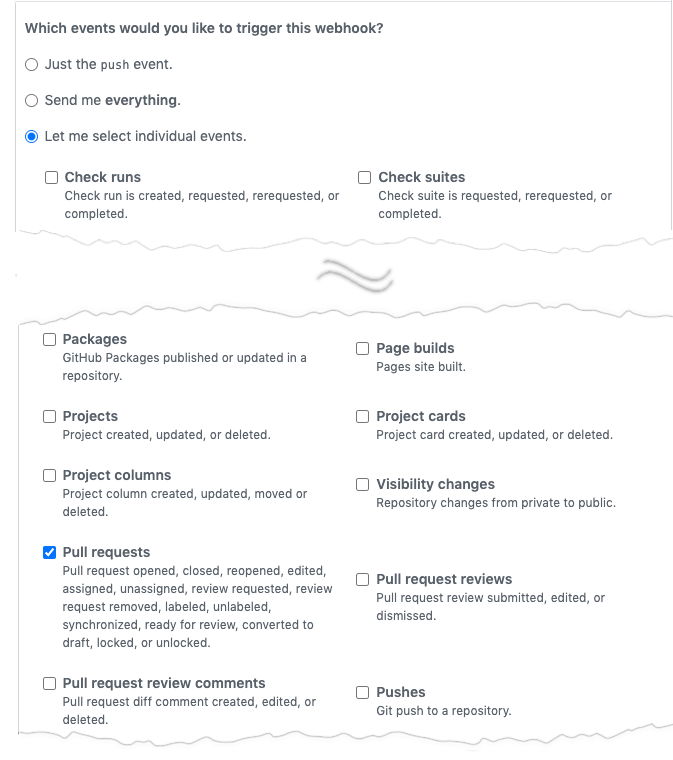

10.4.7. GitHub Personal Access Token credential type

Select this credential to enable you to access GitHub by using a Personal Access Token (PAT), which you can get through GitHub.

For more information, see Working with Webhooks.

GitHub PAT credentials require a value in the Token field, which is provided in your GitHub profile settings.

Use this credential to establish an API connection to GitHub for use in webhook listener jobs, to post status updates.
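As an illustration of what posting a status update with a PAT can look like, the following sketch builds (but does not send) a request to the GitHub REST API commit status endpoint. It is not the code that automation controller runs. The function name `build_status_request` and its arguments are hypothetical.

```python
import json
import urllib.request

# States accepted by the GitHub commit status endpoint.
ALLOWED_STATES = {"error", "failure", "pending", "success"}


def build_status_request(token, owner, repo, sha, state, description=""):
    """Build a POST request that sets a commit status by using a PAT."""
    if state not in ALLOWED_STATES:
        raise ValueError(f"unsupported state: {state!r}")
    url = f"https://api.github.com/repos/{owner}/{repo}/statuses/{sha}"
    body = json.dumps({"state": state, "description": description})
    return urllib.request.Request(
        url,
        data=body.encode("utf-8"),
        method="POST",
        headers={
            # The PAT from the Token field authenticates the call.
            "Authorization": f"Bearer {token}",
            "Accept": "application/vnd.github+json",
        },
    )
```

Passing the returned object to `urllib.request.urlopen` would send the request. The sketch stops before that step so that it can be inspected safely.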

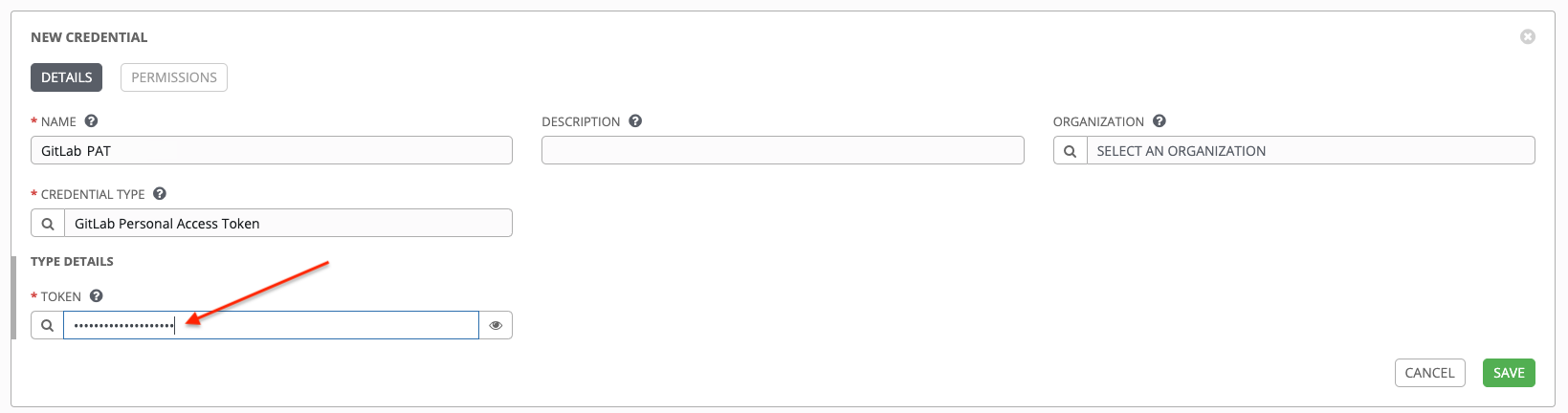

10.4.8. GitLab Personal Access Token credential type

Select this credential to enable you to access GitLab by using a Personal Access Token (PAT), which you can get through GitLab.

For more information, see Working with Webhooks.

GitLab PAT credentials require a value in the Token field, which is provided in your GitLab profile settings.

Use this credential to establish an API connection to GitLab for use in webhook listener jobs, to post status updates.

10.4.9. Google Compute Engine credential type

Select this credential to enable synchronization of a cloud inventory with Google Compute Engine (GCE).

Automation controller uses the following environment variables for GCE credentials:

    GCE_EMAIL
    GCE_PROJECT
    GCE_CREDENTIALS_FILE_PATH

The user interface prompts for the following GCE credential fields:

- Service Account Email Address: The email address assigned to the Google Compute Engine service account.

- Optional: Project: Provide the GCE assigned identification or the unique project ID that you provided at project creation time.

- Optional: Service Account JSON File: Upload a GCE service account file. Click Browse to browse for the file that has the special account information that can be used by services and applications running on your GCE instance to interact with other Google Cloud Platform APIs. This grants permissions to the service account and virtual machine instances.

- RSA Private Key: The PEM file associated with the service account email.
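The fields above map closely onto the contents of a Google service account key file. The following sketch reads such a file and returns the three matching values. It assumes the standard key names `client_email`, `project_id`, and `private_key` found in Google service account JSON key files. The function name `load_gce_fields` is hypothetical, and this is not how automation controller itself parses the upload.

```python
import json


def load_gce_fields(path):
    """Extract the credential fields from a service account JSON key file."""
    with open(path, encoding="utf-8") as handle:
        key = json.load(handle)
    return {
        # Service Account Email Address
        "email": key["client_email"],
        # Project (optional in the form, so tolerate a missing key)
        "project": key.get("project_id", ""),
        # RSA Private Key in PEM format
        "private_key": key["private_key"],
    }
```

A missing `client_email` or `private_key` raises `KeyError`, which makes an incomplete key file obvious before it is used.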

10.4.9.1. Access Google Compute Engine credentials in an Ansible Playbook

You can get GCE credential parameters from a job runtime environment:

vars:

gce:

email: '{{ lookup("env", "GCE_EMAIL") }}'

project: '{{ lookup("env", "GCE_PROJECT") }}'

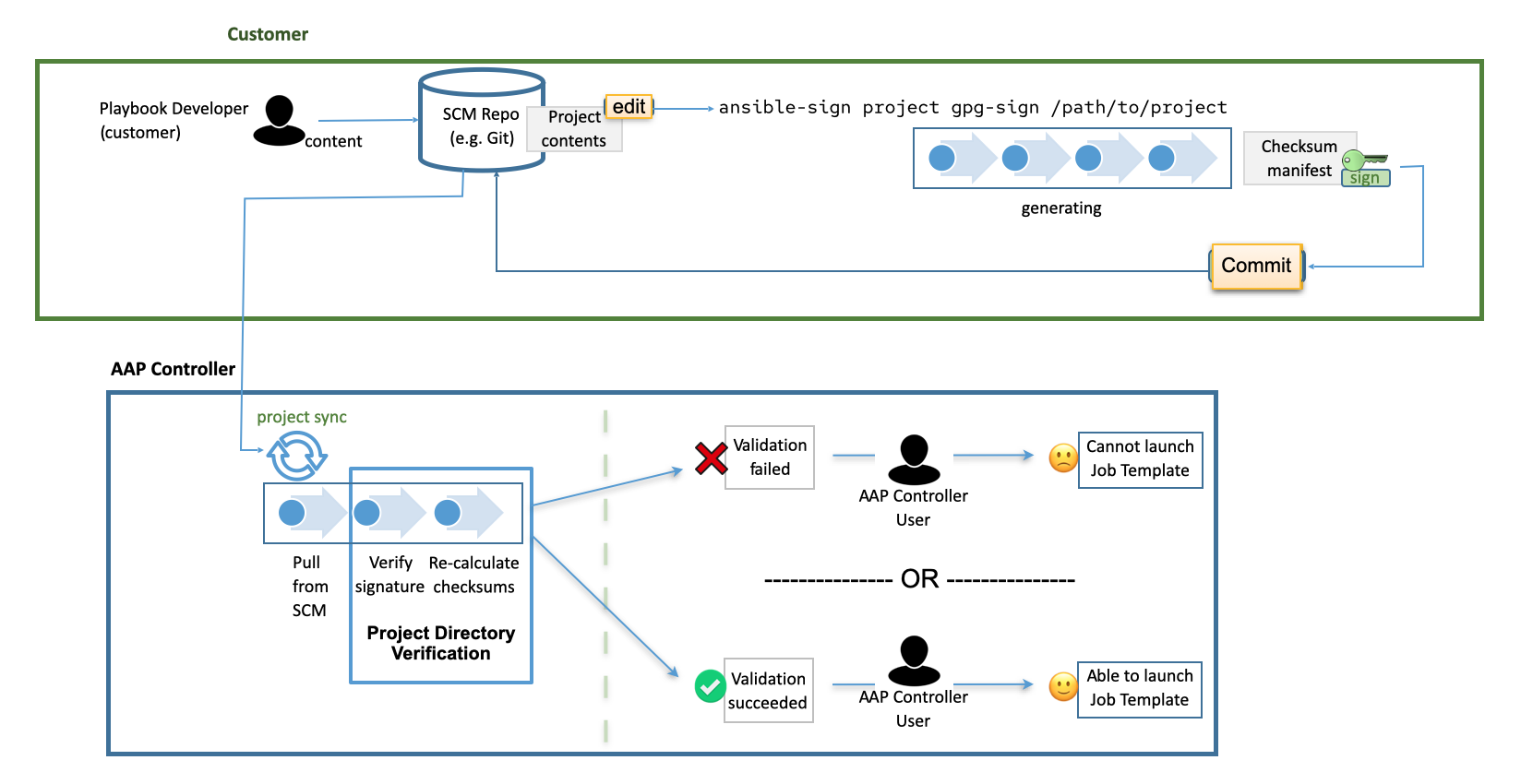

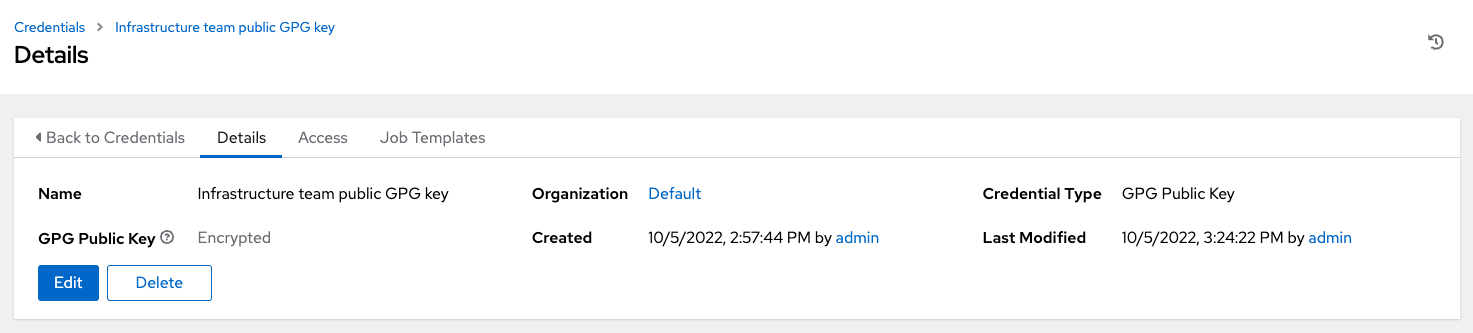

pem_file_path: '{{ lookup("env", "GCE_PEM_FILE_PATH") }}'10.4.10. GPG Public Key credential type

Select this credential type to enable automation controller to verify the integrity of the project when synchronizing from source control.

For more information about how to generate a valid keypair, how to use the CLI tool to sign content, and how to add the public key to the controller, see Project Signing and Verification.

10.4.11. HashiCorp Vault Secret Lookup credential type

This is considered part of the secret management capability.

For more information, see HashiCorp Vault Secret Lookup.

10.4.12. HashiCorp Vault Signed SSH credential type

This is considered part of the secret management capability.

For more information, see HashiCorp Vault Signed SSH.

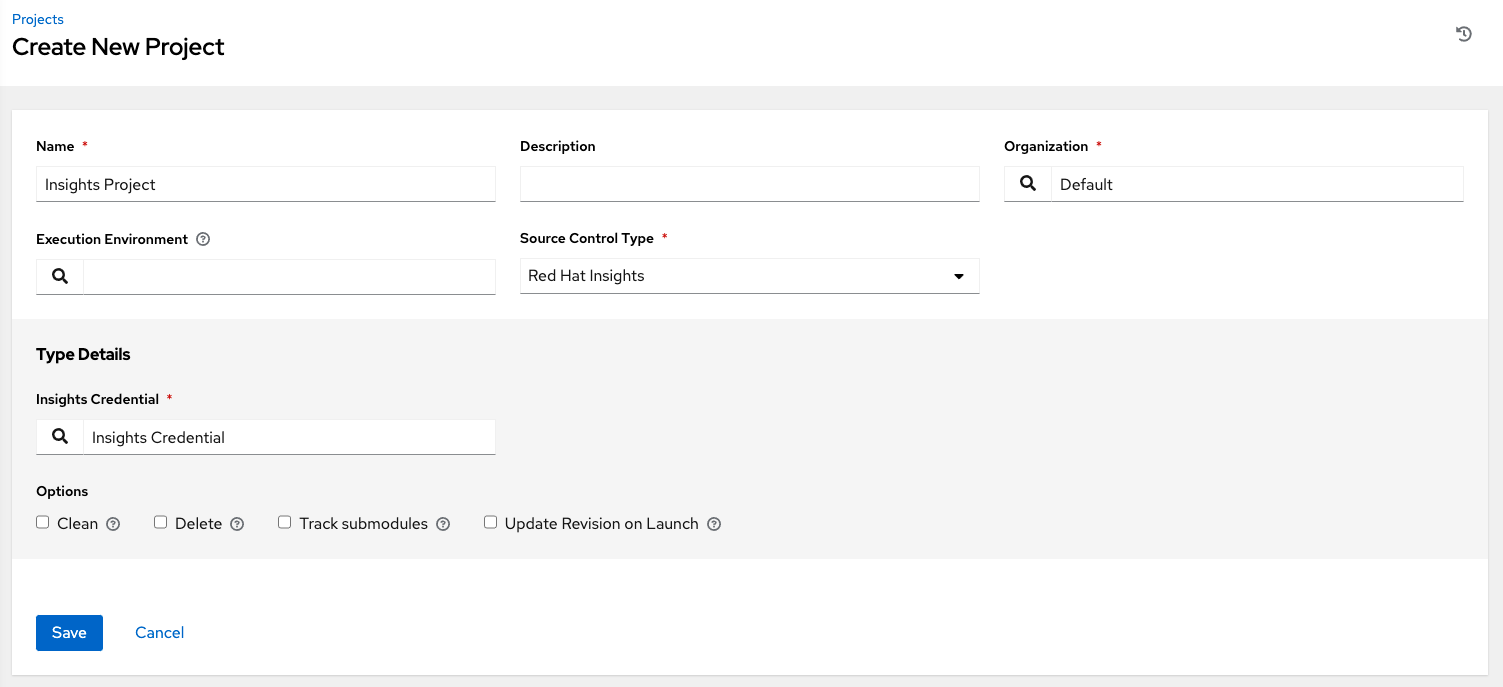

10.4.13. Insights credential type

Select this credential type to enable synchronization of cloud inventory with Red Hat Insights.

Insights credentials are the Insights Username and Password, which are the user’s Red Hat Customer Portal Account username and password.

The extra_vars and env injectors for Insights are as follows:

    ManagedCredentialType(
        namespace='insights',
        ....
        ....
        ....
        injectors={
            'extra_vars': {
                "scm_username": "{{username}}",
                "scm_password": "{{password}}",
            },
            'env': {
                'INSIGHTS_USER': '{{username}}',
                'INSIGHTS_PASSWORD': '{{password}}',
            },

10.4.14. Machine credential type

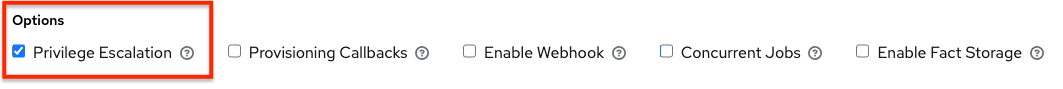

Machine credentials enable automation controller to call Ansible on hosts under your management. You can specify the SSH username, optionally give a password, an SSH key, a key password, or have automation controller prompt the user for their password at deployment time. They define SSH and user-level privilege escalation access for playbooks, and are used when submitting jobs to run playbooks on a remote host.

The following network connections use Machine as the credential type: httpapi, netconf, and network_cli.

Machine and SSH credentials do not use environment variables. They pass the username through the ansible -u flag, and interactively write the SSH password when the underlying SSH client prompts for it.

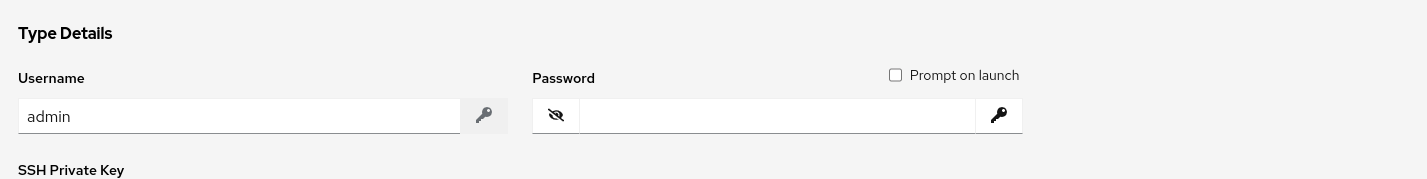

Machine credentials require the following inputs:

- Username: The username to use for SSH authentication.

- Password: The password to use for SSH authentication. This password is stored encrypted in the database, if entered. Alternatively, you can configure automation controller to ask the user for the password at launch time by selecting Prompt on launch. In these cases, a dialog opens when the job is launched, prompting the user to enter the password and password confirmation.

- SSH Private Key: Copy or drag-and-drop the SSH private key for the machine credential.

- Private Key Passphrase: If the SSH Private Key used is protected by a password, you can configure a Key Passphrase for the private key. This password is stored encrypted in the database, if entered. You can also configure automation controller to ask the user for the key passphrase at launch time by selecting Prompt on launch. In these cases, a dialog opens when the job is launched, prompting the user to enter the key passphrase and key passphrase confirmation.

- Privilege Escalation Method: Specifies the type of escalation privilege to assign to specific users. This is the same as specifying the --become-method=BECOME_METHOD parameter, where BECOME_METHOD is any of the existing methods, or a custom method you have written. Begin entering the name of the method, and the appropriate name auto-populates.

  - empty selection: If a task or play has become set to yes and is used with an empty selection, then it will default to sudo.

  - sudo: Performs single commands with superuser (root user) privileges.

  - su: Switches to the superuser (root user) account (or to other user accounts).

  - pbrun: Requests that an application or command be run in a controlled account and provides for advanced root privilege delegation and keylogging.

  - pfexec: Executes commands with predefined process attributes, such as specific user or group IDs.

  - dzdo: An enhanced version of sudo that uses RBAC information in Centrify’s Active Directory service. For more information, see Centrify’s site on DZDO.

  - pmrun: Requests that an application is run in a controlled account. See Privilege Manager for Unix 6.0.

  - runas: Enables you to run as the current user.

  - enable: Switches to elevated permissions on a network device.

  - doas: Enables your remote/login user to run commands as another user through the doas ("Do as user") utility.

  - ksu: Enables your remote/login user to run commands as another user through Kerberos access.

  - machinectl: Enables you to manage containers through the systemd machine manager.

  - sesu: Enables your remote/login user to run commands as another user through the CA Privileged Access Manager.

Custom become plugins are available from Ansible 2.8+. For more information, see Understanding Privilege Escalation and the list of Become plugins.

- Privilege Escalation Username: You see this field only if you selected an option for privilege escalation. Enter the username to use with escalation privileges on the remote system.

- Privilege Escalation Password: You see this field only if you selected an option for privilege escalation. Enter the password to use to authenticate the user through the selected privilege escalation type on the remote system. This password is stored encrypted in the database. You can also configure automation controller to ask the user for the password at launch time by selecting Prompt on launch. In these cases, a dialog opens when the job is launched, prompting the user to enter the password and password confirmation.

You must use the sudo password in combination with SSH passwords or SSH Private Keys, because automation controller must first establish an authenticated SSH connection with the host before invoking sudo to change to the sudo user.

Credentials that are used in scheduled jobs must not be configured as Prompt on launch.
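To make the mapping between the Machine credential inputs and the Ansible command line more concrete, the following sketch assembles the relevant flags: -u for the username, --become-method for the Privilege Escalation Method, and --become-user for the Privilege Escalation Username. It is an illustration under those assumptions, not the code automation controller uses. The function name `escalation_args` is hypothetical.

```python
def escalation_args(username, become_method="", become_user=""):
    """Return ansible-playbook flags for a Machine credential's inputs."""
    # The SSH username is passed with the -u flag.
    args = ["-u", username]
    if become_method:
        # Same effect as --become-method=BECOME_METHOD on the command line.
        args += ["--become-method", become_method]
    # With an empty selection no flag is added, so a play that sets
    # become: yes falls back to sudo.
    if become_user:
        args += ["--become-user", become_user]
    return args
```

For example, `escalation_args("deploy", "su", "root")` yields `["-u", "deploy", "--become-method", "su", "--become-user", "root"]`. Passwords are deliberately left out because they are not passed as flags.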

10.4.14.1. Access machine credentials in an Ansible Playbook

You can get username and password from Ansible facts:

    vars:
      machine:
        username: '{{ ansible_user }}'
        password: '{{ ansible_password }}'

10.4.15. Microsoft Azure Key Vault credential type

This is considered part of the secret management capability.

For more information, see Microsoft Azure Key Vault.

10.4.16. Microsoft Azure Resource Manager credential type

Select this credential type to enable synchronization of cloud inventory with Microsoft Azure Resource Manager.

Microsoft Azure Resource Manager credentials require the following inputs:

- Subscription ID: The Subscription UUID for the Microsoft Azure account.

- Username: The username to use to connect to the Microsoft Azure account.

- Password: The password to use to connect to the Microsoft Azure account.

- Client ID: The Client ID for the Microsoft Azure account.

- Client Secret: The Client Secret for the Microsoft Azure account.

- Tenant ID: The Tenant ID for the Microsoft Azure account.

- Azure Cloud Environment: The variable associated with Azure cloud or Azure stack environments.

These fields correspond to the variables in the API.

To pass service principal credentials, define the following variables:

    AZURE_CLIENT_ID
    AZURE_SECRET
    AZURE_SUBSCRIPTION_ID
    AZURE_TENANT
    AZURE_CLOUD_ENVIRONMENT

To pass an Active Directory username and password pair, define the following variables:

    AZURE_AD_USER
    AZURE_PASSWORD
    AZURE_SUBSCRIPTION_ID

You can also pass credentials as parameters to a task within a playbook. The order of precedence is parameters, then environment variables, and finally a file found in your home directory.

To pass credentials as parameters to a task, use the following parameters for service principal credentials:

    client_id
    secret
    subscription_id
    tenant
    azure_cloud_environment

Alternatively, pass the following parameters for Active Directory username/password:

    ad_user
    password
    subscription_id
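The order of precedence described above (task parameters, then environment variables, then a file in your home directory) can be sketched as a small lookup. The parameter and variable names are the ones listed in this section. The function name `resolve_azure_setting` is hypothetical, and the file contents are passed in as an already parsed dictionary because the file format is not described here.

```python
# Task parameter name -> environment variable name, as listed in this section.
ENV_NAMES = {
    "client_id": "AZURE_CLIENT_ID",
    "secret": "AZURE_SECRET",
    "subscription_id": "AZURE_SUBSCRIPTION_ID",
    "tenant": "AZURE_TENANT",
    "azure_cloud_environment": "AZURE_CLOUD_ENVIRONMENT",
    "ad_user": "AZURE_AD_USER",
    "password": "AZURE_PASSWORD",
}


def resolve_azure_setting(name, task_params, environ, file_values):
    """Return the first value found, following the documented precedence."""
    # 1. A parameter set on the task wins.
    if task_params.get(name):
        return task_params[name]
    # 2. Otherwise use the environment variable, if it is set.
    env_value = environ.get(ENV_NAMES[name])
    if env_value:
        return env_value
    # 3. Finally fall back to the values read from the home directory file.
    return file_values.get(name)
```

With this ordering, a value set on a task overrides the credential that automation controller injects into the job environment, so a parameter left in a playbook can take priority over the attached credential.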

10.4.16.1. Access Microsoft Azure Resource Manager credentials in an Ansible Playbook

You can get Microsoft Azure credential parameters from a job runtime environment:

    vars:
      azure:
        client_id: '{{ lookup("env", "AZURE_CLIENT_ID") }}'
        secret: '{{ lookup("env", "AZURE_SECRET") }}'
        tenant: '{{ lookup("env", "AZURE_TENANT") }}'
        subscription_id: '{{ lookup("env", "AZURE_SUBSCRIPTION_ID") }}'

10.4.17. Network credential type

Select the Network credential type if you are using a local connection with provider to use Ansible networking modules to connect to and manage networking devices.

When connecting to network devices, the credential type must match the connection type:

- For local connections using provider, the credential type should be Network.

- For all other network connections (httpapi, netconf, and network_cli), the credential type should be Machine.

For more information about connection types available for network devices, see Multiple Communication Protocols.
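The matching rule between connection type and credential type can be expressed as a short lookup. This is a sketch for illustration only. The function name `credential_type_for` is hypothetical, and it covers just the connection types named in this section.

```python
def credential_type_for(connection):
    """Return the credential type that matches a network connection type."""
    if connection == "local":
        # local connections that use provider need a Network credential.
        return "Network"
    if connection in {"httpapi", "netconf", "network_cli"}:
        # All other network connections use a Machine credential.
        return "Machine"
    raise ValueError(f"not a network connection type covered here: {connection!r}")
```

For example, `credential_type_for("network_cli")` returns `"Machine"`. An unrecognized value raises `ValueError` instead of guessing a credential type.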

Automation controller uses the following environment variables for Network credentials:

    ANSIBLE_NET_USERNAME
    ANSIBLE_NET_PASSWORD

Provide the following information for network credentials:

- Username: The username to use in conjunction with the network device.

- Password: The password to use in conjunction with the network device.

- SSH Private Key: Copy or drag-and-drop the actual SSH Private Key to be used to authenticate the user to the network through SSH.

- Private Key Passphrase: The passphrase for the private key to authenticate the user to the network through SSH.

- Authorize: Select this from the Options field to control whether or not to enter privileged mode.

- If Authorize is checked, enter a password in the Authorize Password field to access privileged mode.

For more information, see Porting Ansible Network Playbooks with New Connection Plugins.

10.4.18. Access network credentials in an Ansible Playbook

You can get the username and password parameters from a job runtime environment:

    vars:
      network:
        username: '{{ lookup("env", "ANSIBLE_NET_USERNAME") }}'
        password: '{{ lookup("env", "ANSIBLE_NET_PASSWORD") }}'

10.4.19. OpenShift or Kubernetes API Bearer Token credential type

Select this credential type to create instance groups that point to a Kubernetes or OpenShift container.

For more information, see Container and Instance Groups in the Automation controller Administration Guide.

Provide the following information for container credentials:

- OpenShift or Kubernetes API Endpoint (required): The endpoint used to connect to an OpenShift or Kubernetes container.

- API Authentication Bearer Token (required): The token used to authenticate the connection.

- Optional: Verify SSL: You can check this option to verify that the server’s SSL/TLS certificate is valid and trusted. Environments that use an internal or private Certificate Authority (CA) must leave this option unchecked to disable verification.

- Certificate Authority Data: Include the BEGIN CERTIFICATE and END CERTIFICATE lines when pasting the certificate, if provided.
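The following sketch shows how a client could combine these inputs: the bearer token becomes an Authorization header, and the Verify SSL and Certificate Authority Data settings shape the TLS context. It uses only the Python standard library and is not the code automation controller runs. The function name `bearer_auth` is hypothetical.

```python
import ssl


def bearer_auth(token, verify_ssl=True, ca_data=None):
    """Return (headers, ssl_context) for calls to the cluster API endpoint."""
    headers = {"Authorization": f"Bearer {token}"}
    context = ssl.create_default_context()
    if not verify_ssl:
        # Verify SSL unchecked: skip hostname and certificate checks.
        # check_hostname must be disabled before verify_mode is relaxed.
        context.check_hostname = False
        context.verify_mode = ssl.CERT_NONE
    elif ca_data:
        # The pasted certificate must keep its BEGIN/END CERTIFICATE lines.
        if "BEGIN CERTIFICATE" not in ca_data or "END CERTIFICATE" not in ca_data:
            raise ValueError("CA data must include the BEGIN/END CERTIFICATE lines")
        context.load_verify_locations(cadata=ca_data)
    return headers, context
```

The returned context can be passed to `urllib.request.urlopen(..., context=context)` together with the headers. Rejecting certificate text without its marker lines reports a stripped header or footer early, with a clear message.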

A container group is a type of instance group that has an associated credential that enables connection to an OpenShift cluster. To set up a container group, you must have the following items:

- A namespace you can start into. Although every cluster has a default namespace, you can use a specific namespace.

- A service account that has the roles that enable it to start and manage pods in this namespace.

If you use execution environments in a private registry, and have a container registry credential associated with them in automation controller, the service account also requires the roles to get, create, and delete secrets in the namespace.

If you do not want to give these roles to the service account, you can pre-create the ImagePullSecrets and specify them on the pod spec for the container group. In this case, the execution environment must not have a Container Registry credential associated, or automation controller attempts to create the secret for you in the namespace.

- A token associated with that service account (OpenShift or Kubernetes Bearer Token)

- A CA certificate associated with the cluster

10.4.19.1. Creating a service account in an OpenShift cluster

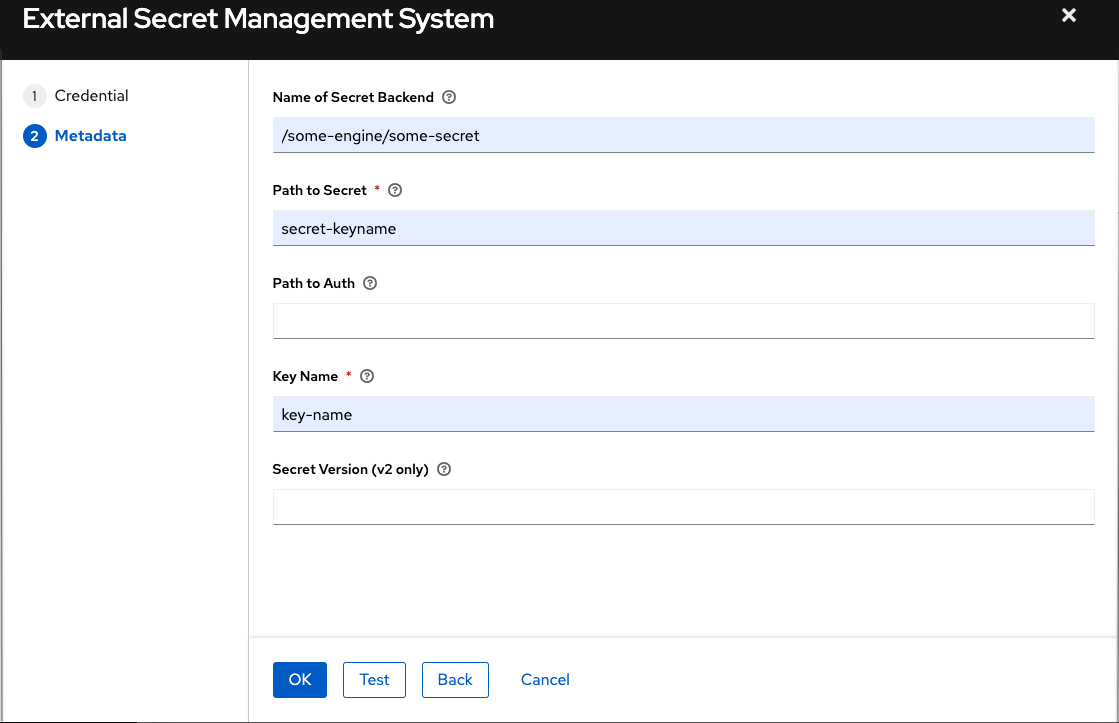

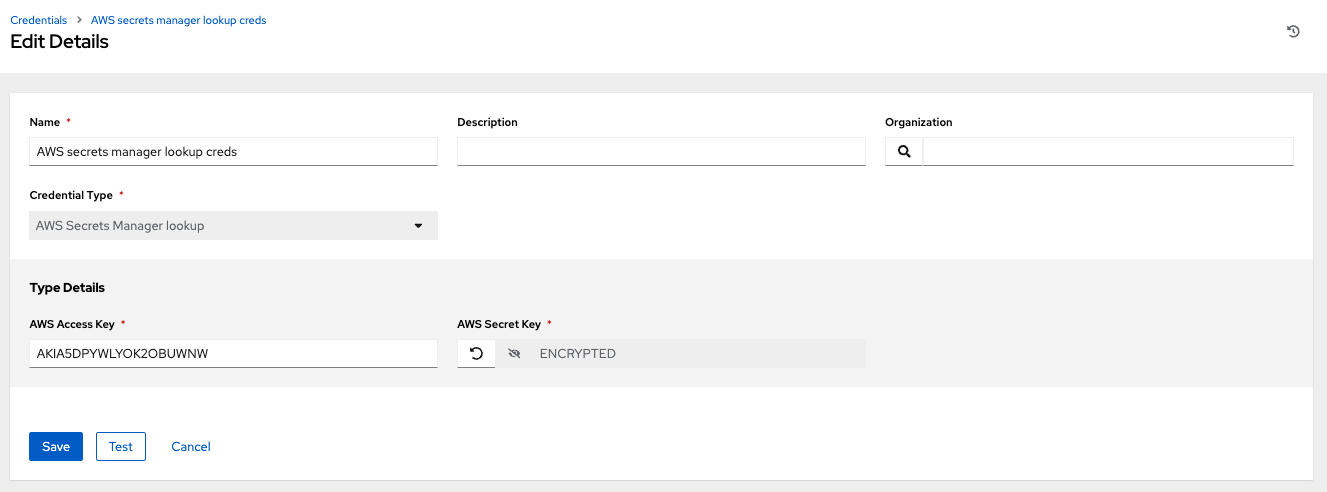

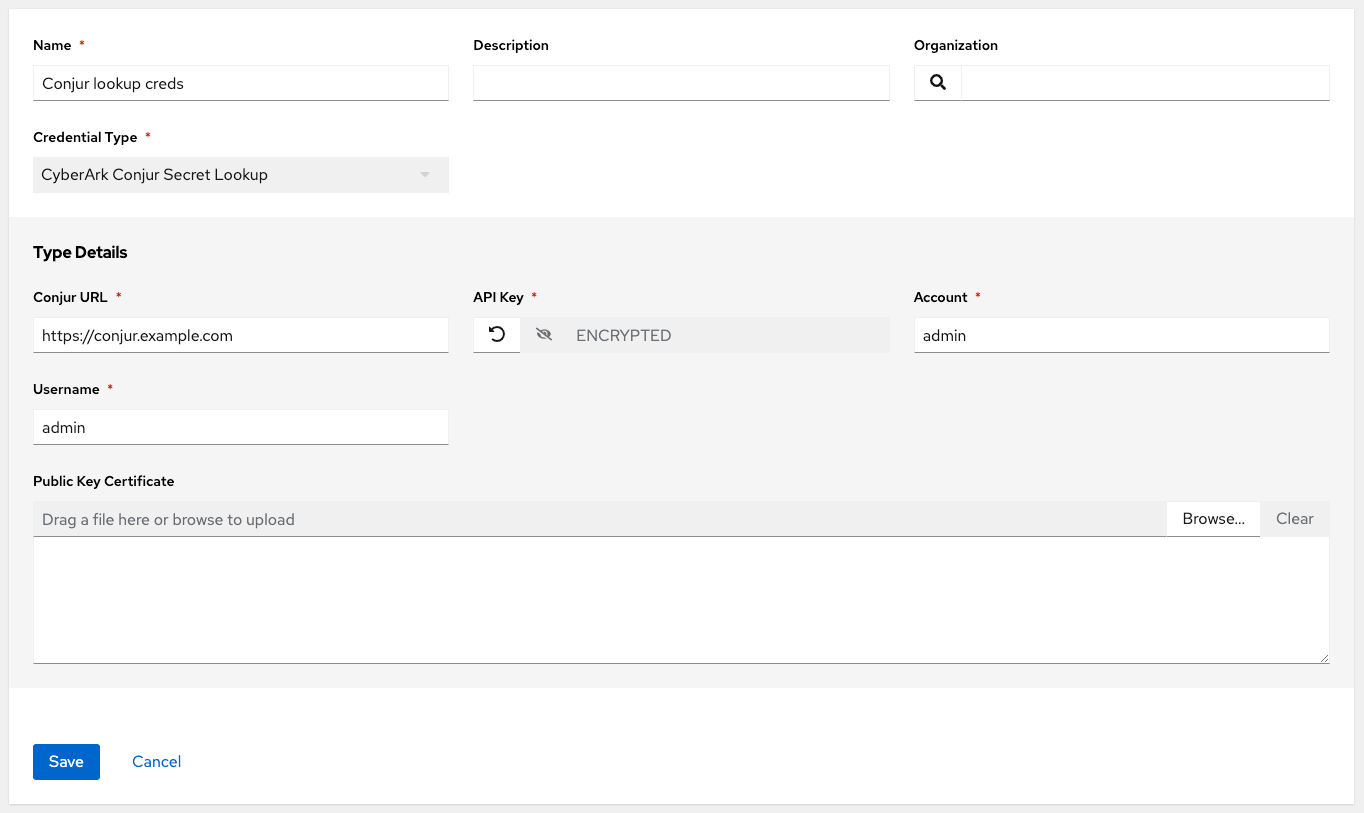

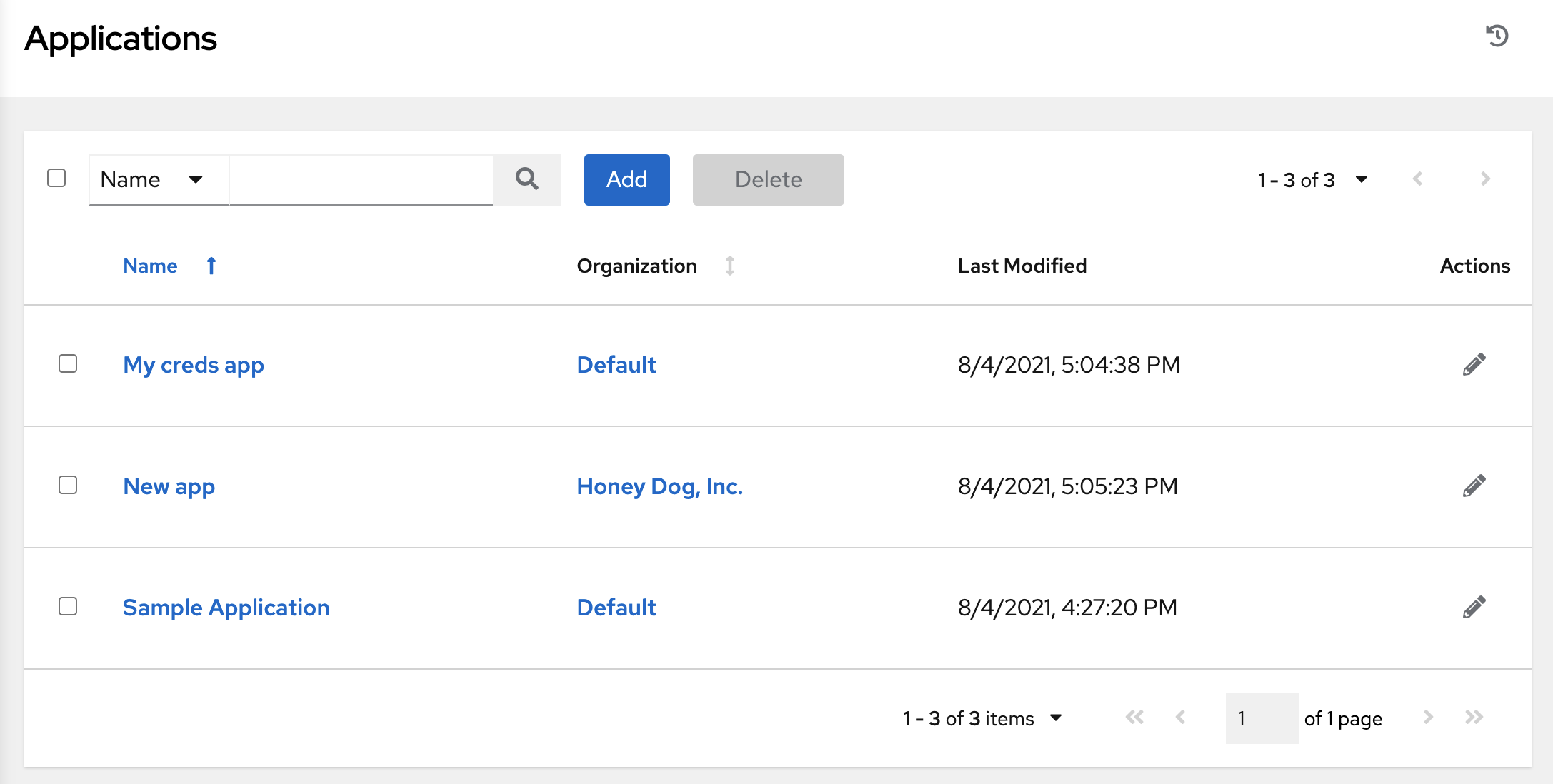

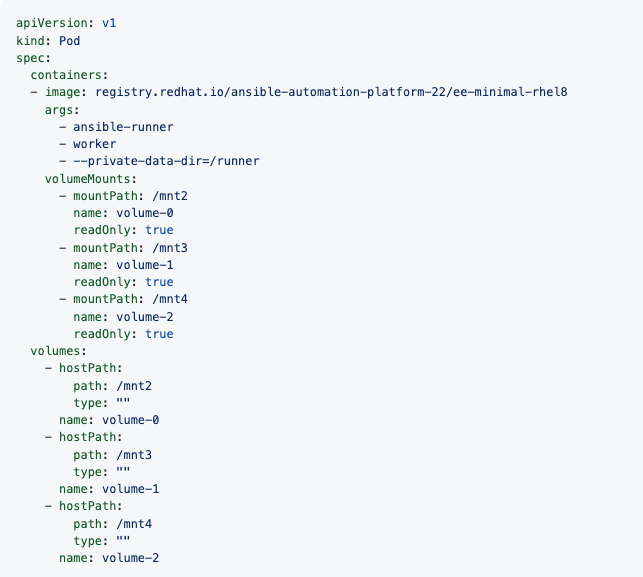

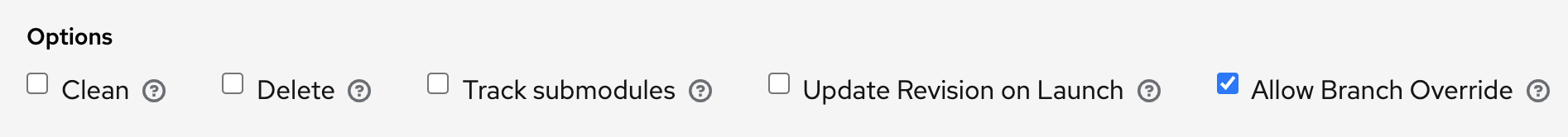

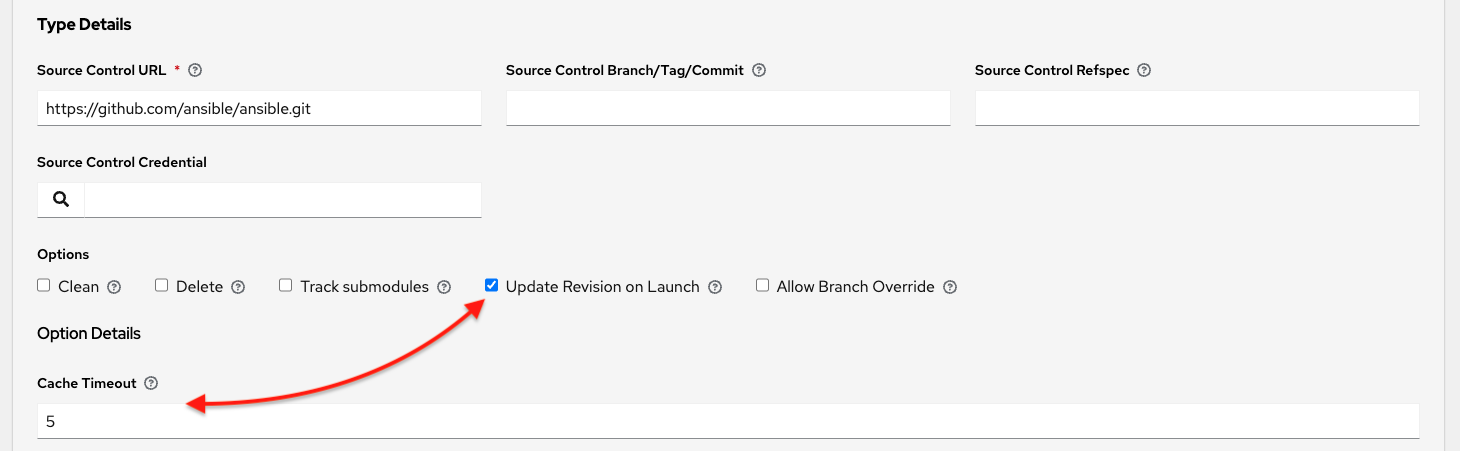

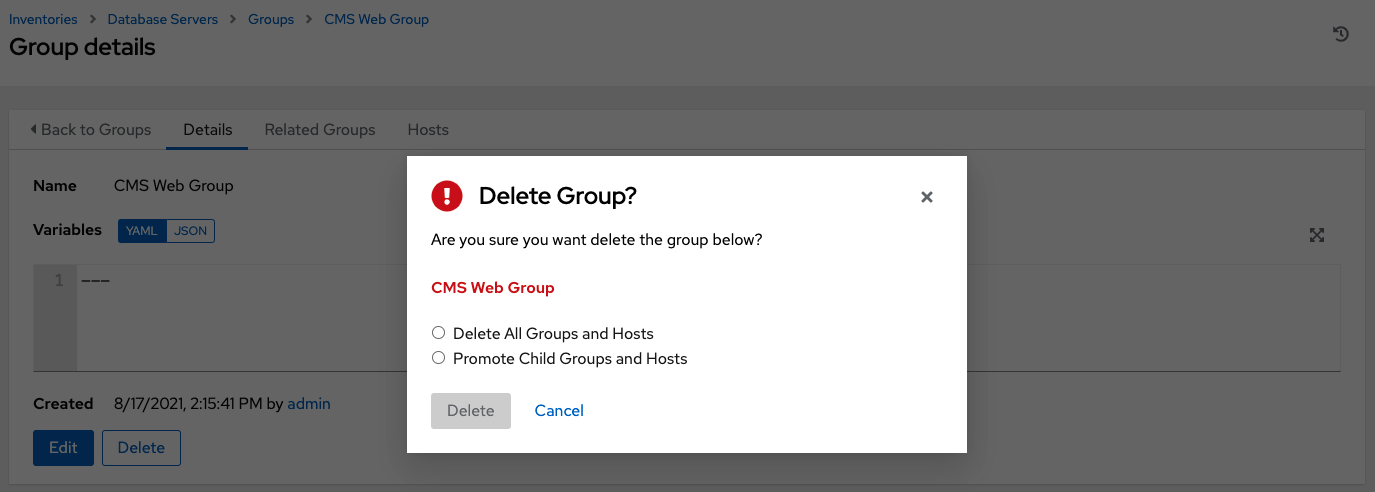

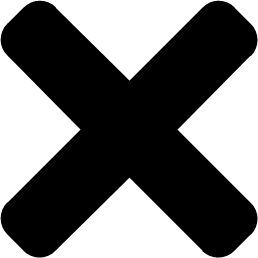

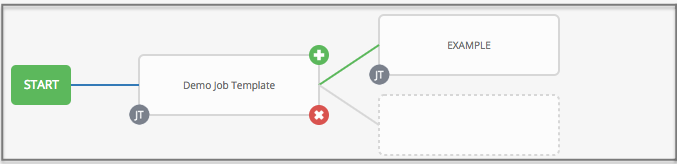

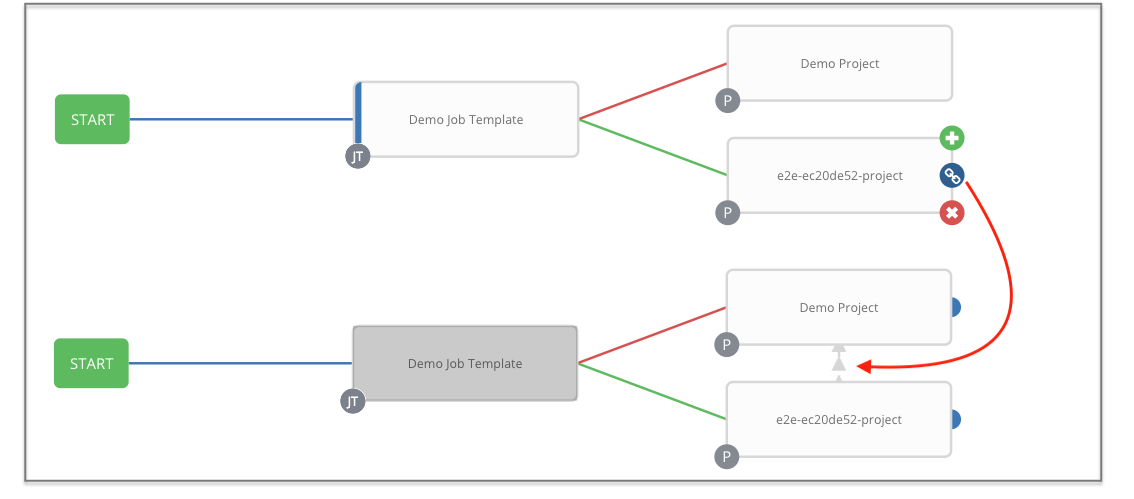

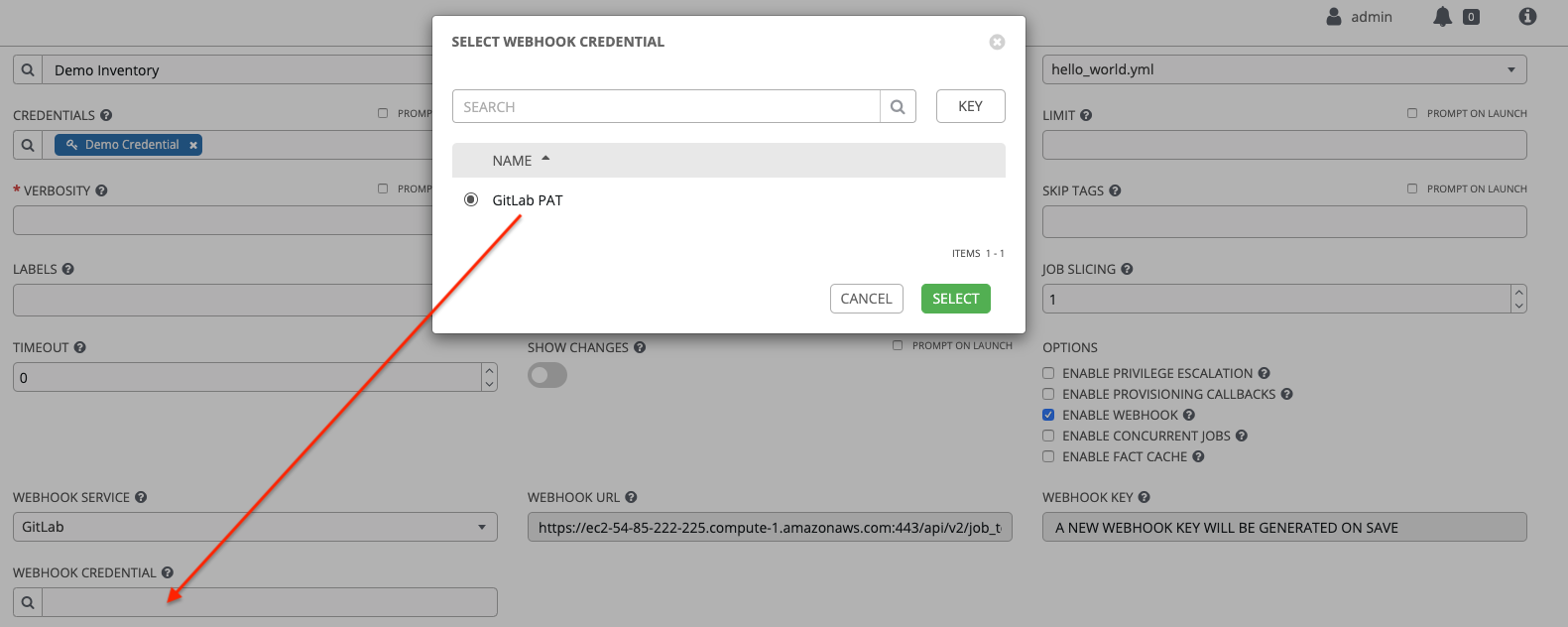

Create a service account in an OpenShift or Kubernetes cluster to run jobs in a container group through automation controller. After you create the service account, its credentials are provided to automation controller in the form of an OpenShift or Kubernetes API Bearer Token credential.